Reasoning on Multi-Hop Questions with Hotpotqa

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The 2015 Miss Usa Pageant Brings

THE 2015 MISS USA® PAGEANT BRINGS THE HEAT BACK TO BATON ROUGE SUNDAY, JULY 12 ON NBC (8-11 P.M. ET) LIVE FROM THE BATON ROUGE RIVER CENTER IN LOUISIANA New York, NY – April 14, 2015 – NBC, Donald J. Trump and Paula M. Shugart, president of the Miss Universe Organization, announced today that the 2015 MISS USA® Pageant will take place in Baton Rouge, La. After the success of last year’s telecast, the MISS USA pageant returns to air LIVE from The Baton Rouge River Center Sunday, July 12 (8-11 p.m. ET) on NBC. “The 2014 MISS USA® Pageant delivered an unprecedented amount of national and international exposure for Baton Rouge, taking its reputation for tourism and business opportunities beyond the local level,” says Trump. “This year’s pageant will be no different. Continuing our partnership guarantees another sold-out show that will provide Louisiana’s capital with another three hours of primetime television coverage,” adds Trump. “There is always a genuine feeling of true hospitality when visiting Baton Rouge,” says Shugart. “We have created an incredible partnership with the city, and are honored to have the responsibility of sharing what their community has to offer through our worldwide telecasts,” adds Shugart. “Baton Rouge was recently named one of the Top 10 Happiest Cities in America by the National Bureau of Economic Research, which is no surprise to us. We truly cannot wait to go back.” The Miss Universe Organization will return to the “Capital City” to produce the 2015 MISS USA® Pageant after the tremendous results from last summer. -

P40 Layout 1

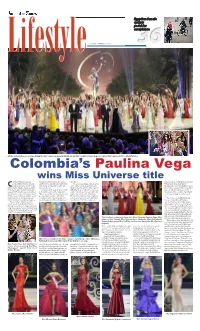

Egyptian female cyclists pedal for acceptance TUESDAY, JANUARY 27, 2015 36 All the contestants pose on stage during the Miss Universe pageant in Miami. (Inset) Miss Colombia Paulina Vega is crowned Miss Universe 2014.— AP/AFP photos Colombia’s Paulina Vega wins Miss Universe title olombia’s Paulina Vega was Venezuelan Gabriela Isler. She edged the map. Cuban soap opera star William Levy and crowned Miss Universe Sunday, out first runner-up, Nia Sanchez from the “We are persevering people, despite Philippine boxing great Manny Cbeating out contenders from the United States, hugging her as the win all the obstacles, we keep fighting for Pacquiao. The event is actually the 2014 United States, Ukraine, Jamaica and The was announced. what we want to achieve. After years of Miss Universe pageant. The competition Netherlands at the world’s top beauty London-born Vega dedicated her title difficulty, we are leading in several areas was scheduled to take place between pageant in Florida. The 22-year-old mod- to Colombia and to all her supporters. on the world stage,” she said earlier dur- the Golden Globes and the Super Bowl el and business student triumphed over “We are proud, this is a triumph, not only ing the question round. Colombian to try to get a bigger television audi- 87 other women from around the world, personal, but for all those 47 million President Juan Manuel Santos applaud- ence. and is only the second beauty queen Colombians who were dreaming with ed her, praising the brown-haired beau- The contest, owned by billionaire from Colombia to take home the prize. -

Rihanna Se Une a Mccartney

EXCELSIOR LUNES 26 DE ENERO DE 2015 [email protected] @Funcion_Exc Foto: AP BIRDMAN SUMA TRIUNFOS Durante la entrega de los premios del Sindicato de Actores de Hollywood, el filme Birdman o La inesperada virtud de la igno- rancia, dirigido por el mexicano Alejandro G. Iñárritu, se llevó la presea por Mejor Elenco de Película, rubro en el que competía con cintas como Boyhood: Momentos de una vida, del texano LA MÁS BELLA DEL MUNDO Richard Linklater, El gran hotel Budapest, del también texano Wes Anderson, El código enigma, del noruego Morten Tyldum, y La teoría del todo, del realizador británico James Marsh. “Estoy orgulloso de haber sido parte de este gran equipo. La actuación es como el máximo trabajo en equipo. Este premio es para Alejandro (G. Iñárritu) y para los productores del filme”, dijo el actor Michael Keaton, protagonista de Birdman >10 y 11 Tras superar las pruebas de traje de baño y de noche, Paulina Vega Dieppa, originaria de Barranquilla, fue nombrada Miss Universo, imponiéndose a Estados Unidos, Holanda, Ucrania y Jamaica, las otras finalistas >8 y 9 Foto: Reuters RIHANNA SE UNE A MCCARTNEY Foto: Archivo La cantante Rihanna lanzó ayer, por POR SIEMPRE NEWMAN sorpresa, Four Five Seconds, el primer sencillo de su nuevo álbum, en el que la Un día como hoy, pero de hace 90 años, nació el actor Paul Newman, una de las más grandes estrellas del séptimo arte. acompaña el exbeatle Paul McCartney Su leyenda en la actuación se comenzó a escribir en 1954, y el rapero estadunidense Kanye West. -

Sábado 24 De Enero De 2015 | San Luis Potosí, San Luis Potosí | Año 3 No

Síguenos: www.planoinformativo.com | www.planodeportivo.com | www.quetalvirtual.com Sábado 24 de enero de 2015 | San Luis Potosí, San Luis Potosí | Año 3 No. 752 | Una producción más de Grupo Plano Informativo Locales Xavier Azuara contenderá por el PAN; mientras que por el PRI sería Manuel Lozano. Se define contienda Redacción Plano Informativo por la alcaldía on el registro de Manuel Lozano Y es que este día, dando cumplimien- circunstancia Lozano Nieto quedaría como Nieto ante la comisión de procesos to con lo establecido por el PRI para el re- candidato oficial. Cinternos del PRI, para contender gistro de aspirantes a la candidatura a la Teniendo en cuenta lo sucedido este por la candidatura del tricolor a la alcaldía alcaldía de la capital, se inscribió Manuel viernes, y la candidatura ganada por Xa- de la capital, y la elección de Xavier Azua- Lozano, siendo el único registro. vier Azuara para contender por la alcaldía ra por el PAN, el pasado mes de diciembre, Aunque esta por iniciar todo el proceso representando al PAN, el pasado 14 de di- se define la contienda municipal para el 7 interno y lograr la validación de acuerdo a ciembre, la lucha por la alcaldía capitalina de junio de 2015. lo marcado por la convocatoria, dadas las queda lista. La regidora Margarita puede estar tranquila: Marvelly Emilia Monreal trincante para ella en busca de la candida- Plano Informativo tura por el PRI para diputado local. Costanzo Rangel mencionó que en el a ex presidenta de Nuestro Centro, caso del PRD, está a la espera de lo que LAdriana Marvelly Costanzo Rangel el CEN defina, ya que este fin de semana desmintió que el PRI le haya ofrecido ser tendrán reunión del Consejo para definir candidata para diputada por el VIII Distri- los candidatos, por lo que sólo se hizo un to Local. -

Protect Wildlife from Super-Toxic Rat Poisons

Protect Wildlife From Super-toxic Rat Poisons August 31, 2020 Dear Governor Newsom, We, the 4,812 undersigned, urge you to place an immediate moratorium on the use of second-generation anticoagulant rodenticides in California, except in cases of public health or ecosystem emergencies, to protect the state's wildlife from imminent harm. Right now dozens of species are being hurt by these super-toxic rat poisons, including golden eagles, Humboldt martens, San Joaquin kit foxes and spotted owls. And more than 85 percent of California mountain lions, bobcats and Pacific fishers have been exposed to these rodenticides. Mountain lions in Southern California are in especially grave danger, as their populations are already isolated and close to extinction. Several mountain lions were recently found dead with multiple super-toxic rat poisons in their bloodstreams. Immediate action by the state is critical to prevent these populations' extinctions. In 2014 the state of California did act by pulling this class of rodenticides from consumer shelves. Unfortunately there hasn't been a decrease in the rate of wildlife poisoning from these products because licensed pest-control applicators continue to use them throughout the state. Stronger prohibitions are necessary. There's a wide range of safer, cost-effective alternatives on the shelves today. Strategies that prevent rodent infestations in the first place, by sealing buildings and eliminating food and water sources, are a necessary first step. Then, if need be, lethal strategies can be used that involve snap traps, electric traps and other nontoxic methods. Please — move forward with a statewide moratorium on the use of these super-toxic rat poisons, pending the passage of A.B. -

OFFICE of the ADMINISTRATOR Washington Office Center 409 3Rd Street, S.W

ADMINISTRATOR OFFICE OF THE ADMINISTRATOR Washington Office Center 409 3rd Street, S.W. Suite 7000 Washington, DC 20024-3212 Telephone Number (202)205-6605 Facsimile Number: (202)205-6802 TTY / TDD Number (800)877-8339 Mail Code: 2110 Individual Fax Listing Administrator Administrator Mills, Karen (202)205-6605 (202)481-0789 White House Liaison Burdick, Meaghan (202)205-7085 (202)481-0014 Scheduler Chan, Christopher (202)205-6605 (202)292-3865 Program Analyst Elliston, Joan L (202)205-6600 (202)481-4278 Chief Operating Officer Harrington, Eileen (202)205-6605 (202)481-2825 Staff Assistant Harris, Andrienne (202)205-6605 (202)292-3884 White House Liaison Jones, Daniel S (202)205-6685 Consultant Leffler, Joshua (202)205-6605 (202)481-4655 Counselor to the Administrator Lew, Ginger (202)205-6392 (202)292-3760 Program Assistant McCane, Theresa A (202)205-1396 (202)481-4625 Director Robbins, David B (202)205-6340 (202)481-0730 Special Assistant Smith, Megan K (202)205-6605 (202)481-4765 Consultant Bouchard Hall, Derek A (202)205-6487 (202)481-1589 Policy Analyst Chait, David D (202)401-2973 (202)481-4806 Special Assistant Iyer, Subash S (202)619-1732 (202)292-3798 Confidential Assistant Peyser, Kimberly A (202)205-6914 (202)481-0428 Student Intern Hodges, Brittany S (202)205-6484 Deputy Administrator Special Assistant Lonick, Sandy J (202)205-6605 (202)481-5827 Staff Assistant Nelson, Nicole M (202)205-6605 (202)481-0215 Senior Advisor VanWert, James M (202)205-7024 (202)481-2360 Chief Of Staff Chief of Staff Ma, Ana M (202)205-6605 (202)292-3832 Special Assistant Brown, Michael A (202)205-6484 (202)481-1794 Special Assistant Nemat, Anahita (202)619-0379 (202)292-3859 Program Analyst Wood, Donna (202)619-1608 (202)481-1715 Director of Scheduling Press Secretary Matz, Hayley K (202)205-6948 (202)481-2673 OFFICE OF STRATEGIC ALLIANCE Washington Office Center 409 3rd Street, S.W. -

The Beacon, January 21, 2015 Florida International University

Florida International University FIU Digital Commons The aP nther Press (formerly The Beacon) Special Collections and University Archives 1-21-2015 The Beacon, January 21, 2015 Florida International University Follow this and additional works at: https://digitalcommons.fiu.edu/student_newspaper Recommended Citation Florida International University, "The Beacon, January 21, 2015" (2015). The Panther Press (formerly The Beacon). 477. https://digitalcommons.fiu.edu/student_newspaper/477 This work is brought to you for free and open access by the Special Collections and University Archives at FIU Digital Commons. It has been accepted for inclusion in The aP nther Press (formerly The Beacon) by an authorized administrator of FIU Digital Commons. For more information, please contact [email protected]. A Forum for Free Student Expression at Florida International University One copy per person. Additional copies are 25 cents. Vol. 26 Issue 52 fiusm.com Wednesday, January 21, 2015 ‘Smart’ parking garage to spare students time in cars MARIA C. SERRANO “Beyond just building the Staff Writer garage, we are launching a smart [email protected] garage,” Tom said. “The new garage enhances ease of access The opening of PG6 at and ease of parking throughout the university’s main campus the university, all while reducing brings hundreds of new spaces traffic congestion and the need to for commuters. More than that, hunt for a parking space.” the garage brings new, smart Even though PG6 took technology to parking on campus. nearly a year to construct, it has The PG6 structure enables the lightened the lives of drivers integration of license plate at the Modesto A. -

Revised Agenda for Regular Meeting of the East Baton

REVISED AGENDA FOR REGULAR MEETING OF THE EAST BATON ROUGE PARISH LIBRARY BOARD OF CONTROL MAIN LIBRARY FIRST FLOOR CONFERENCE ROOM 7711 GOODWOOD BOULEVARD BATON ROUGE, LA 70806 JULY 16, 2015 4:00 P.M. I. ROLL CALL II. APPROVAL OF THE MINUTES OF THE REGULAR MEETING OF JUNE 18, 2015 III. REPORTS BY THE DIRECTOR A. FINANCIAL REPORT B. SYSTEM REPORTS IV. OTHER REPORTS A. RIVER CENTER BRANCH LIBRARY B. MAINTENANCE AND ADDITIONAL CAPITAL PROJECTS C. MISCELLANEOUS REPORTS V. NEW BUSINESS A. VOTE TO ADOPT PROPOSED 2016 LIBRARY BUDGET B. VOTE TO AMEND THE LIBRARY RULES OF BEHAVIOR FOR PATRONS TO INCLUDE A LIMITED RESTRICTION ON BEVERAGES AND FOOD IN LIBRARY FACILITIES C. VOTE TO SEND COMMENTS TO CITY-PARISH ADMINISTRATION CONCERNING ONE TAX ABATEMENT PROPOSAL - MR. SPENCER WATTS VI. OLD BUSINESS A. UPDATE ON SELECTING A BROKER TO SEARCH FOR A SITE FOR A SOUTH BRANCH LIBRARY – MR. SPENCER WATTS AND AD HOC COMMITTEE VII. COMMENTS BY THE LIBRARY BOARD OF CONTROL ALL MEETINGS ARE OPEN TO THE PUBLIC IN ACCORDANCE WITH THE BOARD’S PUBLIC COMMENT POLICY, ALL ITEMS ON WHICH ACTION IS TO BE TAKEN ARE OPEN FOR PUBLIC COMMENT, AND COMMENTS AND QUESTIONS MAY BE RECEIVED ON OTHER TOPICS REPORTED AT SUCH TIME AS THE OPPORTUNITY IS ANNOUNCED BY THE PRESIDENT OF THE BOARD OR THE PERSON CONDUCTING THE MEETING. Minutes of the Meeting of the East Baton Rouge Parish Library Board of Control July 16, 2015 The regular meeting of the East Baton Rouge Parish Library Board of Control was held in the first floor Conference Room of the Main Library at Goodwood at 7711 Goodwood Boulevard on Thursday, July 16 2015. -

Kuwait Establishes New Anti-Terror Committee

SUBSCRIPTION TUESDAY, JULY 14, 2015 RAMADAN 27, 1436 AH www.kuwaittimes.net IS threatens entire region: Emsak: 03:14 Iraqi speaker Fajer: 03:24 Shrooq 04:58 Dohr: 11:54 Asr: 15:28 Maghreb: 18:50 3 Eshaa: 20:20 Kuwait establishes new Min 32º Max 47º anti-terror committee High Tide 10:00 & 23:35 Panel will work to drain sources of terror funding Low Tide 03:25 & 17:20 40 PAGES NO: 16581 150 FILS KUWAIT: The Gulf state of Kuwait, hit by the worst sui- Ramadan Kareem cide attack in its history last month, decided yesterday to set up a permanent committee to fight “terrorism” Muslim’s journey and extremism. At its weekly meeting, the cabinet “decided to form a through life permanent committee to coordinate between various bodies to ensure security and fight against all forms of terrorism... and extremism,” a statement said. By Teresa Lesher A Saudi suicide bomber blew himself up in a mosque ur journey in life is never alone. Each of us has in Kuwait City on June 26, killing 26 worshippers and parents, and each is born into a family. In Islam, wounding 227 others in an attack claimed by the Othe journey through life is intrinsically woven Islamic State jihadist group. into family life. It is within the family structure that the The interior ministry has arrested more than 40 peo- journey of life takes shape. And it is in the holy Quran ple in connection with the attack and referred them to and prophetic traditions that the Muslim finds guidance the public prosecution for legal action. -

Miss Oklahoma Usa, Olivia Jordan, Wins the Miss Usa 2015 Title During the Live Reelz Channel Telecast from Baton Rouge, Louisiana

MISS OKLAHOMA USA, OLIVIA JORDAN, WINS THE MISS USA 2015 TITLE DURING THE LIVE REELZ CHANNEL TELECAST FROM BATON ROUGE, LOUISIANA Baton Rouge, LA – July 12, 2015 – This evening, a panel of former Miss Universe Organization titleholders chose Miss Oklahoma USA, Olivia Jordan, as Miss USA 2015. Emmy Award-winning game show host Todd Newton and former Miss Wisconsin USA 2009 Alex Wehrley hosted the competition. OK! TV’s Julie Alexandria provided backstage behind-the-scenes commentary from the Baton Rouge River Center in Baton Rouge, Louisiana. Olivia Jordan, a 26-year-old from Tulsa, Oklahoma, has appeared in several national/international commercials and feature films. Olivia is a graduate of Boston University, where she earned a degree in health science. As a model, Olivia has walked for Sherri Hill in New York Fashion Week 2015 and the runway at Miami Mercedes-Benz Fashion Week Swim 2014. She has also been featured in Cosmopolitan, Shape and Vogue Japan. Olivia has been recognized by Children of the Night, a group dedicated to getting children out of prostitution, for her work with the organization. The judging panel for the 2015 MISS USA® Pageant included Nana Meriwether, Miss USA 2012, two-time All- American athlete and co-founder of the non-profit Meriwether Foundation, which serves the most impoverished sectors in five southern African nations; Leila Umenyiora, Miss Universe 2011, humanitarian and named Drylands Ambassador by the United Nation Convention to Combat Desertification (UNCCD); Rima Fakih, Miss USA 2010, named one of the -

P36-40 Layout 1

Looking for traces of ‘Mockingbird’ in Harper Lee’s hometown TUESDAY, JULY 14, 2015 39 A Malaysian woman walks past mannequins displaying traditional head scarves or tudung at a Ramadan market ahead of the Eid Al-Fitr festival in Kuala Lumpur. — AFP Miss USA crowned amid Trump controversy ith flashes of flesh and impressive Trump did not attend the pageant, tweeting that resumes, Miss USA was crowned Sunday, he was busy campaigning in Arizona. Several com- Wafter the pageant was shunned by major panies and countries have decried Trump over the networks following controversial comments on comments. Mexican immigrants by presidential candidate Costa Rica pulled out of the Miss Universe com- Donald Trump. Miss Oklahoma, Olivia Jordan, petition following the remarks, and said they took top honors at the contest, which is co-owned would not be sending their contestant to the by Trump and came under fire after his controver- international pageant. But Trump’s voluble and sial claim that Mexico was sending criminals to the unfiltered style has won him a surge of support United States. amid a chaotic Republican field with few frontrun- Broadcaster NBC and Spanish-language ners. While the mogul’s business interests have Univision both said they would not air the show taken a hit, his political profile has soared. He is and a co-host pulled out, but the pageant went polling as the number two contender in the par- forward and included numerous contestants with ty’s showdown for the 2016 election. — AFP Miss Oklahoma Olivia Jordan celebrates after being ties to Mexico. -

DUR 26/01/2015 : CUERPO E : 1 : Página 1

Jurado El actor William Levy, el productor musical Emilio Estefan, la periodista colombiana Nina García, el E jugador de futbol americano DeSean Jackson, el actor Rob LUNES 26 Dyrdek y el beisbolista DE ENERO Giancarlo Stanton, fueron DE 2015 parte del jurado. kiosko@elsiglodeduran- Paulina Vega, de 22 años, se convirtió en la sucesora de la venezolana Gabriela Isler. EL SIGLO DE DURANGO cursantes modelaran en Durango, Dgo. traje de baño. Emociones al límite fue LO SOBRESALIENTE lo que dejó la noche del do- Tras nombrar a las diez mingo 25 de enero, durante bellezas que pasaban a la la 63 edición del certamen siguiente etapa, apareció de belleza más importante, Nick Jonas, quien las Miss Universo, en donde la acompañó con su música colombiana de 22 años, mientras realizaban el tra- Paulina Vega, se convirtió dicional recorrido en ves- en la ganadora, tras vencer tido de gala. en la final a las represen- En esta edición del cer- tantes de Jamaica, Ucrania, tamen que por primera Estados Unidos y Holanda. vez se extendió a tres horas, la repre- INICIA LA EDICIÓN 63 sentante de Nigeria En punto de las 19:00 horas fue elegida como (hora centro de México), Miss Simpatía; el título Traje Típico. Indonesia destacó en el certamen en inició la transmisión en de Miss Fotogenia quedó en la categoría de Traje Típico. vivo del certamen celebra- manos de Puerto Rico, do en Miami, con la bien- mientras que el esperado venida de los conductores reconocimiento a Traje Tí- Natalie Morales y Thomas pico fue para Indonesia.