STAT 810 Probability Theory I

Recommended publications

Dynkin (λ-) and π-Systems; Monotone Classes of Sets, and of Functions – with Some Examples of Application (Mainly of a Probabilistic Flavor)

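Dynkin (λ-) and π-systems; monotone classes of sets, and of functions – with some examples of application (mainly of a probabilistic flavor). Matija Vidmar, February 7, 2018. 1 Dynkin and π-systems. Some basic notation: Throughout, for measurable spaces $(A, \mathcal{A})$ and $(B, \mathcal{B})$, (i) $\mathcal{A}/\mathcal{B}$ will denote the class of $\mathcal{A}/\mathcal{B}$-measurable maps, and (ii) when $A = B$, $\mathcal{A} \vee \mathcal{B} := \sigma_A(\mathcal{A} \cup \mathcal{B})$ will be the smallest σ-field on $A$ containing both $\mathcal{A}$ and $\mathcal{B}$ (this notation has obvious extensions to arbitrary families of σ-fields on a given space). Furthermore, for a measure $\mu$ on $\mathcal{F}$, $\mu f := \mu(f) := \int f \, d\mu$ will signify the integral of an $f \in \mathcal{F}/\mathcal{B}_{[-\infty,\infty]}$ against $\mu$ (assuming $\mu f^{+} \wedge \mu f^{-} < \infty$). Finally, for a probability space $(\Omega, \mathcal{F}, \mathsf{P})$ and a sub-σ-field $\mathcal{G}$ of $\mathcal{F}$, $\mathsf{P}_{\mathcal{G}} f := \mathsf{P}_{\mathcal{G}}(f) := E_{\mathsf{P}}[f \mid \mathcal{G}]$ will denote the conditional expectation of an $f \in \mathcal{F}/\mathcal{B}_{[-\infty,\infty]}$ under $\mathsf{P}$ w.r.t. $\mathcal{G}$ (assuming $\mathsf{P} f^{+} \wedge \mathsf{P} f^{-} < \infty$; in particular, for $F \in \mathcal{F}$, $\mathsf{P}_{\mathcal{G}}(F) := \mathsf{P}(F \mid \mathcal{G}) = E_{\mathsf{P}}[1_F \mid \mathcal{G}]$ will be the conditional probability of $F$ under $\mathsf{P}$ given $\mathcal{G}$). We consider first Dynkin and π-systems. Definition 1. Let $\Omega$ be a set, $\mathcal{D} \subset 2^{\Omega}$ a collection of its subsets. Then $\mathcal{D}$ is called a Dynkin system, or a λ-system, on $\Omega$, if (i) $\Omega \in \mathcal{D}$, (ii) $\{A, B\} \subset \mathcal{D}$ and $A \subset B$ implies $B \setminus A \in \mathcal{D}$, and (iii) whenever $(A_i)_{i \in \mathbb{N}}$ is a sequence in $\mathcal{D}$, and $A_i \subset A_{i+1}$ for all $i \in \mathbb{N}$, then $\bigcup_{i \in \mathbb{N}} A_i \in \mathcal{D}$.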

Problem Set 1 This Problem Set Is Due on Friday, September 25

MA 2210 Fall 2015 - Problem set 1. This problem set is due on Friday, September 25. All parts (#) count 10 points. Solve the problems in order and please turn in for full marks (140 points): • Problems 1, 2, 6, 8, 9 in full • Problem 3, either #1 or #2 (not both) • Either Problem 4 or Problem 5 (not both) • Problem 7, either #1 or #2 (not both). 1. Let $\mathcal{D}$ be the dyadic grid on $\mathbb{R}$ and $m$ denote the Lebesgue outer measure on $\mathbb{R}$, namely for $A \subset \mathbb{R}$, $m(A) = \inf\left\{ \sum_{j=1}^{\infty} \ell(I_j) : A \subset \bigcup_{j=1}^{\infty} I_j,\ I_j \in \mathcal{D}\ \forall j \right\}$. #1 Let $n \in \mathbb{Z}$. Prove that $m$ does not change if we restrict to intervals with $\ell(I_j) \le 2^{-n}$, namely $m(A) = m^{(n)}(A)$, where $m^{(n)}(A) := \inf\left\{ \sum_{j=1}^{\infty} \ell(I_j) : A \subset \bigcup_{j=1}^{\infty} I_j,\ I_j \in \mathcal{D}\ \forall j,\ \ell(I_j) \le 2^{-n} \right\}$. #2 Let $t \in \mathbb{R}$ be of the form $t = \sum_{n=-N}^{N} k_n 2^{-n}$ for suitable integers $N, k_{-N}, \ldots, k_N$. Prove that $m$ is invariant under translations by $t$, namely $m(A) = m(A + t)$ for all $A \subset \mathbb{R}$. Hints. For #2, reduce to the case $t = 2^{-n}$ for some $n$. Then use #1 and that $\mathcal{D}_m = \{ I \in \mathcal{D} : \ell(I) = 2^{-m} \}$ is invariant under translation by $2^{-n}$ whenever $m \ge n$. 2. Let $\mathcal{O}$ be the collection of all open cubes $I \subset \mathbb{R}^d$ and define the outer measure on $\mathbb{R}^d$ given by $n(A) = \inf\left\{ \sum_{n=0}^{\infty} |R_n| : A \subset \bigcup_{n=0}^{\infty} R_n,\ R_n \in \mathcal{O}\ \forall n \right\}$, where $|R|$ is the Euclidean volume of the cube $R$.

Dynkin Systems and Regularity of Finite Borel Measures Homework 10

Math 105, Spring 2012, Professor Mariusz Wodzicki. Dynkin systems and regularity of finite Borel measures. Homework 10, due April 13, 2012. 1. Let $p \in X$ be a point of a topological space. Show that the set $\{p\} \subseteq X$ is closed if and only if for any point $q \ne p$, there exists a neighborhood $N \ni q$ such that $p \notin N$. Derive from this that $X$ is a $T_1$-space if and only if every singleton subset is closed. Let $\mathcal{C}, \mathcal{D} \subseteq \mathcal{P}(X)$ be arbitrary families of subsets of a set $X$. We define the family $\mathcal{D} : \mathcal{C}$ as $\mathcal{D} : \mathcal{C} := \{ E \subseteq X \mid C \cap E \in \mathcal{D} \text{ for every } C \in \mathcal{C} \}$. 2. The Exchange Property. Show that, for any families $\mathcal{B}, \mathcal{C}, \mathcal{D} \subseteq \mathcal{P}(X)$, one has $\mathcal{B} \subseteq \mathcal{D} : \mathcal{C}$ if and only if $\mathcal{C} \subseteq \mathcal{D} : \mathcal{B}$. Dynkin systems. We say that a family of subsets $\mathcal{D} \subseteq \mathcal{P}(X)$ of a set $X$ is a Dynkin system (or a Dynkin class), if it satisfies the following three conditions: (D1) if $D \in \mathcal{D}$, then $D^c \in \mathcal{D}$; (D2) if $\{D_i\}_{i \in I}$ is a countable family of disjoint members of $\mathcal{D}$, then $\bigcup_{i \in I} D_i \in \mathcal{D}$; (D3) $X \in \mathcal{D}$. 3. Show that any Dynkin system satisfies also: (D4) if $D, D' \in \mathcal{D}$ and $D' \subseteq D$, then $D \setminus D' \in \mathcal{D}$. 4. Show that the intersection, $\bigcap_{i \in I} \mathcal{D}_i$, of any family of Dynkin systems $\{\mathcal{D}_i\}_{i \in I}$ on a set $X$ is a Dynkin system on $X$. It follows that, for any family $\mathcal{F} \subseteq \mathcal{P}(X)$, there exists a smallest Dynkin system containing $\mathcal{F}$, namely the intersection of all Dynkin systems containing $\mathcal{F}$.

The Dynkin System Generated by Balls in $\mathbb{R}^d$ Contains All Borel Sets

PROCEEDINGS OF THE AMERICAN MATHEMATICAL SOCIETY, Volume 128, Number 2, Pages 433–437, S 0002-9939(99)05507-0. Article electronically published on September 23, 1999. THE DYNKIN SYSTEM GENERATED BY BALLS IN $\mathbb{R}^d$ CONTAINS ALL BOREL SETS. MIROSLAV ZELENÝ (Communicated by Frederick W. Gehring). Abstract. We show that for every $d \in \mathbb{N}$ each Borel subset of the space $\mathbb{R}^d$ with the Euclidean metric can be generated from closed balls by complements and countable disjoint unions. Let $X$ be a nonempty set and $\mathcal{S} \subset 2^X$. Following [B, p. 8] we say that $\mathcal{S}$ is a Dynkin system if (D1) $X \in \mathcal{S}$; (D2) $A \in \mathcal{S} \Rightarrow X \setminus A \in \mathcal{S}$; (D3) if $A_n \in \mathcal{S}$ are pairwise disjoint, then $\bigcup_{n=1}^{\infty} A_n \in \mathcal{S}$. Some authors use the name σ-class instead of Dynkin system. The smallest Dynkin system containing a system $\mathcal{T} \subset 2^X$ is denoted by $\mathcal{D}(\mathcal{T})$. Let $P$ be a metric space. The system of all closed balls in $P$ (of all Borel subsets of $P$, respectively) will be denoted by $\mathrm{Balls}(P)$ ($\mathrm{Borel}(P)$, respectively). We will deal with the problem of whether $(\star)$ $\mathcal{D}(\mathrm{Balls}(P)) = \mathrm{Borel}(P)$. One motivation for such a problem comes from measure theory. Let $\mu$ and $\nu$ be finite Radon measures on a metric space $P$ having the same values on each ball. Is it true that $\mu = \nu$? If $\mathcal{D}(\mathrm{Balls}(P)) = \mathrm{Borel}(P)$, then obviously $\mu = \nu$. If $P$ is a Banach space, then $\mu = \nu$ again (Preiss, Tišer [PT]). But Preiss and Keleti ([PK]) showed recently that $(\star)$ is false in infinite-dimensional Hilbert spaces. We prove the following result.

Measure and Integration

3 Measure and Integration. Man is the measure of all things. – Pythagoras. Lebesgue is the measure of almost all things. – Anonymous. 3.1 Motivation. We shall give a few reasons why it is worth bothering with measure theory and the Lebesgue integral. To this end, we stress the importance of measure theory in three different areas. 3.1.1 We want a powerful integral. At the end of the previous chapter we encountered a neat application of Banach's fixed point theorem to solve ordinary differential equations. An essential ingredient in the argument was the observation in Lemma 2.77 that the operation of differentiation could be replaced by integration. Note that differentiation is an operation that destroys regularity, while integration yields further regularity. It is a consequence of the fundamental theorem of calculus that the indefinite integral of a continuous function is a continuously differentiable function. So far we used the elementary notion of the Riemann integral. Let us quickly recall the definition of the Riemann integral on a bounded interval. Definition 3.1. Let $[a, b]$ with $-\infty < a < b < \infty$ be a compact interval. A partition of $[a, b]$ is a finite sequence $p := (t_0, \ldots, t_N)$ such that $a = t_0 < t_1 < \cdots < t_N = b$. The mesh size of $p$ is $|p| := \max_{1 \le k \le N} |t_k - t_{k-1}|$. Given a partition $p$ of $[a, b]$, an associated vector of sample points (frequently also called tags) is a vector $x = (x_1, \ldots, x_N)$ such that $x_k \in [t_{k-1}, t_k]$. Given a function $f : [a, b] \to \mathbb{R}$ and a tagged partition $(p, x)$ of $[a, b]$, the Riemann sum $S(f, p, x)$ is defined by $S(f, p, x) := \sum_{k=1}^{N} f(x_k)(t_k - t_{k-1})$.
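The Riemann sum of Definition 3.1 can be sketched in a few lines of Python. This is only an illustration: the function names `riemann_sum` and `mesh_size`, the test function $t \mapsto t^2$, and the uniform midpoint-tagged partition of $[0, 1]$ are assumptions chosen for the example, not part of the excerpted notes.

```python
def mesh_size(partition):
    """Mesh size |p|: the largest gap t_k - t_{k-1} of the partition."""
    return max(partition[k] - partition[k - 1] for k in range(1, len(partition)))


def riemann_sum(f, partition, tags):
    """Riemann sum S(f, p, x) = sum over k of f(x_k) * (t_k - t_{k-1})."""
    if len(tags) != len(partition) - 1:
        raise ValueError("need exactly one tag per subinterval")
    total = 0.0
    for k in range(1, len(partition)):
        left, right = partition[k - 1], partition[k]
        if not left < right:
            raise ValueError("partition points must be strictly increasing")
        if not left <= tags[k - 1] <= right:
            raise ValueError("each tag x_k must lie in [t_{k-1}, t_k]")
        total += f(tags[k - 1]) * (right - left)
    return total


# Hypothetical example: uniform partition of [0, 1] into 4 pieces, midpoint tags.
n = 4
p = [k / n for k in range(n + 1)]
x = [(p[k - 1] + p[k]) / 2 for k in range(1, n + 1)]
approx = riemann_sum(lambda t: t * t, p, x)  # approximates the integral of t^2 on [0, 1]
```

With these four midpoint tags the sum is 0.328125, close to the exact integral 1/3; refining the partition (smaller mesh size) brings the sum closer.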

Measures 1 Introduction

Measures. These preliminary lecture notes are partly based on textbooks by Athreya and Lahiri, Capinski and Kopp, and Folland. 1 Introduction. Our motivation for studying measure theory is to lay a foundation for modeling probabilities. I want to give a bit of motivation for the structure of measures that has developed by providing a sort of history of thought of measurement. Not really history of thought, this is more like a fictionalization (i.e. with the story but minus the proofs of assertions) of a standard treatment of Lebesgue measure that you can find, for example, in Capinski and Kopp, Chapter 2, or in Royden, books that begin by first studying Lebesgue measure on $\mathbb{R}$. For studying probability, we have to study measures more generally; that will follow the introduction. People were interested in measuring length, area, volume etc. Let's stick to length. How was one to extend the notion of the length of an interval $(a, b)$, $l(a, b) = b - a$, to more general subsets of $\mathbb{R}$? Given an interval $I$ of any type (closed, open, left-open-right-closed, etc.), let $l(I)$ be its length (the difference between its larger and smaller endpoints). Then the notion of Lebesgue outer measure (LOM) of a set $A \subseteq \mathbb{R}$ was defined as follows. Cover $A$ with a countable collection of intervals, and measure the sum of lengths of these intervals. Take the smallest such sum, over all countable collections of intervals that cover $A$, to be the LOM of $A$. That is, $m^{*}(A) = \inf Z_A$, where $Z_A = \left\{ \sum_{n=1}^{\infty} l(I_n) : A \subseteq \bigcup_{n=1}^{\infty} I_n \right\}$ (the $I_n$ referring to intervals).

Probability Theory I

Probability theory I. Prof. Dr. Alexander Drewitz, Universität zu Köln. Preliminary version of July 16, 2019. If you spot any typos or mistakes, please drop an email to [email protected]. Contents: 1 Set functions; 1.1 Systems of sets; 1.1.1 Semirings, rings, and algebras; 1.1.2 σ-algebras and Dynkin systems; 1.2 Set functions; 1.2.1 Properties of set functions; 1.3 Carathéodory's extension theorem ('Maßerweiterungssatz'); 1.3.1 Lebesgue measure; 1.3.2 Lebesgue-Stieltjes measure; 1.4 Measurable functions, random variables; 1.5 Image measures, distributions; 2 The Lebesgue integral; 2.0.1 Integrals of simple functions; 2.0.2 Lebesgue integral for measurable functions; 2.0.3 Lebesgue vs. Riemann integral; 2.1 Convergence theorems; 2.1.1 Dominated and monotone convergence; 2.2 Measures with densities, absolute continuity; 2.2.1 Almost sure / almost everywhere properties; 2.2.2 Hahn-Jordan decomposition; 2.2.3 Lebesgue's decomposition theorem, Radon-Nikodym derivative; 2.2.4 Integration with respect to image measures

21-721 Probability Spring 2010

21-721 Probability, Spring 2010. Prof. Agoston Pisztora, notes by Brendan Sullivan. May 6, 2010. Contents: 0 Introduction; 1 Measure Theory; 1.1 σ-Fields; 1.2 Dynkin Systems; 1.3 Probability Measures; 1.4 Independence; 1.5 Measurable Maps and Induced Measures; 1.6 Random Variables and Expectation; 1.6.1 Integral (expected value); 1.6.2 Convergence of RVs; 1.7 Product Spaces; 1.7.1 Infinite product spaces; 2 Laws of Large Numbers; 2.1 Examples and Applications of LLN; 3 Weak Convergence of Probability Measures; 3.1 Fourier Transforms of Probability Measures; 4 Central Limit Theorems and Poisson Distributions; 4.1 Poisson Convergence; 5 Conditional Expectations; 5.1 Properties and computational tools; 5.2 Conditional Expectation and Product Measures; 5.2.1 Conditional Densities; 6 Martingales; 6.1 Gambling Systems and Stopping Times; 6.2 Martingale Convergence; 6.3 Uniformly Integrable Martingales; 6.4 Further Applications of Martingale Convergence; 6.4.1 Martingales with $L^1$-dominated increments; 6.4.2 Generalized Borel-Cantelli II; 6.4.3 Branching processes; 6.5 Sub and supermartingales; 6.6 Maximal inequalities; 6.7 Backwards martingales; 6.8 Concentration inequalities: the Martingale method; 6.8.1 Applications; 6.9 Large Deviations: Cramer's Theorem; 6.9.1 Further properties under Cramer's condition. 0 Introduction. Any claim marked with (***) is meant to be proven as an exercise.

Dynkin Systems

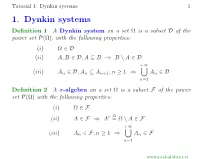

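Tutorial 1: Dynkin systems (www.probability.net). 1. Dynkin systems. Definition 1. A Dynkin system on a set $\Omega$ is a subset $\mathcal{D}$ of the power set $\mathcal{P}(\Omega)$, with the following properties: (i) $\Omega \in \mathcal{D}$; (ii) $A, B \in \mathcal{D},\ A \subseteq B \Rightarrow B \setminus A \in \mathcal{D}$; (iii) $A_n \in \mathcal{D},\ A_n \subseteq A_{n+1},\ n \ge 1 \Rightarrow \bigcup_{n=1}^{+\infty} A_n \in \mathcal{D}$. Definition 2. A σ-algebra on a set $\Omega$ is a subset $\mathcal{F}$ of the power set $\mathcal{P}(\Omega)$ with the following properties: (i) $\Omega \in \mathcal{F}$; (ii) $A \in \mathcal{F} \Rightarrow A^c = \Omega \setminus A \in \mathcal{F}$; (iii) $A_n \in \mathcal{F},\ n \ge 1 \Rightarrow \bigcup_{n=1}^{+\infty} A_n \in \mathcal{F}$. Exercise 1. Let $\mathcal{F}$ be a σ-algebra on $\Omega$. Show that $\emptyset \in \mathcal{F}$, that if $A, B \in \mathcal{F}$ then $A \cup B \in \mathcal{F}$ and also $A \cap B \in \mathcal{F}$. Recall that $B \setminus A = B \cap A^c$ and conclude that $\mathcal{F}$ is also a Dynkin system on $\Omega$. Exercise 2. Let $(\mathcal{D}_i)_{i \in I}$ be an arbitrary family of Dynkin systems on $\Omega$, with $I \ne \emptyset$. Show that $\mathcal{D} = \bigcap_{i \in I} \mathcal{D}_i$ is also a Dynkin system on $\Omega$. Exercise 3. Let $(\mathcal{F}_i)_{i \in I}$ be an arbitrary family of σ-algebras on $\Omega$, with $I \ne \emptyset$. Show that $\mathcal{F} = \bigcap_{i \in I} \mathcal{F}_i$ is also a σ-algebra on $\Omega$. Exercise 4. Let $\mathcal{A}$ be a subset of the power set $\mathcal{P}(\Omega)$. Define: $\mathfrak{D}(\mathcal{A}) = \{ \mathcal{D} \text{ Dynkin system on } \Omega : \mathcal{A} \subseteq \mathcal{D} \}$. Show that $\mathcal{P}(\Omega)$ is a Dynkin system on $\Omega$, and that $\mathfrak{D}(\mathcal{A})$ is not empty. Define: $\mathcal{D}(\mathcal{A}) = \bigcap_{\mathcal{D} \in \mathfrak{D}(\mathcal{A})} \mathcal{D}$. Show that $\mathcal{D}(\mathcal{A})$ is a Dynkin system on $\Omega$ such that $\mathcal{A} \subseteq \mathcal{D}(\mathcal{A})$, and that it is the smallest Dynkin system on $\Omega$ with such property, (i.e.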
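On a finite set, Definitions 1 and 2 can be checked by brute force, because every increasing sequence in a finite family is eventually constant and countable unions reduce to finite ones. The Python sketch below does this; the names `is_dynkin_system` and `is_sigma_algebra` and the example family on {1, 2, 3, 4} are assumptions made for illustration and do not come from the tutorial.

```python
from itertools import combinations


def is_dynkin_system(omega, family):
    """Check Definition 1 for a finite family of subsets of a finite set omega."""
    fam = {frozenset(s) for s in family}
    if frozenset(omega) not in fam:              # (i) omega belongs to the family
        return False
    for a in fam:                                # (ii) A subset of B implies B \ A in the family
        for b in fam:
            if a <= b and (b - a) not in fam:
                return False
    # (iii) holds automatically here: an increasing sequence in a finite family
    # is eventually constant, so its union is one of its own members.
    return True


def is_sigma_algebra(omega, family):
    """Check Definition 2 for a finite family of subsets of a finite set omega."""
    fam = {frozenset(s) for s in family}
    om = frozenset(omega)
    if om not in fam:                            # (i) omega belongs to the family
        return False
    if any((om - a) not in fam for a in fam):    # (ii) closed under complement
        return False
    # (iii) for a finite family, countable unions reduce to pairwise unions.
    return all((a | b) in fam for a in fam for b in fam)


# Hypothetical example: a Dynkin system on {1, 2, 3, 4} that is not a sigma-algebra.
omega = {1, 2, 3, 4}
lam = [set(), {1, 2}, {3, 4}, {1, 3}, {2, 4}, {1, 2, 3, 4}]
power_set = [set(c) for r in range(5) for c in combinations(sorted(omega), r)]

lam_is_dynkin = is_dynkin_system(omega, lam)
lam_is_sigma = is_sigma_algebra(omega, lam)
```

The family `lam` passes the Dynkin check but fails the σ-algebra check, since {1, 2} ∪ {1, 3} = {1, 2, 3} is missing. The power set passes both, in line with Exercise 1 (every σ-algebra is a Dynkin system); the converse fails, as `lam` shows.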

Dynkin's Lemma in Measure Theory

FORMALIZED MATHEMATICS, Volume 9, Number 3, 2001, University of Białystok. Dynkin's Lemma in Measure Theory. Franz Merkl, University of Bielefeld. Summary. This article formalizes the proof of Dynkin's lemma in measure theory. Dynkin's lemma is a useful tool in measure theory and probability theory: it frequently helps to generalize a statement about all elements of an intersection-stable set system to all elements of the sigma-field generated by that system. MML Identifier: DYNKIN. The terminology and notation used in this paper have been introduced in the following articles: [5], [11], [1], [4], [2], [3], [7], [6], [12], [13], [8], [10], and [9]. 1. Preliminaries. For simplicity, we adopt the following rules: $O_1$ denotes a non empty set, $f$ denotes a sequence of subsets of $O_1$, $X$, $A$, $B$ denote subsets of $O_1$, $D$ denotes a non empty subset of $2^{O_1}$, $n$, $m$ denote natural numbers, $F$ denotes a non empty set, and $x$, $Y$ denote sets. Next we state two propositions: (1) For every sequence $f$ of subsets of $O_1$ and for every $x$ holds $x \in \operatorname{rng} f$ iff there exists $n$ such that $f(n) = x$. (2) For every $n$ holds $\operatorname{PSeg} n$ is finite. Let us consider $n$. One can verify that $\operatorname{PSeg} n$ is finite. Next we state the proposition (3) For all sets $x$, $y$, $z$ such that $x \subseteq y$ holds $x$ misses $z \setminus y$. Let $a$, $b$, $c$ be sets. The functor $a, b$ followed by $c$ is defined as follows: (Def. 1) $a, b$ followed by $c = (\mathbb{N} \longmapsto c) +\cdot\, [0 \longmapsto a, 1 \longmapsto b]$.

Sigma-Algebra from Wikipedia, the Free Encyclopedia Chapter 1

Sigma-algebra. From Wikipedia, the free encyclopedia. Chapter 1 Algebra of sets. The algebra of sets defines the properties and laws of sets, the set-theoretic operations of union, intersection, and complementation and the relations of set equality and set inclusion. It also provides systematic procedures for evaluating expressions, and performing calculations, involving these operations and relations. Any set of sets closed under the set-theoretic operations forms a Boolean algebra with the join operator being union, the meet operator being intersection, and the complement operator being set complement. 1.1 Fundamentals. The algebra of sets is the set-theoretic analogue of the algebra of numbers. Just as arithmetic addition and multiplication are associative and commutative, so are set union and intersection; just as the arithmetic relation "less than or equal" is reflexive, antisymmetric and transitive, so is the set relation of "subset". It is the algebra of the set-theoretic operations of union, intersection and complementation, and the relations of equality and inclusion. For a basic introduction to sets see the article on sets, for a fuller account see naive set theory, and for a full rigorous axiomatic treatment see axiomatic set theory. 1.2 The fundamental laws of set algebra. The binary operations of set union ($\cup$) and intersection ($\cap$) satisfy many identities. Several of these identities or "laws" have well established names. Commutative laws: • $A \cup B = B \cup A$ • $A \cap B = B \cap A$. Associative laws: • $(A \cup B) \cup C = A \cup (B \cup C)$ • $(A \cap B) \cap C = A \cap (B \cap C)$. Distributive laws: • $A \cup (B \cap C) = (A \cup B) \cap (A \cup C)$ • $A \cap (B \cup C) = (A \cap B) \cup (A \cap C)$. The analogy between unions and intersections of sets, and addition and multiplication of numbers, is quite striking.
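The commutative, associative, and distributive laws listed above can be spot-checked exhaustively on a small universe. The Python sketch below does so with the built-in `frozenset` operators `|` (union) and `&` (intersection); the helper names `powerset` and `laws_hold` and the three-element universe are assumptions made for illustration. A finite check like this is a sanity test, not a proof of the general identities.

```python
from itertools import combinations, product


def powerset(universe):
    """All subsets of a finite universe, as frozensets."""
    items = list(universe)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)]


def laws_hold(universe):
    """Check the six listed laws for every triple (A, B, C) of subsets."""
    subsets = powerset(universe)
    for a, b, c in product(subsets, repeat=3):
        commutative = (a | b == b | a) and (a & b == b & a)
        associative = ((a | b) | c == a | (b | c)) and ((a & b) & c == a & (b & c))
        distributive = (a | (b & c) == (a | b) & (a | c)) and \
                       (a & (b | c) == (a & b) | (a & c))
        if not (commutative and associative and distributive):
            return False
    return True


# Hypothetical example universe with three elements (8 subsets, 512 triples).
all_laws_ok = laws_hold({"x", "y", "z"})
```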

Advanced Probability Theory

Advanced probability theory. Jiří Černý, June 1, 2016. Preface. These are lecture notes for the lecture 'Advanced Probability Theory' given at University of Vienna in SS 2014 and 2016. This is a preliminary version which will be updated regularly during the term. If you have questions, corrections or suggestions for improvements in the text, please let me know. Contents: 1 Introduction; 2 Probability spaces, random variables, expectation; 2.1 Kolmogorov axioms; 2.2 Random variables; 2.3 Expectation of real-valued random variables; 3 Independence; 3.1 Definitions; 3.2 Dynkin's lemma; 3.3 Elementary facts about the independence; 3.4 Borel-Cantelli lemma; 3.5 Kolmogorov 0–1 law; 4 Laws of large numbers; 4.1 Kolmogorov three series theorem; 4.2 Weak law of large numbers; 4.3 Strong law of large numbers; 4.4 Law of large numbers for triangular arrays; 5 Large deviations; 5.1 Sub-additive limit theorem; 5.2 Cramér's theorem; 6 Weak convergence of probability measures; 6.1 Weak convergence on $\mathbb{R}$; 6.2 Weak convergence on metric spaces; 6.3 Tightness on $\mathbb{R}$; 6.4 Prokhorov's theorem*; 7 Central limit theorem; 7.1 Characteristic functions; 7.2 Central limit theorem; 7.3 Some generalisations of the CLT*; 8 Conditional expectation; 8.1 Regular conditional probabilities*; 9 Martingales; 9.1 Definition and Examples; 9.2 Martingales convergence, a.s.