Embedded Demonstrator for Video Presentation and Manipulation

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Serial Bus Isolation Significantly Improved System Protocols

1 On-Orbit Implementation I. INTRODUCTION ANY robotic, automotive, and aerospace systems rely of Discrete Isolation M on serial communication buses to integrate the mul- titude of distributed devices necessary for system operation Schemes for Improved [1]. As more commercial-off-the-shelf (COTS) electronics find their way into these applications, so do many COTS practices and protocols [2]. Two of the most prevalent COTS serial Reliability of Serial communication protocols, Inter-Integrated Circuit (I2C) and Serial Peripheral Interface (SPI), are inherently vulnerable to Communication Buses single-point failures capable of disabling entire branches of the distributed system [3]. However, the embedded systems literature is lacking a concise discussion on how these serial MAXIMILLIAN HOLLIDAY Stanford University, Stanford, USA communication buses can fail, the impact bus failure poses to the system, and how to readily protect I2C and SPI buses J. GABRIEL BUCKMASTER Stanford University, Stanford, USA from their inherent single-point failure modes without custom device or controller-level modifications to the system [4]. ZACHARY MANCHESTER 2 Carnegie Mellon University, Pittsburgh, USA Their simplicity and ubiquitous hardware support make I C and SPI communication buses an integral part of modern DEBBIE G. SENESKY Stanford University, Stanford, USA embedded systems applications requiring distributed sensor and computing nodes. For example, consider the design task of selecting digital temperature and inertial sensor compo- nents needed at multiple locations along a robotic arm. Of Abstract—Serial communication buses are used in electronic the 109 digital temperature sensors currently sold by Texas systems to interconnect sensors and other devices, but two of 2 the most widely used protocols, I2C and SPI, are vulnerable to Instruments, 93% rely on either I C or SPI as a communi- bus-wide failures if even one device on the bus malfunctions. -

LTC1400 Complete SO-8, 12-Bit, 400Ksps ADC with Shutdown May 1996

Final Electrical Specifications LTC1400 Complete SO-8, 12-Bit, 400ksps ADC with Shutdown May 1996 FEATURES DESCRIPTIONU ■ Complete 12-Bit ADC in SO-8 The LTC ®1400 is a complete 400ksps, 12-bit A/D con- ■ Single Supply 5V or ±5V Operation verter which draws only 75mW from a 5V or ± 5V supplies. ■ Sample Rate: 400ksps This easy-to-use device comes complete with a 200ns ■ Power Dissipation: 75mW (Typ) sample-and-hold and a precision reference. Unipolar and ■ 70dB S/(N + D) and 74dB THD at Nyquist bipolar conversion modes add to the flexibility of the ADC. ■ No Missing Codes over Temperature The LTC1400 is capable of going into two power saving ■ NAP Mode with Instant Wake-Up: 6mW modes: NAP and SLEEP. In NAP mode, it consumes only ■ SLEEP Mode: 30µW 6mW of power and can wake up and convert immediately. ■ High Impendance Analog Input In the SLEEP mode, it consumes 30µW of power typically. ■ Input Range (1mV/LSB): 0V to 4.096 or ± 2.048V Upon power-up from SLEEP mode, a reference ready ■ Internal Reference Can Be Overdriven Externally (REFRDY) signal is available in the serial data word to ■ 3-Wire Interface to DSPs and Processors indicate that the reference has settled and the chip is ready to convert. APPLICATIOUNS The LTC1400 converts 0V to 4.096V unipolar inputs from a single 5V supply and ±2.048V bipolar inputs from ±5V ■ High Speed Data Acquisition supplies. Maximum DC specs include ±1LSB INL, ±1LSB ■ Digital Signal Processing DNL and 45ppm/°C drift over temperature. Guaranteed AC ■ Multiplexed Data Acquisition Systems performance includes 70dB S/(N + D) and 76dB THD at an ■ Audio and Telecom Processing input frequency of 100kHz, over temperature. -

Dual-Channel ∑∆ Modulator ADM0D79*

a Dual-Channel ∑∆ Modulator ADM0D79* FEATURES FUNCTIONAL BLOCK DIAGRAM High-Performance ∑∆ ADC Building Block VINL+ Fifth-Order, 64 Times Oversampling Modulator with Patented Noise-Shaping DAC Modulator Clock Rate to 3.57 MHz 103 dB Dynamic Range (for 20 kHz Input LOUT Bandwidth) ∫∫ ∫ ∫ ∫ ∫ Differential Architecture for Superior SNR and Dynamic Range DAC Dual-Channel Differential Analog Inputs (±6.2 V VINL– Differential Input Voltage) REFERENCE On-Chip Voltage Reference VINR+ APPLICATIONS DAC Digital Audio ROUT Medical Electronics ∫∫ ∫ ∫ ∫ ∫ Electronic Imaging Sonar Signal Processing DAC Instrumentation VINR– PRODUCT OVERVIEW dynamic range and excellent common-mode rejection character- The ADMOD79 Sigma-Delta (∑∆) modulator is a building istics. Because its modulator is single-bit, the ADMOD79 is block which can be used to build a superior analog-to-digital inherently monotonic and has no mechanism for producing conversion system customized to a particular application’s differential linearity errors. Analog and digital supply connec- requirement. The ADMOD79 is a two-channel, fully differen- tions are separated to isolate the analog circuitry from the digital tial modulator. Each channel consists of a fifth-order one-bit supplies. noise shaping modulator. An on-chip voltage reference provides The ADMOD79 is fabricated in a BiCMOS process and is a voltage source to both channels that is stable over temperature supplied in a 0.6" wide 28-lead cerdip package. The and time. There are separate single-bit digital outputs for each ADMOD79 operates from ±5 V power supplies over the channel. The ADMOD79 accepts a 64 × F input master clock S temperature range of –25°C to +70°C. -

DFT-Based Synchrophasor Estimation Algorithms and Their Integration in Advanced Phasor Measurement Units for the Real-Time Monitoring of Active Distribution Networks

DFT-based Synchrophasor Estimation Algorithms and their Integration in Advanced Phasor Measurement Units for the Real-time Monitoring of Active Distribution Networks THÈSE NO 6906 (2016) PRÉSENTÉE LE 5 FÉVRIER 2016 À LA FACULTÉ DES SCIENCES ET TECHNIQUES DE L'INGÉNIEUR LABORATOIRE DES SYSTÈMES ÉLECTRIQUES DISTRIBUÉS - CHAIRE EOS HOLDING PROGRAMME DOCTORAL EN ENERGIE ÉCOLE POLYTECHNIQUE FÉDÉRALE DE LAUSANNE POUR L'OBTENTION DU GRADE DE DOCTEUR ÈS SCIENCES PAR Paolo ROMANO acceptée sur proposition du jury: Dr S.-R. Cherkaoui, président du jury Prof. M. Paolone, directeur de thèse Prof. C. Muscas, rapporteur Dr R. Plana, rapporteur Prof. F. Rachidi-Haeri, rapporteur Suisse 2016 And in the end the love you take is equal to the love you make. A mia nonna Marisa, a mio zio Alfredo Acknowledgements Few but sincere words for those people who came along with me during this four years-long journey that looks like it started yesterday. First of all, I would like to express my heartfelt gratitude to Mario, a competent and intimate supervisor, almost a friend, that has given me the opportunity to start this experience. I am really grateful for the trust you put in me and the responsibilities you gave me that have allowed my personal and professional growth in a particularly friendly environment. Next a big thanks to my colleagues of DESL-PWRS group and particularly to Asja, Fabrizio, Lorenzo and Marco with whom I have spent most of my time, inside and outside the lab, during the last four years. Friends rather than colleagues! Thanks to my parents that supported me from the moment I took the decision to move abroad. -

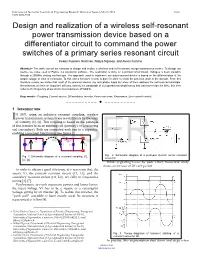

Design and Realization of a Wireless Self-Resonant Power Transmission

International Journal of Scientific & Engineering Research Volume 9, Issue 3, March-2018 1039 ISSN 2229-5518 Design and realization of a wireless self-resonant power transmission device based on a differentiator circuit to command the power switches of a primary series resonant circuit Fekou Kuekem Narcisse, Ndjiya Ngasop, and Alexis Kuitche Abstract-- The work carried out consists to design and realize a wireless and self-resonant energy transmission device. To design our device, we make used of Pspice 9.2 simulation software. The realization is done on a printed circuit board. Voltages is been visualize through a 200MHz analog oscilloscope. The approach used to implement our auto-resonant device is based on the differentiation of the output voltage in view of command. To this end a literature review is done in other to know the previous work in the domain. From this literature review, we notice that most of the previous device use lock phase loops but none of them address the self-resonant principle. Nevertheless we face an important difficulty, namely the bandwidth of our operational amplificatory that was lower than the MHz, this then reduces the frequency of our device to a maximum of 50MHz. Keys words-- Coupling, Current source, Differentiator, Inverter, Power switches, Resonance, Zero current control. —————————— —————————— 1 INTRODUCTION N 2007, using an inductive resonant coupling, wireless I power transmission systems were revolutionize by the team of witricity [1], [2]. This coupling is based on the principle of flux transfer from an emitting coil (primary), to a receiving coil (secondary). Both are connected each one to a capacitor, enabling a resonant functioning (see figure 1). -

LTC1400 Complete SO-8, 12-Bit

LTC1400 Complete SO-8, 12-Bit, 400ksps ADC with U Shutdown FEATURES DESCRIPTIO ■ Complete 12-Bit ADC in SO-8 The LTC®1400 is a complete 400ksps, 12-bit A/D converter ■ Single Supply 5V or ±5V Operation which draws only 75mW from 5V or ±5V supplies. This ■ Sample Rate: 400ksps easy-to-use device comes complete with a 200ns sample- ■ Power Dissipation: 75mW (Typ) and-hold and a precision reference. Unipolar and bipolar ■ 72dB S/(N + D) and –80dB THD at Nyquist conversion modes add to the flexibility of the ADC. The ■ No Missing Codes over Temperature LTC1400 has two power saving modes: Nap and Sleep. ■ Nap Mode with Instant Wake-Up: 6mW In Nap mode, it consumes only 6mW of power and can ■ Sleep Mode: 30μW wake up and convert immediately. In the Sleep mode, it ■ High Impedance Analog Input consumes 30μW of power typically. Upon power-up from ■ Input Range (1mV/LSB): 0V to 4.096V or ±2.048V Sleep mode, a reference ready (REFRDY) signal is available ■ Internal Reference Can Be Overdriven Externally in the serial data word to indicate that the reference has ■ 3-Wire Interface to DSPs and Processors (SPI and settled and the chip is ready to convert. TM MICROWIRE Compatible) The LTC1400 converts 0V to 4.096V unipolar inputs from APPLICATIOUS a single 5V supply and ±2.048V bipolar inputs from ±5V supplies. Maximum DC specs include ±1LSB INL, ±1LSB ■ High Speed Data Acquisition DNL and 45ppm/°C drift over temperature. Guaranteed AC ■ Digital Signal Processing performance includes 70dB S/(N + D) and –76dB THD at ■ Multiplexed Data Acquisition Systems an input frequency of 100kHz, over temperature. -

Data Sheet Before the Capacitor Is Used

LTC1404 Complete SO-8, 12-Bit, 600ksps ADC with Shutdown FEATURES DESCRIPTIO U ■ Complete 12-Bit ADC in SO-8 The LTC ®1404 is a complete 600ksps, 12-bit A/D con- ■ Single Supply 5V or ±5V Operation verter which draws only 75mW from 5V or ± 5V supplies. ■ Sample Rate: 600ksps This easy-to-use device comes complete with a 160ns ■ Power Dissipation: 75mW (Typ) sample-and-hold and a precision reference. Unipolar and ■ 72dB S/(N + D) and –80dB THD at Nyquist bipolar conversion modes add to the flexibility of the ADC. ■ No Missing Codes over Temperature The LTC1404 has two power saving modes: Nap and ■ Nap Mode with Instant Wake-Up: 7.5mW Sleep. In Nap mode, it consumes only 7.5mW of power ■ Sleep Mode: 60µW and can wake up and convert immediately. In the Sleep ■ High Impedance Analog Input mode, it consumes 60µW of power typically. Upon power- ■ Input Range (1mV/LSB): 0V to 4.096V or ± 2.048V up from Sleep mode, a reference ready (REFRDY) signal ■ Internal Reference Can Be Overdriven Externally is available in the serial data word to indicate that the ■ 3-Wire Interface to DSPs and Processors (SPI and reference has settled and the chip is ready to convert. TM MICROWIRE Compatible) The LTC1404 converts 0V to 4.096V unipolar inputs from U a single 5V supply and ±2.048V bipolar inputs from ±5V APPLICATIO S supplies. Maximum DC specs include ±1LSB INL, ±1LSB ■ High Speed Data Acquisition DNL and 45ppm/°C full-scale drift over temperature. Guaranteed AC performance includes 69dB S/(N + D) ■ Digital Signal Processing ■ Multiplexed Data Acquisition Systems and –76dB THD at an input frequency of 100kHz over ■ Audio and Telecom Processing temperature. -

Modeling, Design and Characterization of Delay-Chains Based True Random Number Generator Molka Ben Romdhane

Modeling, design and characterization of delay-chains based true random number generator Molka Ben Romdhane To cite this version: Molka Ben Romdhane. Modeling, design and characterization of delay-chains based true random number generator. Micro and nanotechnologies/Microelectronics. Télécom ParisTech, 2014. English. NNT : 2014ENST0055. tel-01354263 HAL Id: tel-01354263 https://pastel.archives-ouvertes.fr/tel-01354263 Submitted on 18 Aug 2016 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. 2014-ENST-0055 EDITE - ED 130 Doctorat ParisTech T H È S E pour obtenir le grade de docteur délivré par TELECOM ParisTech Spécialité « Electronique et Communication » présentée et soutenue publiquement par Molka BEN ROMDHANE le 1er Octobre 2014 Modélisation, implémentation et caractérisation de circuits générateurs de nombres aléatoires vrais utilisant des chaînes à retards Directeur de thèse : Jean-Luc DANGER, Professeur, Télécom ParisTech Co-encadrement de la thèse : Tarik GRABA, Maître de Conférences, Télécom ParisTech Jury M. Viktor FISCHER Rapporteur, Professeur, Université Jean Monnet Saint Etienne M. Olivier SENTIEYS Rapporteur, Professeur, Université de Rennes I M. Yannick TEGLIA Examinateur, Expert en sécurité, ST Microelectronics M. Guy GOGNIAT Examinateur, Professeur, Lab-STICC, Université de Bretagne Sud M. -

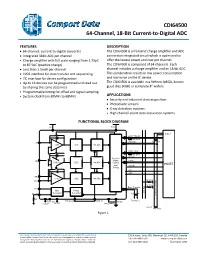

CDI64500 64-Channel, 18-Bit Current-To-Digital

CDI64500 64-Channel, 18-Bit Current-to-Digital ADC FEATURES DESCRIPTION • 64-channel, current-to-digital converter The CDI64500 is a frontend charge amplifier and ADC • Integrated 18bit ADC per channel conversion integrated circuit which is optimized to • Charge amplifier with full scale ranging from 1.75pC offer the lowest power and cost per channel. to 87.5pC (positive charge) The CDI64500 is composed of 64 channels. Each • Less than 1.5mW per channel channel includes a charge amplifier and an 18-bit ADC. • LVDS interface for data transfer and sequencing This combination results in low power consumption • I2C interface for device configuration and low noise on the IC device. • Up to 16 devices can be programmed and read out The CDI64500 is available in a 9x9mm fpBGA, known by sharing the same data lines good dies (KGD) or complete 8” wafers. • Programmable timing for offset and signal sampling • System clock from 30MHz to 68MHz APPLICATIONS • Security and industrial data acquisition • Photodiode sensors • X-ray detection systems • High channel-count data acquisition systems FUNCTIONAL BLOCK DIAGRAM 5V 3.3V Data In Start_Transfer 100 Ohm 100 Ohm IN0 DATAO CH0 CDS 18b ADC Start_Conv 100 Ohm Ready Common INx Digital Clock CH1..62 CDS 18b ADC Control HOST and Resetb Timing SCL SDA IN63 CH63 CDS 18b ADC 1K Ohm t Zero u O a t 1K Ohm a s D u End_ Transfer B n Vset o Bias Ramp C m 2 I Generator Generator m Ext_res 100 Ohm o C 6K Ramp_out Ramp_in To next IC Test_out DGND Test_in AGND Test_enable Last IC 100 Ohm Figure 1. -

Ltc1400is8#Pbf

LTC1400 Complete SO-8, 12-Bit, 400ksps ADC with U Shutdown FEATURES DESCRIPTIO ■ Complete 12-Bit ADC in SO-8 The LTC®1400 is a complete 400ksps, 12-bit A/D converter ■ Single Supply 5V or ±5V Operation which draws only 75mW from 5V or ±5V supplies. This ■ Sample Rate: 400ksps easy-to-use device comes complete with a 200ns sample- ■ Power Dissipation: 75mW (Typ) and-hold and a precision reference. Unipolar and bipolar ■ 72dB S/(N + D) and –80dB THD at Nyquist conversion modes add to the flexibility of the ADC. The ■ No Missing Codes over Temperature LTC1400 has two power saving modes: Nap and Sleep. ■ Nap Mode with Instant Wake-Up: 6mW In Nap mode, it consumes only 6mW of power and can ■ Sleep Mode: 30μW wake up and convert immediately. In the Sleep mode, it ■ High Impedance Analog Input consumes 30μW of power typically. Upon power-up from ■ Input Range (1mV/LSB): 0V to 4.096V or ±2.048V Sleep mode, a reference ready (REFRDY) signal is available ■ Internal Reference Can Be Overdriven Externally in the serial data word to indicate that the reference has ■ 3-Wire Interface to DSPs and Processors (SPI and settled and the chip is ready to convert. TM MICROWIRE Compatible) The LTC1400 converts 0V to 4.096V unipolar inputs from APPLICATIOUS a single 5V supply and ±2.048V bipolar inputs from ±5V supplies. Maximum DC specs include ±1LSB INL, ±1LSB ■ High Speed Data Acquisition DNL and 45ppm/°C drift over temperature. Guaranteed AC ■ Digital Signal Processing performance includes 70dB S/(N + D) and –76dB THD at ■ Multiplexed Data Acquisition Systems an input frequency of 100kHz, over temperature. -

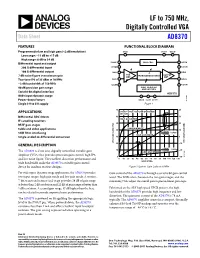

Data Sheet Rev. B

LF to 750 MHz, Digitally Controlled VGA Data Sheet AD8370 FEATURES FUNCTIONAL BLOCK DIAGRAM Programmable low and high gain (<2 dB resolution) VCCI VCCO VCCO Low range: −11 dB to +17 dB 3 11 6 High range: 6 dB to 34 dB Differential input and output PWUP 4 BIAS CELL 5 VOCM 200 Ω differential input ICOM 2 7 OCOM 100 Ω differential output INHI 1 8 OPHI 7 dB noise figure @ maximum gain PRE TRANSCONDUCTANCE OUTPUT AMP AMP Two-tone IP3 of 35 dBm @ 70 MHz INLO 16 9 OPLO −3 dB bandwidth of 750 MHz ICOM 15 10 OCOM 40 dB precision gain range SHIFT REGISTER AND LATCHES Serial 8-bit digital interface AD8370 Wide input dynamic range 14 13 12 Power-down feature DATA CLCK LTCH 03692-001 Single 3 V to 5 V supply Figure 1. 70 40 APPLICATIONS CODE = LAST 7 BITS OF GAIN CODE (NO MSB) Differential ADC drivers 60 30 HIGH GAIN MODE IF sampling receivers RF/IF gain stages 50 20 LOW GAIN MODE Cable and video applications 40 10 GAIN 0.409 SAW filter interfacing HIGH GAIN MODE CODE Single-ended-to-differential conversion 30 0 VOLTAGE GAIN (dB) VOLTAGE GAIN (V/V) GENERAL DESCRIPTION 20 –10 GAIN 10 0.059 –20 CODE The AD8370 is a low cost, digitally controlled, variable gain LOW GAIN MODE amplifier (VGA) that provides precision gain control, high IP3, 0 –30 03692-002 and low noise figure. The excellent distortion performance and 0 1020304050 60 70 80 90100 110 120 130 GAIN CODE wide bandwidth make the AD8370 a suitable gain control device for modern receiver designs. -

An Embedded Systems Programming Language (Especial)

Master of Science HES-SO in Engineering Av. de Provence 6 CH-1007 Lausanne Master of Science HES-SO in Engineering Orientation : Technologies industrielles (TIN) ESPecIaL : an Embedded Systems Programming Language Fait par Christopher Métrailler Sous la direction de Dr Pierre-André Mudry HES-SO // Valais, Systems Engineering Expert Prof. Pascal Felber Université de Neuchâtel, Institut d'informatique Lausanne, HES-SO//Master, 6 février 2015 Accepté par la HES SO//Master (Suisse, Lausanne) sur proposition de Prof. Pierre-André Mudry, conseiller de travail de Master Prof. Pascal Felber, expert principal Lausanne, 6 février 2015 Prof. Pierre-André Mudry Prof. Pierre Pompili Conseiller Responsable de la filière Systèmes industriels Layout based on the EPFL EDOC LATEX 2"template and BIBTEX. Report version 1.1 - April 2015. iii Abstract Nowadays embedded systems, available at very low cost, are becoming more and more present in many fields such as industry, automotive and education. This master thesis presents a prototype implementation of an embedded systems programming language. This report focuses on a high-level language, specially developed to build embedded applications, based on the dataflow paradigm. Using ready-to-use blocks, the user describes the block diagram of his application, and its corresponding C++ code is generated automatically, for a specific target embedded system. With the help of this prototype Domain Specific Language (DSL), implemented using the Scala programming language, embedded applications can be built with ease. Low-level C/C++ codes are no more necessary. Real-world applications based on the developed Embedded Systems Programming Language are presented at the end of this document.