Autotagger: a Model for Predicting Social Tags from Acoustic Features on Large Music Databases

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Trance Generation by Transformation

Trance generation by transformation. Darrell Conklin (1, 2) and Louis Bigo (1). (1) Department of Computer Science and Artificial Intelligence, University of the Basque Country UPV/EHU, San Sebastián, Spain. (2) IKERBASQUE, Basque Foundation for Science, Bilbao, Spain.

1 Introduction. Music generation methods can be broadly divided into two categories: rule-based methods use specified rules and constraints for style emulation and algorithmic composition; machine learning approaches generate musical objects by sampling from statistical models built from large corpora of music. In this paper we apply a mixed approach, generation by transformation, which conserves structural features of an existing piece while generating variable parts with a statistical model (Bigo and Conklin, 2015; Goienetxea and Conklin, 2015). In recent years several music informatics studies have started to explore electronic dance music (EDM) using audio analysis (Diakopoulos et al., 2009; Collins, 2012; Rocha et al., 2013; Yadati et al., 2014; Panteli et al., 2014). The symbolic generation of EDM is a new problem that has been explored in the work of Eigenfeldt and Pasquier (2013), who use a corpus of 100 EDM songs for the extraction of low order statistics. In our approach to EDM generation, a single template piece in Logic Pro X (LPX) format is transformed using a statistical model. As in most digital audio workstation formats, an LPX project contains effects, instrumentation, and orchestration, which can all be inherited by a transformed piece.

2 Methods. In this work we focus on generation of the EDM subgenre of trance. The approach to trance generation follows three steps: chord sequence extraction and transformation; chord voicing; and LPX streaming.

The Dark Romanticism of Francisco De Goya

The University of Notre Dame Australia, ResearchOnline@ND. Theses, 2018. The shadow in the light: The dark romanticism of Francisco de Goya. Elizabeth Burns-Dans, The University of Notre Dame Australia. Follow this and additional works at: https://researchonline.nd.edu.au/theses. Part of the Arts and Humanities Commons.

COMMONWEALTH OF AUSTRALIA, Copyright Regulations 1969. WARNING: The material in this communication may be subject to copyright under the Act. Any further copying or communication of this material by you may be the subject of copyright protection under the Act. Do not remove this notice.

Publication Details: Burns-Dans, E. (2018). The shadow in the light: The dark romanticism of Francisco de Goya (Master of Philosophy (School of Arts and Sciences)). University of Notre Dame Australia. https://researchonline.nd.edu.au/theses/214 This dissertation/thesis is brought to you by ResearchOnline@ND. It has been accepted for inclusion in Theses by an authorized administrator of ResearchOnline@ND. For more information, please contact [email protected].

DECLARATION: I declare that this Research Project is my own account of my research and contains as its main content work which had not previously been submitted for a degree at any tertiary education institution. Elizabeth Burns-Dans, 25 June 2018. This work is licenced under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International licence.

ACKNOWLEDGMENTS: This thesis would not have been possible without the enduring support of those around me. Foremost, I would like to thank my supervisor Professor Deborah Gare for her continuous, invaluable and guiding support.

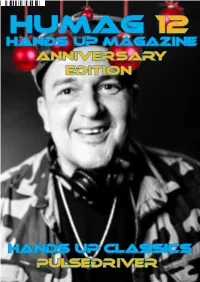

Hands up Classics Pulsedriver Hands up Magazine Anniversary Edition

HUMAG 12. Hands Up Magazine, Anniversary Edition. Hands Up Classics: Pulsedriver.

Welcome. The time has finally come: the 12th edition of the magazine has appeared. It took a long time and a lot has happened, but it is done. There are again specials, including an article about Pulsedriver; three pages on which you can read everything that has changed at HUMAG over time and how the design has changed; one page about one of the biggest hands up fans, namely Gabriel Chai from Singapore, who also works as a DJ; an exclusive interview with Nick Unique; and, in the locations section, an article about the Der Gelber Elefant discotheque in Mühlheim an der Ruhr. For the first time, this magazine is also available for download in English from our website for our friends from Poland and Singapore and from many other countries. We keep our fingers crossed that the events will continue in 2021 and hopefully the Easter Rave and the TechnoBase birthday will take place. Since I was really getting started again professionally and was also ill, there were unfortunately long delays in the publication of the magazine. I apologize for this. I wish you happy reading. Have a nice Christmas and a happy new year. Your Richard Gollnik ([email protected])

Something Christmas by Marious: The new song Christmas Time by Marious is now available on all download portals.

Content. Crossword puzzle: A crossword puzzle about the hands up scene with the chan- What is hands up? An explanation from Hands Up.

Spiritual but Not Religious: the Influence of the Current Romantic

ATR/88:3. Spiritual but Not Religious: The Influence of the Current Romantic Movement. Owen C. Thomas*

A significant number of people today identify themselves as “spiritual but not religious,” distancing themselves from continuing religious traditions of formation. Various explanations have been offered for this. This article claims that this phenomenon is the result of the influence of a new Romantic movement that began to emerge in the 1960s. Historians, sociologists, philosophers, and contemporary theologians give evidence for such a new movement, which is found in popular culture, and in postmodernism, neoconservatism, the new consumerism, and especially in the current spirituality movement. Romantic movements tend to disparage traditional religion and to affirm unorthodox, exotic, esoteric, mystical, and individualistic spiritualities; this is true of the current spirituality movement. The current spirituality movement also resembles Romantic movements in its ambiguity, and in its destructive as well as constructive tendencies.

Many commentators have noted the current cultural phenomenon of the large number of people, perhaps twenty percent of Americans, who are self-identified as “spiritual but not religious.” Some of these commentators have offered explanations for this phenomenon. Among these are Weber’s idea of the “routinization of charisma” in organized religion, which may have turned off many seekers; the regular emergence in the religious traditions of renewal movements of which the spirituality movement may be an example; the unprecedented contact and interchange among the world religions; the suspicion of institutions of all kinds and the resulting search for something more

* Owen C. Thomas is Professor of Theology emeritus of the Episcopal Divinity School and the author of several books in the philosophy of religion and theology.

Dive Covenant Ah Cama-Sotz Revolting Cocks

Edition 25, April - June 2017. Free of charge, not for sale. Quarterly published music magazine. www.peek-a-boo-magazine.be

DIVE | COVENANT | AH CAMA-SOTZ | REVOLTING COCKS | ARSENIC OF JABIR | FROZEN NATION | ALVAR | CAUSENATION | DOLLS OF PAIN | VUDUVOX | BESTIAL MOUTHS | 31ST TILE | ALPHAMAY | X-MOUTH SYNDROME | LLUMEN | ELM

Contents: 04 CD reviews; 06 Interview ALPHAMAY; 08 Interview DOLLS OF PAIN; 10 Interview LLUMEN; 12 Interview ELM; 14 Interview X-MOUTH SYNDROME; 16 Interview ALVAR; 18 Interview DIVE; 20 Interview BESTIAL MOUTHS; 22 Interview REVOLTING COCKS; 24 Interview VUDUVOX; 26 CD reviews; 28 Interview COVENANT; 30 CD reviews; 32 Interview AH CAMA-SOTZ; 34 Interview ARSENIC OF JABIR; 35 Calendar.

Peek-A-Boo Magazine • BodyBeats Productions • Tabaksvest 40 • 2000 Antwerpen • contact@ and [email protected]

colophon ORGANISATION EDITORS WRITERS (continued) BODYBEATS Productions Gea STAPELVOORT Paul PLEDGER www.bodybeats.be Leanne AITKEN Ron SCHOONWATER Dimitri CAUVEREN Sara VANNACCI Stef COLDHEART Wool-E Shop Tine SWAENEPOEL Ward DE PRINS Dries HAESELDONCKX Wim LENAERTS Bunkerleute WRITERS Xavier KRUTH Frédéric COTTON Charles “Chuck” MOORHOUSE Le Fantastique Fred GADGET PHOTOGRAPHERS PARTNERS Gustavo A. ROSELINSKY Benny SERNEELS Dark Entries team Jurgen BRAECKEVELT Marquis(pi)X www.darkentries.be Manu L DASH Gothville team Mark VAN MULLEM MAGAZINE & WEBSITE www.gothville.com Masha KASHA Ward DE PRINS

ONCEHUMAN - Evolution (CD) (Ear Music). Sometimes you have to take a leap of faith when choosing a CD for your listening pleasure and experience. However, mostly, you take a diligent peek at the visual aspects (artwork, layout, photography...).

Cooked in the Lab Dark, Techy D&B Trio Ivy Lab Break Bad on LP P.108

MUSIC, DECEMBER. ON THE DANCEFLOOR: This month’s tracks played out, p. 92. GO LONG! The long-players listened to, p. 108. WILD COMBINATION: The most crucial compilations, p. 113. Cooked In The Lab: Dark, techy d&b trio Ivy Lab break bad on LP, p. 108.

HOUSE REVIEWS. BEN ARNOLD [email protected]

melancholy pianos, ‘A Fading Glance’ is a lovely, swelling thing, gorgeously understated. ‘Mayflies’ is brimming with moody, building atmospherics, minor chord pads and Burial-esque snatches of vocal. ‘Whenever I Try To Leave’ winds it up, a wash of echoing percussion, deep, unctuous vibrations and gently soothing piano chords. This could lead Sawyer somewhere special.

Hexxy/Andy Butler, Edging/Bewm Chawqk (Mr. Intl), 7.5. A statuesque release from Andy ‘Hercules & Love Affair’ Butler’s Mr. Intl label. Hexxy is his new project with DJ Nark, founder of the excellent ‘aural gallery’ site Bottom Forty and Nark magazine.

QUICKIES

La Fleur, Make A Move (Watergate), 9.0. The first lady of Berlin’s Watergate unleashes three tracks of unrivalled firmness. If ‘Make A Move’’s hoover bass doesn’t get you, ‘Result’’s emotive vibes will. Lovely.

Fred P, Modern Architect (Energy Of Sound), 8.5. A most generous six tracks from the superb Fred Peterkin. It’s all great, but ‘Tokyo To Chiba’, ‘Don’t Be Afraid’, with Minako on vocals, and ‘Memory P’ stand out. Get involved.

Various, Hudd Traxx Now & Then (Hudd Traxx), 7.5. Part four of four in this

Shift Work, Document II (Houndstooth), 7.5. Fine work in the hinterland between

Abandoned Streets and Past Ruins of the Future in the Glossy Punk Magazine

This is a repository copy of Digging up the dead cities: abandoned streets and past ruins of the future in the glossy punk magazine. White Rose Research Online URL for this paper: http://eprints.whiterose.ac.uk/119650/ Version: Accepted Version.

Article: Trowell, I.M. orcid.org/0000-0001-6039-7765 (2017) Digging up the dead cities: abandoned streets and past ruins of the future in the glossy punk magazine. Punk and Post Punk, 6 (1). pp. 21-40. ISSN 2044-1983 https://doi.org/10.1386/punk.6.1.21_1

Reuse: Unless indicated otherwise, fulltext items are protected by copyright with all rights reserved. The copyright exception in section 29 of the Copyright, Designs and Patents Act 1988 allows the making of a single copy solely for the purpose of non-commercial research or private study within the limits of fair dealing. The publisher or other rights-holder may allow further reproduction and re-use of this version - refer to the White Rose Research Online record for this item. Where records identify the publisher as the copyright holder, users can verify any specific terms of use on the publisher’s website.

Takedown: If you consider content in White Rose Research Online to be in breach of UK law, please notify us by emailing [email protected] including the URL of the record and the reason for the withdrawal request. [email protected] https://eprints.whiterose.ac.uk/

Digging up the Dead Cities: Abandoned Streets and Past Ruins of the Future in the Glossy Punk Magazine

Abstract: This article excavates, examines and celebrates Punk’s Not Dead! (a single issue printed in 1981), Punk! Lives (11 issues printed between 1982 and 1983) and Noise! (16 issues in 1982) as a small corpus of overlooked dedicated punk literature, coincident with the UK82 incarnation of punk, that takes the form of a pop-style poster magazine.

17. Kowalczyk

STUDIA ROSSICA POSNANIENSIA, vol. XL, part 1: 2015, pp. 177-188. ISBN 978-83-232-2878-3. ISSN 0081-6884. Adam Mickiewicz University Press, Poznań.

KLASYFIKACJA SEMANTYCZNA ROSYJSKICH I POLSKICH NAZW MUZYKI ROZRYWKOWEJ. SEMANTIC CLASSIFICATION OF NAMES OF POPULAR MUSIC GENRES IN RUSSIAN AND POLISH. RAFAŁ KOWALCZYK

ABSTRACT. This paper presents a practical application of multifaceted research into the vocabulary of popular music in contemporary Russian and Polish. It contains explanatory notes in the first part and appendixes, i.e. mini Russian-Polish dictionaries, in the second part. Lexical entries are divided into six semantic groups with the following key concepts: jazz, rock, metal, house, techno and trance, which describe the music styles that appeal to popular tastes. Rafał Kowalczyk, Uniwersytet Wrocławski, Wrocław, Poland.

Descriptive part. The sketch offered to the reader has two intended aims: a glottodidactic one and a translational one. The appendixes it contains (see the dictionary part) can serve students and lecturers as illustrative materials [1], both in practical Russian language classes and in translation classes, especially those on functional translation. From a substantive point of view, they can therefore help to develop and consolidate competences such as: linguistic (grammatical and lexotactic), translational (receptive and reproductive), terminological and cultural [2].

[1] See other illustrative materials I have prepared: R. Kowalczyk, Próbny rosyjsko-polski słownik teatralny, [in:] idem, Rosyjskie słownictwo teatralne w porównaniu z polskim, „Slavica Wratislaviensia”, Wrocław: Wydawnictwo Uniwersytetu Wrocławskiego, 2005, no. CXXXVII, pp. 125–134; idem, Nomina actionis i nomina essendi w rosyjskim i polskim słownictwie teatralnym, „Rozprawy Komisji Językowej WTN”, Wrocław: Wydawnictwo WTN, 2006, no. XXXII, p.

1 : Daniel Kandi & Martijn Stegerhoek

1 : Daniel Kandi & Martijn Stegerhoek - Australia (Original Mix)
2 : guy mearns - sunbeam (original mix)
3 : Close Horizon(Giuseppe Ottaviani Mix) - Thomas Bronzwaer
4 : Akira Kayos and Firestorm - Reflections (Original)
5 : Dave 202 pres. Powerface - Abyss
6 : Nitrous Oxide - Orient Express
7 : Never Alone(Onova Remix)-Sebastian Brandt P. Guised
8 : Salida del Sol (Alternative Mix) - N2O
9 : ehren stowers - a40 (six senses remix)
10 : thomas bronzwaer - look ahead (original mix)
11 : The Path (Neal Scarborough Remix) - Andy Tau
12 : Andy Blueman - Everlasting (Original Mix)
13 : Venus - Darren Tate
14 : Torrent - Dave 202 vs. Sean Tyas
15 : Temple One - Forever Searching (Original Mix)
16 : Spirits (Daniel Kandi Remix) - Alex morph
17 : Tallavs Tyas Heart To Heart - Sean Tyas
18 : Inspiration
19 : Sa ku Ra (Ace Closer Mix) - DJ TEN
20 : Konzert - Remo-con
21 : PROTOTYPE - Remo-con
22 : Team Face Anthem - Jeff Mills
23 : ?
24 : Giudecca (Remo-con Remix)
25 : Forerunner - Ishino Takkyu
26 : Yomanda & DJ Uto - Got The Chance
27 : CHICANE - Offshore (Kitch 'n Sync Global 2009 Mix)
28 : Avalon 69 - Another Chance (Petibonum Remix)
29 : Sophie Ellis Bextor - Take Me Home
30 : barthez - on the move
31 : HEY DJ (DJ NIKK HARDHOUSE MIX)
32 : Dj Silviu vs. Zalabany - Dearly Beloved
33 : chicane - offshore
34 : Alchemist Project - City of Angels (HardTrance Mix)
35 : Samara - Verano (Fast Distance Uplifting Mix)

http://keephd.com/ http://www.video2mp3.net

Halo 2 Soundtrack - Halo Theme Mjolnir Mix declub - i believe ////// wonderful Dinka (trance artist) DJ Tatana hard trance ffx2 - real emotion CHECK showtek - electronic stereophonic (feat. MC DV8) Euphoria Hard Dance Awards 2010 Check Gammer & Klubfiller - Ordinary World (Damzen's Classix Remix) Seal - Kiss From A Rose Alive - Josh Gabriel [HQ] Vincent De Moor - Fly Away (Vocal Mix) Vincent De Moor - Fly Away (Cosmic Gate Remix) Serge Devant feat.

Street Parade 2018 Starting Order

STREET PARADE 2018 STARTING ORDER. Opening: 13:00h, Start Parade 14:00h.

No. | Organizer | Start time | DJs, motto, genre
1 | The BPM Festival (POR), Club Bellevue (ZH), Verein Friends Of Street Parade (ZH) | 14:00 | Nicole Moudaber, Dubfire Tech-House, Techno
2 | Tito Torres & friends, Chic Music (ZH) | 14:08 | Tito Torres, Joe T Vannelli, Sachi Toyama, B.K., Ron Red Passion Techno, House, Tech-House
3 | Pat Farrell, Dukes (ZH, ZG) | 14:16 | Pat Farrell, Robin Tune, Bass Hours Garden of Eden Progressive, Bigroom, Future House
4 | Vagabundos (ES), Terrazzza (ZH) | 14:24 | Luciano, Ezikiel, David Aurel, Murciano, Kantarik Tech-House
5 | Mz Mz Mz (ZH) | 14:32 | Animal Trainer, Visionkids Tech-House, Techno
6 | Mr. Da-Nos, LeeRoy, XworkX Artists (ZH) | 14:40 | Mr. Da-Nos, LeeRoy, DeeCello, Sven May, Black Spirit, Roger M. Be part of our One Nation Family! House, Electro, EDM
7 | Synergy Events (VD) | 14:48 | Liquid Soul, Sean Tyas, Darren Porter, Madwave, Ferry Tale, Andy Wonderland «The Trance Journey Trance
8 | Materiamusic (TI) | 14:56 | Fabio Florido, Stallo, Max Evolve, expand, impress Techno
9 | JUR Records (AG) | 15:04 | Agent C., Nade, Hellchild, Z-Groove, Infernat Bastard, K-Base Louder Than Hell Drum and Bass
10 | Attico Club (ZH), Carol Fernandez (BE) | 15:12 | Carol Fernandez, Basement Brothers, Jose Maria Ramonm, Sei B, Xicu Portas live, and others Egypt - Black'n'Gold House, Deep-, Techhouse, EDM
11 | Klaus (ZH) | 15:20 | De La Maso, Raphael Raban, Nici Faerber, Pazkal, Aaron, Khaleian Klaus macht mobil Deep House, Tech House
12 | Party-Fraktion Wattenscheid (DE) | 15:28 | Quicksilver, Spacekid, Pearl, PFW Allstars Back to the 90s.

Text-Based Description of Music for Indexing, Retrieval, and Browsing

JOHANNES KEPLER UNIVERSITÄT LINZ (JKU), Technisch-Naturwissenschaftliche Fakultät. Text-Based Description of Music for Indexing, Retrieval, and Browsing. DISSERTATION for the academic degree of Doktor in the doctoral programme in Technical Sciences. Submitted by: Dipl.-Ing. Peter Knees. Carried out at: Institut für Computational Perception. Assessment: Univ.Prof. Dipl.-Ing. Dr. Gerhard Widmer (supervisor), Ao.Univ.Prof. Dipl.-Ing. Dr. Andreas Rauber. Linz, November 2010.

Statutory declaration. I declare under oath that I wrote this dissertation independently and without outside help, that I used no sources or aids other than those indicated, and that I have marked as such all passages taken verbatim or in substance from other sources.

Abstract (Kurzfassung). The aim of this dissertation is the development of automatic methods for extracting descriptors from the Web that can be associated with pieces of music. The music descriptors obtained in this way allow extensive music collections to be indexed with a wide variety of labels, and make it possible to find pieces of music and to explore collections. The techniques presented use common Web search engines to find texts that are related to the pieces. From these texts, descriptors are derived that can be used: for labeling, to ease orientation within music interfaces (especially in a three-dimensional music interface that is also presented); as indexing keywords, which subsequently serve as features in music retrieval systems that can process queries consisting of arbitrary descriptive text; or as features in adaptive retrieval systems that try to make targeted suggestions based on the user's search behavior.

The Quest for Ground Truth in Musical Artist Tagging in the Social Web Era

THE QUEST FOR GROUND TRUTH IN MUSICAL ARTIST TAGGING IN THE SOCIAL WEB ERA

Gijs Geleijnse, Philips Research, High Tech Campus 34, Eindhoven (the Netherlands), [email protected]. Markus Schedl and Peter Knees, Dept. of Computational Perception, Johannes Kepler University, Linz (Austria), fmarkus.schedl,[email protected]

ABSTRACT. Research in Web music information retrieval traditionally focuses on the classification, clustering or categorizing of music into genres or other subdivisions. However, current community-based web sites provide richer descriptors (i.e. tags) for all kinds of products. Although tags have no well-defined semantics, they have proven to be an effective mechanism to label and retrieve items. Moreover, these tags are community-based and hence give a description of a product through the eyes of a community rather than an expert opinion. In this work we focus on Last.fm, which is currently the largest music community web service.

…beled with a tag, the more the tag is assumed to be relevant to the item. Last.fm is a popular internet radio station where users are invited to tag the music and artists they listen to. Moreover, for each artist, a list of similar artists is given based on the listening behavior of the users. In [4], Ellis et al. propose a community-based approach to create a ground truth in musical artist similarity. The research question was whether artist similarities as perceived by a large community can be predicted using data from All Music Guide and from shared folders for peer-to-peer networks. Now, with the Last.fm data available for downloading, such community-based data is freely available for non-commercial