Chess Algorithms Theory and Practice

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Deep Fritz 11 Rating

Deep fritz 11 rating CCRL 40/4 main list: Fritz 11 has no rank with rating of Elo points (+9 -9), Deep Shredder 12 bit (+=18) + 0 = = 0 = 1 0 1 = = = 0 0 = 0 = = 1 = = 1 = = 0 = = 0 1 0 = 0 1 = = 1 1 = – Deep Sjeng WC bit 4CPU, , +12 −13, (−65), 24 − 13 (+13−2=22). %. This is one of the 15 Fritz versions we tested: Compare them! . − – Deep Sjeng bit, , +22 −22, (−15), 19 − 15 (+13−9=12). % / Complete rating list. CCRL 40/40 Rating List — All engines (Quote). Ponder off Deep Fritz 11 4CPU, , +29, −29, %, −, %, %. Second on the list is Deep Fritz 11 on the same hardware, points below The rating list is compiled by a Swedish group on the basis of. Fritz is a German chess program developed by Vasik Rajlich and published by ChessBase. Deep Fritz 11 is eighth on the same list, with a rating of Overall the answer for what to get is depending on the rating Since I don't have Rybka and I do have Deep Fritz 11, I'll vote for game vs Fritz using Opening or a position? - Chess. Find helpful customer reviews and review ratings for Fritz 11 Chess Playing Software for PC at There are some algorithms, from Deep fritz I have a small Engine Match database and use the Fritz 11 Rating Feature. Could somebody please explain the logic behind the algorithm? Chess games of Deep Fritz (Computer), career statistics, famous victories, opening repertoire, PGN download, Feb Version, Cores, Rating, Rank. DEEP Rybka playing against Fritz 11 SE using Chessbase We expect, that not only the rating figures, but also the number of games and the 27, Deep Fritz 11 2GB Q 2,4 GHz, , 18, , , 62%, Deep Fritz. -

The Check Is in the Mail June 2007

The Check Is in the Mail, June 2007. NOTICE: I will be out of the office from June 16 through June 25 to teach at Castle Chess Camp in Atlanta, Georgia. During that time I will be unable to answer any of your email, US mail, telephone calls, or any other form of communication. NOTICE #2: The email address for USCF correspondence chess matters has changed to [email protected] ICCF GRANDMASTER in 2006 ELECTRONIC KNIGHTS: Cesar Augusto Blanco-Gramajo, born January 14, 1959, is a Guatemalan ICCF Grandmaster now living in the US and participating in the 2006 Electronic Knights. Cesar has had an active career in ICCF (playing over 800 games there) and sports a 2562 ICCF rating along with the GM title, which was awarded to him at the ICCF Congress in Ostrava in 2003. He took part in the great Rest of the World vs. Russia match, holding down Eleventh Board and making two draws against his Russian opponent. GM Cesar Augusto Blanco-Gramajo. GAME OF THE MONTH: Cesar’s provisional USCF rating is 2463. As you watch this game unfold, you can almost see Blanco’s rating go upwards. RUY LOPEZ (C67) White: Cesar Blanco (2463) Black: Benjamin Coraretti (0000) 2006 Electronic Knights 1.e4 e5 2.Nf3 Nc6 3.Bb5 Nf6 4.0–0 Nxe4 5.d4 Nd6 6.Bxc6 dxc6 7.dxe5 Ne4 An unusual sideline that seems to be gaining in popularity lately. 8.Qe2 White chooses to play the middlegame as opposed to the endgame after 8.Qxd8+. With an unstable Black Knight and quick occupancy of the d-file, …

Preparation of Papers for R-ICT 2007

Chess Puzzle Mate in N-Moves Solver with Branch and Bound Algorithm. Ryan Ignatius Hadiwijaya / 13511070, Program Studi Teknik Informatika, Sekolah Teknik Elektro dan Informatika, Institut Teknologi Bandung, Jl. Ganesha 10 Bandung 40132, Indonesia [email protected] Abstract: Chess is a popular game played by many people around the world. Aside from the normal game, there are special board configurations that form chess puzzles. A chess puzzle has a special objective, and one such objective is to checkmate the opponent within a specified number of moves. To solve that kind of puzzle, a branch and bound algorithm can be used to choose the moves that checkmate the opponent. With good heuristics, the algorithm will solve chess puzzles effectively and efficiently. Index Terms: branch and bound, chess, problem solving, puzzle. I. INTRODUCTION Chess is a board game played between two players on opposite sides of a board containing 64 squares of alternating colors. … 1. A player checkmates the opponent. If this occurs, that player wins and the opponent loses. 2. A player resigns (gives up). If this occurs, that player loses. 3. Draw. A draw occurs in any of the following cases: a. Stalemate. Stalemate occurs when a player has no legal moves on his/her turn and his/her king is not in check. b. Both players agree to a draw. c. There are not enough pieces on the board to force a checkmate. d. The exact same position is repeated 3 times. e. Fifty consecutive moves pass in which neither player has moved a pawn or captured a piece.

Super Human Chess Engine

SUPER HUMAN CHESS ENGINE. FIDE Master / FIDE Trainer Charles Storey PGCE. WORLD TOUR Young Masters Training Program. Contents: Introduction; Power Principles; Human Opening Book; ‘The Core’ Super Human Chess Engine 2020; Acronym Algorithms that make The Storey Human Chess Engine; 4Ps: Prioritise Poorly Placed Pieces; CCTV: Checks / Captures / Threats / Vulnerabilities; CCTV 2.0: Checks / Checkmate Threats / Captures / Threats / Vulnerabilities; DAFiii: Attack / Features / Initiative / I for tactics / Ideas (crazy); The Fruit Tree analysis process.

Should Chess Players Learn Computer Security?

Should Chess Players Learn Computer Security? Gildas Avoine, Cédric Lauradoux, Rolando Trujillo-Rasua. Abstract: The main concern of chess referees is to prevent players from biasing the outcome of the game by either colluding or receiving external advice. Preventing third parties from interfering in a game is challenging given that communication technologies are steadily improved and miniaturized. Chess actually faces similar threats to those already encountered in computer security. We describe chess frauds and link them to their analogues in the digital world. Based on these transpositions, we advocate for a set of countermeasures to enforce fairness in chess. Index Terms: Security, Chess, Fraud. 1 INTRODUCTION Chess still fascinates generations of computer scientists. Many great researchers such as John von … are indeed playing against each other, instead of playing against a little girl as one would expect. Conway’s work on the chess grandmaster problem was pursued and extended to authentication protocols by Desmedt, Goutier and Bengio [3] in order to break the Feige-Fiat-Shamir protocol [4]. The attack was called mafia fraud, as a reference to Shamir’s famous claim: “I can go to a mafia-owned store a million times and they will not be able to misrepresent themselves as me.” Desmedt et al. proved that Shamir was wrong via a simple application of Conway’s chess grandmaster problem to authentication protocols. Since then, this attack has been used in various domains such as contactless credit cards, electronic passports, vehicle keyless remote systems, and wireless sensor networks.
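The relay idea behind the chess grandmaster problem, as it is commonly described, can be sketched in a few lines. Everything named here (`relay`, `scripted`, the move strings) is a hypothetical illustration and not code from the paper. The assumption is that an intermediary plays Black against one grandmaster and White against another, copying each move across, so that the two grandmasters are in effect playing each other.

```python
from typing import Callable, List, Tuple

# A player sees the moves played so far on its board and returns its next move.
Player = Callable[[List[str]], str]


def scripted(moves: List[str]) -> Player:
    """Hypothetical grandmaster that plays a fixed list of moves in order."""
    it = iter(moves)
    return lambda history: next(it)


def relay(gm_a: Player, gm_b: Player, rounds: int) -> Tuple[List[str], List[str]]:
    """The intermediary is Black on board A (vs gm_a) and White on board B (vs gm_b)."""
    board_a: List[str] = []
    board_b: List[str] = []
    for _ in range(rounds):
        white_move = gm_a(board_a)   # gm_a moves as White on board A
        board_a.append(white_move)
        board_b.append(white_move)   # copied as the intermediary's move on board B
        black_move = gm_b(board_b)   # gm_b replies as Black on board B
        board_b.append(black_move)
        board_a.append(black_move)   # copied back as the reply on board A
    return board_a, board_b


gm_a = scripted(["e4", "Nf3", "Bb5"])
gm_b = scripted(["e5", "Nc6", "a6"])
board_a, board_b = relay(gm_a, gm_b, rounds=3)
```

Both boards end up with the same move list, and the intermediary never chooses a move of its own. The mafia fraud mentioned in the excerpt applies the same forwarding to the messages of an authentication protocol.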

{Download PDF} Kasparov Versus Deep Blue : Computer Chess

KASPAROV VERSUS DEEP BLUE : COMPUTER CHESS COMES OF AGE PDF, EPUB, EBOOK Monty Newborn | 322 pages | 31 Jul 2012 | Springer-Verlag New York Inc. | 9781461274773 | English | New York, NY, United States Kasparov versus Deep Blue : Computer Chess Comes of Age PDF Book In terms of human comparison, the already existing hierarchy of rated chess players throughout the world gave engineers a scale with which to easily and accurately measure the success of their machine. This was the position on the board after 35 moves. Nxh5 Nd2 Kg4 Bc7 Famously, the mechanical Turk developed in thrilled and confounded luminaries as notable as Napoleon Bonaparte and Benjamin Franklin. Kf1 Bc3 Deep Blue. The only reason Deep Blue played in that way, as was later revealed, was because that very same day of the game the creators of Deep Blue had inputted the variation into the opening database. KevinC The allegation was that a grandmaster, presumably a top rival, had been behind the move. When it's in the open, anyone could respond to them, including people who do understand them. Qe2 Qd8 Bf3 Nd3 IBM Research. Nearly two decades later, the match still fascinates This week Time Magazine ran a story on the famous series of matches between IBM's supercomputer and Garry Kasparov. Because once you have an agenda, where does indifference fit into the picture? Raa6 Ba7 Black would have acquired strong counterplay. In fact, the first move I looked at was the immediate 36 Be4, but in a game, for the same safety-first reasons the black counterplay with a5 I might well have opted for 36 axb5. -

Solving a Hypothetical Chess Problem: a Comparative Analysis of Computational Methods and Human Reasoning

Revista Brasileira de Computação Aplicada, Abril, 2019 DOI: 10.5335/rbca.v11i1.9111 Vol. 11, No 1, pp. 96–103 Homepage: seer.upf.br/index.php/rbca/index ORIGINAL PAPER Solving a hypothetical chess problem: a comparative analysis of computational methods and human reasoning. Léo Pasqualini de Andrade1, Augusto Cláudio Santa Brígida Tirado1, Valério Brusamolin1 and Mateus das Neves Gomes1 1Instituto Federal do Paraná, campus Paranaguá *[email protected]; [email protected]; [email protected]; [email protected] Received: 2019-02-15. Revised: 2019-02-27. Accepted: 2019-03-15. Abstract: Computational modeling has enabled researchers to simulate tasks which are very often impossible in practice, such as deciphering the working of the human mind, and chess is used by many cognitive scientists as an investigative tool in studies on intelligence, behavioral patterns and cognitive development and rehabilitation. Computer analysis of databases with millions of chess games allows players’ cognitive development to be predicted and their behavioral patterns to be investigated. However, computers are not yet able to solve chess problems in which human intelligence analyzes and evaluates abstractly without the need for many concrete calculations. The aim of this article is to describe and simulate a chess problem situation proposed by the British mathematician Sir Roger Penrose and thus provide an opportunity for a comparative discussion by society of human and artificial intelligence. To this end, a specialist chess computer program, Fritz 12, was used to simulate possible moves for the proposed problem. The program calculated the variations and reached a different result from that an amateur chess player would reach after analyzing the problem for only a short time.

New Architectures in Computer Chess

New Architectures in Computer Chess. DISSERTATION to obtain the degree of doctor at Tilburg University, on the authority of the rector magnificus, Prof. Dr. Ph. Eijlander, to be defended in public before a committee appointed by the doctorate board, in the auditorium of the University on Wednesday 17 June 2009 at 10:15 by Fritz Max Heinrich Reul, born on 30 September 1977 in Hanau, Germany. Supervisor (promotor): Prof. Dr. H.J. van den Herik. Co-supervisor (copromotor): Dr. ir. J.W.H.M. Uiterwijk. Doctoral committee: Prof. Dr. A.P.J. van den Bosch, Prof. Dr. A. de Bruin, Prof. Dr. H.C. Bunt, Prof. Dr. A.J. van Zanten, Dr. U. Lorenz, Dr. A. Plaat. Dissertation Series No. 2009-16. The research reported in this thesis has been carried out under the auspices of SIKS, the Dutch Research School for Information and Knowledge Systems. ISBN 9789490122249 Printed by Gildeprint © 2009 Fritz M.H. Reul All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronically, mechanically, photocopying, recording or otherwise, without prior permission of the author. Preface: About five years ago I completed my diploma project about computer chess at the University of Applied Sciences in Friedberg, Germany. Immediately afterwards I continued in 2004 with the R&D of my computer-chess engine Loop. In 2005 I started my Ph.D. project "New Architectures in Computer Chess" at the Maastricht University. In the first year of my R&D I concentrated on the redesign of a computer-chess architecture for 32-bit computer environments.

A Proposed Heuristic for a Computer Chess Program

A Proposed Heuristic for a Computer Chess Program. Copyright (c) 2013 John L. Jerz [email protected] Fairfax, Virginia. Independent Scholar. MS Systems Engineering, Virginia Tech, 2000; MS Electrical Engineering, Virginia Tech, 1995; BS Electrical Engineering, Virginia Tech, 1988. December 31, 2013. Abstract: How might we manage the attention of a computer chess program in order to play a stronger positional game of chess? A new heuristic is proposed, based in part on the Weick organizing model. We evaluate the 'health' of a game position from a Systems perspective, using a dynamic model of the interaction of the pieces. The identification and management of stressors and the construction of resilient positions allow effective postponements for less-promising game continuations due to the perceived presence of adaptive capacity and sustainable development. We calculate and maintain a database of potential mobility for each chess piece 3 moves into the future, for each position we evaluate. We determine the likely restrictions placed on the future mobility of the pieces based on the attack paths of the lower-valued enemy pieces. Knowledge is derived from Foucault's and Znosko-Borovsky's conceptions of dynamic power relations. We develop coherent strategic scenarios based on guidance obtained from the vital Vickers/Bossel/Max-Neef diagnostic indicators. Archer's pragmatic 'internal conversation' provides the mechanism for our artificially intelligent thought process. Initial but incomplete results are presented. Keywords: complexity, chess, game

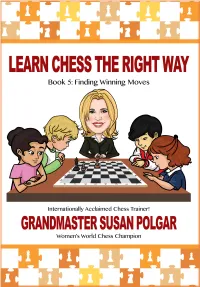

Learn Chess the Right Way

Learn Chess the Right Way, Book 5: Finding Winning Moves! by Susan Polgar with Paul Truong. 2017 Russell Enterprises, Inc., Milford, CT USA. © Copyright 2017 Susan Polgar. ISBN: 978-1-941270-45-5 ISBN (eBook): 978-1-941270-46-2 All Rights Reserved. No part of this book may be used, reproduced, stored in a retrieval system or transmitted in any manner or form whatsoever or by any means, electronic, electrostatic, magnetic tape, photocopying, recording or otherwise, without the express written permission from the publisher except in the case of brief quotations embodied in critical articles or reviews. Published by: Russell Enterprises, Inc. PO Box 3131 Milford, CT 06460 USA http://www.russell-enterprises.com [email protected] Cover design by Janel Lowrance. Front cover image by Christine Flores. Back cover photo by Timea Jaksa. Contributor: Tom Polgar-Shutzman. Printed in the United States of America. Table of Contents: Introduction; Chapter 1: Quiet Moves to Checkmate; Chapter 2: Quiet Moves to Win Material; Chapter 3: Zugzwang; Chapter 4: The Grand Test; Solutions. Introduction: Ever since I was four years old, I remember the joy of solving chess puzzles. I wrote my first puzzle book when I was just 15, and have published a number of other best-sellers since, such as A World Champion’s Guide to Chess, Chess Tactics for Champions, and Breaking Through. With over 40 years of experience as a world-class player and trainer, I have developed the most effective way to help young players and beginners – Learn Chess the Right Way.

Yearbook of Chess Wisdom

PETER ZHDANOV, Yearbook of Chess Wisdom. Cover designer: Piotr Pielach. Typesetting: Piotr Pielach ‹www.i-press.pl›. First edition 2015 by Chess Evolution. Yearbook of Chess Wisdom. Copyright © 2015 Chess Evolution. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, electrostatic, magnetic tape, photocopying, recording or otherwise, without prior permission of the publisher. ISBN 978-83-937009-7-4. All sales or enquiries should be directed to Chess Evolution, ul. Smutna 5a, 32-005 Niepolomice, Poland. e-mail: [email protected] website: www.chess-evolution.com Printed in Poland by Drukarnia Pionier, 31–983 Krakow, ul. Igolomska 12. To my father Vladimir Zhdanov for teaching me how to play chess and to my mother Tamara Zhdanova for encouraging my passion for the game. PREFACE A critical-minded author always questions his own writing and tries to predict whether it will be illuminating and useful for the readers. What makes this book special? First of all, I have always had an inquisitive mind and an insatiable desire for accumulating, generating and sharing knowledge. This work is a product of having carefully read a few hundred remarkable chess books and a few thousand worthy non-chess volumes. However, it is by no means a mere compilation of ideas, facts and recommendations. Most of the eye-opening tips in this manuscript come from my reflections on discussions with some of the world’s best chess players and coaches. This is why the book is titled Yearbook of Chess Wisdom: it is composed of 366 self-sufficient columns, each of which is dedicated to a certain topic.

A Higher Creative Species in the Game of Chess

Articles. Deus Ex Machina: A Higher Creative Species in the Game of Chess. Shay Bushinsky. Computers and human beings play chess differently. The basic paradigm that computer programs employ is known as “search and evaluate.” Their static evaluation is arguably more primitive than the perceptual one of humans. Yet the intelligence emerging from them is phenomenal. A human spectator is not able to tell the difference between a brilliant computer game and one played by Kasparov. Chess played by today’s machines looks extraordinary, full of imagination and creativity. Such elements may be the reason that computers are superior to humans in the sport of kings, at least for the moment. This article is about how roles have changed: humans play chess like machines, and machines play chess the way humans used to play. Not surprisingly, the superiority of computers over humans in chess provokes antagonism. The frustrated critics often revert to the analogy of a competition between a racing car and a human being, which by now has become a cliché.
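The “search and evaluate” paradigm named in this excerpt can be illustrated with a minimal sketch. The toy tree, the `minimax` and `best_move` functions, and the leaf scores are hypothetical stand-ins chosen for illustration and are not taken from the article. A real engine would generate chess moves, search far deeper with pruning, and use a much richer static evaluation.

```python
from typing import List, Union

# A toy game tree: an inner node is a list of child positions, and a leaf
# is the static evaluation (an int) of the position reached.
# All values are invented for the example.
Tree = Union[int, List["Tree"]]


def minimax(node: Tree, maximizing: bool) -> int:
    """Search the tree and back up static evaluations from the leaves."""
    if isinstance(node, int):
        return node  # "evaluate": a leaf carries its static score
    # "search": recurse into every reply, alternating sides
    scores = [minimax(child, not maximizing) for child in node]
    return max(scores) if maximizing else min(scores)


def best_move(root: List[Tree]) -> int:
    """Return the index of the root move with the best backed-up score."""
    scores = [minimax(child, maximizing=False) for child in root]
    return scores.index(max(scores))


# Three candidate moves, each answered by two possible replies.
toy_tree: List[Tree] = [[3, 5], [2, 9], [0, 7]]
root_value = minimax(toy_tree, maximizing=True)
choice = best_move(toy_tree)
```

In this sketch the side to move picks the first candidate: the opponent's best replies leave scores of 3, 2 and 0, and 3 is the largest of those.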