Useful Examples of the Usage of Unix/Linux Filters and Utilities

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

At—At, Batch—Execute Commands at a Later Time

at—at, batch—execute commands at a later time at [–csm] [–f script] [–qqueue] time [date] [+ increment] at –l [ job...] at –r job... batch at and batch read commands from standard input to be executed at a later time. at allows you to specify when the commands should be executed, while jobs queued with batch will execute when system load level permits. Executes commands read from stdin or a file at some later time. Unless redirected, the output is mailed to the user. Example A.1 1 at 6:30am Dec 12 < program 2 at noon tomorrow < program 3 at 1945 pm August 9 < program 4 at now + 3 hours < program 5 at 8:30am Jan 4 < program 6 at -r 83883555320.a EXPLANATION 1. At 6:30 in the morning on December 12th, start the job. 2. At noon tomorrow start the job. 3. At 7:45 in the evening on August 9th, start the job. 4. In three hours start the job. 5. At 8:30 in the morning of January 4th, start the job. 6. Removes previously scheduled job 83883555320.a. awk—pattern scanning and processing language awk [ –fprogram–file ] [ –Fc ] [ prog ] [ parameters ] [ filename...] awk scans each input filename for lines that match any of a set of patterns specified in prog. Example A.2 1 awk '{print $1, $2}' file 2 awk '/John/{print $3, $4}' file 3 awk -F: '{print $3}' /etc/passwd 4 date | awk '{print $6}' EXPLANATION 1. Prints the first two fields of file where fields are separated by whitespace. 2. Prints fields 3 and 4 if the pattern John is found. -

DC Console Using DC Console Application Design Software

DC Console Using DC Console Application Design Software DC Console is easy-to-use, application design software developed specifically to work in conjunction with AML’s DC Suite. Create. Distribute. Collect. Every LDX10 handheld computer comes with DC Suite, which includes seven (7) pre-developed applications for common data collection tasks. Now LDX10 users can use DC Console to modify these applications, or create their own from scratch. AML 800.648.4452 Made in USA www.amltd.com Introduction This document briefly covers how to use DC Console and the features and settings. Be sure to read this document in its entirety before attempting to use AML’s DC Console with a DC Suite compatible device. What is the difference between an “App” and a “Suite”? “Apps” are single applications running on the device used to collect and store data. In most cases, multiple apps would be utilized to handle various operations. For example, the ‘Item_Quantity’ app is one of the most widely used apps and the most direct means to take a basic inventory count, it produces a data file showing what items are in stock, the relative quantities, and requires minimal input from the mobile worker(s). Other operations will require additional input, for example, if you also need to know the specific location for each item in inventory, the ‘Item_Lot_Quantity’ app would be a better fit. Apps can be used in a variety of ways and provide the LDX10 the flexibility to handle virtually any data collection operation. “Suite” files are simply collections of individual apps. Suite files allow you to easily manage and edit multiple apps from within a single ‘store-house’ file and provide an effortless means for device deployment. -

BIMM 143 Introduction to UNIX

BIMM 143 Introduction to UNIX Barry Grant http://thegrantlab.org/bimm143 Do it Yourself! Lets get started… Mac Terminal PC Git Bash SideNote: Terminal vs Shell • Shell: A command-line interface that allows a user to Setting Upinteract with the operating system by typing commands. • Terminal [emulator]: A graphical interface to the shell (i.e. • Mac users: openthe a window Terminal you get when you launch Git Bash/iTerm/etc.). • Windows users: install MobaXterm and then open a terminal Shell prompt Introduction To Barry Grant Introduction To Shell Barry Grant Do it Yourself! Print Working Directory: a.k.a. where the hell am I? This is a comment line pwd This is our first UNIX command :-) Don’t type the “>” bit it is the “shell prompt”! List out the files and directories where you are ls Q. What do you see after each command? Q. Does it make sense if you compare to your Mac: Finder or Windows: File Explorer? On Mac only :( open . Note the [SPACE] is important Download any file to your current directory/folder curl -O https://bioboot.github.io/bggn213_S18/class-material/bggn213_01_unix.zip curl -O https://bioboot.github.io/bggn213_S18/class-material/bggn213_01_unix.zip ls unzip bggn213_01_unix.zip Q. Does what you see at each step make sense if you compare to your Mac: Finder or Windows: File Explorer? Download any file to your current directory/folder curl -O https://bioboot.github.io/bggn213_S18/class-material/bggn213_01_unix.zip List out the files and directories where you are (NB: Use TAB for auto-complete) ls bggn213_01_unix.zip Un-zip your downloaded file unzip bggn213_01_unix.zip curlChange -O https://bioboot.github.io/bggn213_S18/class-material/bggn213_01_unix.zip directory (i.e. -

Unix Essentials (Pdf)

Unix Essentials Bingbing Yuan Next Hot Topics: Unix – Beyond Basics (Mon Oct 20th at 1pm) 1 Objectives • Unix Overview • Whitehead Resources • Unix Commands • BaRC Resources • LSF 2 Objectives: Hands-on • Parsing Human Body Index (HBI) array data Goal: Process a large data file to get important information such as genes of interest, sorting expression values, and subset the data for further investigation. 3 Advantages of Unix • Processing files with thousands, or millions, of lines How many reads are in my fastq file? Sort by gene name or expression values • Many programs run on Unix only Command-line tools • Automate repetitive tasks or commands Scripting • Other software, such as Excel, are not able to handle large files efficiently • Open Source 4 Scientific computing resources 5 Shared packages/programs https://tak.wi.mit.edu Request new packages/programs Installed packages/programs 6 Login • Requesting a tak account http://iona.wi.mit.edu/bio/software/unix/bioinfoaccount.php • Windows PuTTY or Cygwin Xming: setup X-windows for graphical display • Macs Access through Terminal 7 Connecting to tak for Windows Command Prompt user@tak ~$ 8 Log in to tak for Mac ssh –Y [email protected] 9 Unix Commands • General syntax Command Options or switches (zero or more) Arguments (zero or more) Example: uniq –c myFile.txt command options arguments Options can be combined ls –l –a or ls –la • Manual (man) page man uniq • One line description whatis ls 10 Unix Directory Structure root / home dev bin nfs lab . jdoe BaRC_Public solexa_public -

ECOGEO Workshop 2: Introduction to Env 'Omics

ECOGEO Workshop 2: Introduction to Env ‘Omics Unix and Bioinformatics Ben Tully (USC); Ken Youens-Clark (UA) Unix Commands pwd rm grep tail install ls ‘>’ sed cut cd cat nano top mkdir ‘<’ history screen touch ‘|’ $PATH ssh cp sort less df mv uniq head rsync/scp Unix Command Line 1. Open Terminal window Unix Command Line 2. Open Chrome and navigate to Unix tutorial at Protocols.io 3. Group: ECOGEO 4. Protocol: ECOGEO Workshop 2: Unix Module ! This will allow you to copy, paste Unix scripts into terminal window ! ECOGEO Protocols.io for making copy, paste easier Unix Command Line $ ls ls - lists items in the current directory Many commands have additional options that can be set by a ‘-’ $ ls -a Unix Command Line $ ls -a lists all files/directories, including hidden files ‘.’ $ ls -l lists the long format File Permissions | # Link | User | Group | Size | Last modified $ ls -lt lists the long format, but ordered by date last modified Unix Command Line Unix Command Line $ cd ecogeo/ cd - change directory List the contents of the current directory Move into the directory called unix List contents $ pwd pwd - present working directory Unix Command Line /home/c-debi/ecogeo/unix When were we in the directory home? Or c-debi? Or ecogeo? $ cd / Navigates to root directory List contents of root directory This where everything is stored in the computer All the commands we are running live in /bin Unix Command Line / root bin sys home mnt usr c-debi BioinfPrograms cdebi Desktop Downloads ecogeo unix assembly annotation etc Typical Unix Layout Unix Command Line Change directory to home Change directory to c-debi Change directory to ecogeo Change directory to unix List contents Change directory to data Change directory to root Unix Command Line Change directory to unix/data in one step $ cd /home/c-debi/ecogeo/unix/data Tab can be used to auto complete names $ cd . -

Introduction to Unix

Introduction to Unix Rob Funk <[email protected]> University Technology Services Workstation Support http://wks.uts.ohio-state.edu/ University Technology Services Course Objectives • basic background in Unix structure • knowledge of getting started • directory navigation and control • file maintenance and display commands • shells • Unix features • text processing University Technology Services Course Objectives Useful commands • working with files • system resources • printing • vi editor University Technology Services In the Introduction to UNIX document 3 • shell programming • Unix command summary tables • short Unix bibliography (also see web site) We will not, however, be covering these topics in the lecture. Numbers on slides indicate page number in book. University Technology Services History of Unix 7–8 1960s multics project (MIT, GE, AT&T) 1970s AT&T Bell Labs 1970s/80s UC Berkeley 1980s DOS imitated many Unix ideas Commercial Unix fragmentation GNU Project 1990s Linux now Unix is widespread and available from many sources, both free and commercial University Technology Services Unix Systems 7–8 SunOS/Solaris Sun Microsystems Digital Unix (Tru64) Digital/Compaq HP-UX Hewlett Packard Irix SGI UNICOS Cray NetBSD, FreeBSD UC Berkeley / the Net Linux Linus Torvalds / the Net University Technology Services Unix Philosophy • Multiuser / Multitasking • Toolbox approach • Flexibility / Freedom • Conciseness • Everything is a file • File system has places, processes have life • Designed by programmers for programmers University Technology Services -

Cluster Generate — Generate Grouping Variables from a Cluster Analysis

Title stata.com cluster generate — Generate grouping variables from a cluster analysis Description Quick start Menu Syntax Options Remarks and examples Also see Description cluster generate creates summary or grouping variables from a hierarchical cluster analysis; the result depends on the function. A single variable may be created containing a group number based on the requested number of groups or cutting the dendrogram at a specified (dis)similarity value. A set of new variables may be created if a range of group sizes is specified. Users can add more cluster generate functions; see[ MV] cluster programming subroutines. Quick start Generate grouping variable g5 with 5 groups from the most recent cluster analysis cluster generate g5 = groups(5) As above, 4 grouping variables (g4, g5, g6, and g7) with 4, 5, 6, and 7 groups cluster generate g = groups(4/7) As above, but use the cluster analysis named myclus cluster generate g = groups(4/7), name(myclus) Generate grouping variable mygroups from the most recent cluster analysis by cutting the dendrogram at dissimilarity value 38 cluster generate mygroups = cut(38) Menu Statistics > Multivariate analysis > Cluster analysis > Postclustering > Summary variables from cluster analysis 1 2 cluster generate — Generate grouping variables from a cluster analysis Syntax Generate grouping variables for specified numbers of clusters cluster generate newvar j stub = groups(numlist) , options Generate grouping variable by cutting the dendrogram cluster generate newvar = cut(#) , name(clname) option Description name(clname) name of cluster analysis to use in producing new variables ties(error) produce error message for ties; default ties(skip) ignore requests that result in ties ties(fewer) produce results for largest number of groups smaller than your request ties(more) produce results for smallest number of groups larger than your request Options name(clname) specifies the name of the cluster analysis to use in producing the new variables. -

Student Number: Surname: Given Name

Computer Science 2211a Midterm Examination Sample Solutions 9 November 20XX 1 hour 40 minutes Student Number: Surname: Given name: Instructions/Notes: The examination has 35 questions on 9 pages, and a total of 110 marks. Put all answers on the question paper. This is a closed book exam. NO ELECTRONIC DEVICES OF ANY KIND ARE ALLOWED. 1. [4 marks] Which of the following Unix commands/utilities are filters? Correct answers are in blue. mkdir cd nl passwd grep cat chmod scriptfix mv 2. [1 mark] The Unix command echo HOME will print the contents of the environment variable whose name is HOME. True False 3. [1 mark] In C, the null character is another name for the null pointer. True False 4. [3 marks] The protection code for the file abc.dat is currently –rwxr--r-- . The command chmod a=x abc.dat is equivalent to the command: a. chmod 755 abc.dat b. chmod 711 abc.dat c. chmod 155 abc.dat d. chmod 111 abc.dat e. none of the above 5. [3 marks] The protection code for the file abc.dat is currently –rwxr--r-- . The command chmod ug+w abc.dat is equivalent to the command: a. chmod 766 abc.dat b. chmod 764 abc.dat c. chmod 754 abc.dat d. chmod 222 abc.dat e. none of the above 2 6. [3 marks] The protection code for def.dat is currently dr-xr--r-- , and the protection code for def.dat/ghi.dat is currently -r-xr--r-- . Give one or more chmod commands that will set the protections properly so that the owner of the two files will be able to delete ghi.dat using the command rm def.dat/ghi.dat chmod u+w def.dat or chmod –r u+w def.dat 7. -

Unix Programming

P.G DEPARTMENT OF COMPUTER APPLICATIONS 18PMC532 UNIX PROGRAMMING K1 QUESTIONS WITH ANSWERS UNIT- 1 1) Define unix. Unix was originally written in assembler, but it was rewritten in 1973 in c, which was principally authored by Dennis Ritchie ( c is based on the b language developed by kenThompson. 2) Discuss the Communication. Excellent communication with users, network User can easily exchange mail,dta,pgms in the network 3) Discuss Security Login Names Passwords Access Rights File Level (R W X) File Encryption 4) Define PORTABILITY UNIX run on any type of Hardware and configuration Flexibility credits goes to Dennis Ritchie( c pgms) Ported with IBM PC to GRAY 2 5) Define OPEN SYSTEM Everything in unix is treated as file(source pgm, Floppy disk,printer, terminal etc., Modification of the system is easy because the Source code is always available 6) The file system breaks the disk in to four segements The boot block The super block The Inode table Data block 7) Command used to find out the block size on your file $cmchk BSIZE=1024 8) Define Boot Block Generally the first block number 0 is called the BOOT BLOCK. It consists of Hardware specific boot program that loads the file known as kernal of the system. 9) Define super block It describes the state of the file system ie how large it is and how many maximum Files can it accommodate This is the 2nd block and is number 1 used to control the allocation of disk blocks 10) Define inode table The third segment includes block number 2 to n of the file system is called Inode Table. -

Useful Commands in Linux and Other Tools for Quality Control

Useful commands in Linux and other tools for quality control Ignacio Aguilar INIA Uruguay 05-2018 Unix Basic Commands pwd show working directory ls list files in working directory ll as before but with more information mkdir d make a directory d cd d change to directory d Copy and moving commands To copy file cp /home/user/is . To copy file directory cp –r /home/folder . to move file aa into bb in folder test mv aa ./test/bb To delete rm yy delete the file yy rm –r xx delete the folder xx Redirections & pipe Redirection useful to read/write from file !! aa < bb program aa reads from file bb blupf90 < in aa > bb program aa write in file bb blupf90 < in > log Redirections & pipe “|” similar to redirection but instead to write to a file, passes content as input to other command tee copy standard input to standard output and save in a file echo copy stream to standard output Example: program blupf90 reads name of parameter file and writes output in terminal and in file log echo par.b90 | blupf90 | tee blup.log Other popular commands head file print first 10 lines list file page-by-page tail file print last 10 lines less file list file line-by-line or page-by-page wc –l file count lines grep text file find lines that contains text cat file1 fiel2 concatenate files sort sort file cut cuts specific columns join join lines of two files on specific columns paste paste lines of two file expand replace TAB with spaces uniq retain unique lines on a sorted file head / tail $ head pedigree.txt 1 0 0 2 0 0 3 0 0 4 0 0 5 0 0 6 0 0 7 0 0 8 0 0 9 0 0 10 -

Install and Run External Command Line Softwares

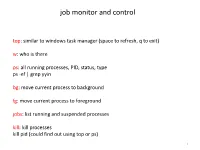

job monitor and control top: similar to windows task manager (space to refresh, q to exit) w: who is there ps: all running processes, PID, status, type ps -ef | grep yyin bg: move current process to background fg: move current process to foreground jobs: list running and suspended processes kill: kill processes kill pid (could find out using top or ps) 1 sort, cut, uniq, join, paste, sed, grep, awk, wc, diff, comm, cat All types of bioinformatics sequence analyses are essentially text processing. Unix Shell has the above commands that are very useful for processing texts and also allows the output from one command to be passed to another command as input using pipe (“|”). less cosmicRaw.txt | cut -f2,3,4,5,8,13 | awk '$5==22' | cut -f1 | sort -u | wc This makes the processing of files using Shell very convenient and very powerful: you do not need to write output to intermediate files or load all data into the memory. For example, combining different Unix commands for text processing is like passing an item through a manufacturing pipeline when you only care about the final product 2 Hands on example 1: cosmic mutation data - Go to UCSC genome browser website: http://genome.ucsc.edu/ - On the left, find the Downloads link - Click on Human - Click on Annotation database - Ctrl+f and then search “cosmic” - On “cosmic.txt.gz” right-click -> copy link address - Go to the terminal and wget the above link (middle click or Shift+Insert to paste what you copied) - Similarly, download the “cosmicRaw.txt.gz” file - Under your home, create a folder -

En Rgy Tr Ls

HTING LIG NAL SIO ES OF PR • • N O I T C E T O R P E G R U S • F O O R ENER P GY R C E O H N T A T E R W • O A L P S & S L O O P InTouch™ — The Centerpiece for Peaceful Living Z-wave™ makes reliable wireless home control possible. Several factors contribute to make Z-wave a breakthrough Intermatic makes it a reality with the InTouch™ series innovation. First is the “mesh” network, which basically of wireless controls. Until now home controls had only means that every line-powered device within the network acts two options — reliable controls that were too expensive or as a repeater to route signals among distant devices. Second is low cost controls that were unreliable. That’s all changed that Z-wave networks operate in the 900Mhz band, providing a with Intermatic’s InTouch wireless controls, which bring penetrating signal to deliver reliable communications between reliable controls together with low cost to deliver a home devices. Another factor is that with more than 4 billion unique solution everyone can enjoy. house codes, Z-wave provides a secure network with no fear of interference from neighboring systems. Finally, Z-wave Named the Best New Emerging Technology by CNET at has made the leap from theory to reality as more than 100 the 2006 Consumer Electronic Show, and recipient of the companies are working in the Z-wave Alliance to develop Electronic House Product of the Year Award, Z-wave is a actual products that benefit homeowners today and in the breakthrough technology that enables products from many near future.