A Unified Memory Network Architecture for In-Memory Computing in Commodity Servers

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications

-

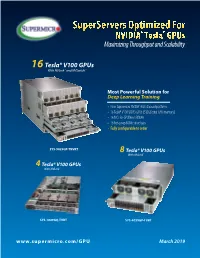

Supermicro GPU Solutions Optimized for NVIDIA Nvlink

SuperServers Optimized For NVIDIA® Tesla® GPUs Maximizing Throughput and Scalability 16 Tesla® V100 GPUs With NVLink™ and NVSwitch™ Most Powerful Solution for Deep Learning Training • New Supermicro NVIDIA® HGX-2 based platform • 16 Tesla® V100 SXM3 GPUs (512GB total GPU memory) • 16 NICs for GPUDirect RDMA • 16 hot-swap NVMe drive bays • Fully configurable to order SYS-9029GP-TNVRT 8 Tesla® V100 GPUs With NVLink™ 4 Tesla® V100 GPUs With NVLink™ SYS-1029GQ-TVRT SYS-4029GP-TVRT www.supermicro.com/GPU March 2019 Maximum Acceleration for AI/DL Training Workloads PERFORMANCE: Highest Parallel peak performance with NVIDIA Tesla V100 GPUs THROUGHPUT: Best in class GPU-to-GPU bandwidth with a maximum speed of 300GB/s SCALABILITY: Designed for direct interconnections between multiple GPU nodes FLEXIBILITY: PCI-E 3.0 x16 for low latency I/O expansion capacity & GPU Direct RDMA support DESIGN: Optimized GPU cooling for highest sustained parallel computing performance EFFICIENCY: Redundant Titanium Level power supplies & intelligent cooling control Model SYS-1029GQ-TVRT SYS-4029GP-TVRT • Dual Intel® Xeon® Scalable processors with 3 UPI up to • Dual Intel® Xeon® Scalable processors with 3 UPI up to 10.4GT/s CPU Support 10.4GT/s • Supports up to 205W TDP CPU • Supports up to 205W TDP CPU • 8 NVIDIA® Tesla® V100 GPUs • 4 NVIDIA Tesla V100 GPUs • NVIDIA® NVLink™ GPU Interconnect up to 300GB/s GPU Support • NVIDIA® NVLink™ GPU Interconnect up to 300GB/s • Optimized for GPUDirect RDMA • Optimized for GPUDirect RDMA • Independent CPU and GPU thermal zones -

High Performance Computing and AI Solutions Portfolio

Brochure High Performance Computing and AI Solutions Portfolio Technology and expertise to help you accelerate discovery and innovation Discovery and innovation have always started with great minds Go ahead. dreaming big. As artificial intelligence (AI), high performance computing (HPC) and data analytics continue to converge and Dream big. evolve, they are fueling the next industrial revolution and the next quantum leap in human progress. And with the help of increasingly powerful technology, you can dream even bigger. Dell Technologies will be there every step of the way with the technology you need to power tomorrow’s discoveries and the expertise to bring it all together, today. 463 exabytes The convergence of HPC and AI is driven by data. The data‑driven age is dramatically reshaping industries and reinventing the future. As of data will be created each day by 20251 vast amounts of data pour in from increasingly diverse sources, leveraging that data is both critical and transformational. Whether you’re working to save lives, understand the universe, build better machines, neutralize financial risks or anticipate customer sentiment, 44X ROI data informs and drives decisions that impact the success of your organization — and Average return on investment (ROI) shapes the future of our world. for HPC2 AI, HPC and data analytics are technologies designed to unlock the value of your data. While they have long been treated as separate, the three technologies are converging as 83% of CIOs it becomes clear that analytics and AI are both big‑data problems that require the power, Say they are investing in AI scalable compute, networking and storage provided by HPC. -

BRKIOT-2394.Pdf

Unlocking the Mystery of Machine Learning and Big Data Analytics. Robert Barton, Distinguished Architect, @MrRobbarto, CCIE #6660, CCDE #2013::6. Jerome Henry, Principal Engineer, Office of the CTAO, @wirelessccie, CCIE #24750, CWNE #45. BRKIOT-2394. © 2020 Cisco and/or its affiliates. All rights reserved. Cisco Public. [Schedule grid of Cisco Live IoT track sessions, Monday, Jan. 27th through Thursday; individual entries are not recoverable from the extraction. #CLEMEA www.ciscolive.com/emea/learn/technology-tracks.html] -

NVIDIA Gpudirect RDMA (GDR) Host Memory Host Memory • Pipeline Through Host for Large Msg 2 IB IB 4 2

Efficient and Scalable Communication Middleware for Emerging Dense-GPU Clusters Ching-Hsiang Chu Advisor: Dhabaleswar K. Panda Network Based Computing Lab Department of Computer Science and Engineering The Ohio State University, Columbus, OH Outline • Introduction • Problem Statement • Detailed Description and Results • Broader Impact on the HPC Community • Expected Contributions Network Based Computing Laboratory SC 19 Doctoral Showcase 2 Trends in Modern HPC Architecture: Heterogeneous Multi/ Many-core High Performance Interconnects Accelerators / Coprocessors SSD, NVMe-SSD, Processors InfiniBand, Omni-Path, EFA high compute density, NVRAM <1usec latency, 100Gbps+ Bandwidth high performance/watt Node local storage • Multi-core/many-core technologies • High Performance Storage and Compute devices • High Performance Interconnects • Variety of programming models (MPI, PGAS, MPI+X) #1 Summit #2 Sierra (17,280 GPUs) #8 ABCI #22 DGX SuperPOD (27,648 GPUs) #10 Lassen (2,664 GPUs) (4,352 GPUs) (1,536 GPUs) Network Based Computing Laboratory SC 19 Doctoral Showcase 3 Trends in Modern Large-scale Dense-GPU Systems • Scale-up (up to 150 GB/s) • Scale-out (up to 25 GB/s) – PCIe, NVLink/NVSwitch – InfiniBand, Omni-path, Ethernet – Infinity Fabric, Gen-Z, CXL – Cray Slingshot Network Based Computing Laboratory SC 19 Doctoral Showcase 4 GPU-enabled HPC Applications Lattice Quantum Chromodynamics Weather Simulation Wave propagation simulation Fuhrer O, Osuna C, Lapillonne X, Gysi T, Bianco M, Schulthess T. Towards GPU-accelerated operational weather -

Dell EMC Poweredge C4140 Technical Guide

Dell EMC PowerEdge C4140 Technical Guide Regulatory Model: E53S Series Regulatory Type: E53S001 Notes, cautions, and warnings NOTE: A NOTE indicates important information that helps you make better use of your product. CAUTION: A CAUTION indicates either potential damage to hardware or loss of data and tells you how to avoid the problem. WARNING: A WARNING indicates a potential for property damage, personal injury, or death. © 2017 - 2019 Dell Inc. or its subsidiaries. All rights reserved. Dell, EMC, and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be trademarks of their respective owners. 2019 - 09 Rev. A00 Contents 1 System overview: Introduction; New technologies. 2 System features: Specifications; Product comparison -

HPE Apollo 6500 Gen10 System Overview

QuickSpecs HPE Apollo 6500 Gen10 System Overview HPE Apollo 6500 Gen10 System The ability of computers to autonomously learn, predict, and adapt using massive datasets is driving innovation and competitive advantage across many industries and applications. The HPE Apollo 6500 Gen10 System is an ideal HPC and Deep Learning platform providing unprecedented performance with industry leading GPUs, fast GPU interconnect, high bandwidth fabric and a configurable GPU topology to match your workloads. The system with rock-solid RAS features (reliable, available, secure) includes up to eight high power GPUs per server tray (node), NVLink 2.0 for fast GPU-to-GPU communication, Intel® Xeon® Scalable Processors support, choice of up to four high-speed / low latency fabric adapters, and the ability to optimize your configurations to match your workload and choice of GPU. And while the HPE Apollo 6500 Gen10 System is ideal for deep learning workloads, the system is suitable for complex high performance computing workloads such as simulation and modeling. Eight GPU per server for faster and more economical deep learning system training compared to more servers with fewer GPU each. Keep your researchers productive as they iterate on a model more rapidly for a better solution, in less time. Now available with NVLink 2.0 to connect GPUs at up to 300 GB/s for the world’s most powerful computing servers. HPC and AI models that would consume days or weeks can now be trained in a few hours or minutes. Front View –with two HPE DL38X Gen10 Premium 6 SFF SAS/SATA + 2 NVMe drive cages shown 1. -

The Computer That Could Be Smarter Than Us Cognitive Computing

outthink limits The Computer that could be smarter than us Cognitive Computing Ingolf Wittmann Technical Director Leader of HPC Kinetica - Unstructured Databases What is Kinetica? Unparalleled acceleration Kinetica’s in-memory database powered by graphics processing units (GPUs) was built from the ground up to deliver truly real-time insights on data in 2.5x motion: orders of magnitude faster Bandwidth performance (potentially 100, 1000X) at 100 tick Query Time: Competing System PCI-E x16 3.0 10% to 25% of the cost of traditional An industry first: POWER8 with data platforms. NVIDIA NVLink delivers 2.5X the Calculation* bandwidth to GPU accelerators, Data Transfer allowing you to experience Kinetica at 73 ticks 27 ticks the speed it was intended compared to x86 based systems. What are the Key Markets? 65% Reduction • Retail: Inventory Mgt, BI, Apps, Big Data tools, HPA Real-time results • Distribution / Logistics : Supply Chain Data Transfer Calculation* Mgt 26 ticks 14 ticks • Financial Services : Fraud Detection, AML 40 tick Query Time: S822LC for HPC, NVLink 10x • Ad-Tech : More Targeted Marketing * Includes non-overlapping: CPU, GPU, and idle times. Performance • IoT : End Point Management, RFID With the unique capabilities of Tesla 65% reduction in data transfer time (3X improvement) in for P100 + POWER8, Kinetica has 2.4x POWER8 with NVLink the performance of competing Kinetica GPU-accelerated DB PCIe x16 3.0/x86 System System systems enabling you to analyze and • Less data-induced latency in all applications Xeon E5-2640 v4 Power Systems S822LC visualize large datasets in • with 4 Tesla K80s : Unique to POWER8 with NVLink with 4 Tesla P100s: milliseconds vs. -

NVIDIA Geforce RTX 2080 User Guide | 3 Introduction

2080 TABLE OF CONTENTS 01 INTRODUCTION: About This Guide; Minimum System Requirements. 02 UNPACKING: Equipment. 03 Hardware Installation: Safety Instructions; Before You Begin; Installing the GeForce Graphics Card. 04 SOFTWARE INSTALLATION: GeForce Experience Software Installation. 05 CONFIGURING NVLink. 06 CONFIGURING HDMI -


Performance Analysis of Deep Learning Workloads on Leading-Edge Systems

Performance Analysis of Deep Learning Workloads on Leading-edge Systems Yihui (Ray) Ren Shinjae Yoo Adolfy Hoisie Computational Science Initiative Outline • Hardware Systems (DGX-1, DGX-2, AWS P3, IBM AC922, RTX-2080Ti) • Communication Bandwidth Test (NCCL) • Realistic Deep Learning Workloads (Computer Vision, NLP) • Training Throughput Results and Performance Analysis GPUs and NVLink SXM2 Module • NVIDIA Tesla V100 (32GB HBM2 memory, 6 NVLinks, 15.7 TFLOPS) • NVIDIA RTX 2080Ti (11GB GDDR6 memory, 2 NVLinks, 13.4 TFLOPS) • Each NVLink has bandwidth of 25GB/s in and 25GB/s out. All leading-edge AI systems use V100 GPUs. Image Ref: https://devblogs.nvidia.com/using-cuda-warp-level-primitives/ The difference is how these NVLinks are connected. AWS p3dn.24xlarge (DGX-1V) • 8x V100 (32GB) GPUs • Hybrid cube-mesh topology • 2x 24-core Xeon 8175M (96 logic cores in total) • 768 GB system memory • 2 TB NVMe SSD • 2x AWS P3 (16 GPUs in total) • Connected through 1.25GB/s Ethernet. (*then) Image Ref: https://images.nvidia.com/content/pdf/dgx1-v100-system-architecture-whitepaper.pdf DGX-2 and NVSwitch • 16x V100 (32GB) GPUs • 12x on-node NVSwitches • Each NVSwitch has 18 NVLink ports (16 in use). • 2x 24-core Xeon 8186 (96 logic cores in total) • 1.5 TB system memory • 30 TB NVMe SSD in 8-way RAID0 Image Ref: https://images.nvidia.com/content/pdf/nvswitch-technical-overview.pdf https://www.nvidia.com/en-us/data-center/hgx/ IBM Power System AC922 (8335-GTH) • 4x V100 (32GB) GPUs • 2x IBM 20-core Power9 CPU (160 logic cores in total) • Each IBM Power9 CPU has 6 NVLinks. -

The Ultimate Pc Gpu Nvidia Titan Rtx

THE ULTIMATE PC GPU NVIDIA TITAN RTX FEATURES Breakthrough PC Performance SPECIFICATIONS > Three DisplayPort 1.4 for Developers and Creators connectors GPU Memory 24 GB GDDR6 > One HDMI display connector Memory Interface 384-bit NVIDIA TITAN RTX is the ultimate PC GPU for the world’s > One USB Type-C connector Memory Bandwidth Up to 672 GB/s most demanding users—AI researchers, data scientists, > NVIDIA® NVLink™2 and content creators. Powered by NVIDIA Turing™, NVIDIA CUDA® Cores 4,608 NVIDIA’s next-generation GPU architecture designed NVIDIA Tensor Cores 576 for AI and ray tracing, TITAN RTX delivers the best PC NVIDIA RT Cores 72 performance for training neural networks, processing Single-Precision 16.3 TFLOPS large datasets, and creating ultra-resolution video and Performance 3D content. Tensor Performance 130 TFLOPS TITAN RTX features 576 multi-precision Turing Tensor NVIDIA NVLink Connects 2 TITAN RTX Cores that deliver up to 130 teraFLOPS (TFLOPS) for GPUs deep learning training; 72 Turing RT Cores that provide NVIDIA NVLink 100 GB/s (bidirectional) up to 11 GigaRays per second for maximum real-time ray Bandwidth tracing performance; and 24 gigabytes (GB) of GDDR6 System Interface PCI Express 3.0 x 16 memory for training with higher batch sizes, processing Power Consumption 280 W larger datasets and animation models, and managing Thermal Solution Active the most demanding creative workflows. Pair two TITANs Form Factor 4.4” H x 10.5” L, together with NVIDIA NVLink and double your memory Dual slot, full height 1 and performance. Display Connectors 3x DisplayPort, 1x HDMI, TITAN RTX is supported by the CUDA-X AI SDK for AI and 1x USB Type-C data science and NVIDIA’s Studio Driver program that Max Simultaneous 4x 4096 x 2160 @ 120 Hz, Displays 4x 5120 x 2880 @ 60 Hz, brings support for creative applications to your PC. -

8335-GTB Hardware Architecture Overview

8335-GTB Hardware Architecture Overview Chris Mann Power Systems Development January 6, 2017 IBM CONFIDENTIAL © 2014 IBM Corporation Garrison Architecture Overview - Agenda • Hardware Overview • Processor Interfaces • Memory Architecture • GPU Interconnect • I/O Interconnect Garrison Design - 2 Socket P8 with NVLink, 4 GPU System Power 8 with NVLink (2x) PCIe slot (2x) • 190W Sort • Gen3 PCIe • Integrated NVLink 1.0 • HHHL Adapter PCIe slot (1x) • Gen3 PCIe NVidia GPU • HHHL Adapter • SXM2 form factor • NVLink 1.0 Service Controller Card • 300 W • BMC Content • Max of 2 per socket Memory DIMM’s Riser (8x) • 4 IS DDR4 DIMMs per riser • Single Centaur per riser • 32 IS DIMM’s total Power Supplies (2x) • 32-1024 GB memory capacity • 1300W • Common Form Factor Supply Cooling Fans (4x) • 80mm Counter- Rotating Fans • Hot swap Storage Option (2x) • 0-2, SATA HDD.SSD • Tray design for install/removal • Hot Swap Garrison Design - 2 Socket P8 with NVLink, 4 GPU System Power Supplies (2x) Power 8 with NVLink (2x) • 1300W • 190W Sort Service Controller Card • Common Form Factor Supply • Integrated NVLink 1.0 • BMC Content PCIe slot (1x) • Gen3 PCIe • HHHL Adapter Water Cooling Access NVidia GPU • Removable panel for water • SXM2 form factor line access • NVLink 1.0 • 300 W PCIe slot (2x) • Max of 2 per