Downloading Additional Components of the Webpage

Recommended publications

A Usability Evaluation of Privacy Add-Ons for Web Browsers

A Usability Evaluation of Privacy Add-ons for Web Browsers
Matthew Corner¹, Huseyin Dogan¹, Alexios Mylonas¹ and Francis Djabri²
¹ Bournemouth University, Bournemouth, United Kingdom, {i7241812,hdogan,amylonas}@bournemouth.ac.uk
² Mozilla Corporation, San Francisco, United States of America, [email protected]

Abstract. The web has improved our life and has provided us with more opportunities to access information and do business. Nonetheless, due to the prevalence of trackers on websites, web users might be subject to profiling while accessing the web, which impairs their online privacy. Privacy browser add-ons, such as DuckDuckGo Privacy Essentials, Ghostery and Privacy Badger, extend the privacy protection that the browsers offer by default, by identifying and blocking trackers. However, the work that focuses on the usability of the privacy add-ons, as well as the users’ awareness, feelings, and thoughts towards them, is rather limited. In this work, we conducted usability evaluations by utilising System Usability Scale and Think-Aloud Protocol on three popular privacy add-ons, i.e., DuckDuckGo Privacy Essentials, Ghostery and Privacy Badger. Our work also provides insights into the users’ awareness of online privacy and attitudes towards the abovementioned privacy add-ons; in particular trust, concern, and control. Our results suggest that the participants feel safer and trusting of their respective add-on. It also uncovers areas for add-on improvement, such as a more visible toolbar logo that offers visual feedback, easy access to thorough help resources, and detailed information on the trackers that have been found.

Keywords: Usability, Privacy, Browser Add-ons.

Demystifying Content-Blockers: A Large-Scale Study of Actual Performance Gains

Demystifying Content-blockers: A Large-scale Study of Actual Performance Gains
Ismael Castell-Uroz, Josep Solé-Pareta, Pere Barlet-Ros
Universitat Politècnica de Catalunya, Barcelona, Spain
[email protected] [email protected] [email protected]

Abstract: With the evolution of the online advertisement and tracking ecosystem, content-filtering has become the reference tool for improving the security, privacy and browsing experience when surfing the Internet. It is also commonly believed that using content-blockers to stop unsolicited content decreases the time needed for loading websites. In this work, we perform a large-scale study with the 100K most popular websites on the actual performance improvements of using content-blockers. We focus our study on two relevant metrics for measuring the browsing performance; page size and loading time. Our results show that using such tools results in small improvements in terms of page size but, contrary to popular belief, it has a negligible impact in terms of loading time.

[…] highly parallel network measurement system [10] that loads every website using one of the most relevant content-blockers of each category and compares their performance.

We found that, although we can observe some improvements in terms of effective page size, the results do not directly translate to gains in loading time. In some cases, there could even be an overhead to be paid. This is the case for two of the studied plugins, especially in small and fast loading websites.

The measurement system and methodology proposed in this paper can also be useful for network and service administrators […]

WhoTracks.Me: Shedding Light on the Opaque World of Online Tracking

WhoTracks.Me: Shedding light on the opaque world of online tracking
Arjaldo Karaj, Sam Macbeth, Rémi Berson, Josep M. Pujol
[email protected] [email protected] [email protected] [email protected]
Cliqz GmbH, Arabellastraße 23, Munich, Germany

ABSTRACT
Online tracking has become of increasing concern in recent years, however our understanding of its extent to date has been limited to snapshots from web crawls. Previous attempts to measure the tracking ecosystem, have been done using instrumented measurement platforms, which are not able to accurately capture how people interact with the web. In this work we present a method for the measurement of tracking in the web through a browser extension, as well as a method for the aggregation and collection of this information which protects the privacy of participants. We deployed this extension to more than 5 million users, enabling measurement across multiple countries, ISPs and browser configurations, to give an accurate picture of real-world track[…]

[…]print users and their devices [25], and the extent to which these methods are being used across the web [5], and quantifying the value exchanges taking place in online advertising [7, 27]. There is a lack of transparency around which third-party services are present on pages, and what happens to the data they collect is a common concern. By monitoring this ecosystem we can drive awareness of the practices of these services, helping to inform users whether they are being tracked, and for what purpose. More transparency and consumer awareness of these practices can help drive both consumer and regulatory pressure to change, and help researchers to better quantify the privacy and security implications caused by these services.

Web Privacy Beyond Extensions

Web Privacy Beyond Extensions: New Browsers Are Pursuing Deep Privacy Protections
Peter Snyder <[email protected]>, Privacy Researcher at Brave Software

In a slide…
• Web privacy is a mess.
• Privacy activists and researchers are limited by the complexity of modern browsers.
• New browser vendors are eager to work with activists to deploy their work.

Outline
1. Background: Extension focus in practical privacy tools
2. Present: Privacy improvements require deep browser modifications
3. Next Steps: Call to action, how to keep improving

Browsers are Complicated
[Diagram. Labels: uBlock, PrivacyBadger, Disconnect, AdBlock Plus; Firefox, Safari, Chrome, Edge / IE; Privacy concern; Browser maintenance experience]

Extensions as a Compromise
[Same diagram, with the added labels: Extensions; Runtime modifications]

Privacy and Browser Extensions
• Successes! uBlock Origin, HTTPS Everywhere, Ghostery, Disconnect, Privacy Badger, EasyList / EasyPrivacy, etc…
• Appealing: Easy(er) to build, easy to share
• Popular: Hundreds of thousands of extensions, millions of users

Browser Extension Limitations
• Limited Capabilities: Networking, request modification, rendering, layout, image processing, JS engine, etc…
• Security and Privacy: Possibly giving capabilities to malicious parties
• Performance: Limited to JS, secondary access

Extensions vs Runtime
[Same diagram as "Extensions as a Compromise"]

Under Explored Space
[Same diagram, with a "?" marking the unexplored region]

General Characteristics of Android Browsers with Focus on Security and Privacy Features

BÁNKI KÖZLEMÉNYEK, Volume 3, Issue 1

General characteristics of Android browsers with focus on security and privacy features
Petar Čisar*, Sanja Maravic Cisar**, Igor Fürstner***
*Academy of Criminalistic and Police Studies, Belgrade, Serbia, **Subotica Tech, Subotica, Serbia, ***Óbuda University, Bánki Donát Faculty of Mechanical and Safety Engineering, Budapest, Hungary
[email protected], [email protected], [email protected]

Abstract: Satisfactory level of security in the use of the Internet in mobile devices depends on several factors. One of them is safe browsing. A key factor in providing secure browsing is the application of a browser with the appropriate methods applied: clearing cookies, cache and history, ability of incognito browsing, using of whitelists and encryptions and others. This paper presents an overview of the various security and privacy features used in the most frequently used Android browsers. Also, in the case of several browsers and types of mobile devices, the use of benchmark tests is shown. Bearing in mind the differences, when choosing a browser, special attention should be paid to the applied security and privacy features.

[…]
• Incognito browsing mode - offers real private browsing experience without leaving any historical data.
• Using of HTTPS protocol - enforces SSL (Secure Socket Layer) security protocol (using of certificates) wherever that’s possible.
• Disabling features like JavaScript, DOM (Document Object Model) storage
• Using fingerprinting techniques

Further sections of this paper provide an overview of the applied security and privacy methods for more popular Android browsers. Also, in order to compare the adequate features of browsers, the use of benchmark tests on different mobile devices will be shown.

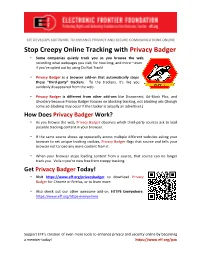

Privacy Badger One-Pager-1

EFF DEVELOPS SOFTWARE TO ENHANCE PRIVACY AND SECURE COMMUNICATIONS ONLINE

Stop Creepy Online Tracking with Privacy Badger
• Some companies quietly track you as you browse the web, recording what webpages you visit, for how long, and more, even if you’ve opted out by using Do Not Track!
• Privacy Badger is a browser add-on that automatically stops these “third-party” trackers. To the trackers, it's like you suddenly disappeared from the web.
• Privacy Badger is different from other add-ons like Disconnect, Ad-Block Plus, and Ghostery because Privacy Badger focuses on blocking tracking, not blocking ads (though some ad-blocking may occur if the tracker is actually an advertiser).

How Does Privacy Badger Work?
• As you browse the web, Privacy Badger observes which third-party sources ask to load possible tracking content in your browser.
• If the same source shows up repeatedly across multiple different websites asking your browser to set unique tracking cookies, Privacy Badger flags that source and tells your browser not to load any more content from it.
• When your browser stops loading content from a source, that source can no longer track you. Voila: you’re now free from creepy tracking.

Get Privacy Badger Today!
• Visit https://www.eff.org/privacybadger to download Privacy Badger for Chrome or Firefox, or to learn more.
• Also check out our other awesome add-on, HTTPS Everywhere. https://www.eff.org/https-everywhere

Support EFF’s creation of even more tools to enhance privacy and security online by becoming a member today! https://www.eff.org/join
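The flagging rule described in the Privacy Badger one-pager can be illustrated with a short Python sketch. It is not Privacy Badger's actual code: the class name `TrackerHeuristic`, the `observe` method and the threshold of three sites are assumptions made for illustration, since the one-pager only says "multiple different websites".

```python
from collections import defaultdict

# Assumed value: the one-pager says only "multiple different websites".
SITE_THRESHOLD = 3


class TrackerHeuristic:
    """Hypothetical sketch of the 'seen on several sites' flagging rule."""

    def __init__(self, site_threshold=SITE_THRESHOLD):
        self.site_threshold = site_threshold
        # third-party source -> set of first-party sites it tried to track on
        self._sites_seen = defaultdict(set)
        self.blocked = set()

    def observe(self, first_party, third_party, sets_unique_cookie):
        """Record one third-party request; return True if it may load."""
        if third_party in self.blocked:
            return False  # already flagged: load no more content from it
        if third_party != first_party and sets_unique_cookie:
            self._sites_seen[third_party].add(first_party)
            if len(self._sites_seen[third_party]) >= self.site_threshold:
                # Flag the source; later requests from it are refused.
                self.blocked.add(third_party)
        return True


heuristic = TrackerHeuristic()
for site in ("news.example", "shop.example", "blog.example"):
    heuristic.observe(site, "tracker.example", sets_unique_cookie=True)
print(heuristic.observe("mail.example", "tracker.example", True))
```

In this sketch the request that crosses the threshold is still allowed and only subsequent ones are refused, which is one possible reading of "not to load any more content from it".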

A Deep Dive Into the Technology of Corporate Surveillance

Behind the One-Way Mirror: A Deep Dive Into the Technology of Corporate Surveillance
Authors: Bennett Cyphers and Gennie Gebhart
A publication of the Electronic Frontier Foundation, 2019. “Behind the One-Way Mirror: A Deep Dive Into the Technology of Corporate Surveillance” is released under a Creative Commons Attribution 4.0 International License (CC BY 4.0).
View this report online: https://www.eff.org/wp/behind-the-one-way-mirror
December 2, 2019

Contents
Introduction
  First-party vs. third-party tracking
  What do they know?
Part 1: Whose Data is it Anyway: How Do Trackers Tie Data to People?
  Identifiers on the Web
  Identifiers on mobile devices
  Real-world identifiers
  Linking identifiers over time
Part 2: From bits to Big Data: What do tracking networks look like?
  Tracking in software: Websites and Apps
  Passive, real-world tracking
  Tracking and corporate power
Part 3: Data sharing: Targeting, brokers, and real-time bidding
  Real-time bidding
  Group targeting and look-alike audiences
  Data brokers
  Data consumers
Part 4: Fighting back
  On the web
  On mobile phones
  IRL
  In the legislature

Introduction
Trackers are hiding in nearly every corner of today’s Internet, which is to say nearly every corner of modern life.

People's Tech Movement to Kick Big Tech out of Africa Could Form a Critical Part of the Global Protests Against the Enduring Legacy of Racism and Colonialism

CONTENTS
Acronyms
1 Introduction: The Rise of Digital Colonialism and Surveillance Capitalism
2 Threat Modeling
3 The Basics of Information Security and Software
4 Mobile Phones: Talking and Texting
5 Web Browsing
6 Searching the Web
7 Sharing Data Safely
8 Email Encryption
9 Video Chat
10 Online Document Collaboration
11 Protecting Your Data

BIG DATA Handbook: A Guide for Lawyers

BIG DATA Handbook: A Guide for Lawyers
Robert Maier, Partner, Baker Botts
Joshua Sibble, Senior Associate, Baker Botts

Executive Summary

BIG DATA AND THE FUTURE OF COMMERCE

Big Data is the future. Each year Big Data (large sets of data processed to reveal previously unseen trends, behaviors, and other patterns) takes on an increasingly important role in the global economy. In 2010, the Big Data market was valued at $100 billion worldwide, and it is expected to grow to over $200 billion by 2020.

The power of Big Data and artificial intelligence to crunch vast amounts of information to gain insights into the world around us, coupled with the emerging ubiquity of the Internet of Things (IoT) to sense and gather that data about nearly everything on earth and beyond, has triggered a revolution the likes of which have not been seen since the advent of the personal computer. These technologies have the potential to shatter the norms in nearly every aspect of society, from the research into what makes a workforce happier and more productive, to healthcare, to the ways we think about climate change, and to the ways we bank and do business.

Not surprisingly, it seems everyone is getting into the Big Data game, from the expected (multinational computer hardware, software, and digital storage manufacturers) to the unexpected (real estate agents, small businesses, and even wildlife conservationists). Within a few years, every sector of the global economy will be touched in some way by Big Data, its benefits, and its pitfalls.

Tracking the Cookies: A Quantitative Study on User Perceptions About Online Tracking

Bachelor of Science in Computer Science, March 2019

Tracking the cookies: A quantitative study on user perceptions about online tracking.
Christian Gribing Arlfors, Simon Nilsson
Faculty of Computing, Blekinge Institute of Technology, 371 79 Karlskrona, Sweden

This thesis is submitted to the Faculty of Computing at Blekinge Institute of Technology in partial fulfilment of the requirements for the degree of Bachelor of Science in Computer Science. The thesis is equivalent to 20 weeks of full time studies. The authors declare that they are the sole authors of this thesis and that they have not used any sources other than those listed in the bibliography and identified as references. They further declare that they have not submitted this thesis at any other institution to obtain a degree.

Contact Information:
Author(s): Christian Gribing Arlfors, E-mail: [email protected]; Simon Nilsson, E-mail: [email protected]
University advisor: Fredrik Erlandsson, Department of Computer Science
Faculty of Computing, Blekinge Institute of Technology, SE–371 79 Karlskrona, Sweden
Internet: www.bth.se, Phone: +46 455 38 50 00, Fax: +46 455 38 50 57

Abstract
Background. Cookies and third-party requests are partially implemented to enhance user experience when traversing the web; without them, web browsing would be a tedious and repetitive task. However, their technology also enables companies to track users across the web to see which sites they visit, which items they buy and their overall browsing habits, which can intrude on users' privacy.
Objectives. This thesis will present user perceptions and thoughts on the tracking that occurs on their most frequently visited websites.

LINUX JOURNAL (ISSN 1075-3583) Is Published Monthly by Linux Journal, LLC

Getting Started with Nextcloud | Review: the Librem 13v2 | A Look at GDPR’s Massive Impact

Since 1994: The original magazine of the Linux community

PRIVACY
HOW TO PROTECT YOUR DATA
EFFECTIVE PRIVACY PLUGINS
GIVE YOUR SERVERS SOME PRIVACY WITH TOR HIDDEN SERVICES
INTERVIEW: PRIVATE INTERNET ACCESS GOES OPEN SOURCE

ISSUE 286 | MAY 2018
www.linuxjournal.com

CONTENTS

DEEP DIVE: PRIVACY

Data Privacy: Why It Matters and How to Protect Yourself, by Petros Koutoupis
When it comes to privacy on the internet, the safest approach is to cut your Ethernet cable or power down your device. In reality though, most people can’t actually do that and remain productive. This article provides an overview of the situation, steps you can take to mitigate risks and finishes with a tutorial on setting up a virtual private network.

Privacy Plugins, by Kyle Rankin
Protect yourself from privacy-defeating ad trackers and malicious JavaScript with these privacy-protecting plugins.

Tor Hidden Services, by Kyle Rankin
Why should clients get all the privacy? Give your […]

Facebook Compartmentalization, by Kyle Rankin
I don’t always use Facebook, but when I do, it’s over a compartmentalized browser over Tor.

The Fight for Control: Andrew Lee on Open-Sourcing PIA, by Doc Searls
When I learned that our sister company, Private Internet Access (PIA) was opening its source code, I immediately wanted to know the backstory, especially since privacy is the theme of this month’s issue. So I contacted Andrew Lee, who founded PIA, and an interview ensued.

How to Enable JavaScript in a Web Browser

How to enable JavaScript in a web browser

To allow all Web sites in the Internet zone to run scripts, use the steps that apply to your browser:

Windows Internet Explorer (all versions except Pocket Internet Explorer)

Note: To allow scripting on this Web site only, and to leave scripting disabled in the Internet zone, add this Web site to the Trusted sites zone.

1. On the Tools menu, click Internet Options, and then click the Security tab.
2. Click the Internet zone.
3. If you do not have to customize your Internet security settings, click Default Level, and then go to step 4. If you have to customize your Internet security settings, follow these steps:
   a. Click Custom Level.
   b. In the Security Settings – Internet Zone dialog box, click Enable for Active Scripting in the Scripting section.
4. Click the Back button to return to the previous page, and then click the Refresh button to run scripts.

Mozilla Corporation’s Firefox

By default, Firefox enables the use of JavaScript and requires no additional installation.

Note: To allow or block JavaScript on certain domains, you can install privacy extensions such as:
• NoScript: Allows JavaScript and other content to run only on websites of your choice.
• Ghostery: Allows you to block scripts from companies that you don't trust.
For more information, please refer to the Mozilla support web page.

Google Chrome

1. Select Customize and control Google Chrome (the icon with 3 stacked horizontal lines) to the right of the address bar.
2. From the drop-down menu, select Settings.
3. At the bottom of the page, click Show advanced settings..