Leveraging Mixed Reality for Augmented Structural Mechanics Education

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

-

Student Guide to Msc Studies Institute of Informatics, University of Szeged

Student Guide to MSc Studies Institute of Informatics, University of Szeged 2. Contact information Address: Árpád square 2, Szeged, Hungary Postal Address: Institute of Informatics 6701 Szeged, Hungary, P. O. Box 652. Telephone: +36 62 546396 Fax: +36 62 546397 E-mail: [email protected] Url: http://www.inf.u-szeged.hu 2 3. Contents 1. Title page................................................................................................................................1 2. Contact information...............................................................................................................2 3. Contents.................................................................................................................................3 4. Foreword................................................................................................................................4 5. Organization...........................................................................................................................5 6. Main courses..........................................................................................................................6 7. Education...............................................................................................................................8 8. Research...............................................................................................................................10 9. Miscellania...........................................................................................................................11 -

094 on Reinforcing Learning in Engineering Education by Means of Interactive Pen Displays

03 Engineering Education 160-227_Layout 1 18/09/2012 1:35 π.μ. Page 202 40th annual 23-26 September 2012 conference Thessaloniki, Greece Engineering Education Research 094 On Reinforcing Learning in Engineering Education by Means of Interactive Pen Displays J. Fabra1, J. Civera, J. R. Asensio Department of Computer Science and Systems Engineering Universidad de Zaragoza, Spain {jfabra, jcivera, jrasensi}@unizar.es Conference Topic: Engineering Education Research, Information and Communication Technologies. Keywords: ICT in Education, Interactive Pen Displays, Tablet PC, Engineering Education, Poll Analysis he use of traditional blackboards imposes several constraints in the learning experience, being the most relevant: 1) the unidirectional communication channel they provide; 2) their limited T graphical possibilities; and 3) the impossibility of recovering the sequential flow of the lecture once it has finished. In engineering lectures, these constraints represent serious limitations. In order to overcome them, digital slides have been traditionally used. More recently, digital displays and tablets have been used to further improve the learning process. Interactive Pen Displays (from Wacom manufacturer) are display devices with some particularities over Tablet PC’s that naturally overcome most of these constraints. First, existing software provides endless display possibilities (geometric drawings, colors, simulations, graphs…). Also, not only the lecture re- sults can be accurately recorded, but also the whole lecture process in its temporal dimension. This frees students from the tedious task of recording and taking notes and provides richer resources for their posterior autonomous work, as well as allowing recordings to be uploaded as learning objects to collaborative learning environments such as Blackboard or Moodle, for instance. -

A Research Agenda for Augmented and Virtual Reality in Architecture

Advanced Engineering Informatics 45 (2020) 101122 Contents lists available at ScienceDirect Advanced Engineering Informatics journal homepage: www.elsevier.com/locate/aei A research agenda for augmented and virtual reality in architecture, T engineering and construction ⁎ ⁎ Juan Manuel Davila Delgadoa, , Lukumon Oyedelea, , Peter Demianc, Thomas Beachb a Big Data Enterprise and Artificial Intelligence Laboratory, University of West of England Bristol, UK b School of Engineering, Cardiff University, UK c School of Architecture, Building and Civil Engineering, Loughborough University, UK ARTICLE INFO ABSTRACT Keywords: This paper presents a study on the usage landscape of augmented reality (AR) and virtual reality (VR) in the Augmented reality architecture, engineering and construction sectors, and proposes a research agenda to address the existing gaps Virtual reality in required capabilities. A series of exploratory workshops and questionnaires were conducted with the parti- Construction cipation of 54 experts from 36 organisations from industry and academia. Based on the data collected from the Immersive technologies workshops, six AR and VR use-cases were defined: stakeholder engagement, design support, design review, Mixed reality construction support, operations and management support, and training. Three main research categories for a Visualisation future research agenda have been proposed, i.e.: (i) engineering-grade devices, which encompasses research that enables robust devices that can be used in practice, e.g. the rough and complex conditions of construction sites; (ii) workflow and data management; to effectively manage data and processes required by ARandVRtech- nologies; and (iii) new capabilities; which includes new research required that will add new features that are necessary for the specific construction industry demands. -

About the Contributors

310 About the Contributors Ioannis Minis is a Professor in the Department of Financial and Management Engineering of the University of Aegean. He conducts research in design, production and operations systems, including supply chain management. Dr. Minis holds a Ph.D. degree from the University of Maryland in Me- chanical Engineering, and has held the positions of Assistant and Associate Professor of Mechanical Engineering at the same University (1988-1997). He is conducting research in Operations for over 20 years and has authored two edited volumes, several book chapters, over 45 journal articles and over 60 articles in conference proceedings. Dr. Minis has been the recipient of the 1993 Earl E. Walker Out- standing Young Manufacturing Engineer Award from the Society of Manufacturing Engineers (SME). Vasileios Zeimpekis is Adjunct Lecturer in the Department of Financial & Management Engineer- ing at the University of the Aegean. He is also Senior Research Officer at the Design, Operations, & Production Systems (DeOPSys) Lab situated at the same University. His interests focus on road trans- portation & logistics with emphasis on decision support systems and telematics. He has published 3 edited volumes and more than 40 papers in the area of supply chain and transportation. Vasileios, holds a BEng(Hons) in Telecommunications Engineering from the University of Essex, (UK). He has also been awarded with an MSc in Mobile & Satellite Communications from the University of Surrey (UK) and an MSc with Distinction in Engineering Business Management from the University of Warwick (UK). He earned his PhD from the Department of Management Science & Technology at the Athens University of Economics & Business. -

Systems Integration and Collaboration in Architecture, Engineering, Construction, and Facilities Management: a Review

Advanced Engineering Informatics 24 (2010) 196–207 Contents lists available at ScienceDirect Advanced Engineering Informatics journal homepage: www.elsevier.com/locate/aei Systems integration and collaboration in architecture, engineering, construction, and facilities management: A review Weiming Shen *, Qi Hao, Helium Mak, Joseph Neelamkavil, Helen Xie, John Dickinson, Russ Thomas, Ajit Pardasani, Henry Xue Institute for Research in Construction, National Research Council Canada, London, Ont., Canada N6G 4X8 article info abstract Article history: With the rapid advancement of information and communication technologies, particularly Internet and Received 27 February 2009 Web-based technologies during the past 15 years, various systems integration and collaboration technol- Received in revised form 27 August 2009 ogies have been developed and deployed to different application domains, including architecture, engi- Accepted 13 September 2009 neering, construction, and facilities management (AEC/FM). These technologies provide a consistent set Available online 2 October 2009 of solutions to support the collaborative creation, management, dissemination, and use of information through the entire product and project lifecycle, and further to integrate people, processes, business sys- tems, and information more effectively. This paper presents a comprehensive review of research litera- ture on systems integration and collaboration in AEC/FM, and discusses challenging research issues and future research opportunities. Crown Copyright Ó 2009 Published by Elsevier Ltd. All rights reserved. 1. Introduction integration becomes an important prerequisite to achieve efficient and effective collaboration. In fact, systems integration is all about Due to rapid changes in technology, demographics, business, interoperability. 
Under the context of this paper, interoperability re- the economy, and the world, we are entering a new era where peo- fers to the ability of diverse software and hardware systems to man- ple participate in the economy like never before. -

Augmented Reality Visualization: a Review of Civil Infrastructure System Applications ⇑ Amir H

Advanced Engineering Informatics 29 (2015) 252–267 Contents lists available at ScienceDirect Advanced Engineering Informatics journal homepage: www.elsevier.com/locate/aei Augmented reality visualization: A review of civil infrastructure system applications ⇑ Amir H. Behzadan a, Suyang Dong b, Vineet R. Kamat b, a Department of Civil, Environmental, and Construction Engineering, University of Central Florida, Orlando, FL 32816, USA b Department of Civil and Environmental Engineering, University of Michigan, Ann Arbor, MI 48109, USA article info abstract Article history: In Civil Infrastructure System (CIS) applications, the requirement of blending synthetic and physical Received 25 August 2014 objects distinguishes Augmented Reality (AR) from other visualization technologies in three aspects: Received in revised form 25 January 2015 (1) it reinforces the connections between people and objects, and promotes engineers’ appreciation about Accepted 11 March 2015 their working context; (2) it allows engineers to perform field tasks with the awareness of both the physi- Available online 7 April 2015 cal and synthetic environment; and (3) it offsets the significant cost of 3D Model Engineering by including the real world background. This paper reviews critical problems in AR and investigates technical Keywords: approaches to address the fundamental challenges that prevent the technology from being usefully Engineering visualization deployed in CIS applications, such as the alignment of virtual objects with the real environment continu- Augmented reality Excavation safety ously across time and space; blending of virtual entities with their real background faithfully to create a Building damage reconnaissance sustained illusion of co-existence; and the integration of these methods to a scalable and extensible com- Visual simulation puting AR framework that is openly accessible to the teaching and research community. -

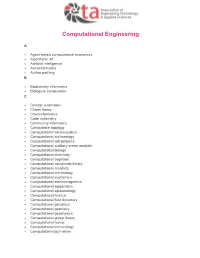

AETA-Computational-Engineering.Pdf

Computational Engineering A Agent-based computational economics Algorithmic art Artificial intelligence Astroinformatics Author profiling B Biodiversity informatics Biological computation C Cellular automaton Chaos theory Cheminformatics Code stylometry Community informatics Computable topology Computational aeroacoustics Computational archaeology Computational astrophysics Computational auditory scene analysis Computational biology Computational chemistry Computational cognition Computational complexity theory Computational creativity Computational criminology Computational economics Computational electromagnetics Computational epigenetics Computational epistemology Computational finance Computational fluid dynamics Computational genomics Computational geometry Computational geophysics Computational group theory Computational humor Computational immunology Computational journalism Computational law Computational learning theory Computational lexicology Computational linguistics Computational lithography Computational logic Computational magnetohydrodynamics Computational Materials Science Computational mechanics Computational musicology Computational neurogenetic modeling Computational neuroscience Computational number theory Computational particle physics Computational photography Computational physics Computational science Computational engineering Computational scientist Computational semantics Computational semiotics Computational social science Computational sociology Computational -

Advanced Engineering Informatics)

Invitation to contribute to the Special Issue on Robotics in the Construction Industry (Advanced Engineering Informatics) We would like to invite you to contribute to a Special Issue of Robotics in the Construction Industry that will be dedicated to the nascent field of construction robots. Research into construction robotics mainly focuses on developing new robotic hardware and software applications, but so far has developed limited knowledge about how to build robotic systems that can leverage carefully formalized knowledge of experienced craftsman and site managers. The Special Issue will elucidate the technical and institutional difficulties inherent in the construction industry and provide several online workshops for sharing potential ways to overcome them. The first online workshop will be held on June 26, 2020 to discuss ideas for possible papers to include in the special issue. As a pioneer in this field, you recognize the potential importance of construction robots – as well as the challenges - which is why you have been invited to join the workshop and contribute a paper for inclusion in this Special Issue. Potential topics will include: - Computational design support for robotic construction - Design of construction robotic systems for construction activities requiring high craftsmanship - Advanced control strategies and artificial intelligence for robot locomotion and manipulation inspired by detailed studies of construction workers - Sensing technologies for situation awareness in construction applications allowing to detect -

0.3 Criteria for the Accrediation of Degree Programmes

ASIIN_Broschürentitel - Kriterien Programm, System, AR, ASIIN 2016-01-14_Layout 1 14.01.2016 16:31 Seite 12 Criteria for the Accreditation of Degree Programmes - ASIIN Quality Seal Engineering, Informatics, Architecture, Natural Sciences, Mathematics, individually and in combination with other Subject Areas ASIIN e.V. Accreditation Agency for Degree Programmes in Engineering, Informatics/Computer Science, the Natural Sciences and Mathematics e.V. PO Box 10 11 39 40002 Düsseldorf, Germany Tel.: +49 211 900 9770 Fax: +49 211 900 97799 URL: http://www.asiin.de Email: [email protected] As of: 10/12/2015 Copyright notice: This document is subject to copyright law. Written consent is required for any editing and any type of use beyond the scope of copyright law, in particular for commercial purposes. Table of contents 1. Purpose of this document .................................................................................................................... 4 2. Requirements for the award of the ASIIN quality seal for degree programmes (including EUR-ACE®, Euro-Inf®, Eurobachelor® and Euromaster®) ........................................................................................... 4 2.1 Relation between the ASIIN quality seal and the European subject-specific labels................................. 5 2.2 General Criteria ............................................................................................................................... 5 2.3 Requirements for degree programmes with a special outline ........................................................... -

Neuro Informatics 2010

Neuro Informatics 2010 August 30 - September 1 Kobe, Japan ABSTRACT BOOK Neuroinformatics 2010 3rd INCF Congress of Neuroinformatics Program and Abstracts August 30 to September 1, 2010 Kobe, Japan Neuroinformatics 2010 1 Welcome to the 3rd INCF Congress in Kobe, Japan! Neuroinformatics 2010 is organized by the INCF in cooperation with the INCF Japan Node. As with the two first INCF Congress- es (in Stockholm and Pilsen), the Kobe meeting brings together neuroinformatics researchers from numerous disciplines and dozens of countries. The single-track program includes five keynote speakers, four scientific workshops and two poster and demonstration sessions. In addition, the last half day will be devoted to an INCF Japan Node special session with lectures and discussions on INCF global initiatives and neuroinformatics in the Asian-Pacific circle. We also collaborate with the Neuro2010 conference, which follows immediately and spans a broad variety of neuroscience. In all, we anticipate an exceptional sci- entific event that will help raise the level of neuroinformatics research worldwide. Please enjoy the many fine presentations, posters, and demos! David Van Essen Washington University School of Medicine, USA INCF 2010 Program Committee Chair Organizers Program Committee: Kenji Doya, Principal Investigator, IRP-OIST David Van Essen (Chair) - Washington University School of Program Chair, Neuro2010 Medicine Keiji Tanaka, Deputy Director, RIKEN BSI Richard Baldock - Medical Research Council, Edinburgh Delegate, INCF Japan-Node Svein Dahl -

About the Contributors

About the Contributors Nik Bessis is currently a senior lecturer and head of postgraduate taught courses, in the Department of Computing and Information Systems at University of Bedfordshire (UK). He obtained a BA from the TEI of Athens in 1991 and completed his PhD (2002) and MA (1995) at De Montfort University, Leicester, UK. His research interest is the development of distributed information systems for virtual organizations with a particular focus in next generation Grid technology; data integration and data push for the creative and other sectors. * * * Giuseppe Andronico received his degree on theoretical physics with his thesis on the methods in Lattice QCD on July 1991. Since 2000, he is working in Grid computing collaborating with EDG, EGEE and other projects. In 2006 and 2007 was technical manager in the project EUChinaGRID where started his work with interoperability between GOS and EGEE. Vassiliki Andronikou received her MSc from the Electrical and Computer Engineering Depart- ment of the National Technical University of Athens (NTUA) in 2004. She has worked in the bank and telecommunications sector, while currently she is a research associate in the Telecommunications Laboratory of the NTUA, with her interests focusing on the security and privacy aspects of biometrics and data management in Grid. Nick Antonopoulos is currently a senior lecturer (US associate professor) at the Department of Computing, University of Surrey, UK. He holds a BSc in physics (1st class) from the University of Athens in1993, an MSc in information technology from Aston University in 1994 and a PhD in computer sci- ence from the University of Surrey in 2000. -

Combining Multi-Parametric Programming and NMPC for The

A publication of CHEMICAL ENGINEERING TRANSACTIONS The Italian Association VOL. 35, 2013 of Chemical Engineering www.aidic.it/cet Guest Editors: Petar Varbanov, Jiří Klemeš, Panos Seferlis, Athanasios I. Papadopoulos, Spyros Voutetakis Copyright © 2013, AIDIC Servizi S.r.l., ISBN 978-88-95608-26-6; ISSN 1974-9791 DOI: 10.3303/CET1335152 Combining Multi-Parametric Programming and NMPC for the Efficient Operation of a PEM Fuel Cell Chrysovalantou Ziogoua,b,*, Michael C. Georgiadisa,c, d a,e a Efstratios N. Pistikopoulos , Simira Papadopoulou , Spyros Voutetakis a Chemical Process and Energy Resources Institute (CPERI), Centre for Research and Technology Hellas (CERTH), PO Box 60361, 57001, Thessaloniki, Greece b Department of Engineering Informatics and Telecommunications, University of Western Macedonia, Vermiou and Lygeris str., Kozani, 50100, Greece c Department of Chemical Engineering, Aristotle University of Thessaloniki, Thessaloniki, 54124, Greece d Department of Chemical Engineering, Centre for Process Systems Engineering, Imperial College London, SW7 2AZ London, UK e Department of Automation, Alexander Technological Educational Institute of Thessaloniki, PO Box 141, 54700 Thessaloniki, Greece [email protected] This work presents an integrated advanced control framework for a small-scale automated Polymer Electrolyte Membrane (PEM) Fuel Cell system. At the core of the nonlinear model predictive control (NMPC) formulation a nonlinear programming (NLP) problem is solved utilising a dynamic model which is discretized based on a direct transcription method. Prior to the online solution of the NLP problem a pre- processing search space reduction (SSR) algorithm is applied which is guided by the offline solution of a multi-parametric Quadratic Programming (mpQP) problem. This synergy augments the typical NMPC approach and aims at improving both the computational requirements of the multivariable nonlinear controller and the quality of the control action.