Administering Oracle GoldenGate

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

International Language Environments Guide

International Language Environments Guide Sun Microsystems, Inc. 4150 Network Circle Santa Clara, CA 95054 U.S.A. Part No: 806–6642–10 May, 2002 Copyright 2002 Sun Microsystems, Inc. 4150 Network Circle, Santa Clara, CA 95054 U.S.A. All rights reserved. This product or document is protected by copyright and distributed under licenses restricting its use, copying, distribution, and decompilation. No part of this product or document may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Third-party software, including font technology, is copyrighted and licensed from Sun suppliers. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd. Sun, Sun Microsystems, the Sun logo, docs.sun.com, AnswerBook, AnswerBook2, Java, XView, ToolTalk, Solstice AdminTools, SunVideo and Solaris are trademarks, registered trademarks, or service marks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc. SunOS, Solaris, X11, SPARC, UNIX, PostScript, OpenWindows, AnswerBook, SunExpress, SPARCprinter, JumpStart, Xlib The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. -

Messages and Codes

NetView IBM Messages and Codes SH12-5483-07 NetView IBM Messages and Codes SH12-5483-07 Note! Before using this information and the product it supports, be sure to read the general information under “Notices” on page v. Eighth Edition, December 1994 This is a major revision of, and obsoletes, SH12-5483-06. This edition applies to Version 2 Release 2 Modification Level 1 of NetView File Transfer Program for MVS (5685-108) Version 1 Release 1 Modification Level 1 of NetView File Transfer Program for VSE (5686-013) Version 1 Release 1 Modification Level 1 of NetView File Transfer Program for VM (5684-048) and to all subsequent releases and modifications until otherwise indicated in new editions or technical newsletters. Make sure you are using the correct edition for the level of the product. Order publications through your IBM representative or the IBM branch office serving your locality. Publications are not stocked at the address below. IBM welcomes your comments. A form for readers’ comments may be provided at the back of this publication, or you may address your comments to the following address: IBM Deutschland Entwicklung GmbH Information Development, Dept. 0446 Postfach 1380 71003 Boeblingen Germany When you send information to IBM, you grant IBM a nonexclusive right to use or distribute the information in any way it believes appropriate without incurring any obligation to you. Copyright International Business Machines Corporation 1988, 1994. All rights reserved. Note to U.S. Government Users — Documentation related to restricted rights — Use, duplication or disclosure is subject to restrictions set forth in GSA ADP Schedule Contract with IBM Corp. -

LS2208 Product Reference Guide, P/N MN000754A02 Rev A

LS2208 PRODUCT REFERENCE GUIDE LS2208 PRODUCT REFERENCE GUIDE MN000754A02 Revision A March 2015 ii LS2208 Product Reference Guide No part of this publication may be reproduced or used in any form, or by any electrical or mechanical means, without permission in writing. This includes electronic or mechanical means, such as photocopying, recording, or information storage and retrieval systems. The material in this manual is subject to change without notice. The software is provided strictly on an “as is” basis. All software, including firmware, furnished to the user is on a licensed basis. We grant to the user a non-transferable and non-exclusive license to use each software or firmware program delivered hereunder (licensed program). Except as noted below, such license may not be assigned, sublicensed, or otherwise transferred by the user without our prior written consent. No right to copy a licensed program in whole or in part is granted, except as permitted under copyright law. The user shall not modify, merge, or incorporate any form or portion of a licensed program with other program material, create a derivative work from a licensed program, or use a licensed program in a network without our written permission. The user agrees to maintain our copyright notice on the licensed programs delivered hereunder, and to include the same on any authorized copies it makes, in whole or in part. The user agrees not to decompile, disassemble, decode, or reverse engineer any licensed program delivered to the user or any portion thereof. Zebra reserves the right to make changes to any product to improve reliability, function, or design. -

Top 40 Insurance Companies

2013 Insurance Commissioner’s Annual Report, Appendix E: Top 40 Insurance Companies by Line of Business in Washington. State of Washington, Office of Insurance Commissioner. 2013 Washington Market Share and Loss Ratio, Top 40 Authorized Companies (Zero Premium and Loss Companies Excluded). Line of Business: Aggregate Write-ins For Other Lines of Business. All Dollars in Thousands.

| Rank | Company Name | NAIC Code | Dom | Direct Premiums Written | Market Share | Direct Premiums Earned | Direct Losses Incurred | Loss Ratio(1) |
|---|---|---|---|---|---|---|---|---|
| 1 | Arag Ins Co | 34738 | IA | $6,070 | 34.00% | $6,070 | $2,086 | 34.36% |
| 2 | Physicians Ins A Mut Co | 40738 | WA | $4,143 | 23.21% | $4,143 | $2,386 | 57.61% |
| 3 | Midwest Employers Cas Co | 23612 | DE | $3,084 | 17.27% | $2,776 | $5,731 | 206.43% |
| 4 | Triton Ins Co | 41211 | TX | $1,160 | 6.50% | $945 | $273 | 28.89% |
| 5 | Yosemite Ins Co | 26220 | IN | $750 | 4.20% | $387 | $245 | 63.29% |
| 6 | Central States Ind Co Of Omaha | 34274 | NE | $730 | 4.09% | $731 | ($24) | (3.35)% |
| 7 | American Road Ins Co | 19631 | MI | $506 | 2.83% | $506 | $42 | 8.29% |
| 8 | Courtesy Ins Co | 26492 | FL | $381 | 2.13% | $286 | $48 | 16.72% |
| 9 | St Paul Fire & Marine Ins Co | 24767 | CT | $289 | 1.62% | $275 | $42 | 15.18% |
| 10 | Allstate Prop & Cas Ins Co | 17230 | IL | $210 | 1.17% | $215 | $0 | 0.00% |
| 11 | Ace Amer Ins Co | 22667 | PA | $207 | 1.16% | $207 | $8 | 3.96% |
| 12 | Esurance Ins Co | 25712 | WI | $128 | 0.72% | $128 | $0 | 0.00% |
| 13 | Stonebridge Cas Ins Co | 10952 | OH | $70 | 0.39% | $70 | ($1) | (0.85)% |
| 14 | Excess Share Ins Corp | 10003 | OH | $59 | 0.33% | $59 | $0 | 0.00% |
| 15 | American Bankers Ins Co Of FL | 10111 | FL | $44 | 0.25% | $44 | $2 | 5.54% |
| 16 | Great Amer Ins Co | 16691 | OH | $16 | 0.09% | ($46) | $274 | (589.70)% |
| 17 | Markel Ins Co | 38970 | IL | $5 | 0.03% | $2 | $0 | 7.68% |
| 18 | American Reliable Ins Co | 19615 | AZ | $4 | 0.02% | $4 | $0 | (0.69)% |
| 19 | Great Amer Assur Co | 26344 | OH | ($3) | (0.02)% | $93 | $30 | 32.29% |
| | All 5 Other Companies | | | $1 | 0.01% | $1 | ($35) | (2587.29)% |
| | Totals (Loss Ratio is average) | | | $17,852 | 100.00% | $16,895 | $11,107 | 65.74% |

(1) Excluding all Loss Adjustment Expenses (LAE). Copyright 1990 - 2014 National Association of Insurance Commissioners. -

Windows NLS Considerations Version 2.1

Windows NLS Considerations version 2.1 Radoslav Rusinov. Contents: 1. Introduction; 1.1. Windows and Code Pages; 1.2. CharacterSet; 1.3. Encoding Scheme; 1.4. Fonts; 1.5. So Why Are There Different Charactersets?; 1.6. What are the Difference Between 7 bit, 8 bit and Unicode Charactersets?; 2. NLS_LANG; 2.1. Setting the Character Set in NLS_LANG; 2.2. Where is the Character Conversion Done? -

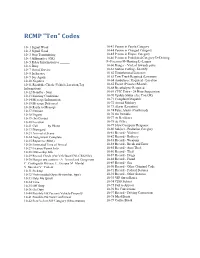

RCMP Ten Codes

RCMP "Ten" Codes: 10-1 Signal Weak; 10-2 Signal Good; 10-3 Stop Transmitting; 10-4 Affirmative (OK); 10-5 Relay Information to ______; 10-6 Busy; 10-7 Out of Service; 10-8 In Service; 10-9 Say Again; 10-10 Negative; 10-11 Roadside Check (Vehicle, Location, Tag Information); 10-12 Standby - Stop; 10-13 Existing Conditions; 10-14 Message/Information; 10-15 Message Delivered; 10-16 Reply to Message; 10-17 Enroute; 10-18 Urgent; 10-19 (In) Contact; 10-20 Location; 10-21 Call _____ by Phone; 10-22 Disregard; 10-23 Arrived at Scene; 10-24 Assignment Complete; 10-25 Report to (Meet); 10-26 Estimated Time of Arrival; 10-27 Licence/Permit Info; 10-28 Ownership Info; 10-29 Record Check (Per/Veh/Boat/CNI-CRS File); 10-30 Danger; 10-43 Person in Parole Category; 10-44 Person in Charged Category; 10-45 Person in Elopee Category; 10-46 Person in Prohibited Category (D=Driving, F=Firearms, H=Hunting, L=Liquor); 10-60 Danger - Violent towards police; 10-61 Station Calling - Identify; 10-62 Unauthorized Listeners; 10-63 Tow Truck Required (Location); 10-64 Ambulance Required - Location; 10-65 Escort (Prisoner/Mental); 10-68 Breathalyzer Required; 10-69 CPIC Entry - 24 Hour Suspension; 10-70 Update Status (Are You OK); 10-71 Complaint Dispatch; 10-72 Armed Robbery; 10-73 Alarm (Location); 10-74 False Alarm (Confirmed); 10-76 On Portable; 10-77 At Residence; 10-78 At Office; 10-79 Slow Computer Response; 10-80 Subject - Probation Category; 10-81 Record - Violence; 10-82 Record - Robbery; 10-83 Record - Weapons; 10-84 Record - Break and Enter; 10-85 Record - Auto Theft; 10-86 Record - Theft; 10-87 Record - Drugs -

ArcSDE Configuration and Tuning Guide for Oracle

ArcSDE® Configuration and Tuning Guide for Oracle® ArcGIS® 9 Copyright © 1999-2004 ESRI All Rights Reserved. Printed in the United States of America. The information contained in this document is the exclusive property of ESRI. This work is protected under United States copyright law and the copyright laws of the given countries of origin and applicable international laws, treaties, and/or conventions. No part of this work may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying or recording, or by any information storage or retrieval system, except as expressly permitted in writing by ESRI. All requests should be sent to Attention: Contracts Manager, ESRI, 380 New York Street, Redlands, CA 92373-8100, USA. The information contained in this document is subject to change without notice. U. S. GOVERNMENT RESTRICTED/LIMITED RIGHTS Any software, documentation, and/or data delivered hereunder is subject to the terms of the License Agreement. In no event shall the U.S. Government acquire greater than RESTRICTED/LIMITED RIGHTS. At a minimum, use, duplication, or disclosure by the U.S. Government is subject to restrictions as set forth in FAR §52.227-14 Alternates I, II, and III (JUN 1987); FAR §52.227-19 (JUN 1987) and/or FAR §12.211/12.212 (Commercial Technical Data/Computer Software); and DFARS §252.227-7015 (NOV 1995) (Technical Data) and/or DFARS §227.7202 (Computer Software), as applicable. Contractor/Manufacturer is ESRI, 380 New York Street, Redlands, CA 92373-8100, USA. ESRI, ArcView, ArcIMS, ArcInfo Librarian, MapObjects, ArcInfo, ArcSDE, ArcCatalog, ArcMap, ArcToolbox, ArcStorm, and ArcGIS are trademarks, registered trademarks, or service marks of ESRI in the United States, the European Community, or certain other jurisdictions. -

Character Encoding

International Spectrum: The MultiValue Technology Magazine, Mar/Apr 2011. $7.00 U.S. Character Encoding: Getting Ready for the World Stage. Inside: Developing User Interface Standards. Plus! Who Owns the Data? intl-spectrum.com Advanced database technology for breakthrough applications. This makes applications fly. Embed our post-relational database if you want your next application to have breakthrough features, run with blazing speed, be massively scalable and require minimal administration. InterSystems Caché® has advanced object technology that makes it easier to build applications with XML, Web services, AJAX, Java, and .NET. And Caché can run SQL up to 5 times faster than relational databases. With its unique Unified Data Architecture™, Caché eliminates the need for object-relational mapping. Which can reduce your development cycle by as much as 40%. Caché is available for all major platforms – and it supports MultiValue development. Caché is deployed on more than 100,000 systems worldwide, ranging from two to over 50,000 users. For over 30 years, we’ve provided advanced software technologies for breakthrough applications. Visit us at the International Spectrum Conference, April 4-7, 2011, West Palm Beach, Florida. Download a free, fully functional, no-time-limit copy of Caché, or request it on DVD, at InterSystems.com/Advanced6WW © 2011 InterSystems Corporation. All rights reserved. InterSystems Caché is a registered trademark of InterSystems Corporation. International Spectrum: The MultiValue Technology Magazine, Features, March/April 2011. 6 Character Encoding: It’s a small world and getting smaller, especially thanks to the Internet and web-enabled applications. 10 Business Tech: User Ownership of Data: Gone are the days when the Data Processing department was both keeper and defender -

TimesTen Reference

Oracle® TimesTen In-Memory Database Reference 11g Release 2 (11.2.2) E21643-05 December 2011 Oracle TimesTen In-Memory Database Reference, 11g Release 2 (11.2.2) E21643-05 Copyright © 2011, Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, the following notice is applicable: U.S. GOVERNMENT RIGHTS Programs, software, databases, and related documentation and technical data delivered to U.S. Government customers are "commercial computer software" or "commercial technical data" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, the use, duplication, disclosure, modification, and adaptation shall be subject to the restrictions and license terms set forth in the applicable Government contract, and, to the extent applicable by the terms of the Government contract, the additional rights set forth in FAR 52.227-19, Commercial Computer Software License (December 2007). -

International Character Code Standard for the BE2

CMU-ITC-87-091 International Character Code Standard for the BE2 June 18, 1987 Tomas Centerlind Information Technology Center (ITC) Carnegie Mellon University 1. Major problems with foreign languages All European languages have a set of unique characters, even Great Britain with their Pound sign. Most of these characters are static and do not change if they are in the end or in the middle of the word. The Greek sigma sign however is an example of a character that changes look depending on the position. If we move on to the non-Roman alphabets like Arabic, they have a more complex structure. A basic rule is that certain of the characters are written together if they follow each other but not otherwise. This complicates representation and requires look ahead. In addition to this many of the languages have leftwards or downwards writing. All together these properties make it very difficult to integrate them with Roman languages. Lots of guidelines have to be established to solve these problems, and before any coding can be done a set of standards must be selected or defined. In this paper I intend to gather all that I can think of that must be considered before selecting standards. Only the basic level of the implementation will be designed, so therefore routines that for example display complex languages like Arabic must be written by the user. A basic method that displays Roman script correctly will be supported. 1. Standards 1.1 Existing standards Following is a list of currently existing and used standards. 1.1.1 ASCII, ISO646 The ASCII standard, that is in use almost anywhere, will probably have to remain as a basic part of the system, and without any doubt it must be possible to read and write ASCII coded documents in the foreseeable future. -

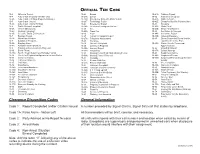

Official Ten Codes.Pdf

OFFICIAL TEN CODE: 10-3 Officer in Trouble; 10-4 Auto Crash (Property Damage only); 10-4A Auto Crash (Hit Skip, Property Damage); 10-5 Auto Crash (Injury); 10-5A Auto Crash (Injury Hit Skip); 10-6 Traffic Violator/Complaint; 10-6A Vehicle Obstructing; 10-6B Parking Complaint; 10-6S Selective Traffic Enforcement; 10-7 Burglary Report; 10-7A Open Door/Window; 10-8 Burglary in Progress; 10-8A Burglary Alarm; 10-8B Burglary-Vacant Structure; 10-9 Fraudulent Documents (In Progress); 10-10 Bomb Threat; 10-10A Bomb Threat (Suspicious Package Found); 10-12 Check for Registration/Operator’s License/Stolen; 10-13 Check for Tickets; 10-14; 10-23 Errand; 10-23A Escort; 10-23B Emergency Entry into Motor Vehicle; 10-23T Technology Repair; 10-24 Emergency Squad; 10-24A Infectious/Contagious Disease; 10-25 Fire; 10-25A Trash Fire; 10-26 Fight; 10-27 Assault or Hospital Report; 10-27A Telephone Harassment; 10-28 Homicide; 10-29 Juvenile Complaint; 10-30 Larceny in Progress; 10-30A Larceny Report; 10-30B Shoplifting; 10-31 Missing Person/High-Risk Missing Person; 10-31A Missing Person Returned; 10-32 Message; 10-41A Robbery Report; 10-42 Robbery in Progress; 10-42A Robbery Alarm; 10-42E Electronic Satellite Robbery Alert; 10-43 Shooting; 10-43A Shots Fired; 10-43B Shots Fired/Hunters; 10-44 Sex Crime in Progress; 10-44A Sex Crime Report; 10-44B Indecent Exposure; 10-45 Stolen/Suspected Stolen Vehicle, Lost/Stolen License Plate; 10-45A Stolen Vehicle Recovered/Apprehensions; 10-46 Stranded Motorist; 10-47A Suicide Attempt; 10-48 Suspicious Vehicle; 10-48A Suspicious Person; 10-48G Suspected Threat Group Member/ -

When You Advertise in the Yellow Pages...You Buy

When you advertise in the Yellow Pages... ...you buy High Circulation Constant Readership - Your advertising is available to your customers, or prospective customers, 24 hours a day, every day. Convenience - Better customer service rendered by making it easy for people to find you. Good Will - Your advertisement in the Yellow Pages tells the buyers that you are interested in their buying problems. A Missing Link - That constantly ties your customer, your product, services, and other advertising together in a solid chain of merchandising. Point of Purchase Advertising - You reach your customer at the Point of Purchase when they need your advertising message most. Insurance - Against fluctuating markets because you always reach the spendable income groups regardless of business conditions. Share of the Market - The one factor that creates growth for all businesses and keeps them healthy. Yellow Pages © The Mountain States Telephone and Telegraph Company 1973 the power of the , adults used the Yellow Pages to locate products and services. they averaged 40 references per year of these references were followed by action! of the references to the Yellow Pages were made with a name in mind % of the references were made without a name in mind. Based on a study conducted by an independent research organization, Audits and Surveys Co., New York, N.Y. Do you know that you can reach 3 out of 4 of your buyers through the Yellow Pages? Buyers looking for you because: YOU WERE RECOMMENDED; YOUR ADVERTISING REACHED THEM; YOUR SALES OFFICE CONTACTED THEM; THEY HAVE PASSED BY; THEY ARE FORMER CUSTOMERS. Buyers looking for anyone, who are: NEWCOMERS, VISITORS OR YOUNG ADULTS; EMERGENCY BUYERS; DISSATISFIED WITH A COMPETITOR; INFREQUENT BUYERS; COMPARISON SHOPPERS. Here's how to make sure these buyers find you: These buyers are influenced by: 1.