Best Practices in Pilot Selection

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Carol Raskin

Carol Raskin Artistic Images Make-Up and Hair Artists, Inc. Miami – Office: (305) 216-4985 Miami - Cell: (305) 216-4985 Los Angeles - Office: (310) 597-1801 FILMS DATE FILM DIRECTOR PRODUCTION ROLE 2019 Fear of Rain Castille Landon Pinstripe Productions Department Head Hair (Kathrine Heigl, Madison Iseman, Israel Broussard, Harry Connick Jr.) 2018 Critical Thinking John Leguizamo Critical Thinking LLC Department Head Hair (John Leguizamo, Michael Williams, Corwin Tuggles, Zora Casebere, Ramses Jimenez, William Hochman) The Last Thing He Wanted Dee Rees Elena McMahon Productions Additional Hair (Miami) (Anne Hathaway, Ben Affleck, Willem Dafoe, Toby Jones, Rosie Perez) Waves Trey Edward Shults Ultralight Beam LLC Key Hair (Sterling K. Brown, Kevin Harrison, Jr., Alexa Demie, Renee Goldsberry) The One and Only Ivan Thea Sharrock Big Time Mall Productions/Headliner Additional Hair (Bryan Cranston, Ariana Greenblatt, Ramon Rodriguez) (U. S. unit) 2017 The Florida Project Sean Baker The Florida Project, Inc. Department Head Hair (Willem Dafoe, Bria Vinaite, Mela Murder, Brooklynn Prince) Untitled Detroit City Yann Demange Detroit City Productions, LLC Additional Hair (Miami) (Richie Merritt Jr., Matthew McConaughey, Taylour Paige, Eddie Marsan, Alan Bomar Jones) 2016 Baywatch Seth Gordon Paramount Worldwide Additional Hair (Florida) (Dwayne Johnson, Zac Efron, Alexandra Daddario, David Hasselhoff) Production, Inc. 2015 The Infiltrator Brad Furman Good Films Production Department Head Hair (Bryan Cranston, John Leguizamo, Benjamin Bratt, Olympia -

The Regime Change Consensus: Iraq in American Politics, 1990-2003

THE REGIME CHANGE CONSENSUS: IRAQ IN AMERICAN POLITICS, 1990-2003 Joseph Stieb A dissertation submitted to the faculty at the University of North Carolina at Chapel Hill in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Department of History in the College of Arts and Sciences. Chapel Hill 2019 Approved by: Wayne Lee Michael Morgan Benjamin Waterhouse Daniel Bolger Hal Brands ©2019 Joseph David Stieb ALL RIGHTS RESERVED ii ABSTRACT Joseph David Stieb: The Regime Change Consensus: Iraq in American Politics, 1990-2003 (Under the direction of Wayne Lee) This study examines the containment policy that the United States and its allies imposed on Iraq after the 1991 Gulf War and argues for a new understanding of why the United States invaded Iraq in 2003. At the core of this story is a political puzzle: Why did a largely successful policy that mostly stripped Iraq of its unconventional weapons lose support in American politics to the point that the policy itself became less effective? I argue that, within intellectual and policymaking circles, a claim steadily emerged that the only solution to the Iraqi threat was regime change and democratization. While this “regime change consensus” was not part of the original containment policy, a cohort of intellectuals and policymakers assembled political support for the idea that Saddam’s personality and the totalitarian nature of the Baathist regime made Iraq uniquely immune to “management” strategies like containment. The entrenchment of this consensus before 9/11 helps explain why so many politicians, policymakers, and intellectuals rejected containment after 9/11 and embraced regime change and invasion. -

“Be Careful What You Wish For.” An Experiential Exercise in Personnel Selection

“Be careful what you wish for.” An experiential exercise in personnel selection. Submitted to the Experiential Learning Association, Eastern Academy of Management. Abstract: Personnel selection is a key topic in Human Resource Management (HRM) courses. This exercise intends to help students in HRM courses understand fundamental tasks in the selection process. Groups of students act as management teams to determine the suitability of applicants for a job posting for the position of instructor for a future offering of an HRM course. At the start of the exercise, the tasks include determining desirable qualifications and developing and ranking selection criteria based on the job posting and discussions among team members. Subsequently, each group reviews three resumes of fictitious candidates and ranks them based on the selection criteria. A group reflection and plenary discussion follow. Teaching notes, examples of classroom use and student responses are provided. Keywords: Personnel selection, HRM, experiential exercise. Selection is a key topic in Human Resource Management (HRM) courses and yet, selection exercises that can engage students in learning about the selection process are not abundant. Part of the challenge lies in providing students with a context to which they can relate. Many selection exercises focus on management situations that are unfamiliar for students in introductory HRM courses. Consequently, this exercise uses a context in which students have some knowledge – what they consider a good candidate for the position of sessional instructor for a future HRM course. -

The Lost Generation in American Foreign Policy How American Influence Has Declined, and What Can Be Done About It

September 2020 Perspective EXPERT INSIGHTS ON A TIMELY POLICY ISSUE JAMES DOBBINS, GABRIELLE TARINI, ALI WYNE The Lost Generation in American Foreign Policy How American Influence Has Declined, and What Can Be Done About It In the aftermath of World War II, the United States accepted the mantle of global leadership and worked to build a new global order based on the principles of nonaggression and open, nondiscriminatory trade. An early pillar of this new order was the Marshall Plan for European reconstruction, which British historian Norman Davies has called “an act of the most enlightened self-interest in history.”1 America’s leaders didn’t regard this as charity. They recognized that a more peaceful and more prosperous world would be in America’s self-interest. American willingness to shoulder the burdens of world leadership survived a costly stalemate in the Korean War and a still more costly defeat in Vietnam. It even survived the end of the Cold War, the original impetus for America’s global activism. But as a new century progressed, this support weakened, America’s influence slowly diminished, and eventually even the desire to exert global leadership waned. Over the past two decades, the United States experienced a dramatic drop-off in international achievement. A generation of Americans have come of age in an era in which foreign policy setbacks have been more frequent than advances. Awareness of America’s declining influence became commonplace among observers during the Barack Obama […] immunodeficiency virus (HIV) epidemic and by Obama with Ebola, has also been widely noted. -

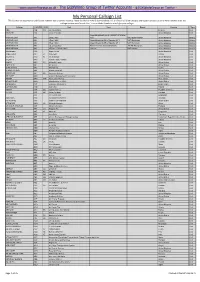

My Personal Callsign List This List Was Not Designed for Publication However Due to Several Requests I Have Decided to Make It Downloadable

- www.egxwinfogroup.co.uk - The EGXWinfo Group of Twitter Accounts - @EGXWinfoGroup on Twitter - My Personal Callsign List This list was not designed for publication however due to several requests I have decided to make it downloadable. It is a mixture of listed callsigns and logged callsigns so some have numbers after the callsign as they were heard. Use CTL+F in Adobe Reader to search for your callsign Callsign ICAO/PRI IATA Unit Type Based Country Type ABG AAB W9 Abelag Aviation Belgium Civil ARMYAIR AAC Army Air Corps United Kingdom Civil AgustaWestland Lynx AH.9A/AW159 Wildcat ARMYAIR 200# AAC 2Regt | AAC AH.1 AAC Middle Wallop United Kingdom Military ARMYAIR 300# AAC 3Regt | AAC AgustaWestland AH-64 Apache AH.1 RAF Wattisham United Kingdom Military ARMYAIR 400# AAC 4Regt | AAC AgustaWestland AH-64 Apache AH.1 RAF Wattisham United Kingdom Military ARMYAIR 500# AAC 5Regt AAC/RAF Britten-Norman Islander/Defender JHCFS Aldergrove United Kingdom Military ARMYAIR 600# AAC 657Sqn | JSFAW | AAC Various RAF Odiham United Kingdom Military Ambassador AAD Mann Air Ltd United Kingdom Civil AIGLE AZUR AAF ZI Aigle Azur France Civil ATLANTIC AAG KI Air Atlantique United Kingdom Civil ATLANTIC AAG Atlantic Flight Training United Kingdom Civil ALOHA AAH KH Aloha Air Cargo United States Civil BOREALIS AAI Air Aurora United States Civil ALFA SUDAN AAJ Alfa Airlines Sudan Civil ALASKA ISLAND AAK Alaska Island Air United States Civil AMERICAN AAL AA American Airlines United States Civil AM CORP AAM Aviation Management Corporation United States Civil -

Assessment Methods in Recruitment, Selection & Performance

ASSESSMENT METHODS IN RECRUITMENT, SELECTION & PERFORMANCE A manager’s guide to psychometric testing, interviews and assessment centres Robert Edenborough London and Sterling, VA To all the people whom I have studied, assessed and counselled over the last 40 years Publisher’s note Every possible effort has been made to ensure that the information contained in this book is accurate at the time of going to press, and the publisher and author cannot accept responsibility for any errors or omissions, however caused. No responsibility for loss or damage occasioned to any person acting, or refraining from action, as a result of the material in this publication can be accepted by the editor, the publisher or the author. First published in Great Britain and the United States in 2005 by Kogan Page Limited Apart from any fair dealing for the purposes of research or private study, or criticism or review, as permitted under the Copyright, Designs and Patents Act 1988, this publication may only be reproduced, stored or transmitted, in any form or by any means, with the prior permission in writing of the publishers, or in the case of reprographic reproduction in accordance with the terms and licences issued by the CLA. Enquiries concerning reproduction outside these terms should be sent to the publishers at the undermentioned addresses: 120 Pentonville Road, London N1 9JN, United Kingdom; 22883 Quicksilver Drive, Sterling VA 20166-2012, USA www.kogan-page.co.uk © Robert Edenborough, 2005 The right of Robert Edenborough to be identified as the author of this work has been asserted by him in accordance with the Copyright, Designs and Patents Act 1988. -

ASPPB 2016 JTA Report

Association of State and Provincial Psychology Boards Examination for Professional Practice in Psychology (EPPP) Job Task Analysis Report May 19, 2016–September 30, 2016 Prepared by: Pearson VUE November 2016 Copyright © 2016 NCS Pearson, Inc. All rights reserved. PEARSON logo is a trademark in the U.S. and/or other countries. Table of Contents: Scope of Work; Glossary; Executive Summary; Job Task Analysis (Expert Panel Meeting; Developing the Survey; Piloting the Survey; Administering the Survey; Analyzing the Survey; Test Specifications Meeting) -

Air Line Pilot: Official Journal of the Air Line Pilots Association, International

March 2015 Air Line Pilot: Official Journal of the Air Line Pilots Association, International. Safeguarding Our Skies, page 5. ALSO IN THIS ISSUE: » Landing Your Dream Job, page 20 » Known Crewmember, page 29 » Sleep Apnea Update, page 28. Follow us on Twitter @wearealpa. PRINTED IN THE U.S.A. Sponsored Airline-Career Track ATP offers the airline pilot career training solution with a career track from zero time to 1500 hours sponsored by ATP’s airline alliances. Airline Career month FAST TRACK Demand for airline pilots and ATP graduates is soaring, Pilot Program with the “1500 hour rule” and retirements at the majors. AIRLINES Airlines have selected ATP as a preferred training provider to build their pilot pipelines Private, Instrument, Commercial Multi Also available with... & Certified Flight Instructor (Single, Multi 100 Hours Multi-Engine Experience with the best training in the fastest & Instrument) time frame possible. 225 Hours Flight Time / 100 Multi 230 Hours Flight Time / 40 Multi In the Airline Career Pilot Program, your airline Gain Access to More Corporate, Guaranteed Flight Instructor Job Charter, & Multi-Engine Instructor interview takes place during the commercial phase Job Opportunities of training. Successful applicants will receive a Airline conditional offer of employment at commercial phase of training, based on building Fly Farther & Faster with Multi- conditional offer of employment from one or more of flight experience to 1500 hours in your guaranteed Engine Crew Cross-Country ATP’s airline alliances, plus a guaranteed instructor CFI job. See website for participating airlines, Experience job with ATP or a designated flight school to build admissions, eligibility, and performance requirements. -

The Pivot in Southeast Asia Balancing Interests and Values

WORKING PAPER The Pivot in Southeast Asia Balancing Interests and Values Joshua Kurlantzick January 2015 This publication has been made possible by a grant from the Open Society Foundations. The project on the pivot and human rights in Southeast Asia is also supported by the United States Institute of Peace. The Council on Foreign Relations (CFR) is an independent, nonpartisan membership organization, think tank, and publisher dedicated to being a resource for its members, government officials, business executives, journalists, educators and students, civic and religious leaders, and other interested citizens in order to help them better understand the world and the foreign policy choices facing the United States and other countries. Founded in 1921, CFR carries out its mission by maintaining a diverse membership, with special programs to promote interest and develop expertise in the next generation of foreign policy leaders; convening meetings at its headquarters in New York and in Washington, DC, and other cities where senior government officials, members of Congress, global leaders, and prominent thinkers come together with CFR members to discuss and debate major international issues; supporting a Studies Program that fosters independent research, enabling CFR scholars to produce articles, reports, and books and hold roundtables that analyze foreign policy issues and make concrete policy recommendations; publishing Foreign Affairs, the preeminent journal on international affairs and U.S. foreign policy; sponsoring Independent Task Forces that produce reports with both findings and policy prescriptions on the most important foreign policy topics; and providing up-to-date information and analysis about world events and American foreign policy on its website, CFR.org. -

Infant and Toddler Contracted Slots Pilot Program: Evaluation Report Chad Dorn, PhD

Infant and Toddler Contracted Slots Pilot Program: Evaluation Report Chad Dorn, PhD. August 2020 Contents: Acknowledgement; Overview of Infant Toddler Contracted Slots Pilot; The Evaluation; Implementation Science (IS) Framework; Building the capacity to effectively implement (Implementation Teams; Data-Informed Feedback Loops; Implementation Infrastructure); Data Collection and Timeline; Data Sources and Collection (Administrative Data; Program Director Survey – Pre and Post) -

Tracing Fairy Tales in Popular Culture Through the Depiction of Maternity in Three “Snow White” Variants

University of Louisville ThinkIR: The University of Louisville's Institutional Repository College of Arts & Sciences Senior Honors Theses College of Arts & Sciences 5-2014 Reflective tales : tracing fairy tales in popular culture through the depiction of maternity in three “Snow White” variants. Alexandra O'Keefe University of Louisville Follow this and additional works at: https://ir.library.louisville.edu/honors Part of the Children's and Young Adult Literature Commons, and the Comparative Literature Commons Recommended Citation O'Keefe, Alexandra, "Reflective tales : tracing fairy tales in popular culture through the depiction of maternity in three “Snow White” variants." (2014). College of Arts & Sciences Senior Honors Theses. Paper 62. http://doi.org/10.18297/honors/62 This Senior Honors Thesis is brought to you for free and open access by the College of Arts & Sciences at ThinkIR: The University of Louisville's Institutional Repository. It has been accepted for inclusion in College of Arts & Sciences Senior Honors Theses by an authorized administrator of ThinkIR: The University of Louisville's Institutional Repository. This title appears here courtesy of the author, who has retained all other copyrights. For more information, please contact [email protected]. Reflective Tales: Tracing Fairy Tales in Popular Culture through the Depiction of Maternity in Three “Snow White” Variants By Alexandra O’Keefe Submitted in partial fulfillment of the requirements for Graduation summa cum laude University of Louisville March, 2014 The ability to adapt to the culture they occupy as well as the two-dimensionality of literary fairy tales allows them to relate to readers on a more meaningful level. -

Aviation Finance Report 2015

AVIATION FINANCE REPORT 2015 The rising dollar and declining aircraft residual values are proving to be a drag on the industry’s recovery www.ainonline.com by Curt Epstein With the economic downturn now seven years in the rear-view mirror, the U.S. economy has continued its slow but steady recovery this year, reaching, in the eyes of most of the world, an enviable streak of 22 consecutive quarters of expansion. But the strengthening of the U.S. dollar has placed a chill on the international business jet market, which is still dealing with the effects of declining aircraft residual values. […] bellwethers such as the stock market indexes reached record levels in late May, when the Dow Jones Industrial Average hit 18,312 and the S&P 500 peaked at 2,131. Likewise, unemployment has dropped from a high of 10 percent in October 2009 to nearly half that now. “We continue to reduce the slack in the labor market that has persisted since the great recession,” […] U.S. economy. With that tightening of the labor market, more competition for workers should translate to rising wages, which would further stimulate the U.S. economy.” Despite the brightening economic picture in the U.S., for many the wounds from the downturn inspire caution in the decision-making processes. In this economic expansion, notes Wayne Starling, senior vice president and national sales manager for PNC Aviation Finance, “companies and individuals have retained more cash, deferred capital expenditures and deferred investment in plant, property and equipment” to a greater extent than