Symplecticity in Beam Dynamics: an Introduction*

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Covariant Hamiltonian Field Theory 3

COVARIANT HAMILTONIAN FIELD THEORY. JÜRGEN STRUCKMEIER and ANDREAS REDELBACH, GSI Helmholtzzentrum für Schwerionenforschung GmbH, Planckstr. 1, 64291 Darmstadt, Germany, and Johann Wolfgang Goethe-Universität Frankfurt am Main, Max-von-Laue-Str. 1, 60438 Frankfurt am Main, Germany. Received 18 July 2007. Revised 14 December 2020. arXiv:0811.0508v6 [math-ph] 15 Dec 2020. A consistent, local coordinate formulation of covariant Hamiltonian field theory is presented. Whereas the covariant canonical field equations are equivalent to the Euler-Lagrange field equations, the covariant canonical transformation theory offers more general means for defining mappings that preserve the form of the field equations than the usual Lagrangian description. It is proved that Poisson brackets, Lagrange brackets, and canonical 2-forms exist that are invariant under canonical transformations of the fields. The technique to derive transformation rules for the fields from generating functions is demonstrated by means of various examples. In particular, it is shown that the infinitesimal canonical transformation furnishes the most general form of Noether’s theorem. We furthermore specify the generating function of an infinitesimal space-time step that conforms to the field equations. Keywords: Field theory; Hamiltonian density; covariant. PACS numbers: 11.10.Ef, 11.15.Kc. 1. Introduction. Relativistic field theories and gauge theories are commonly formulated on the basis of a Lagrangian density $\mathcal{L}$ [1, 2, 3, 4]. The space-time evolution of the fields is obtained by integrating the Euler-Lagrange field equations that follow from the four-dimensional representation of Hamilton’s action principle.

Moon-Earth-Sun: the Oldest Three-Body Problem

Moon-Earth-Sun: The oldest three-body problem. Martin C. Gutzwiller, IBM Research Center, Yorktown Heights, New York 10598. The daily motion of the Moon through the sky has many unusual features that a careful observer can discover without the help of instruments. The three different frequencies for the three degrees of freedom have been known very accurately for 3000 years, and the geometric explanation of the Greek astronomers was basically correct. Whereas Kepler’s laws are sufficient for describing the motion of the planets around the Sun, even the most obvious facts about the lunar motion cannot be understood without the gravitational attraction of both the Earth and the Sun. Newton discussed this problem at great length, and with mixed success; it was the only testing ground for his Universal Gravitation. This background for today’s many-body theory is discussed in some detail because all the guiding principles for our understanding can be traced to the earliest developments of astronomy. They are the oldest results of scientific inquiry, and they were the first ones to be confirmed by the great physicist-mathematicians of the 18th century. By a variety of methods, Laplace was able to claim complete agreement of celestial mechanics with the astronomical observations. Lagrange initiated a new trend wherein the mathematical problems of mechanics could all be solved by the same uniform process; canonical transformations eventually won the field. They were used for the first time on a large scale by Delaunay to find the ultimate solution of the lunar problem by perturbing the solution of the two-body Earth-Moon problem.

7.5 Lagrange Brackets

Chapter 7: Brackets. Module 1: Poisson brackets and Lagrange brackets. Brackets are a powerful and sophisticated tool in classical mechanics, particularly in the Hamiltonian formalism. They characterize canonical transformations by means of an operation known as a bracket, which is very useful in the mathematical formulation of classical mechanics and defines a powerful geometrical structure on phase space. Basically, a bracket is a mapping that takes two functions into a new function. Classical mechanics can indeed be reformulated in terms of brackets, and they provide a direct link between classical and quantum mechanics.

7.1 Poisson brackets. The Poisson bracket is an operation which takes two functions of phase space and time and produces a new function. Let us consider two functions $F_1(q_i, p_i, t)$ and $F_2(q_i, p_i, t)$. The Poisson bracket is defined as

$$\{F_1, F_2\} = \sum_{i=1}^{n} \left( \frac{\partial F_1}{\partial q_i}\frac{\partial F_2}{\partial p_i} - \frac{\partial F_1}{\partial p_i}\frac{\partial F_2}{\partial q_i} \right). \tag{7.1}$$

For a single degree of freedom, the Poisson bracket takes the form

$$\{F_1, F_2\} = \frac{\partial F_1}{\partial q}\frac{\partial F_2}{\partial p} - \frac{\partial F_1}{\partial p}\frac{\partial F_2}{\partial q}.$$

Each term of the Poisson bracket contains one derivative of $F_1$ and one derivative of $F_2$. One derivative is taken with respect to a coordinate $q$ and the other with respect to the conjugate momentum $p$. The sign of a term depends on which function is differentiated with respect to the coordinate and which with respect to the momentum. Remark: Some authors use square brackets to represent the Poisson bracket, i.e. instead of $\{F_1, F_2\}$ they prefer to write $[F_1, F_2]$. Example 1: Let $F_1(q, p) = p \,\ldots\, q$, $F_2(q, p) = \sin q$.
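The single-degree-of-freedom bracket described above can be checked numerically. The following is a minimal sketch, not taken from the source: the helper name `poisson_bracket`, the step size `h`, and the three test functions are assumptions chosen for illustration (they are not the functions of Example 1), and the partial derivatives are approximated by central differences.

```python
import math


def poisson_bracket(f1, f2, q, p, h=1e-5):
    """Approximate {f1, f2} at the point (q, p) for one degree of freedom.

    Implements {f1, f2} = df1/dq * df2/dp - df1/dp * df2/dq,
    with each partial derivative replaced by a central difference.
    """
    df1_dq = (f1(q + h, p) - f1(q - h, p)) / (2 * h)
    df1_dp = (f1(q, p + h) - f1(q, p - h)) / (2 * h)
    df2_dq = (f2(q + h, p) - f2(q - h, p)) / (2 * h)
    df2_dp = (f2(q, p + h) - f2(q, p - h)) / (2 * h)
    return df1_dq * df2_dp - df1_dp * df2_dq


# Hypothetical test functions on phase space (illustration only).
def coordinate(q, p):
    return q


def momentum(q, p):
    return p


def sine_of_q(q, p):
    return math.sin(q)


q0, p0 = 0.7, -1.3  # arbitrary evaluation point

fundamental = poisson_bracket(coordinate, momentum, q0, p0)  # analytically +1
swapped = poisson_bracket(momentum, coordinate, q0, p0)      # analytically -1
p_with_sin = poisson_bracket(momentum, sine_of_q, q0, p0)    # analytically -cos(q0)
```

Swapping the two arguments flips the sign, which is the antisymmetry that the sign change in the definition encodes.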

Canonical Transformations

2 CANONICAL TRANSFORMATIONS. As for a generic system of differential equations, coordinate transformations can be used in order to bring the system to a simpler form. If the system is Hamiltonian it is desirable to keep the Hamiltonian form of the equations when the system is transformed. The search for a class of transformations satisfying the latter property leads to introducing the group of the so-called canonical transformations. The condition of canonicity can be expressed in terms of Poisson brackets, Lagrange brackets and differential forms. In this chapter I will discuss five different criteria of canonicity. Precisely, a coordinate transformation is canonical in case: (i) the Jacobian matrix of the transformation is a symplectic matrix; (ii) the transformation preserves the Poisson brackets; (iii) the transformation preserves the Lagrange brackets; (iv) the transformation preserves the differential 2–form $\sum_j dp_j \wedge dq_j$; (v) the transformation preserves the integral over a closed curve of the 1–form $\sum_j p_j\,dq_j$. A useful method for constructing canonical transformations is furnished by the theory of generating functions. This is the basis for further development of the integration methods, eventually leading to the Hamilton–Jacobi equation. 2.1 Elements of symplectic geometry. Before entering the discussion of canonical transformations we recall a few aspects of symplectic geometry that will be useful. A relevant role is played by the bilinear antisymmetric form induced by the matrix J introduced in sect. 1.1.3, formula (1.16). Symplectic geometry is characterized by the skew-symmetric matrix J in the same sense as Euclidean geometry is characterized by the identity matrix I.
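Criterion (i) can be illustrated for one degree of freedom. The sketch below is an illustration under stated assumptions rather than the author's construction: it takes the ordering $(q, p)$, the sign convention $J = \begin{pmatrix} 0 & 1 \\ -1 & 0 \end{pmatrix}$, and the usual symplectic condition $M^{T} J M = J$ for the Jacobian matrix $M$. The helper names and the three sample matrices are hypothetical.

```python
import math


def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


# Assumed convention: phase-space ordering (q, p), J = [[0, 1], [-1, 0]].
J = [[0.0, 1.0], [-1.0, 0.0]]


def is_symplectic(m, tol=1e-12):
    """Return True if M^T J M equals J within the tolerance."""
    lhs = matmul(matmul(transpose(m), J), m)
    return all(abs(lhs[i][j] - J[i][j]) <= tol
               for i in range(2) for j in range(2))


theta = 0.3
# Jacobian of the linear map Q = q cos(theta) + p sin(theta),
#                            P = -q sin(theta) + p cos(theta).
rotation = [[math.cos(theta), math.sin(theta)],
            [-math.sin(theta), math.cos(theta)]]
# Q = 2q, P = p/2: stretches q and compresses p by the same factor.
squeeze = [[2.0, 0.0], [0.0, 0.5]]
# Q = 2q, P = 2p: scales both variables, so phase-space area grows by 4.
uniform_scaling = [[2.0, 0.0], [0.0, 2.0]]

rotation_ok = is_symplectic(rotation)
squeeze_ok = is_symplectic(squeeze)
scaling_ok = is_symplectic(uniform_scaling)
```

For a 2×2 matrix the condition reduces to $\det M = 1$, which is why the rotation and the squeeze pass the check while the uniform scaling does not.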

Cocycles, Compatibility, and Poisson Brackets for Complex Fluids

Cocycles, Compatibility, and Poisson Brackets for Complex Fluids. Hernán Cendra, Jerrold Marsden, Tudor S. Ratiu. Dedicated to Alan Weinstein on the Occasion of his 60th Birthday. Vienna, August, 2003. Abstract: Motivated by Poisson structures for complex fluids containing cocycles, such as the Poisson structure for spin glasses given by Holm and Kupershmidt in 1988, we investigate a general construction of Poisson brackets with cocycles. Connections with the construction of compatible brackets found in the theory of integrable systems are also briefly discussed. 1 Introduction. Purpose of this Paper. The goal of this paper is to explore a general construction of Poisson brackets with cocycles and to link it to questions of compatibility similar to those encountered in the theory of integrable systems. The study is motivated by the specific brackets for spin glasses studied in Holm and Kupershmidt [1988]. In the body of the paper, we first describe several examples of Poisson brackets as reduced versions of, in principle, simpler brackets. Then we will show how this technique can be applied to study the compatibility of Poisson brackets. In the last section, we give the example of spin glasses and show that the Jacobi identity for the bracket given in Holm and Kupershmidt [1988] is a result of a cocycle identity. Background. It is a remarkable fact that many important examples of Poisson brackets are reduced versions of canonical Poisson brackets. Perhaps the most basic case of this may be described as follows.

9. Canonical Transformations

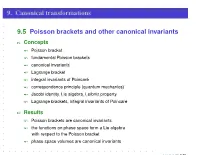

9. Canonical transformations

9.5 Poisson brackets and other canonical invariants

Concepts: Poisson bracket; fundamental Poisson brackets; canonical invariants; Lagrange bracket; integral invariants of Poincaré; correspondence principle (quantum mechanics); Jacobi identity, Lie algebra, Leibniz property; Lagrange brackets, integral invariants of Poincaré.

Results: Poisson brackets are canonical invariants; the functions on phase space form a Lie algebra with respect to the Poisson bracket; phase space volumes are canonical invariants.

JF 12 10 18 – p. 11/??

Formulas:

(9.67) $\{u, v\}_{q,p} = \sum_i \left( \frac{\partial u}{\partial q_i}\frac{\partial v}{\partial p_i} - \frac{\partial u}{\partial p_i}\frac{\partial v}{\partial q_i} \right)$

(9.68) $\{u, v\}_{\vec\eta} = \left( \frac{\partial u}{\partial \vec\eta} \right)^{T} J \, \frac{\partial v}{\partial \vec\eta}$

(9.69) $\{q_i, p_j\}_{q,p} = \delta_{i,j}$, $\quad \{p_i, p_j\}_{q,p} = 0 = \{q_i, q_j\}_{q,p}$

(9.70) $\{\vec\eta, \vec\eta\}_{\vec\eta} = J$

9.6 Equations of motion, infinitesimal canonical transformations, conservation laws

Concepts: total time derivative.

Results: Poisson’s theorem: $u$ and $v$ conserved $\Longrightarrow$ $\{u, v\}$ conserved; time evolution as a continuous sequence of canonical transformations; existence of a canonical transformation to constant canonical variables; a constant of motion as the generating function of a canonical transformation.

Formulas:

(9.94) $\frac{du}{dt} = \{u, H\} + \frac{\partial u}{\partial t}$

(9.95) $\dot{\vec\eta} = \{\vec\eta, H\}$

(9.97) $\{H, u\} = \frac{\partial u}{\partial t}$ ($u$ conserved)

(9.100) $\delta\vec\eta = \varepsilon \, \{\vec\eta, G\}$
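The matrix form (9.68) and the fundamental brackets (9.69) and (9.70) lend themselves to a quick numerical check. The sketch below rests on assumptions not stated in the slides: the ordering $\vec\eta = (q_1, q_2, p_1, p_2)$, the block form $J = \begin{pmatrix} 0 & I \\ -I & 0 \end{pmatrix}$, and finite-difference gradients. The helper names, the sample point, and the test functions (an isotropic two-dimensional oscillator and its angular momentum) are hypothetical.

```python
def gradient(f, eta, h=1e-6):
    """Central-difference gradient of f with respect to the vector eta."""
    grad = []
    for k in range(len(eta)):
        up = list(eta)
        down = list(eta)
        up[k] += h
        down[k] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad


def symplectic_matrix(n):
    """2n x 2n matrix [[0, I], [-I, 0]] for eta = (q_1..q_n, p_1..p_n)."""
    size = 2 * n
    J = [[0.0] * size for _ in range(size)]
    for i in range(n):
        J[i][n + i] = 1.0
        J[n + i][i] = -1.0
    return J


def bracket(u, v, eta):
    """Evaluate {u, v} = (du/d eta)^T J (dv/d eta) at the point eta."""
    size = len(eta)
    J = symplectic_matrix(size // 2)
    gu, gv = gradient(u, eta), gradient(v, eta)
    return sum(gu[a] * J[a][b] * gv[b]
               for a in range(size) for b in range(size))


def component(k):
    """Return the function eta -> eta[k]."""
    return lambda eta: eta[k]


eta0 = [0.4, -0.2, 1.1, 0.9]  # arbitrary point (q1, q2, p1, p2)

# Matrix of brackets between components of eta; (9.70) says it equals J.
fundamental = [[bracket(component(a), component(b), eta0) for b in range(4)]
               for a in range(4)]


def hamiltonian(eta):
    q1, q2, p1, p2 = eta
    return 0.5 * (p1 ** 2 + p2 ** 2) + 0.5 * (q1 ** 2 + q2 ** 2)


def angular_momentum(eta):
    q1, q2, p1, p2 = eta
    return q1 * p2 - q2 * p1


# With no explicit time dependence, (9.94) gives du/dt = {u, H};
# a vanishing bracket therefore signals a conserved quantity.
rate_of_change = bracket(angular_momentum, hamiltonian, eta0)
```

The matrix `fundamental` reproduces $J$ entry by entry, and `rate_of_change` vanishes up to rounding, consistent with angular momentum being conserved for this rotationally symmetric Hamiltonian.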

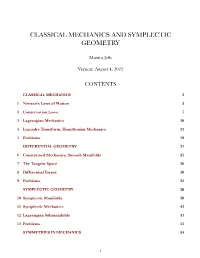

Classical Mechanics and Symplectic Geometry

CLASSICAL MECHANICS AND SYMPLECTIC GEOMETRY. Maxim Jeffs. Version: August 4, 2021.

CONTENTS. CLASSICAL MECHANICS: 1 Newton’s Laws of Motion; 2 Conservation Laws; 3 Lagrangian Mechanics; 4 Legendre Transform, Hamiltonian Mechanics; 5 Problems. DIFFERENTIAL GEOMETRY: 6 Constrained Mechanics, Smooth Manifolds; 7 The Tangent Space; 8 Differential Forms; 9 Problems. SYMPLECTIC GEOMETRY: 10 Symplectic Manifolds; 11 Symplectic Mechanics; 12 Lagrangian Submanifolds; 13 Problems. SYMMETRIES IN MECHANICS: 14 Lie Groups; 15 Hamiltonian Group Actions; 16 Marsden-Weinstein Theorem; 17 Arnol’d-Liouville Theorem; 18 The Hamilton-Jacobi Equation; 19 Problems. FURTHER TOPICS: 20 Pseudoholomorphic Curves; 21 Symplectic Topology; 22 Celestial Mechanics; 23 Rigid Body Mechanics; 24 Geometric Quantization; 25 Kolmogorov-Arnol’d-Moser Theory; 26 Symplectic Toric Manifolds; 27 Contact Manifolds; 28 Kähler Manifolds. Index. Bibliography.

1 NEWTON’S LAWS OF MOTION. "Use the Force, Luke." (Star Wars: A New Hope) Firstly, what is classical mechanics? Classical mechanics is that part of physics that describes the motion of large-scale bodies (much larger than the Planck length) moving slowly (much slower than the speed of light). The paradigm example is the motion of the celestial bodies; this is not only the oldest preoccupation of science, but also has very important practical applications to navigation which continue to the present day. We shall have much to say about this example in this class. More than this, classical mechanics is a complete mathematical theory of the universe as it appears at such scales, with a rich mathematical structure; it has been studied by almost every great mathematician from Euler to Hilbert.

Application of Geometric Algebra to Generalized Hamiltonian (Birkhoffian) Mechanics

Military Technical College, Kobry Elkobbah, Cairo, Egypt. 5th International Conference on Mathematics and Engineering Physics (ICMEP-5), May 25-27, 2010. EM-21. Application of Geometric Algebra to Generalized Hamiltonian (Birkhoffian) Mechanics. E. T. Abou El Dahab. Abstract: The basic concepts of generalized Hamiltonian (Birkhoffian) mechanics are derived using Geometric Algebra. Both the Generalized Poisson bracket (GPB) and the Generalized Lagrange bracket (GLB) are introduced. Similarly, the Generalized plus Poisson bracket (GPPB) and the Generalized plus Lagrange bracket (GPLB) are derived, which seem different from the forms given by other authors. Differential constraints are derived, one of which resembles that of the electromagnetic field strength. 1 Introduction. In this paper, the axioms of Geometric Algebra as outlined in [1, 2, 3, 4, 5, 6] are used to formulate generalized Hamiltonian (Birkhoffian) mechanics. In section 2 a brief introduction to Geometric Algebra is given. In section 3 a formulation of generalized Hamiltonian (Birkhoffian) mechanics on phase space is presented [7], [8], and it is shown how to obtain a simple form using Geometric Algebra. The generalized Poisson bracket (GPB) is introduced in terms of Geometric Algebra and in tensor form in terms of coordinates. Jacobi’s identity enables us to introduce some differential constraints on the constituents of the antisymmetric tensor defining the GPB. One of these constraints resembles the equation satisfied by the electromagnetic field strength. Similarly, the generalized Lagrange bracket (GLB) is introduced. Also, the generalized plus Poisson bracket as well as the generalized plus Lagrange bracket are introduced in terms of Geometric Algebra and in tensor form. In section 4 we introduce the Dirac quantization map, which is a generalization of what was introduced in [11].

Dequantized Differential Operators Between Tensor Densities As

Dequantized Differential Operators between Tensor Densities as Modules over the Lie Algebra of Contact Vector Fields. Yaël Frégier, Pierre Mathonet, Norbert Poncin. August 5, 2021. arXiv:0710.0222v1 [math.RT] 1 Oct 2007. Abstract: In recent years, algebras and modules of differential operators have been extensively studied. Equivariant quantization and dequantization establish a tight link between invariant operators connecting modules of differential operators on tensor densities, and module morphisms that connect the corresponding dequantized spaces. In this paper, we investigate dequantized differential operators as modules over a Lie subalgebra of vector fields that preserve an additional structure. More precisely, we take an interest in invariant operators between dequantized spaces, viewed as modules over the Lie subalgebra of infinitesimal contact or projective contact transformations. The principal symbols of these invariant operators are invariant tensor fields. We first provide a full description of the algebras of such affine-contact- and contact-invariant tensor fields. These characterizations allow showing that the algebra of projective-contact-invariant operators between dequantized spaces implemented by the same density weight is generated by the vertical cotangent lift of the contact form and a generalized contact Hamiltonian. As an application, we prove a second key result, which asserts that the Casimir operator of the Lie algebra of infinitesimal projective contact transformations is diagonal. Eventually, this upshot entails that invariant operators between spaces induced by different density weights are made up of a small number of building bricks that force the parameters of the source and target spaces to verify Diophantine-type equations.
Key-words: Modules of differential operators, tensor densities, contact geometry, invariant operators, representation theory of algebras, equivariant quantization and dequantization. MSC: 13N10, 53D10, 16G30. 1 Introduction. Equivariant quantization, in the sense of C.

Legendre Transformations Lagrange & Poisson Bracket

Chapter 6, Lecture 3: Legendre Transformations, Lagrange & Poisson Bracket. Dr. Akhlaq Hussain.

6.3 Legendre Transformations. The Lagrangian of the system is $L = L(q_i, \dot q_i, t)$, and Lagrange's equation reads

$$\frac{d}{dt}\left(\frac{\partial L}{\partial \dot q_i}\right) - \frac{\partial L}{\partial q_i} = 0,$$

where the momentum is

$$p_i = \frac{\partial L(q_i, \dot q_i, t)}{\partial \dot q_i}.$$

In the transition from the Lagrangian to the Hamiltonian, the variables change from $(q, \dot q, t)$ to $(q, p, t)$, where $p$ is related to $q$ and $\dot q$ by the equation above. This transformation process is known as the Legendre transformation.

Consider a function of only two variables, $f(x, y)$:

$$df = \frac{\partial f}{\partial x}\,dx + \frac{\partial f}{\partial y}\,dy = u\,dx + v\,dy.$$

If we wish to change the basis of description from $(x, y)$ to a new set of variables $(y, u)$, let $g(y, u)$ be a function of $u$ and $y$ defined as

$$g = f - ux. \tag{I}$$

Therefore $dg = df - x\,du - u\,dx$. Since $df = u\,dx + v\,dy$,

$$dg = u\,dx + v\,dy - x\,du - u\,dx = v\,dy - x\,du,$$

and the quantities $v$ and $x$ are now functions of $y$ and $u$. Comparing with

$$dg = \frac{\partial g}{\partial y}\,dy + \frac{\partial g}{\partial u}\,du$$

gives

$$\frac{\partial g}{\partial y} = v, \qquad \frac{\partial g}{\partial u} = -x.$$

Equation (I) represents the Legendre transformation. The Legendre transformation is frequently used in thermodynamics: $dU = T\,dS - P\,dV$ for $U(S, V)$; $H = U + PV$ with $H(S, P)$ and $dH = T\,dS + V\,dP$; $F = U - TS$ with $F(T, V)$; and $G = H - TS$ with $G(T, P)$. Here $U$ = internal energy, $T$ = temperature, $S$ = entropy, $P$ = pressure, $V$ = volume, $H$ = enthalpy, $F$ = Helmholtz free energy, $G$ = Gibbs free energy.

The transformation of $(q, \dot q, t)$ to $(q, p, t)$ is, however, different.
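The two-variable construction $g = f - ux$ can be verified numerically for a concrete function. The sketch below is an illustration only: the quadratic $f(x, y) = x^2 + xy + y^2$, the helper names, and the evaluation point are assumptions, chosen because $u = \partial f/\partial x = 2x + y$ can be inverted for $x$ by hand.

```python
def f(x, y):
    """Hypothetical example function, chosen for illustration only."""
    return x ** 2 + x * y + y ** 2


def x_of(y, u):
    """Invert u = df/dx = 2x + y to express x through (y, u)."""
    return (u - y) / 2.0


def g(y, u):
    """Legendre transform g = f - u*x, written in the new variables (y, u)."""
    x = x_of(y, u)
    return f(x, y) - u * x


def partial(func, args, index, h=1e-6):
    """Central-difference partial derivative of func in argument `index`."""
    up = list(args)
    down = list(args)
    up[index] += h
    down[index] -= h
    return (func(*up) - func(*down)) / (2 * h)


y0, u0 = 0.5, 2.0          # arbitrary point in the new variables
x0 = x_of(y0, u0)          # corresponding old variable x
v0 = x0 + 2.0 * y0         # v = df/dy = x + 2y at that point

dg_dy = partial(g, (y0, u0), 0)   # should reproduce v
dg_du = partial(g, (y0, u0), 1)   # should reproduce -x
```

With these choices `dg_dy` agrees with `v0` and `dg_du` with `-x0`, matching the relations $\partial g/\partial y = v$ and $\partial g/\partial u = -x$.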

Faculty of Mathematical and Statistical Sciences INSH

Faculty of Mathematical and Statistical Sciences, INSH. PROGRAMME: M.Sc. (Mathematics).

Programme Learning Objective. The Mathematics postgraduate curriculum should be such that it offers our students the following:
To train qualified, adaptable, motivated, and responsible mathematicians who will contribute to scientific and technological development.
To develop the mathematical skills needed in modeling and solving practical problems.
To prepare students to face the present challenges in different fields of Mathematics.
To develop an outlook and high-level study skills that will be hugely valuable whatever career path they follow after postgraduation.
To impart intensive and in-depth learning to the students in the field of Mathematics.
To develop students’ skills in dissertation writing.
The programme also provides students with the knowledge and opportunity to progress towards a Doctorate programme.

Programme Learning Outcomes. After completing the Mathematics postgraduate course, our students will be able to:
PLO1: Identify fundamental concepts of higher mathematics as applied to applied mathematics.
PLO2: Apply analytic, numerical and computational skills to analyze and solve higher mathematical problems.
PLO3: Apply knowledge of higher Mathematics with an integrative approach in diverse fields.
PLO4: Acquire appropriate skills in higher Mathematics to handle research-oriented scientific problems.
PLO5: Gain specific knowledge and understanding, determined by their particular choice of modules according to their particular needs and interests.
PLO6: Communicate scientific information effectively in written and oral formats.
PLO7: Use the basic and advanced knowledge attained as a foundation and inspiration to pursue higher studies.
PLO8: Apply their responsibilities in social and environmental contexts.

STUDY & EVALUATION SCHEME (Effective from the session 2017-2018). STUDY & EVALUATION SCHEME (Session 2019-2020). M.