Local Linear Spatial Regression

Recommended publications

Summary and Analysis of Extension Program Evaluation in R

Salvatore S. Mangiafico, Rutgers Cooperative Extension, New Brunswick, NJ. Version 1.18.8. ©2016 by Salvatore S. Mangiafico. Non-commercial reproduction of this content, with attribution, is permitted. For-profit reproduction without permission is prohibited. If you use the code or information in this site in a published work, please cite it as a source. Also, if you are an instructor and use this book in your course, please let me know. [email protected]

Mangiafico, S.S. 2016. Summary and Analysis of Extension Program Evaluation in R, version 1.18.8. rcompanion.org/documents/RHandbookProgramEvaluation.pdf. Web version: rcompanion.org/handbook/

List of Chapters: Table of Contents; Introduction; Purpose of This Book; Author of this Book and Version Notes; Using R

Chapter 5 Local Regression Trees

In this chapter we explore the hypothesis that the accuracy of regression trees can be improved by using smoother models at the tree leaves. Our proposal is to use local regression models to achieve this smoothness. We call the resulting hybrid models local regression trees. Local regression is a non-parametric statistical methodology that provides smooth modelling by not assuming any particular global form of the unknown regression function. Instead, these models fit a functional form within the neighbourhood of the query points. They are known to provide highly accurate predictions over a wide range of problems due to the absence of a “pre-defined” functional form. However, local regression techniques are also known for their computational cost, low comprehensibility and storage requirements. By integrating these techniques with regression trees, we not only improve the accuracy of the trees, but also increase the computational efficiency and comprehensibility of local models. In this chapter we describe the use of several alternative models for the leaves of regression trees. We study their behaviour in several domains and present their advantages and disadvantages. We show that local regression trees significantly improve the accuracy of “standard” trees at the cost of some additional computational requirements and some loss of comprehensibility. Moreover, local regression trees are also more accurate than trees that use linear models in the leaves. We have also observed that local regression trees are able to improve the accuracy of local modelling techniques in some domains. Compared to these latter models, our local regression trees improve computational efficiency, allowing the application of local modelling techniques to large data sets.

A Tutorial on Bayesian Multi-Model Linear Regression with BAS and JASP

Behavior Research Methods https://doi.org/10.3758/s13428-021-01552-2 A tutorial on Bayesian multi-model linear regression with BAS and JASP Don van den Bergh1 · Merlise A. Clyde2 · Akash R. Komarlu Narendra Gupta1 · Tim de Jong1 · Quentin F. Gronau1 · Maarten Marsman1 · Alexander Ly1,3 · Eric-Jan Wagenmakers1 Accepted: 21 January 2021 © The Author(s) 2021 Abstract Linear regression analyses commonly involve two consecutive stages of statistical inquiry. In the first stage, a single ‘best’ model is defined by a specific selection of relevant predictors; in the second stage, the regression coefficients of the winning model are used for prediction and for inference concerning the importance of the predictors. However, such second-stage inference ignores the model uncertainty from the first stage, resulting in overconfident parameter estimates that generalize poorly. These drawbacks can be overcome by model averaging, a technique that retains all models for inference, weighting each model’s contribution by its posterior probability. Although conceptually straightforward, model averaging is rarely used in applied research, possibly due to the lack of easily accessible software. To bridge the gap between theory and practice, we provide a tutorial on linear regression using Bayesian model averaging in JASP, based on the BAS package in R. Firstly, we provide theoretical background on linear regression, Bayesian inference, and Bayesian model averaging. Secondly, we demonstrate the method on an example data set from the World Happiness Report. Lastly, -
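The model-averaging idea in this abstract (retain all models and weight each by its posterior probability) can be written compactly. The notation below is an assumption introduced here for illustration and is not taken from the excerpt: $M_1, \dots, M_K$ are the candidate models, $D$ is the observed data, and $\tilde{y}$ is a new observation to be predicted.

```latex
% Model-averaged prediction: each model's prediction is weighted
% by that model's posterior probability.
p(\tilde{y} \mid D) = \sum_{k=1}^{K} p(\tilde{y} \mid M_k, D)\, p(M_k \mid D)

% Posterior model probability from Bayes' rule, with prior p(M_k)
% and marginal likelihood p(D \mid M_k).
p(M_k \mid D) = \frac{p(D \mid M_k)\, p(M_k)}{\sum_{j=1}^{K} p(D \mid M_j)\, p(M_j)}
```

Selecting a single "best" model corresponds to putting all of the weight on one $M_k$, which is why the second-stage inference described above ignores first-stage model uncertainty.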

Comparation on Several Smoothing Methods in Nonparametric Regression

R. Rizal Isnanto

Abstract – There are three nonparametric regression methods covered in this section. These are Moving Average Filtering-Based Smoothing, Local Regression Smoothing, and Kernel Smoothing Methods. The Moving Average Filtering-Based Smoothing methods discussed here are Moving Average Filtering and Savitzky-Golay Filtering. The Local Regression Smoothing techniques involved here are Lowess and Loess. In this type of smoothing, Robust Smoothing and Upper-and-Lower Smoothing are also explained in depth, in relation to Lowess and Loess. Finally, three Kernel Smoothing methods are discussed. These are the Nadaraya-Watson Estimator, the Priestley-Chao Estimator, and the Local Linear Kernel Estimator. The advantages of all the above methods are discussed as well as their disadvantages.

Keywords: nonparametric regression, smoothing, moving average, estimator, curve construction.

I. INTRODUCTION

Nowadays, the names “lowess” and “loess”, which are derived from the term “locally weighted scatterplot smoothing” because both methods use locally weighted linear regression to smooth data, are often discussed. [...] Smoothing Method, together with its variants, will also be analyzed. The advantages of all the above methods are discussed as well as their disadvantages.

II. MOVING AVERAGE FILTERING-BASED SMOOTHING

A moving average is defined as an artificially constructed time series in which the value for a given time period is replaced by the mean of that value and the values for some number of preceding and succeeding time periods [6].

Moving average filtering is the first of these smoothing techniques. A moving average filter smooths data by replacing each data point with the average of the neighboring data points defined within the span. This process is equivalent to lowpass filtering with the response of the smoothing given by the difference equation:

$$y_s(i) = \frac{1}{2N+1}\left(y(i+N) + y(i+N-1) + \dots + y(i-N)\right)$$

where $y_s(i)$ is the smoothed value for the $i$th data point, $N$ is the number of neighboring data points on either side
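As a rough illustration of the moving average filter described in this excerpt, the sketch below replaces each point with the mean of itself and up to `n` neighbours on either side. The function name `moving_average` and the edge handling (the window is simply truncated at the ends of the series) are assumptions made here; the excerpt does not say how the paper treats end points.

```python
def moving_average(y, n):
    """Centered moving average with up to n neighbours on each side.

    Interior points use the full span of 2*n + 1 values. Near the ends
    the window is truncated to the values that exist (an assumption of
    this sketch, not something stated in the excerpt).
    """
    if n < 0:
        raise ValueError("n must be non-negative")
    smoothed = []
    for i in range(len(y)):
        lo = max(0, i - n)            # first index inside the window
        hi = min(len(y), i + n + 1)   # one past the last index inside the window
        window = y[lo:hi]
        smoothed.append(sum(window) / len(window))
    return smoothed


print(moving_average([1, 2, 3, 4, 5], 1))  # [1.5, 2.0, 3.0, 4.0, 4.5]
```

A larger `n` gives a smoother result at the price of flattening genuine short-lived features, which is the usual trade-off when choosing the span.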

A Hierarchical Bayesian Linear Regression Model with Local Features for Stochastic Dynamics Approximation

arXiv:1807.03931v2 [cs.LG], 1 Aug 2018. A Hierarchical Bayesian Linear Regression Model with Local Features for Stochastic Dynamics Approximation. Behnoosh Parsa · Keshav Rajasekaran · Franziska Meier · Ashis G. Banerjee

Abstract: One of the challenges with model-based control of stochastic dynamical systems is that the state transition dynamics are involved, making it difficult and inefficient to make good-quality predictions of the states. Moreover, there are not many representational models for the majority of autonomous systems, as it is not easy to build a compact model that captures all the subtleties and uncertainties in the system dynamics. In this work, we present a hierarchical Bayesian linear regression model with local features to learn the dynamics of such systems. The model is hierarchical since we consider non-stationary priors for the model parameters, which increases its flexibility. To solve the maximum likelihood (ML) estimation problem for this hierarchical model, we use the variational expectation maximization (EM) algorithm, and enhance the procedure by introducing hidden target variables. The algorithm is guaranteed to converge to the optimal log-likelihood values under certain reasonable assumptions. It also yields parsimonious model structures, and consistently provides fast and accurate predictions for all our examples, including two illustrative systems and a challenging micro-robotic system, involving large training and test sets. These results demonstrate the effectiveness of the method in approximating stochastic dynamics, which makes it suitable for future use in a paradigm, such as model-based reinforcement learning, to compute optimal control policies in real time.

Incremental Local Gaussian Regression

Franziska Meier (1), Philipp Hennig (2), Stefan Schaal (1,2). [email protected] [email protected] [email protected]. (1) University of Southern California, Los Angeles, CA 90089, USA. (2) Max Planck Institute for Intelligent Systems, Spemannstraße 38, Tübingen, Germany.

Abstract: Locally weighted regression (LWR) was created as a nonparametric method that can approximate a wide range of functions, is computationally efficient, and can learn continually from very large amounts of incrementally collected data. As an interesting feature, LWR can regress on non-stationary functions, a beneficial property, for instance, in control problems. However, it does not provide a proper generative model for function values, and existing algorithms have a variety of manual tuning parameters that strongly influence bias, variance and learning speed of the results. Gaussian (process) regression, on the other hand, does provide a generative model with rather black-box automatic parameter tuning, but it has higher computational cost, especially for big data sets and if a non-stationary model is required. In this paper, we suggest a path from Gaussian (process) regression to locally weighted regression, where we retain the best of both approaches. Using a localizing function basis and approximate inference techniques, we build a Gaussian (process) regression algorithm of increasingly local nature and similar computational complexity to LWR. Empirical evaluations are performed on several synthetic and real robot datasets of increasing complexity and (big) data scale, and demonstrate that we consistently achieve on par or superior performance compared to current state-of-the-art methods while retaining a principled approach to fast incremental regression with minimal manual tuning parameters.

Locally Weighted Polynomial Regression: Parameter Choice and Application to Forecasts of the Great Salt Lake

Utah State University, DigitalCommons@USU. Reports, Utah Water Research Laboratory, January 1995. Upmanu Lall, Young-Il Moon and Ken Bosworth.

Recommended Citation: Lall, Upmanu; Moon, Young-Il; and Bosworth, Ken, "Locally Weighted Polynomial Regression: Parameter Choice and Application to Forecasts of the Great Salt Lake" (1995). Reports. Paper 243. https://digitalcommons.usu.edu/water_rep/243

Working Paper WP-95-HWR-UL/014, May 1995. Utah Water Research Laboratory, Utah State University, Logan, UT 84322-8200. Upmanu Lall and Young-Il Moon, Utah Water Research Lab. and Dept. of Civil and Environ. Eng., Utah State University, Logan UT 84322-8200; Ken Bosworth, Department of Mathematics, Idaho State University, Pocatello ID 83209-8085.

Abstract: Relationships between hydrologic variables are often nonlinear. Usually the functional form of such a relationship is not known a priori.

Baseline Subtraction Using Robust Local Regression Estimation

Journal of Quantitative Spectroscopy & Radiative Transfer 68 (2001) 179–193. Baseline subtraction using robust local regression estimation. Andreas F. Ruckstuhl (a,*), Matthew P. Jacobson (b), Robert W. Field (b), James A. Dodd (c). (a) Departement P, Zürcher Hochschule Winterthur (Zurich University of Applied Sciences), Postfach 805, 8401 Winterthur, Switzerland. (b) Department of Chemistry and G.R. Harrison Spectroscopy Laboratory, Massachusetts Institute of Technology, Cambridge, MA 02139, USA. (c) Space Vehicles Directorate, Air Force Research Laboratory, Hanscom Air Force Base, MA 01731-3010, USA. Received 6 September 1999.

Abstract: A technique entitled robust baseline estimation is introduced, which uses techniques of robust local regression to estimate baselines in spectra that consist of sharp features superimposed upon a continuous, slowly varying baseline. The technique is applied to synthetic spectra, to evaluate its capabilities, and to laser-induced fluorescence spectra of OH (produced from the reaction of ozone with hydrogen atoms). The latter example is a particularly challenging case for baseline estimation because the experimental noise varies as a function of frequency. © 2000 Elsevier Science Ltd. All rights reserved.

Keywords: Baseline removal; LOWESS; Robust fitting; Smoothing

1. Introduction

The robust baseline estimation (RBE) technique that is introduced in this paper is a technique for baseline removal; that is, in spectra that consist of sharp features superimposed upon a continuous, slowly varying baseline, it is designed to permit the separation of the two components, spectrum and baseline. There exist, of course, numerous techniques for baseline removal from spectra, because the problem is ubiquitous within spectroscopy. In many cases, baselines are simply removed 'by eye'. There is nothing inherently wrong with such an approach, but we believe that, even for a 'mundane' task such as baseline removal, it is beneficial to use numerical tools that minimize the need for judgement calls and permit reproduction of the results by others.

Automating the Smoothing of Time Series Data

Sharma et al., J Environ Anal Toxicol 2015, 5:5. DOI: 10.4172/2161-0525.1000304. Journal of Environmental & Analytical Toxicology, ISSN: 2161-0525. Research Article, Open Access. Shilpy Sharma*, David A Swayne and Charlie Obimbo, School of Computer Science, University of Guelph, Canada.

Abstract: Modelling requires comparison of model outputs to measurements, for calibration and verification. A key aspect of data smoothing is to “filter out” noise. Often, data must be adjusted to a model’s time step (e.g. hourly to daily). For noisy data, LOWESS/LOESS (Locally Weighted Scatterplot Smoothing) is a popular piecewise regression technique. It produces a “smoothed” time series. LOWESS/LOESS is often used to visually assess the relationship between variables. The selection of LOWESS tuning parameters is usually performed on a visual trial and error basis. We investigate the so-called robust AIC (Akaike Information Criterion) for automatic selection of smoothing. Robust Pearson correlation coefficient and mean-squared error are employed to determine the polynomial degree of piecewise regression. The exclusion of outliers is attempted using a Hampel outlier identifier. We illustrate, assuming noisy linear data, how our proposed methods work for auto-tuning both the smoothing parameter and the degree of polynomial for LOWESS/LOESS.

Keywords: LOWESS; LOESS; Data smoothing

[...] most efficient and robust method for identification of outliers [9].
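The abstract mentions a Hampel outlier identifier without giving details. A common textbook form flags a value when it lies more than `k` scaled median absolute deviations (MAD) from the median. The sketch below follows that form under stated assumptions: the function name `hampel_outliers`, the threshold `k = 3.0`, and applying the rule to the whole series at once (rather than in a sliding window) are choices made here for illustration, not details confirmed by the paper.

```python
import statistics


def hampel_outliers(values, k=3.0):
    """Return indices of values flagged by a global Hampel identifier.

    A value is flagged when |value - median| > k * 1.4826 * MAD, where
    MAD is the median absolute deviation and 1.4826 makes the MAD
    comparable to a standard deviation for Gaussian data.
    If the MAD is zero, every value that differs from the median is flagged.
    """
    med = statistics.median(values)
    abs_dev = [abs(v - med) for v in values]
    scale = 1.4826 * statistics.median(abs_dev)
    return [i for i, d in enumerate(abs_dev) if d > k * scale]


data = [1.0, 1.1, 0.9, 1.2, 0.8, 10.0]
print(hampel_outliers(data))  # [5]
```

Because the median and MAD are barely moved by a single extreme value, the rule stays usable on data where a mean and standard deviation would be distorted by the very outlier being tested.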

Smoothing: Local Regression Techniques

EconStor, a service of ZBW - Leibniz Information Centre for Economics. Loader, Catherine. Working Paper. Smoothing: Local Regression Techniques. Papers, No. 2004,12. Provided in cooperation with: CASE - Center for Applied Statistics and Economics, Humboldt University Berlin.

Suggested citation: Loader, Catherine (2004): Smoothing: Local Regression Techniques, Papers, No. 2004,12, Humboldt-Universität zu Berlin, Center for Applied Statistics and Economics (CASE), Berlin. This version is available at: http://hdl.handle.net/10419/22186

Catherine Loader, Department of Statistics, Case Western Reserve University, Cleveland, OH 44106, USA. [email protected]

Smoothing methods attempt to find functional relationships between different measurements. As in the standard regression setting, the data is assumed to consist of measurements of a response variable, and one or more predictor variables.

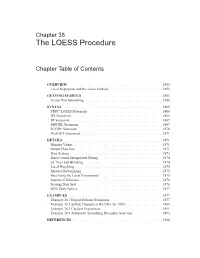

The LOESS Procedure

Chapter 38, The LOESS Procedure. Chapter Table of Contents:

OVERVIEW: Local Regression and the Loess Method.

GETTING STARTED: Scatter Plot Smoothing.

SYNTAX: PROC LOESS Statement; BY Statement; ID Statement; MODEL Statement; SCORE Statement; WEIGHT Statement.

DETAILS: Missing Values; Output Data Sets; Data Scaling; Direct versus Interpolated Fitting; kd Trees and Blending; Local Weighting; Iterative Reweighting; Specifying the Local Polynomials; Statistical Inference; Scoring Data Sets; ODS Table Names.

EXAMPLES: Example 38.1 Engine Exhaust Emissions; Example 38.2 Sulfate Deposits in the USA for 1990; Example 38.3 Catalyst Experiment; Example 38.4 Automatic Smoothing Parameter Selection.

Extending Linear Regression: Weighted Least Squares and Local Regression

36-350, Data Mining, 10 October 2008

1 Weighted Least Squares

Instead of minimizing the residual sum of squares,

$$\sum_{i=1}^{n} (y_i - \vec{x}_i \cdot \beta)^2 \qquad (1)$$

we could minimize the weighted sum of squares,

$$\sum_{i=1}^{n} w_i (y_i - \vec{x}_i \cdot \beta)^2 \qquad (2)$$

This includes ordinary least squares as the special case where all the weights $w_i = 1$. (Exercise: Is that a necessary as well as a sufficient condition for (2) and (1) to have the same minimum?) We can solve it by the same kind of algebra we used to solve the ordinary linear least squares problem. But why would we want to solve it? For three reasons.

1. Focusing accuracy. We may care more strongly about predicting the response for certain values of the input (ones we expect to see often again, ones where mistakes are especially costly or embarrassing or painful, etc.) than for others. If we give the points $x_i$ near that region big weights $w_i$, and points elsewhere smaller weights, the regression will be pulled towards matching the data in that region.

2. Discounting imprecision. Ordinary least squares is the maximum likelihood estimate when the $\epsilon$ in $Y = \vec{X} \cdot \beta + \epsilon$ is IID Gaussian white noise. This means that the variance of $\epsilon$ has to be constant, and we measure the regression curve with the same precision everywhere. This situation, of constant noise variance, is called homoskedasticity. Often however the magnitude of the noise is not constant, and the data are heteroskedastic. When we have heteroskedasticity, even if each noise term is still Gaussian, ordinary least squares is no longer the maximum likelihood estimate, and so no longer efficient.
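The weighted criterion can be tried out on a small case. The sketch below handles only a single predictor plus an intercept, so the minimizer has a closed form in terms of weighted means; the function name `weighted_least_squares` and the toy data are assumptions introduced here, and the general vector case in the notes would instead solve the weighted normal equations.

```python
def weighted_least_squares(xs, ys, ws):
    """Fit y ~ b0 + b1*x by minimising sum_i w_i * (y_i - b0 - b1*x_i)**2.

    Single-predictor special case: the solution uses weighted means and
    weighted (co)variation about those means. Weights must be
    non-negative and leave at least two distinct x values with positive weight.
    """
    sw = sum(ws)
    xbar = sum(w * x for w, x in zip(ws, xs)) / sw
    ybar = sum(w * y for w, y in zip(ws, ys)) / sw
    sxx = sum(w * (x - xbar) ** 2 for w, x in zip(ws, xs))
    sxy = sum(w * (x - xbar) * (y - ybar) for w, x, y in zip(ws, xs, ys))
    b1 = sxy / sxx
    b0 = ybar - b1 * xbar
    return b0, b1


xs = [0, 1, 2, 3]
ys = [0, 1, 2, 6]  # the last point departs from the line y = x

# All weights equal to 1 reproduces ordinary least squares:
# intercept about -0.6, slope about 1.9.
print(weighted_least_squares(xs, ys, [1, 1, 1, 1]))

# Zero weight on the last point: the fit matches the first three
# points exactly, giving intercept 0 and slope 1.
print(weighted_least_squares(xs, ys, [1, 1, 1, 0]))
```

The second call is the "focusing accuracy" idea in miniature: shifting weight toward one region of the input pulls the fitted line toward the data there, and the same mechanism underlies local regression once the weights are made to depend on distance from a query point.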