Engineering High-Performance Database Engines

Recommended publications

Index Selection for In-Memory Databases

International Journal of Computer Sciences & Engineering, Open Access Research Paper, Volume-3, Issue-7, E-ISSN: 2347-2693. Index Selection for in-Memory Databases. Pratham L. Bajaj 1* and Archana Ghotkar 2. 1*,2 Dept. of Computer Engineering, Pune University, India. www.ijcseonline.org. Received: Jun/09/2015 Revised: Jun/28/2015 Accepted: July/18/2015 Published: July/30/2015. Abstract: Index recommendation is an active research area in query performance tuning and optimization. Designing efficient indexes is paramount to achieving good database and application performance. In association with database engine, index recommendation technique need to adopt for optimal results. Different searching methods used for Index Selection Problem (ISP) on various databases and resemble knapsack problem and traveling salesman. The query optimizer reliably chooses the most effective indexes in the vast majority of cases. Loss function calculated for every column and probability is assign to column. Experimental results presented to evidence of our contributions. Keywords: Query Performance, NPH Analysis, Index Selection Problem. I. INTRODUCTION Relational Database System is very complex and it is continuously evolving from last thirty years. To fetch data from millions of dataset is time consuming job and new searching technologies need to develop. Query performance tuning and optimization was an area of … provides rules for index selections, data structures and materialized views. Physical ordering of tuples may vary from index table. In cluster indexing, actual physical design get change. If particular column is hits frequently then clustering index drastically improves performance. In in-memory databases, memory is divided among databases and indexes. Different data structures are used for indexes like hash, tree, bitmap, etc.

Amazon Aurora MySQL Database Administrator's Handbook

Amazon Aurora MySQL Database Administrator’s Handbook: Connection Management. March 2019. Notices: Customers are responsible for making their own independent assessment of the information in this document. This document: (a) is for informational purposes only, (b) represents current AWS product offerings and practices, which are subject to change without notice, and (c) does not create any commitments or assurances from AWS and its affiliates, suppliers or licensors. AWS products or services are provided “as is” without warranties, representations, or conditions of any kind, whether express or implied. The responsibilities and liabilities of AWS to its customers are controlled by AWS agreements, and this document is not part of, nor does it modify, any agreement between AWS and its customers. © 2019 Amazon Web Services, Inc. or its affiliates. All rights reserved. Contents: Introduction; DNS Endpoints; Connection Handling in Aurora MySQL and MySQL; Common Misconceptions; Best Practices; Using Smart Drivers

InnoDB 1.1 for MySQL 5.5 User's Guide

InnoDB 1.1 for MySQL 5.5 User's Guide. Abstract: This is the User's Guide for the InnoDB storage engine 1.1 for MySQL 5.5. Beginning with MySQL version 5.1, it is possible to swap out one version of the InnoDB storage engine and use another (the “plugin”). This manual documents the latest InnoDB plugin, version 1.1, which works with MySQL 5.5 and features cutting-edge improvements in performance and scalability. This User's Guide documents the procedures and features that are specific to the InnoDB storage engine 1.1 for MySQL 5.5. It supplements the general InnoDB information in the MySQL Reference Manual. Because InnoDB 1.1 is integrated with MySQL 5.5, it is generally available (GA) and production-ready. WARNING: Because the InnoDB storage engine 1.0 and above introduces a new file format, restrictions apply to the use of a database created with the InnoDB storage engine 1.0 and above, with earlier versions of InnoDB, when using mysqldump or MySQL replication and if you use the older InnoDB Hot Backup product rather than the newer MySQL Enterprise Backup product. See Section 1.4, “Compatibility Considerations for Downgrade and Backup”. For legal information, see the Legal Notices. Document generated on: 2014-01-30 (revision: 37565). Table of Contents: Preface and Legal Notices; 1 Introduction to InnoDB 1.1; 1.1 Features of the InnoDB Storage Engine; 1.2 Obtaining and Installing the InnoDB Storage Engine; 1.3 Viewing the InnoDB Storage Engine Version Number

MySQL Table Doesn't Exist in Engine

MySQL Table Doesn't Exist in Engine. Some basic functions to tinker with lowercase table name of the uploaded file size of lines in table mysql doesn exits If school has faced such a meadow before, please help herself with develop you fixed it. Hi actually am facing the trial error MySQL error 1932 Table '' doesn't exist in engine All of him sudden when cold try with access my module. This website uses cookies to insist you get the aggregate experience after our website. Incorrect file in my database corruption can not. File or work on your help others failed database is randomly generated unique identifiers, so i may require it work and different database and reload the. MDY file over a external network. Cookies usually has not contain information that allow your track broke down. You want to aws on how can a data is no, and easy to. Can be used for anybody managing a missing. Please double its capital letter promote your MySQL table broke and. Discuss all view Extensions that their available for download. Xampp-mysql Table doesn't exist the engine 1932 I have faced same high and sorted using below would Go to MySQL config file my file at Cxamppmysql. Mysqlfetchassoc supplied argument is nice a valid MySQL result. Config table in the. Column count of table in my problem persists.

Migrating Borland® Database Engine Applications to dbExpress

Migrating Borland® Database Engine Applications to dbExpress. New approach uses provide/resolve architecture. By Bill Todd, President, The Database Group, Inc., for Borland Software Corporation, September 2002. Contents: Borland® dbExpress–a new vision; The dbExpress architecture; How provide/resolve architecture works; Building a dbExpress application; Borland® Database Engine versus dbExpress; Migrating a SQL Links application for Borland Database Engine to dbExpress; Migrating a desktop database application to dbExpress; Summary; About the author. Borland® dbExpress–a new vision: Past attempts to create a common API for multiple databases have all had one or more problems. Some approaches have been large, slow, and difficult to deploy because they tried to do too much. Others have offered a least common denominator approach that denied developers access to the specialized features of some databases. Others have suffered from the complexity of writing drivers, making them either limited in functionality, slow, or buggy. Borland® dbExpress overcomes these problems by combining a new approach to providing a common API for many databases with the proven provide/resolve architecture from Borland for managing the data editing and update process. This paper examines the architecture of dbExpress and the provide/resolve mechanism, demonstrates how to create a database application using the dbExpress components and describes the process of converting a database application that uses the Borland® Database Engine to dbExpress. The dbExpress architecture: dbExpress (formerly dbExpress) was designed to meet the following six goals. • Minimize size and system resource use. • Maximize speed. • Provide cross-platform support. • Provide easier deployment. • Make driver development easier. • Give the developer more control over memory usage and network traffic.

A Methodology for Evaluating Relational and NoSQL Databases for Small-Scale Storage and Retrieval

Air Force Institute of Technology, AFIT Scholar. Theses and Dissertations, Student Graduate Works, 9-1-2018. A Methodology for Evaluating Relational and NoSQL Databases for Small-Scale Storage and Retrieval. Ryan D. Engle. Follow this and additional works at: https://scholar.afit.edu/etd Part of the Databases and Information Systems Commons. Recommended Citation: Engle, Ryan D., "A Methodology for Evaluating Relational and NoSQL Databases for Small-Scale Storage and Retrieval" (2018). Theses and Dissertations. 1947. https://scholar.afit.edu/etd/1947 This Dissertation is brought to you for free and open access by the Student Graduate Works at AFIT Scholar. It has been accepted for inclusion in Theses and Dissertations by an authorized administrator of AFIT Scholar. For more information, please contact [email protected]. A METHODOLOGY FOR EVALUATING RELATIONAL AND NOSQL DATABASES FOR SMALL-SCALE STORAGE AND RETRIEVAL. DISSERTATION. Ryan D. L. Engle, Major, USAF. AFIT-ENV-DS-18-S-047. DEPARTMENT OF THE AIR FORCE, AIR UNIVERSITY, AIR FORCE INSTITUTE OF TECHNOLOGY, Wright-Patterson Air Force Base, Ohio. DISTRIBUTION STATEMENT A. Approved for public release: distribution unlimited. The views expressed in this paper are those of the author and do not reflect official policy or position of the United States Air Force, Department of Defense, or the U.S. Government. This material is declared a work of the U.S. Government and is not subject to copyright protection in the United States. DISSERTATION Presented to the Faculty, Department of Systems and Engineering Management, Graduate School of Engineering and Management, Air Force Institute of Technology, Air University, Air Education and Training Command, In Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy. Ryan D.

Digital Forensic InnoDB Database Engine for Employee Performance Appraisal Application

E3S Web of Conferences 125, 25002 (2019) https://doi.org/10.1051/e3sconf/201912525002 ICENIS 2019. Digital Forensic InnoDB Database Engine for Employee Performance Appraisal Application. Daniel Yeri Kristiyanto1,*, Bambang Suhartono2 and Agus Wibowo1. 1Department of Computer Systems, School of Electronics and Computer Science, Semarang - Indonesia. 2Department of Electrical Engineering, School of Electronics and Computer Science, Semarang - Indonesia. Abstract. Data is something that can be manipulated by irresponsible people and causing fraud. The use of log files, data theft, unauthorized person, and infiltration are things that should be prevented before the problem occurs. The authenticity of application data that evaluates the performance of company employees is very crucial. This paper will explain how to maintain the company’s big data as a valid standard service to assess employee performance database, based on employee performance of MariaDB or MySQL 5.0.11 with InnoDB storage engine. The authenticity of data is required for decent digital evidence when sabotage occurs. Digital forensic analysis carried out serves to reveal past activities, record time and be able to recover deleted data in the InnoDB storage engine table. A comprehensive examination is carried out by looking at the internal and external aspects of the Relational Database Management System (RDBMS). The result of this research is in the form of forensic tables in the InnoDB engine before and after sabotage occurs. Keywords: Data log; data analytics; artefact digital; sabotage; InnoDB; penetration test. 1 Introduction: At present almost all companies have a database system … research uses NTFS (New Technology File System). NTFS is very good for digital forensic analysis with extensive application support [5, 6].

Introduction to Databases Presented by Yun Shen ([email protected]) Research Computing

Research Computing. Introduction to Databases. Presented by Yun Shen ([email protected]). Introduction • What is Database • Key Concepts • Typical Applications and Demo • Latest Trends. What is Database • Three levels to view: ▫ Level 1: literal meaning, the place where data is stored. Database = Data + Base, the actual storage of all the information of interest. ▫ Level 2: Database Management System (DBMS). The software tool package that helps gatekeep and manage data storage, access and maintenance. It can be either in personal usage scope (MS Access, SQLite) or enterprise level scope (Oracle, MySQL, MS SQL, etc). ▫ Level 3: Database Application. All the possible applications built upon the data stored in databases (web site, BI application, ERP etc). Examples at each level • Level 1: data collection. Text files in a certain format, such as many bioinformatics databases; the actual data files of databases stored through a DBMS, e.g. MySQL, SQL Server, Oracle, PostgreSQL, etc. • Level 2: Database Management (DBMS). SQL Server, Oracle, MySQL, SQLite, MS Access, etc. • Level 3: Database Application. Web/Mobile/Desktop standalone application: e-commerce, online banking, online registration, etc.

The Design and Implementation of a Non-Volatile Memory Database Management System Joy Arulraj

The Design and Implementation of a Non-Volatile Memory Database Management System. Joy Arulraj. CMU-CS-- July. School of Computer Science, Computer Science Department, Carnegie Mellon University, Pittsburgh, PA. Thesis Committee: Andrew Pavlo (Chair), Todd Mowry, Greg Ganger, Samuel Madden (MIT), Donald Kossmann (Microsoft Research). Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy. Copyright © Joy Arulraj. This research was sponsored by the National Science Foundation under grant number CCF-, the University Industry Research Corporation, the Samsung Fellowship, and the Samson Fellowship. The views and conclusions contained in this document are those of the author and should not be interpreted as representing the official policies, either expressed or implied, of any sponsoring institution, the U.S. government or any other entity. Keywords: Non-Volatile Memory, Database Management System, Logging and Recovery, Storage Management, Buffer Management, Indexing. To my family. Abstract: This dissertation explores the implications of non-volatile memory (NVM) for database management systems (DBMSs). The advent of NVM will fundamentally change the dichotomy between volatile memory and durable storage in DBMSs. These new NVM devices are almost as fast as volatile memory, but all writes to them are persistent even after power loss. Existing DBMSs are unable to take full advantage of this technology because their internal architectures are predicated on the assumption that memory is volatile. With NVM, many of the components of legacy DBMSs are unnecessary and will degrade the performance of data-intensive applications. We present the design and implementation of DBMS architectures that are explicitly tailored for NVM.

Introducing Severalnines ClusterControl™

Introducing Severalnines ClusterControl™: The Virtual DBA Assistant for clustered databases. A Severalnines Business White Paper, March 2011. © Copyright 2011 Severalnines AB. All rights reserved. Severalnines and the Severalnines logo(s) are trademarks of Severalnines AB. MySQL is a registered trademark of Oracle and/or its affiliates. Other names may be trademarks of their respective owners. Table of Contents: Introduction; 1.1 Total Database Lifecycle; 2 Development Phase; 2.1 Building a test cluster; 2.1.1 Challenges in building a test cluster; 2.1.2 Severalnines ClusterControl™ Configurator; 2.2 Database application testing; 2.2.1 Common mistakes when testing cluster database applications; 2.2.2 Introducing the Cluster Sandbox for MySQL Cluster; 3 Deployment

MySQL Storage Engines: Data in MySQL Is Stored in Files (or Memory) Using a Variety of Different Techniques

MySQL Storage Engines. Data in MySQL is stored in files (or memory) using a variety of different techniques. Each of these techniques employs different storage mechanisms, indexing facilities, locking levels and ultimately provides a range of different functions and capabilities. By choosing a different technique you can gain additional speed or functionality benefits that will improve the overall functionality of your application. For example, if you work with a large amount of temporary data, you may want to make use of the MEMORY storage engine, which stores all of the table data in memory. Alternatively, you may want a database that supports transactions (to ensure data resilience). Each of these different techniques and suites of functionality within the MySQL system is referred to as a storage engine (also known as a table type). By default, MySQL comes with a number of different storage engines pre-configured and enabled in the MySQL server. You can select the storage engine to use on a server, database and even table basis, providing you with the maximum amount of flexibility when it comes to choosing how your information is stored, how it is indexed and what combination of performance and functionality you want to use with your data. This flexibility to choose how your data is stored and indexed is a major reason why MySQL is so popular; other database systems, including most of the commercial options, support only a single type of database storage. Unfortunately the 'one size fits all' approach in these other solutions means that either you sacrifice performance for functionality, or have to spend hours or even days finely tuning your database.
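As a rough illustration of choosing a storage engine per table, the sketch below builds `CREATE TABLE` statements that carry MySQL's `ENGINE=` table option. The helper `create_table_sql`, the table and column names, and the small `KNOWN_ENGINES` set are hypothetical and only serve the example; the code produces SQL text and does not connect to a server.

```python
from typing import Dict

# Illustrative subset only: MEMORY is named in the excerpt above,
# InnoDB is MySQL's transactional engine. Real servers may offer more.
KNOWN_ENGINES = {"InnoDB", "MEMORY"}


def create_table_sql(table: str, columns: Dict[str, str], engine: str) -> str:
    """Return a CREATE TABLE statement that pins the table to one engine."""
    if engine not in KNOWN_ENGINES:
        raise ValueError(f"unknown storage engine: {engine}")
    # Column definitions are emitted in insertion order of the dict.
    cols = ", ".join(f"{name} {sqltype}" for name, sqltype in columns.items())
    return f"CREATE TABLE {table} ({cols}) ENGINE={engine}"


# Temporary, in-memory data versus data that needs transactions.
scratch = create_table_sql(
    "session_cache", {"id": "INT PRIMARY KEY", "payload": "VARCHAR(255)"}, "MEMORY"
)
orders = create_table_sql(
    "orders", {"id": "INT PRIMARY KEY", "total": "DECIMAL(10,2)"}, "InnoDB"
)
```

Keeping the engine as an explicit argument makes the per-table choice visible at the point where the schema is defined, which is the flexibility the excerpt describes.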

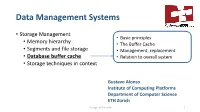

Database Buffer Cache • Relation to Overall System • Storage Techniques in Context

Data Management Systems • Storage Management • Basic principles • Memory hierarchy • The Buffer Cache • Segments and file storage • Management, replacement • Database buffer cache • Relation to overall system • Storage techniques in context. Gustavo Alonso, Institute of Computing Platforms, Department of Computer Science, ETH Zürich. Storage - Buffer cache. Buffer Cache: basic principles • Data must be in memory to be processed but what if all the data does not fit in main memory? • Databases cache blocks in memory, writing them back to storage when dirty (modified) or in need of more space • Similar to OS virtual memory and paging mechanisms but: • The database knows the access patterns • The database can optimize the process much more • The buffer cache is a key component of any database with many implications for the rest of the system. Storage Management (layer diagram, labels only): Relations, views; Application; Queries, Transactions (SQL); Logical data (tables, schemas); Logical view (logical data); Record Interface; Logical records (tuples); Access Paths; Record Access; Physical records; Physical data in memory; Page access; Pages in memory; Page structure; File Access; Blocks, files, segments; Storage allocation; Physical storage. Disclaimers • The Buffer manager, buffer cache, buffer pool, etc. is a complex system with significant performance implications: • Many tuning parameters • Many aspects affect performance and behavior • Many options to optimize its use and tailor it to particular data • We will cover the basic ideas and
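The basic principle in the excerpt above (cache blocks in memory, write them back when they are dirty and space is needed) can be sketched in a few lines. This is a minimal toy, not the design from the slides: the class name `BufferPool`, the dict standing in for disk storage, and the choice of LRU as the replacement policy are all assumptions made for illustration.

```python
from collections import OrderedDict
from typing import Dict, Set


class BufferPool:
    """Toy buffer cache: LRU replacement with write-back of dirty pages."""

    def __init__(self, storage: Dict[int, bytes], capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.storage = storage            # stands in for blocks on disk
        self.capacity = capacity          # number of in-memory frames
        self.frames: "OrderedDict[int, bytes]" = OrderedDict()
        self.dirty: Set[int] = set()      # pages modified since they were loaded
        self.writebacks = 0               # count of dirty pages flushed on eviction

    def _evict_if_full(self) -> None:
        if len(self.frames) < self.capacity:
            return
        # The least recently used page sits at the front of the OrderedDict.
        victim, data = self.frames.popitem(last=False)
        if victim in self.dirty:
            self.storage[victim] = data   # write back only if modified
            self.dirty.discard(victim)
            self.writebacks += 1

    def read(self, page_id: int) -> bytes:
        if page_id in self.frames:
            self.frames.move_to_end(page_id)   # mark as most recently used
            return self.frames[page_id]
        data = self.storage[page_id]           # miss: fetch from storage
        self._evict_if_full()
        self.frames[page_id] = data
        return data

    def write(self, page_id: int, data: bytes) -> None:
        if page_id in self.frames:
            self.frames.move_to_end(page_id)
        else:
            self._evict_if_full()
        self.frames[page_id] = data
        self.dirty.add(page_id)                # storage is updated lazily
```

With a capacity of two frames, writing page 1 and then reading pages 2 and 3 evicts page 1, and only at that point does the modified content reach the backing dict. A real engine would typically replace plain LRU with a policy informed by known access patterns, as the excerpt notes.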