A Methodology for Evaluating Relational and NoSQL Databases for Small-Scale Storage and Retrieval

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Oracle Database VLDB and Partitioning Guide, 11g Release 2 (11.2) E25523-01

Oracle® Database VLDB and Partitioning Guide 11g Release 2 (11.2) E25523-01 September 2011

Copyright © 2008, 2011, Oracle and/or its affiliates. All rights reserved.

Contributors: Hermann Baer, Eric Belden, Jean-Pierre Dijcks, Steve Fogel, Lilian Hobbs, Paul Lane, Sue K. Lee, Diana Lorentz, Valarie Moore, Tony Morales, Mark Van de Wiel

This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited.

The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing.

If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, the following notice is applicable:

U.S. GOVERNMENT RIGHTS Programs, software, databases, and related documentation and technical data delivered to U.S. Government customers are "commercial computer software" or "commercial technical data" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, the use, duplication, disclosure, modification, and adaptation shall be subject to the restrictions and license terms set forth in the applicable Government contract, and, to the extent applicable by the terms of the Government contract, the additional rights set forth in FAR 52.227-19, Commercial Computer Software License (December 2007).

VMware Tunnel

VMware Tunnel

VMware Workspace ONE UEM

You can find the most up-to-date technical documentation on the VMware website at: https://docs.vmware.com/

VMware, Inc. 3401 Hillview Ave. Palo Alto, CA 94304 www.vmware.com

© Copyright 2021 VMware, Inc. All rights reserved. Copyright and trademark information.

Contents

1. Introduction to VMware Tunnel
2. Supported Platforms for VMware Workspace ONE Tunnel
3. Understanding the Key Concepts of VMware Tunnel
4. VMware Tunnel Architecture
5. VMware Tunnel Deployment Model
6. Configure VMware Tunnel
   - Configure AWCM Server and Enable API Access before VMware Tunnel installation
   - Configure Per-App Tunnel
   - Configure Network Traffic Rules for the Per-App Tunnel
   - Integrating VMware Tunnel with NSX
   - Configure VMware Tunnel Proxy
   - Configure Outbound Proxy
7. Configure SASE Admin Experience for Tunnel
8. VMware Tunnel Deployment with Unified Access Gateway
   - Installing VMware Tunnel with Unified Access Gateway
   - Configure VMware Tunnel Settings in the Unified Access Gateway UI
   - Upgrade VMware Tunnel Deployed with Unified Access Gateway
9. VMware Tunnel Deployment on a Linux Server
   - Single-Tier VMware Tunnel Installation
   - Multi-Tier VMware Tunnel Installation
   - Upgrade VMware Tunnel Deployed on a Linux Server
   - Uninstall VMware Tunnel
   - Migrating to VMware Tunnel
10. VMware Tunnel Management
    - Deploying Per-App Tunnel to devices
    - Configure Per-App Tunnel Profile for Android
    - Configure Per-App Tunnel Profile for iOS
    - Configure Per-App Tunnel

Data Warehouse Fundamentals for Storage Professionals – What You Need to Know EMC Proven Professional Knowledge Sharing 2011

Data Warehouse Fundamentals for Storage Professionals – What You Need To Know

EMC Proven Professional Knowledge Sharing 2011

Bruce Yellin, Advisory Technology Consultant, EMC Corporation, [email protected]

Table of Contents

- Introduction
- Data Warehouse Background
- What Is a Data Warehouse?
- Data Mart Defined
- Schemas and Data Models
- Data Warehouse Design – Top Down or Bottom Up?
- Extract, Transformation and Loading (ETL)
- Why You Build a Data Warehouse: Business Intelligence
- Technology to the Rescue?
- RASP - Reliability, Availability, Scalability and Performance
- Data Warehouse Backups

Not ACID, Not BASE, but SALT: A Transaction Processing Perspective on Blockchains

Not ACID, not BASE, but SALT

A Transaction Processing Perspective on Blockchains

Stefan Tai, Jacob Eberhardt and Markus Klems

Information Systems Engineering, Technische Universität Berlin

fst, je, [email protected]

Keywords: SALT, blockchain, decentralized, ACID, BASE, transaction processing

Abstract: Traditional ACID transactions, typically supported by relational database management systems, emphasize database consistency. BASE provides a model that trades some consistency for availability, and is typically favored by cloud systems and NoSQL data stores. With the increasing popularity of blockchain technology, another alternative to both ACID and BASE is introduced: SALT. In this keynote paper, we present SALT as a model to explain blockchains and their use in application architecture. We take both, a transaction and a transaction processing systems perspective on the SALT model. From a transactions perspective, SALT is about Sequential, Agreed-on, Ledgered, and Tamper-resistant transaction processing. From a systems perspective, SALT is about decentralized transaction processing systems being Symmetric, Admin-free, Ledgered and Time-consensual. We discuss the importance of these dual perspectives, both, when comparing SALT with ACID and BASE, and when engineering blockchain-based applications. We expect the next-generation of decentralized transactional applications to leverage combinations of all three transaction models.

1 INTRODUCTION

There is a common belief that blockchains have the potential to fundamentally disrupt entire industries. Whether we are talking about financial services, the sharing economy, the Internet of Things, or future en-

against. Using the admittedly contrived acronym of SALT, we characterize blockchain-based transactions – from a transactions perspective – as Sequential, Agreed, Ledgered, and Tamper-resistant, and – from a systems perspective – as Symmetric, Admin-free, Ledgered, and Time-consensual.

MDA Process to Extract the Data Model from a Document-Oriented NoSQL Database Amal Ait Brahim, Rabah Tighilt Ferhat, Gilles Zurfluh

MDA process to extract the data model from a document-oriented NoSQL database

Amal Ait Brahim, Rabah Tighilt Ferhat, Gilles Zurfluh

To cite this version: Amal Ait Brahim, Rabah Tighilt Ferhat, Gilles Zurfluh. MDA process to extract the data model from a document-oriented NoSQL database. ICEIS 2019: 21st International Conference on Enterprise Information Systems, May 2019, Heraklion, Greece. pp.141-148, 10.5220/0007676201410148. hal-02886696

HAL Id: hal-02886696 https://hal.archives-ouvertes.fr/hal-02886696 Submitted on 1 Jul 2020

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Open Archive Toulouse Archive Ouverte: OATAO is an open access repository that collects the work of Toulouse researchers and makes it freely available over the web where possible.

This is an author’s version published in: https://oatao.univ-toulouse.fr/26232 Official URL: https://doi.org/10.5220/0007676201410148

To cite this version: Ait Brahim, Amal and Tighilt Ferhat, Rabah and Zurfluh, Gilles MDA process to extract the data model from a document-oriented NoSQL database. (2019) In: ICEIS 2019: 21st International Conference on Enterprise Information Systems, 3 May 2019 - 5 May 2019 (Heraklion, Greece).

Database Systems Administrator FLSA: Exempt

CLOVIS UNIFIED SCHOOL DISTRICT POSITION DESCRIPTION

- Position: Database Systems Administrator
- FLSA: Exempt
- Department/Site: Technology Services
- Salary Grade: 127
- Reports to/Evaluated by: Chief Technology Officer
- Salary Schedule: Classified Management

SUMMARY

Gathers requirements and provisions servers to meet the district’s need for database storage. Installs and configures SQL Server according to the specifications of outside software vendors and/or the development group. Designs and executes a security scheme to assure safety of confidential data. Implements and manages a backup and disaster recovery plan. Monitors the health and performance of database servers and database applications. Troubleshoots database application performance issues. Automates monitoring and maintenance tasks. Maintains service pack deployment and upgrades servers in consultation with the developer group and outside vendors. Deploys and schedules SQL Server Agent tasks. Implements and manages replication topologies. Designs and deploys datamarts under the supervision of the developer group. Deploys and manages cubes for SSAS implementations.

DISTINGUISHING CAREER FEATURES

The Database Systems Administrator is a senior level analyst position requiring specialized education and training in the field of study typically resulting in a degree. Advancement to this position would require competency in the design and administration of relational databases designated for business, enterprise, and student information. Advancement from this position is limited to supervisory management positions.

ESSENTIAL DUTIES AND RESPONSIBILITIES

Implements, maintains and administers all district supported versions of Microsoft SQL as well as other DBMS as needed. Responsible for database capacity planning and involvement in server capacity planning. Responsible for verification of backups and restoration of production and test databases. Designs, implements and maintains a disaster recovery plan for critical data resources.

MDA Process to Extract the Data Model from Document-Oriented NoSQL Database

MDA Process to Extract the Data Model from Document-oriented NoSQL Database

Amal Ait Brahim, Rabah Tighilt Ferhat and Gilles Zurfluh

Toulouse Institute of Computer Science Research (IRIT), Toulouse Capitole University, Toulouse, France

Keywords: Big Data, NoSQL, Model Extraction, Schema Less, MDA, QVT.

Abstract: In recent years, the need to use NoSQL systems to store and exploit big data has been steadily increasing. Most of these systems are characterized by the property "schema less" which means absence of the data model when creating a database. This property brings an undeniable flexibility by allowing the evolution of the model during the exploitation of the base. However, query expression requires a precise knowledge of the data model. In this article, we propose a process to automatically extract the physical model from a document-oriented NoSQL database. To do this, we use the Model Driven Architecture (MDA) that provides a formal framework for automatic model transformation. From a NoSQL database, we propose formal transformation rules with QVT to generate the physical model. An experimentation of the extraction process was performed on the case of a medical application.

1 INTRODUCTION

Recently, there has been an explosion of data generated and accumulated by more and more numerous and diversified computing devices. Databases thus constituted are designated by the expression "Big Data" and are characterized by the so-called "3V" rule (Chen, 2014).

however that it is absent in some systems such as Cassandra and Riak TS. The "schema less" property offers undeniable flexibility by allowing the model to evolve easily. For example, the addition of new attributes in an existing line is done without modifying the other lines of the same type previously stored; something that is not possible with relational
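To make the idea of recovering a model from "schema less" documents more concrete, here is a minimal Python sketch. It is not the MDA/QVT transformation the excerpt describes; it is a plain illustration, under the assumption that documents are available as nested Python dictionaries, of how field names and observed value types could be collected. The function name `infer_schema` and the sample documents are hypothetical.

```python
def infer_schema(documents, prefix=""):
    """Collect, for each field path, the set of value type names seen.

    Nested dictionaries are flattened into dotted paths (an assumption
    made for this sketch, not something the excerpt prescribes).
    """
    schema = {}
    for doc in documents:
        for field, value in doc.items():
            path = prefix + field
            if isinstance(value, dict):
                # Recurse into sub-documents and merge their field paths.
                nested = infer_schema([value], path + ".")
                for sub_path, types in nested.items():
                    schema.setdefault(sub_path, set()).update(types)
            else:
                schema.setdefault(path, set()).add(type(value).__name__)
    return schema


# Hypothetical documents of the same kind with different attributes,
# as a schema-less store allows.
patients = [
    {"name": "Ann", "age": 41},
    {"name": "Bob", "address": {"city": "Toulouse"}},
]
schema = infer_schema(patients)
```

Because the second document adds an `address` attribute without touching the first, the inferred model simply gains a new path, which mirrors the flexibility the excerpt attributes to schema-less systems.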

What Is NoSQL? The only thing that all NoSQL solutions providers generally agree on is that the term “NoSQL” isn’t perfect, but it is catchy

NoSQL

GREG BURD

SYSADMIN

Greg Burd is a Developer Advocate for Basho Technologies, makers of Riak. Before Basho, Greg spent nearly ten years as the product manager for Berkeley DB at Sleepycat Software and then at Oracle. Previously, Greg worked for NeXT Computer, Sun Microsystems, and KnowNow. Greg has long been an avid supporter of open source software. [email protected]

Choosing between databases used to boil down to examining the differences between the available commercial and open source relational databases. The term “database” had become synonymous with SQL, and for a while not much else came close to being a viable solution for data storage. But recently there has been a shift in the database landscape. When considering options for data storage, there is a new game in town: NoSQL databases. In this article I’ll introduce this new category of databases, examine where they came from and what they are good for, and help you understand whether you, too, should be considering a NoSQL solution in place of, or in addition to, your RDBMS database.

What Is NoSQL?

The only thing that all NoSQL solutions providers generally agree on is that the term “NoSQL” isn’t perfect, but it is catchy. Most agree that the “no” stands for “not only”, an admission that the goal is not to reject SQL but, rather, to compensate for the technical limitations shared by the majority of relational database implementations. In fact, NoSQL is more a rejection of a particular software and hardware architecture for databases than of any single technology, language, or product.

The Relational Data Model and Relational Database Constraints

Chapter 3: The Relational Data Model and Relational Database Constraints

This chapter opens Part 2 of the book, which covers relational databases. The relational data model was first introduced by Ted Codd of IBM Research in 1970 in a classic paper (Codd 1970), and it attracted immediate attention due to its simplicity and mathematical foundation. The model uses the concept of a mathematical relation, which looks somewhat like a table of values, as its basic building block, and has its theoretical basis in set theory and first-order predicate logic. In this chapter we discuss the basic characteristics of the model and its constraints.

The first commercial implementations of the relational model became available in the early 1980s, such as the SQL/DS system on the MVS operating system by IBM and the Oracle DBMS. Since then, the model has been implemented in a large number of commercial systems. Current popular relational DBMSs (RDBMSs) include DB2 and Informix Dynamic Server (from IBM), Oracle and Rdb (from Oracle), Sybase DBMS (from Sybase) and SQLServer and Access (from Microsoft). In addition, several open source systems, such as MySQL and PostgreSQL, are available.

Because of the importance of the relational model, all of Part 2 is devoted to this model and some of the languages associated with it. In Chapters 4 and 5, we describe the SQL query language, which is the standard for commercial relational DBMSs. Chapter 6 covers the operations of the relational algebra and introduces the relational calculus; these are two formal languages associated with the relational model.

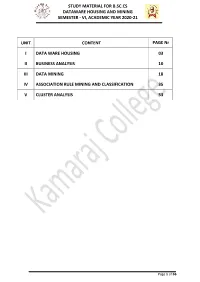

Study Material for B.Sc.CS Dataware Housing and Mining, Semester VI, Academic Year 2020-21

STUDY MATERIAL FOR B.SC.CS DATAWARE HOUSING AND MINING SEMESTER - VI, ACADEMIC YEAR 2020-21

| UNIT | CONTENT |
|------|---------|
| I | DATA WARE HOUSING |
| II | BUSINESS ANALYSIS |
| III | DATA MINING |
| IV | ASSOCIATION RULE MINING AND CLASSIFICATION |
| V | CLUSTER ANALYSIS |

UNIT I: DATA WAREHOUSING

Data warehousing components:

-> Overall Architecture

Data warehouse architecture is based on a relational database management system server that functions as the central repository (a central location in which data is stored and managed) for informational data. In the data warehouse architecture, operational data and processing are kept separate from data warehouse processing. The central information repository is surrounded by a number of key components. These key components are designed to make the entire environment (i) functional, (ii) manageable and (iii) accessible, both by the operational systems that source data into the warehouse and by end-user query and analysis tools.

The source data for the warehouse comes from the operational applications. As data enters the data warehouse, it is transformed into an integrated structure and format. The transformation process may involve conversion, summarization, filtering, and condensation of data. Because data within the data warehouse contains a large historical component, the data warehouse must be capable of holding and managing large volumes of data and different data structures for the same database over time.

-> Data Warehouse Database

The central data warehouse database is a foundation for the data warehousing environment.

SQL vs NoSQL: A Performance Comparison

SQL vs NoSQL: A Performance Comparison

Ruihan Wang, University of Rochester, [email protected]

Zongyan Yang, University of Rochester, [email protected]

Abstract

We always hear some statements like ‘SQL is outdated’, ‘This is the world of NoSQL’, ‘SQL is still used a lot by most of companies.’ Which one is accurate? Has NoSQL completely replace SQL? Or is NoSQL just a hype? SQL (Structured Query Language) is a standard query language for relational database management system. The most popular types of RDBMS (Relational Database Management Systems) like Oracle, MySQL, SQL Server, uses SQL as their standard database query language.[3] NoSQL means Not Only SQL, which is a collection of non-relational data storage systems. The important character of NoSQL is that it relaxes one or more of the ACID properties for a better performance in desired fields. Some of the NOSQL databases most companies using are Cassandra, CouchDB, Hadoop Hbase, MongoDB. In this paper, we’ll outline the general differences between the SQL and NoSQL, discuss if Relational Database

2. ACID Properties and CAP Theorem

2.1. ACID Properties

We need to refer the ACID properties[12]:

Atomicity: A transaction is an atomic unit of processing; it should either be performed in its entirety or not performed at all.

Consistency preservation: A transaction should be consistency preserving, meaning that if it is completely executed from beginning to end without interference from other transactions, it should take the database from one consistent state to another.

Isolation: A transaction should appear as though it is being executed in isolation from other transactions, even though many transactions are executing concurrently.
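The atomicity property quoted above can be illustrated with a short sketch. This example is not taken from the paper; it assumes Python's standard `sqlite3` module, an in-memory database, and a hypothetical `accounts` table with a `CHECK` constraint, and it uses the connection as a context manager so that a failed statement rolls back the whole transaction.

```python
import sqlite3


def make_demo_db():
    """Create a hypothetical two-account database in memory."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE accounts ("
        " name TEXT PRIMARY KEY,"
        " balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    conn.executemany(
        "INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)]
    )
    conn.commit()
    return conn


def transfer(conn, src, dst, amount):
    """Credit dst, then debit src; both updates succeed or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?",
                (amount, dst),
            )
            # Violates the CHECK constraint if src cannot cover the amount.
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?",
                (amount, src),
            )
    except sqlite3.IntegrityError:
        return False
    return True


def balances(conn):
    """Return the current balances as a {name: balance} dictionary."""
    return dict(conn.execute("SELECT name, balance FROM accounts"))
```

The credit is deliberately issued before the debit: when the debit fails, the already-applied credit is undone by the rollback, so the database never exposes a half-finished transfer.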

Index Selection for In-Memory Databases

International Journal of Computer Sciences & Engineering, Open Access, Research Paper, Volume-3, Issue-7, E-ISSN: 2347-2693

Index Selection for in-Memory Databases

Pratham L. Bajaj 1* and Archana Ghotkar 2

1*,2 Dept. of Computer Engineering, Pune University, India, www.ijcseonline.org

Received: Jun/09/2015 Revised: Jun/28/2015 Accepted: July/18/2015 Published: July/30/2015

Abstract: Index recommendation is an active research area in query performance tuning and optimization. Designing efficient indexes is paramount to achieving good database and application performance. In association with database engine, index recommendation technique need to adopt for optimal results. Different searching methods used for Index Selection Problem (ISP) on various databases and resemble knapsack problem and traveling salesman. The query optimizer reliably chooses the most effective indexes in the vast majority of cases. Loss function calculated for every column and probability is assign to column. Experimental results presented to evidence of our contributions.

Keywords: Query Performance, NPH Analysis, Index Selection Problem

I. INTRODUCTION

Relational Database System is very complex and it is continuously evolving from last thirty years. To fetch data from millions of dataset is time consuming job and new searching technologies need to develop. Query performance tuning and optimization was an area of

provides rules for index selections, data structures and materialized views. Physical ordering of tuples may vary from index table. In cluster indexing, actual physical design get change. If particular column is hits frequently then clustering index drastically improves performance. In in-memory databases, memory is divided among databases and indexes. Different data structures are used for indexes like hash, tree, bitmap, etc.