5 Signs Your Cache + Database Architecture May Be Obsolete

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Optimized Database Performance Contents Your Customers Need Lots of Data, Fast

Improve customer experience in B2B SaaS with optimized database performance

Contents

- Your customers need lots of data, fast
- Memory and storage: completing the pyramid
- Traditional tiers of memory and storage
- A new memory tier
- Additional benefits of the 2nd generation Intel® Xeon® Scalable processor
- Improving caching with Memory Mode
- More responsive storage with App Direct Mode
- Crawl, walk, run
- The role of SSDs

Your customers need lots of data, fast

Business to business applications such as human resources (HR) portals, collaboration tools and customer relationship management (CRM) systems must all provide fast access to data. Companies use these tools to increase their productivity, so delays will affect their businesses, and ultimately their loyalty to the software provider.

As data volumes grow, business to business software as a service (B2B SaaS) providers need to ensure their databases not only have the capacity for lots of data, but can also access it at speed.

Building services that deliver the data customers need at the moment they need it can lead to higher levels of customer satisfaction. That, in turn, could lead to improved loyalty, the opportunity to win new business, and greater revenue for the B2B SaaS provider.

Building an Enterprise-Grade Database Architecture for Mission-Critical, Real-Time Applications

WHITE PAPER: Building an Enterprise-Grade Database Architecture for Mission-Critical, Real-Time Applications

Table of contents

- A new generation of applications – Systems of engagement
- New challenges – Always on at the speed of now
- Shortcomings of conventional technologies
- Limitations of relational databases
- Emergence of NoSQL
- Challenges of adding a caching layer
- A better way – In-memory speed without a caching layer
- The industry’s first SSD-optimized NoSQL database architecture
- How it works
- Exploiting cost and durability breakthroughs in flash memory technology
- Enterprise-grade NoSQL has arrived
- Financial services – Position system of record and risk
- More sample use cases

Aerospike Multi-Site Clustering: Globally Distributed, Strongly Consistent, Highly Resilient

Aerospike Multi-site Clustering: Globally Distributed, Strongly Consistent, Highly Resilient Transactions at Scale

Contents

- Executive Summary
- Applications and Use Cases
- Fundamental Concepts
- The Aerospike Approach
- Core Technologies
- Rack Awareness
- Strong, Immediate Data Consistency
- Operational Scenarios
- Healthy Cluster
- Failure Situations
- Summary

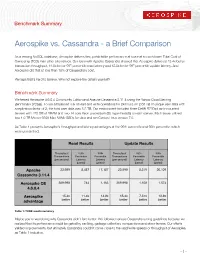

Aerospike Vs. Cassandra - a Brief Comparison

Benchmark Summary: Aerospike vs. Cassandra - a Brief Comparison

As a leading NoSQL database, Aerospike delivers fast, predictable performance at scale at a much lower Total Cost of Ownership (TCO) than other alternatives. Our tests with Apache Cassandra showed that Aerospike delivered 15.4x better transaction throughput, 14.8x better 99th percentile read latency and 12.8x better 99th percentile update latency. And Aerospike did that at less than 10% of Cassandra’s cost. Perhaps that’s hard to believe. Why not explore the details yourself?

Benchmark Summary

We tested Aerospike 4.6.0.4 Community Edition and Apache Cassandra 3.11.4 using the Yahoo Cloud Serving Benchmark (YCSB). We ran a balanced mix of read and write operations for 24 hours on 2.65 TB of unique user data; with a replication factor of 2, the total user data was 5.3 TB. Our environment included three Dell® R730xd rack-mounted servers with 128 GB of DRAM and two 14 core Xeon processors (56 hyperthreads) on each server. Each server utilized two 1.6 TB Micron 9200 Max NVMe SSDs for data and ran CentOS Linux version 7.6. Table 1 presents Aerospike’s throughput and latency advantages at the 99th percentile and 95th percentile, which were unmatched.

| System | Read throughput (transactions per second) | Read 95th percentile latency (µsecs) | Read 99th percentile latency (µsecs) | Update throughput (transactions per second) | Update 95th percentile latency (µsecs) | Update 99th percentile latency (µsecs) |
| --- | --- | --- | --- | --- | --- | --- |
| Apache Cassandra 3.11.4 | 23,999 | 8,487 | 17,187 | 23,999 | 8,319 | 20,109 |
| Aerospike CE 4.6.0.4 | 369,998 | 744 | 1,163 | 369,998 | 1,108 | 1,574 |
| Aerospike advantage | 15.4x better | 11.4x better | 14.8x better | 15.4x better | 7.51x better | 12.8x better |

Table 1: YCSB results summary

Maybe you’re wondering why Cassandra didn’t fare better. ...
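The advantage factors quoted in Table 1 can be reproduced from the raw figures. The sketch below is only an arithmetic check, not part of the benchmark itself; the dictionary keys and the `advantage` helper are names invented here for illustration. It assumes throughput is compared as Aerospike divided by Cassandra (higher is better) and latency as Cassandra divided by Aerospike (lower is better).

```python
# Figures copied from Table 1 (YCSB results summary); key names are illustrative.
cassandra = {"throughput": 23_999, "read_p95": 8_487, "read_p99": 17_187,
             "update_p95": 8_319, "update_p99": 20_109}
aerospike = {"throughput": 369_998, "read_p95": 744, "read_p99": 1_163,
             "update_p95": 1_108, "update_p99": 1_574}


def advantage(metric: str) -> float:
    """Return Aerospike's advantage factor for one metric.

    Throughput is higher-is-better, so the ratio is Aerospike / Cassandra.
    Latencies are lower-is-better, so the ratio is Cassandra / Aerospike.
    """
    if metric == "throughput":
        return aerospike[metric] / cassandra[metric]
    return cassandra[metric] / aerospike[metric]


factors = {metric: advantage(metric) for metric in cassandra}
for metric, factor in factors.items():
    print(f"{metric}: {factor:.2f}x")

# A replication factor of 2 doubles the stored volume: 2.65 TB becomes 5.3 TB.
total_tb = 2.65 * 2
```

Rounded to the precision used in the table, these ratios agree with the published 15.4x, 11.4x, 14.8x, 7.51x and 12.8x figures.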

DriveScale Architecture for Data Center Scale Composability

DriveScale Architecture for Data Center Scale Composability, by Brian Pawlowski, Chris Unkel, Jim Hanko, Jean-François Remy

Overview of talk

- Defining composable infrastructure
- What the administrator sees
- DriveScale design and architecture
- Future directions
- Recapitulation
- Questions

Defining composable infrastructure

DriveScale – Disaggregate then Compose

[Diagram labels: Captive, fixed DAS (Purchase Time Defined Infrastructure); Disaggregate; Composable DAS (Right sized Software Defined Infrastructure)]

Composable Infrastructure – blade chassis scale

- Single vendor hardware
- Low scalability

[Diagram labels: 10,000 Servers, 100,000 Drives]

Composable Infrastructure – data center scale

[Diagram labels: 10,000 Servers, 100,000 Drives]

- Choose optimized dense diskless servers, optimized storage platforms for HDD and SSD
- Multivendor: mix and match
- Multiprotocol Ethernet (iSCSI, RoCEv2, NVMe over TCP) for wide variety of use cases
- Composer platform server(s) configuration: dual socket x86, 128GB DRAM, SSD

Composable Infrastructure at scale

Compose any compute to any drive. Ethernet to storage adapters.

[Diagram labels: Physical View, Logical View; Hadoop, Cassandra, Aerospike]

Think Bare Metal Virtualized Infrastructure for Linux, at scale – but without the VMs.

The devil is in the details

- Breaking up (disaggregation) is easy (in my pocket)
- Composable Infrastructure, at scale, is hard
  - Create secure durable bindings between servers and drives
  - Plumb end-to-end Linux storage stack over multiple protocols, multipathing, file systems, RAID configurations, hardware vendors

Aerospike: Architecture of a Real-Time Operational DBMS

Aerospike: Architecture of a Real-Time Operational DBMS

V. Srinivasan, Brian Bulkowski, Wei-Ling Chu, Sunil Sayyaparaju, Andrew Gooding, Rajkumar Iyer, Ashish Shinde, Thomas Lopatic. Aerospike, Inc. [email protected]

ABSTRACT

In this paper, we describe the solutions developed to address key technical challenges encountered while building a distributed database system that can smoothly handle demanding real-time workloads and provide a high level of fault tolerance. Specifically, we describe schemes for the efficient clustering and data partitioning for the automatic scale out of processing across multiple nodes and for optimizing the usage of CPUs, DRAM, SSDs and networks to efficiently scale up performance on one node. The techniques described here were used to develop Aerospike (formerly Citrusleaf), a high performance distributed database system built to handle the needs of today’s interactive online services. Most real-time decision systems that use Aerospike require very high scale and need to make decisions within a strict SLA by reading from, and writing to, a database containing billions of data items at a rate of millions of operations per second with sub-millisecond latency. For over five years, Aerospike has been continuously used in over a hundred successful production deployments, as many enterprises have discovered that it can substantially enhance their user experience.

... correct advertisement to a user, based on that user’s behavior. You can see the basic architecture of the ecosystem illustrated in Figure 1.

Figure 1: RTB technology stack

In order to participate in the real-time bidding [22] process, every participant in this ecosystem needs to have a high-performance ...

Memcached, Redis, and Aerospike Key-Value Stores Empirical Comparison

Memcached, Redis, and Aerospike Key-Value Stores Empirical Comparison

Anthony Anthony, University of Waterloo, 200 University Ave W, Waterloo, ON, Canada, +1 (226) 808-9489, [email protected]

Yaganti Naga Malleswara Rao, University of Waterloo, 200 University Ave W, Waterloo, ON, Canada, +1 (226) 505-5900, [email protected]

ABSTRACT

The popularity of NoSQL databases and the availability of larger DRAM have generated great interest in in-memory key-value stores (kv-stores) in recent years. As a consequence, many similar kv-store projects/products have emerged. Besides the benchmarking results provided by the kv-store developers, which are usually tested under their favorable setups and scenarios, there are very limited comprehensive resources for users to decide which kv-store to choose given a specific workload/use-case. To provide users with an unbiased and up-to-date insight in selecting in-memory kv-stores, we conduct a study to empirically compare Redis, Memcached and Aerospike on equal ground by trying to ...

... project. Thus, the results are somewhat biased, as the tested DB setup might be set to give more advantage to one of the systems. We will discuss more in Section 8 (Related Work).

In this work, we conduct a thorough experimental evaluation by comparing three major key-value stores nowadays, namely Redis, Memcached, and Aerospike. We first elaborate on the databases that we tested in Section 3. Then, the evaluation methodology, which comprises experimental setups, i.e., single and cluster mode; benchmark setups including the description of YCSB, dataset configurations, types of workloads (i.e., read-heavy, balanced, write-heavy), and concurrent access; and evaluation metrics, will be discussed in Section 4.

Key Data Modeling Techniques

Key Data Modeling Techniques

Piyush Gupta, Director Customer Enablement, Aerospike

AEROSPIKE SUMMIT ‘19 | Proprietary & Confidential | All rights reserved. © 2019 Aerospike Inc

Aerospike is a "Record Centric", Distributed, NoSQL Database.

Relational Data Modeling – Table Centric Schema, 3rdNF

NoSQL Modeling: Record Centric Data Model

- De-normalization implies duplication of data
- Queries required dictate Data Model
- No “Joins” across Tables (No View Table generation)
- Aggregation (Multiple Data Entry) vs Association (Single Data Entry)
- “Consists of” vs “related to”

Before jumping into modeling your data ...

What do you want to achieve?

- Speed at Scale.
- Need Consistency & Multi-Record Transactions?
- Know your traffic.
- Know your data.

Model your data:

- Even a simple key-value lookup model can be optimized to significantly reduce TCO.
- Will you need secondary indexes?
- List your Queries upfront.
- Design de-normalized data model to address the queries.

Data Modeling is tightly coupled with reducing the Total Cost of Operations.

Aerospike Architecture Related Decisions

Namespaces – Select one or more. [Data Storage Options]

- Storage: RAM is fastest, file storage slowest. SSDs: RAM-like performance.
- ALL FLASH: TCO advantage for petabyte stores/small size records. Latency penalty.

Aerospike API Features for Data Modeling

API Features to exploit for Data Modeling:

- Write policy - GEN_EQUAL for Compare-and-Set (Read-Modify-Write).
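The compare-and-set idea behind a generation-equality write policy can be illustrated without any database client. The sketch below is a hypothetical in-memory stand-in, not the Aerospike API: `TinyStore`, `put_if_generation`, `GenerationMismatch` and `increment` are names invented here. It assumes each record carries a generation counter that increases on every write, and that a write is rejected when the caller's expected generation no longer matches.

```python
class GenerationMismatch(Exception):
    """Raised when the record changed between the read and the write."""


class TinyStore:
    """Hypothetical in-memory store; every record carries a generation counter."""

    def __init__(self):
        self._records = {}  # key -> (generation, value)

    def get(self, key):
        # A missing record is reported as generation 0 with no value.
        return self._records.get(key, (0, None))

    def put_if_generation(self, key, value, expected_gen):
        # Write only if nobody else has written since the caller's read.
        current_gen, _ = self._records.get(key, (0, None))
        if current_gen != expected_gen:
            raise GenerationMismatch(
                f"expected generation {expected_gen}, found {current_gen}")
        self._records[key] = (current_gen + 1, value)


def increment(store, key, retries=5):
    """Read-modify-write loop: re-read and retry when the generation check fails."""
    for _ in range(retries):
        gen, value = store.get(key)
        try:
            store.put_if_generation(key, (value or 0) + 1, gen)
            return
        except GenerationMismatch:
            continue
    raise RuntimeError("too much contention")


store = TinyStore()
increment(store, "views")
increment(store, "views")
print(store.get("views"))
```

The design choice is optimistic concurrency: no lock is held between the read and the write, and a conflicting writer is detected only at write time, at the cost of a retry.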

Accelerating Memcached on AWS Cloud FPGAs

Accelerating Memcached on AWS Cloud FPGAs

Jongsok Choi, Ruolong Lian, Zhi Li, Andrew Canis, and Jason Anderson. LegUp Computing Inc., 88 College St., Toronto, ON, Canada, M5G 1L4. [email protected]

ABSTRACT

In recent years, FPGAs have been deployed in data centres of major cloud service providers, such as Microsoft [1], Amazon [2], Alibaba [3], Tencent [4], Huawei [5], and Nimbix [6]. This marks the beginning of bringing FPGA computing to the masses, as being in the cloud, one can access an FPGA from anywhere.

A wide range of applications are run in the cloud, including web servers and databases among many others. Memcached is a high-performance in-memory object caching system, which acts as a caching layer between web servers and databases. It is used by many companies, including Flickr, Wikipedia, WordPress, and Facebook [7, 8].

In this paper, we present a Memcached accelerator implemented on the AWS FPGA cloud (F1 instance). Compared to AWS ElastiCache, an AWS-managed CPU Memcached service, our Memcached accelerator provides up to 9× better throughput and latency. A live demo of the Memcached accelerator running on F1 can be accessed on our website [9].

Figure 1: Memcached deployment architecture.

Another historic impediment to FPGA accessibility lies in their programmability or ease-of-use. FPGAs have traditionally been difficult to use, requiring expert hardware designers to use low-level RTL code to design circuits. In order for FPGAs to become mainstream computing devices, they must be as easy to use as CPUs and GPUs. There has ...
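The excerpt describes Memcached as a caching layer between web servers and databases. One common way an application uses such a layer is the cache-aside pattern, sketched below under stated assumptions: plain dictionaries stand in for both Memcached and the database, and the `CacheAside` class and its `db_reads` counter are hypothetical names used only for illustration. This is not the deployment from the paper.

```python
class CacheAside:
    """Cache-aside sketch: check the cache first, fall back to the database."""

    def __init__(self, database: dict):
        self.database = database  # stand-in for the slower backing database
        self.cache = {}           # stand-in for an in-memory cache such as Memcached
        self.db_reads = 0         # counts how often the database is actually read

    def get(self, key):
        if key in self.cache:      # cache hit: the database is not touched
            return self.cache[key]
        self.db_reads += 1         # cache miss: read from the database
        value = self.database.get(key)
        if value is not None:
            self.cache[key] = value  # populate the cache for later reads
        return value

    def put(self, key, value):
        self.database[key] = value   # write to the database first
        self.cache.pop(key, None)    # then invalidate the stale cache entry


app = CacheAside({"user:1": "Ada"})
first = app.get("user:1")    # miss, read from the database
second = app.get("user:1")   # hit, served from the cache
app.put("user:1", "Grace")   # update invalidates the cached copy
third = app.get("user:1")    # miss again, reads the new value
print(first, second, third, app.db_reads)
```

Invalidating on write rather than updating the cache in place keeps the sketch simple; real deployments also have to handle expiry and concurrent writers, which are out of scope here.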

Best Practices

Cloud Container Engine Best Practices

Issue 01, Date 2021-09-07. HUAWEI TECHNOLOGIES CO., LTD.

Copyright © Huawei Technologies Co., Ltd. 2021. All rights reserved. No part of this document may be reproduced or transmitted in any form or by any means without prior written consent of Huawei Technologies Co., Ltd.

Trademarks and Permissions

and other Huawei trademarks are trademarks of Huawei Technologies Co., Ltd. All other trademarks and trade names mentioned in this document are the property of their respective holders.

Notice

The purchased products, services and features are stipulated by the contract made between Huawei and the customer. All or part of the products, services and features described in this document may not be within the purchase scope or the usage scope. Unless otherwise specified in the contract, all statements, information, and recommendations in this document are provided "AS IS" without warranties, guarantees or representations of any kind, either express or implied. The information in this document is subject to change without notice. Every effort has been made in the preparation of this document to ensure accuracy of the contents, but all statements, information, and recommendations in this document do not constitute a warranty of any kind, express or implied.

Contents

- 1 Checklist for Deploying Containerized Applications in the Cloud
- 2 Cluster

Ultra-High Performance NoSQL Benchmarking

Ultra-High Performance NoSQL Benchmarking Analyzing Durability and Performance Tradeoffs Denis Nelubin, Director of Technology, Thumbtack Technology Ben Engber, CEO, Thumbtack Technology Overview As companies deal with ever larger amounts of data and increasingly demanding workloads, a new class of databases has taken hold. Dubbed “NoSQL”, these databases trade some of the features used by traditional relational databases in exchange for increased performance and/or partition tolerance. But as NoSQL solutions have proliferated and differentiated themselves (into key-value stores, document databases, graph databases, and “NewSQL”), trying to evaluate the database landscape for a particular class of problem becomes more and more difficult. In this paper we attempt to answer this question for one specific, but critical, class of functionality – applications that need the highest possible raw performance for a reliable storage engine. There have been a few attempts to provide standardized tools to measure performance or other characteristics, but these have been hobbled by the lack of a clear mandate on exactly what they’re testing, plus an inability to measure load at the highest volumes. In addition, there is an implicit tradeoff between the consistency and durability requirements of an application and the maximum throughput that can be processed. What is needed is not an attempt to quantify every NoSQL solution into one artificial bucket, but a more systemic analysis of how some of these databases can achieve under assumptions that mirror real-world application needs. We attempted to provide a comprehensive answer to one specific set of use cases for NoSQL databases -- consumer-facing applications which require extremely high throughput and low latency, and whose information can be represented using a key-value schema. -

Snapdeal Case Study

Snapdeal, India’s Largest Online Marketplace, Accelerates Personalized Web Experience With Aerospike In-memory NoSQL Database (fueled by Aerospike)

In India, Internet retail accounted for $3 billion in 2013 and is expected to swell to $22 billion in the next five years. Still, with a total consumer market valued at $450 billion, India has been called the world’s last major frontier for e-commerce. Leading the industry in addressing this opportunity is Snapdeal, India’s largest online marketplace.

Snapdeal empowers sellers across the country to provide consumers with a fully responsive and intuitive online shopping experience through an advanced platform that merges logistics subsystems with cutting-edge online and mobile payment models. The platform has a wide range of products from thousands of national, international and regional brands.

Snapdeal.com now has a network of more than 20,000 sellers, serving 20 million-plus members, or one out of every six Internet users in India. Powering this platform is the Aerospike flash and DRAM-optimized in-memory NoSQL database. By harnessing the real-time big data processing capabilities of ...

“There has been no need for maintenance with Aerospike database; ...

RESULTS

- Aerospike database maintains sub-millisecond latency on Amazon Elastic Compute Cloud (EC2) while managing 100 million-plus objects stored in 8GB of DRAM to support real-time dynamic pricing.
- Predictable low latency with 95-99% of transactions completing within 10 milliseconds, essential for enabling a responsive customer experience.
- Aerospike’s highly efficient use of resources enables Snapdeal to cost effectively deploy on just two servers in Amazon EC2.
- Full replication across the Amazon EC2 servers ensures business continuity.