Lecture Notes 8 1 Minimax Theory

Recommended publications

Improving on the James-Stein Estimator

Improving on the James-Stein Estimator. Yuzo Maruyama, The University of Tokyo. Abstract: In the estimation of a multivariate normal mean for the case where the unknown covariance matrix is proportional to the identity matrix, a class of generalized Bayes estimators dominating the James-Stein rule is obtained. It is noted that a sequence of estimators in our class converges to the positive-part James-Stein estimator. AMS 2000 subject classifications: Primary 62C10, 62C20; secondary 62A15, 62H12, 62F10, 62F15. Keywords and phrases: minimax, multivariate normal mean, generalized Bayes, James-Stein estimator, quadratic loss, improved variance estimator. 1. Introduction. Let $X$ and $S$ be independent random variables where $X$ has the $p$-variate normal distribution $N_p(\theta, \sigma^2 I_p)$ and $S/\sigma^2$ has the chi-square distribution $\chi^2_n$ with $n$ degrees of freedom. We deal with the problem of estimating the mean vector $\theta$ relative to the quadratic loss function $\|\delta - \theta\|^2/\sigma^2$. The usual minimax estimator $X$ is inadmissible for $p \ge 3$. James and Stein (1961) constructed the improved estimator $\delta^{JS} = \left(1 - ((p-2)/(n+2))\,S/\|X\|^2\right) X$, which is also dominated by the James-Stein positive-part estimator $\delta^{JS}_+ = \max(0, \delta^{JS})$ as shown in Baranchik (1964). We note that shrinkage estimators such as James-Stein's procedure are derived by using the vague prior information that $\lambda = \|\theta\|^2/\sigma^2$ is close to 0. It goes without saying that we would like to get significant improvement of risk when the prior information is accurate. Though $\delta^{JS}_+$ improves on $\delta^{JS}$ at $\lambda = 0$, it is not analytic and thus inadmissible.
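The two estimators named in the excerpt above can be sketched in a few lines of Python. This is only an illustration, not code from the paper: the function names are hypothetical, and the positive-part rule is read here in the usual way, as truncating the shrinkage factor at zero before multiplying by X. The sketch assumes p >= 3 and a nonzero X.

```python
def shrinkage_factor(x, s, n):
    """Factor 1 - ((p - 2) / (n + 2)) * S / ||X||^2 from the excerpt.

    x is the observed p-vector (a list of floats), s the observed S,
    and n the degrees of freedom of S / sigma^2. Assumes ||x|| > 0.
    """
    p = len(x)
    norm_sq = sum(v * v for v in x)
    return 1.0 - ((p - 2) / (n + 2)) * s / norm_sq


def james_stein(x, s, n):
    """James-Stein estimate: the shrinkage factor times x."""
    c = shrinkage_factor(x, s, n)
    return [c * v for v in x]


def james_stein_positive_part(x, s, n):
    """Positive-part variant (assumed reading): negative factors become 0."""
    c = max(0.0, shrinkage_factor(x, s, n))
    return [c * v for v in x]
```

When the factor is negative, the plain rule flips the sign of every coordinate, while the positive-part rule returns the zero vector; the two agree whenever the factor is nonnegative.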

Domination Over the Best Invariant Estimator Is, When Properly

DOCUMENT RESUME. ED 394 999; TM 024 995. AUTHOR: Brandwein, Ann Cohen; Strawderman, William E. TITLE: James-Stein Estimation. Program Statistics Research, Technical Report No. 89-86. INSTITUTION: Educational Testing Service, Princeton, N.J. REPORT NO: ETS-RR-89-20. PUB DATE: Apr 89. NOTE: 46p. PUB TYPE: Reports, Evaluative/Feasibility (142); Statistical Data (110). EDRS PRICE: MF01/PC02 Plus Postage. DESCRIPTORS: Equations (Mathematics); *Estimation (Mathematics); *Maximum Likelihood Statistics; *Statistical Distributions. IDENTIFIERS: *James Stein Estimation; Nonnormal Distributions; *Shrinkage. ABSTRACT: This paper presents an expository development of James-Stein estimation with substantial emphasis on exact results for nonnormal location models. The themes of the paper are: (1) the improvement possible over the best invariant estimator via shrinkage estimation is not surprising but expected from a variety of perspectives; (2) the amount of shrinkage allowable to preserve domination over the best invariant estimator is, when properly interpreted, relatively free from the assumption of normality; and (3) the potential savings in risk are substantial when accompanied by good quality prior information. Relatively, much less emphasis is placed on choosing a particular shrinkage estimator than on demonstrating that shrinkage should produce worthwhile gains in problems where the error distribution is spherically symmetric. In addition, such gains are relatively robust with respect to assumptions concerning distribution and loss. (Contains 1 figure and 53 references.)

DECISION THEORY APPLIED to an INSTRUMENTAL VARIABLES MODEL 1 Gary Chamberlain ABSTRACT This Paper Applies Some General Concepts

DECISION THEORY APPLIED TO AN INSTRUMENTAL VARIABLES MODEL. Gary Chamberlain. ABSTRACT: This paper applies some general concepts in decision theory to a simple instrumental variables model. There are two endogenous variables linked by a single structural equation; $k$ of the exogenous variables are excluded from this structural equation and provide the instrumental variables (IV). The reduced-form distribution of the endogenous variables conditional on the exogenous variables corresponds to independent draws from a bivariate normal distribution with linear regression functions and a known covariance matrix. A canonical form of the model has parameter vector $(\rho, \phi, \omega)$, where $\phi$ is the parameter of interest and is normalized to be a point on the unit circle. The reduced-form coefficients on the instrumental variables are split into a scalar parameter $\rho$ and a parameter vector $\omega$, which is normalized to be a point on the $(k-1)$-dimensional unit sphere; $\rho$ measures the strength of the association between the endogenous variables and the instrumental variables, and $\omega$ is a measure of direction. A prior distribution is introduced for the IV model. The parameters $\phi$, $\rho$, and $\omega$ are treated as independent random variables. The distribution for $\phi$ is uniform on the unit circle; the distribution for $\omega$ is uniform on the unit sphere with dimension $k-1$. These choices arise from the solution of a minimax problem. The prior for $\rho$ is left general. It turns out that given any positive value for $\rho$, the Bayes estimator of $\phi$ does not depend upon $\rho$; it equals the maximum-likelihood estimator. This Bayes estimator has constant risk; since it minimizes average risk with respect to a proper prior, it is minimax.

Minimax Theory

Copyright © 2008–2010 John Lafferty, Han Liu, and Larry Wasserman. Do Not Distribute. Chapter 36: Minimax Theory. Minimax theory provides a rigorous framework for establishing the best possible performance of a procedure under given assumptions. In this chapter we discuss several techniques for bounding the minimax risk of a statistical problem, including the Le Cam and Fano methods. 36.1 Introduction. When solving a statistical learning problem, there are often many procedures to choose from. This leads to the following question: how can we tell if one statistical learning procedure is better than another? One answer is provided by minimax theory, which is a set of techniques for finding the minimum, worst case behavior of a procedure. In this chapter we rely heavily on the following sources: Yu (2008), Tsybakov (2009) and van der Vaart (1998). 36.2 Definitions and Notation. Let $\mathcal{P}$ be a set of distributions and let $X_1, \ldots, X_n$ be a sample from some distribution $P \in \mathcal{P}$. Let $\theta(P)$ be some function of $P$. For example, $\theta(P)$ could be the mean of $P$, the variance of $P$ or the density of $P$. Let $\hat\theta = \hat\theta(X_1, \ldots, X_n)$ denote an estimator. Given a metric $d$, the minimax risk is $R_n \equiv R_n(\mathcal{P}) = \inf_{\hat\theta} \sup_{P \in \mathcal{P}} E_P[d(\hat\theta, \theta(P))]$ (36.1), where the infimum is over all estimators. The sample complexity is $n(\epsilon, \mathcal{P}) = \min\{n : R_n(\mathcal{P}) \le \epsilon\}$ (36.2). 36.3 Example. Suppose that $\mathcal{P} = \{N(\theta, 1) : \theta \in \mathbb{R}\}$ where $N(\theta, 1)$ denotes a Gaussian with mean $\theta$ and variance 1.
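The inf-sup structure of the minimax risk can be made concrete with a small grid search. The following sketch is an assumed illustration rather than anything from the chapter: it restricts attention to estimators of the form c times the sample mean in the N(theta, 1) model, restricts theta to [-1, 1], and lets squared error stand in for d. Because the class of estimators is restricted, the value it returns is only an upper bound on the minimax risk over that parameter set. All names are hypothetical.

```python
def linear_estimator_risk(c, theta, n):
    """Squared-error risk of c * (sample mean) under N(theta, 1).

    The variance term is c^2 / n and the squared bias is (1 - c)^2 * theta^2.
    """
    return c * c / n + (1.0 - c) ** 2 * theta * theta


def restricted_minimax(cs, thetas, n):
    """Minimize, over candidate c, the worst-case risk over candidate theta."""
    best_c, best_worst = None, float("inf")
    for c in cs:
        worst = max(linear_estimator_risk(c, t, n) for t in thetas)  # inner sup
        if worst < best_worst:                                       # outer inf
            best_c, best_worst = c, worst
    return best_c, best_worst


n = 4
cs = [i / 100 for i in range(101)]           # shrinkage constants in [0, 1]
thetas = [-1 + i / 50 for i in range(101)]   # parameter grid on [-1, 1]
c_star, value = restricted_minimax(cs, thetas, n)
print(c_star, value)
```

For this restricted problem the worst case sits at |theta| = 1, so calculus gives the minimizer c = n/(n+1) with worst-case risk 1/(n+1); the grid search should land on those values up to floating-point error.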

Lecture 16: October 4 Lecturer: Siva Balakrishnan

36-705: Intermediate Statistics, Fall 2019. Lecture 16: October 4. Lecturer: Siva Balakrishnan. In the last lecture we discussed the MSE and the bias-variance decomposition. We discussed briefly that finding uniformly optimal estimators (i.e. estimators with lowest possible MSE for every value of the unknown parameter) is hopeless, and then briefly discussed finding optimal unbiased estimators. We then introduced some quantities: the score and the Fisher information, and used these notions to discuss the Cramér-Rao bound, which provides a lower bound on the variance of any unbiased estimator. You might come across this terminology in your research: estimators that achieve the Cramér-Rao bound, i.e. are unbiased and achieve the Cramér-Rao lower bound on the variance, are often called efficient estimators. In many problems efficient estimators do not exist, and we often settle for a weaker notion of asymptotic efficiency, i.e. efficient but only as $n \to \infty$. Next week, we will show that in many cases the MLE is asymptotically efficient. The Cramér-Rao bound suggests that the MSE in a parametric model typically scales as $1/(nI_1(\theta))$. In essence $1/I_1(\theta)$ behaves like the variance (we will see this more clearly in the future), so that the MSE behaves as $\sigma^2/n$, which is exactly analogous to what we have seen before with averages (think back to Chebyshev/sub-Gaussian tail bounds). Supposing that the Fisher information is non-degenerate, i.e. that $I_1(\theta) > 0$, and treating the model and $\theta$ as fixed: $I_1(\theta)$ is a constant as $n \to \infty$.
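A short worked case may make the $1/(nI_1(\theta))$ scaling concrete. The Bernoulli model below is an assumed example chosen for illustration; it does not appear in the lecture excerpt. For $X_1, \ldots, X_n$ i.i.d. Bernoulli($\theta$) with $0 < \theta < 1$:

$$
\log p(x;\theta) = x\log\theta + (1-x)\log(1-\theta), \qquad
\frac{\partial}{\partial\theta}\log p(x;\theta) = \frac{x-\theta}{\theta(1-\theta)},
$$

$$
I_1(\theta) = E\!\left[\left(\frac{X-\theta}{\theta(1-\theta)}\right)^{2}\right]
= \frac{\theta(1-\theta)}{\theta^{2}(1-\theta)^{2}} = \frac{1}{\theta(1-\theta)},
\qquad
\frac{1}{nI_1(\theta)} = \frac{\theta(1-\theta)}{n}.
$$

The sample mean $\bar X$ is unbiased with $\mathrm{Var}(\bar X) = \theta(1-\theta)/n$, so in this model it attains the bound exactly, and $1/I_1(\theta) = \theta(1-\theta)$ plays the role of the variance $\sigma^2$ in $\sigma^2/n$.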

Improved Minimax Estimation of a Multivariate Normal Mean Under Heteroscedasticity

Bernoulli 21(1), 2015, 574–603. DOI: 10.3150/13-BEJ580. Improved minimax estimation of a multivariate normal mean under heteroscedasticity. ZHIQIANG TAN, Department of Statistics, Rutgers University, 110 Frelinghuysen Road, Piscataway, NJ 08854, USA. E-mail: [email protected] Consider the problem of estimating a multivariate normal mean with a known variance matrix, which is not necessarily proportional to the identity matrix. The coordinates are shrunk directly in proportion to their variances in Efron and Morris' (J. Amer. Statist. Assoc. 68 (1973) 117–130) empirical Bayes approach, whereas inversely in proportion to their variances in Berger's (Ann. Statist. 4 (1976) 223–226) minimax estimators. We propose a new minimax estimator, by approximately minimizing the Bayes risk with a normal prior among a class of minimax estimators where the shrinkage direction is open to specification and the shrinkage magnitude is determined to achieve minimaxity. The proposed estimator has an interesting simple form such that one group of coordinates are shrunk in the direction of Berger's estimator and the remaining coordinates are shrunk in the direction of the Bayes rule. Moreover, the proposed estimator is scale adaptive: it can achieve close to the minimum Bayes risk simultaneously over a scale class of normal priors (including the specified prior) and achieve close to the minimax linear risk over a corresponding scale class of hyper-rectangles. For various scenarios in our numerical study, the proposed estimators with extreme priors yield more substantial risk reduction than existing minimax estimators. Keywords: Bayes risk; empirical Bayes; minimax estimation; multivariate normal mean; shrinkage estimation; unequal variances.

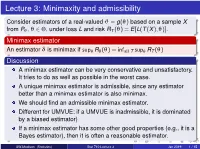

Stat 710: Mathematical Statistics Lecture 3

Lecture 3: Minimaxity and admissibility (UW-Madison Statistics, Stat 710, Jan 2019). Consider estimators of a real-valued $\vartheta = g(\theta)$ based on a sample $X$ from $P_\theta$, $\theta \in \Theta$, under loss $L$ and risk $R_T(\theta) = E[L(T(X), \theta)]$. Minimax estimator: An estimator $\delta$ is minimax if $\sup_\theta R_\delta(\theta) = \inf_{\text{all } T} \sup_\theta R_T(\theta)$. Discussion: A minimax estimator can be very conservative and unsatisfactory. It tries to do as well as possible in the worst case. A unique minimax estimator is admissible, since any estimator better than a minimax estimator is also minimax. We should find an admissible minimax estimator. (This differs from the UMVUE case: if a UMVUE is inadmissible, it is dominated by a biased estimator.) If a minimax estimator has some other good properties (e.g., it is a Bayes estimator), then it is often a reasonable estimator. The following result shows when a Bayes estimator is minimax. Theorem 4.11 (minimaxity of a Bayes estimator): Let $\Pi$ be a proper prior on $\Theta$ and $\delta$ be a Bayes estimator of $\vartheta$ w.r.t. $\Pi$. Suppose $\delta$ has constant risk on $\Theta_\Pi$. If $\Pi(\Theta_\Pi) = 1$, then $\delta$ is minimax. If, in addition, $\delta$ is the unique Bayes estimator w.r.t. $\Pi$, then it is the unique minimax estimator. Proof: Let $T$ be any other estimator of $\vartheta$. Then $\sup_{\theta \in \Theta} R_T(\theta) \ge \int_{\Theta_\Pi} R_T(\theta)\,d\Pi \ge \int_{\Theta_\Pi} R_\delta(\theta)\,d\Pi = \sup_{\theta \in \Theta} R_\delta(\theta)$. If $\delta$ is the unique Bayes estimator, then the second inequality in the previous expression should be replaced by $>$ and, therefore, $\delta$ is the unique minimax estimator.
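A classical case where the hypotheses of the theorem above can be checked numerically is the binomial proportion under squared error loss. The example is an assumption added for illustration and is not part of the lecture excerpt: with X ~ Binomial(n, p) and a Beta(sqrt(n)/2, sqrt(n)/2) prior, which is proper, the Bayes estimator is the posterior mean (X + sqrt(n)/2) / (n + sqrt(n)). The sketch below computes its exact risk on a grid of p to check that the risk is constant, which is the condition the theorem needs. Function names are hypothetical.

```python
from math import comb, sqrt


def exact_risk(estimator, n, p):
    """E[(estimator(X) - p)^2] for X ~ Binomial(n, p), by exact summation."""
    return sum(
        comb(n, x) * p**x * (1 - p) ** (n - x) * (estimator(x) - p) ** 2
        for x in range(n + 1)
    )


def bayes_estimator(n):
    """Posterior mean under the Beta(sqrt(n)/2, sqrt(n)/2) prior (assumed example)."""
    a = sqrt(n) / 2
    return lambda x: (x + a) / (n + 2 * a)


n = 16
grid = [i / 20 for i in range(21)]                       # p = 0, 0.05, ..., 1
bayes_risks = [exact_risk(bayes_estimator(n), n, p) for p in grid]
mle_risks = [exact_risk(lambda x: x / n, n, p) for p in grid]

print(min(bayes_risks), max(bayes_risks))  # should agree: the risk is flat in p
print(max(mle_risks))                      # worst case of X / n, for comparison
```

Algebraically the risk of this Bayes estimator is 1 / (4 (1 + sqrt(n))^2) for every p, so it is minimax by the theorem, whereas X / n has worst-case risk 1 / (4n), attained at p = 1/2, which is larger.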

On the Bayesness, Minimaxity and Admissibility of Point Estimators of Allelic Frequencies Carlos Alberto Martínez , Khitij

On the Bayesness, minimaxity and admissibility of point estimators of allelic frequencies. Carlos Alberto Martínez (1, 2), Khitij Khare (2) and Mauricio A. Elzo (1). (1) Department of Animal Sciences, University of Florida, Gainesville, FL, USA. (2) Department of Statistics, University of Florida, Gainesville, FL, USA. Correspondence: C. A. Martínez, Department of Animal Sciences, University of Florida, Gainesville, FL 32611, USA. Tel: 352-328-1624. Fax: 352-392-7851. E-mail: [email protected] Abstract: In this paper, decision theory was used to derive Bayes and minimax decision rules to estimate allelic frequencies and to explore their admissibility. Decision rules with uniformly smallest risk usually do not exist and one approach to solve this problem is to use the Bayes principle and the minimax principle to find decision rules satisfying some general optimality criterion based on their risk functions. Two cases were considered, the simpler case of biallelic loci and the more complex case of multiallelic loci. For each locus, the sampling model was a multinomial distribution and the prior was a Beta (biallelic case) or a Dirichlet (multiallelic case) distribution. Three loss functions were considered: squared error loss (SEL), Kullback-Leibler loss (KLL) and quadratic error loss (QEL). Bayes estimators were derived under these three loss functions and were subsequently used to find minimax estimators using results from decision theory. The Bayes estimators obtained from SEL and KLL turned out to be the same. Under certain conditions, the Bayes estimator derived from QEL led to an admissible minimax estimator (which was also equal to the maximum likelihood estimator). The SEL also allowed finding admissible minimax estimators.

Minimax Admissible Estimation of a Multivariate Normal Mean and Improvement Upon the James-Stein Estimator

Minimax Admissible Estimation of a Multivariate Normal Mean and Improvement upon the James-Stein Estimator. By Yuzo Maruyama, Faculty of Mathematics, Kyushu University. A dissertation submitted to the Graduate School of Economics of University of Tokyo in partial fulfillment of the requirements for the degree of Doctor of Economics. June, 2000 (Revised December, 2000). Abstract: This thesis mainly focuses on the estimation of a multivariate normal mean, from the decision-theoretic point of view. We concentrate our energies on investigating a class of estimators satisfying two optimalities: minimaxity and admissibility. We show that all classes of minimax admissible generalized Bayes estimators in the hitherto researches are divided in two, on the basis of whether a certain function of the prior distribution is bounded or not. Furthermore by using Stein's idea, we propose a new class of minimax admissible estimators. The relations among minimaxity, a prior distribution and a shrinkage factor of the generalized Bayes estimator are also investigated. For the problem of finding admissible estimators which dominate the inadmissible James-Stein estimator, we have some results. Moreover, in non-normal case, we consider the problem of proposing admissible minimax estimators. For a certain class of spherically symmetric distributions which includes at least multivariate-t distributions, admissible minimax estimators are derived. Acknowledgements: I am indebted to my adviser, Professor Tatsuya Kubokawa, for his guidance and encouragement during the research for and preparation of this thesis.
I wish to take opportunity to express my deep gratitude to Professors of Graduate School of Economics, University of Tokyo: Akimichi Takemura, Naoto Kunitomo, Yoshihiro Yajima, Kazumitsu Nawata and Nozomu Matsubara, and to Professors of Faculty of Mathematics, Kyushu University: Takashi Yanagawa, Sadanori Konishi, Hiroto Hyakutake, Yoshihiko Maesono, Masayuki Uchida and Kaoru Fueda, for their encouragement and suggestions.

Lecture 9 — October 20 9.1 Recap

STATS 300A: Theory of Statistics, Fall 2015. Lecture 9: October 20. Lecturer: Lester Mackey. Scribe: Poorna Kumar, Shengjie (Jessica) Zhang. Warning: These notes may contain factual and/or typographic errors. 9.1 Recap. Let's take a moment to recall what we have covered so far and then give a brief preview of what we will cover in the future. We have been studying optimal point estimation. First, we showed that uniform optimality is typically not an attainable goal. Thus, we need to look for other meaningful optimality criteria. One approach is to find optimal estimators from classes of estimators possessing certain desirable properties, such as unbiasedness and equivariance. Another approach is to collapse the risk function. Examples of this approach include Bayesian estimation (minimizing the average risk) and minimax estimation (minimizing the worst-case risk). After the midterm, we will study these same principles in the context of hypothesis testing. Today, we will complete our discussion of average risk optimality and introduce the notion of minimax optimality. Example 1. Let $X_1, \ldots, X_n \sim N(\theta, \sigma^2)$, with $\sigma^2 > 0$ known. From last lecture, we know that $\bar X$ is not a Bayes estimator for $\theta$ under squared error in the normal location model. However, $\bar X$ does minimize a form of average risk: it minimizes the average risk with respect to the Lebesgue measure, that is, with respect to the density $\pi(\theta) = 1$ for all $\theta$. We call this choice of $\pi$ an improper prior, since the integral $\int \pi(\theta)\,d\theta = \infty$ and hence $\pi$ does not define a proper probability distribution.

Minimax Estimation of Discrete Distributions Under L1 Loss

Minimax Estimation of Discrete Distributions under $\ell_1$ Loss. Yanjun Han, Student Member, IEEE, Jiantao Jiao, Student Member, IEEE, and Tsachy Weissman, Fellow, IEEE. Abstract: We consider the problem of discrete distribution estimation under $\ell_1$ loss. We provide tight upper and lower bounds on the maximum risk of the empirical distribution (the maximum likelihood estimator), and the minimax risk in regimes where the support size $S$ may grow with the number of observations $n$. We show that among distributions with bounded entropy $H$, the asymptotic maximum risk for the empirical distribution is $2H/\ln n$, while the asymptotic minimax risk is $H/\ln n$. Moreover, we show that a hard-thresholding estimator oblivious to the unknown upper bound $H$ is essentially minimax. However, if we constrain the estimates to lie in the simplex of probability distributions, then the asymptotic minimax risk is again $2H/\ln n$. We draw connections between our work and the literature on density estimation, entropy estimation, total variation distance ($\ell_1$ divergence) estimation, joint distribution estimation in stochastic processes, normal mean estimation, and [...] 2) High dimensional asymptotics: we let the support size $S$ and the number of observations $n$ grow together, characterize the scaling under which consistent estimation is feasible, and obtain the minimax rates. 3) Infinite dimensional asymptotics: the distribution $P$ may have infinite support size, but is constrained to have bounded entropy $H(P) \le H$, where the entropy [1] is defined as $H(P) \triangleq \sum_{i=1}^{S} -p_i \ln p_i$ (1). We remark that results for the first regime follow from the well-developed theory of asymptotic statistics [2, Chap.

Improving on the James-Stein Estimator

Improving on the James-Stein Estimator. Yuzo Maruyama. Abstract: In the estimation of a multivariate normal mean for the case where the unknown covariance matrix is proportional to the identity matrix, a class of generalized Bayes estimators dominating the James-Stein rule is obtained. It is noted that a sequence of estimators in our class converges to the positive-part James-Stein estimator. AMS classification numbers: 62C10, 62C20, 62A15, 62H12, 62F10, 62F15. Key words and phrases: minimax, multivariate normal mean, generalized Bayes, James-Stein estimator, quadratic loss, improved variance estimator. 1 Introduction. Let $X$ and $S$ be independent random variables where $X$ has the $p$-variate normal distribution $N_p(\theta, \sigma^2 I_p)$ and $S/\sigma^2$ has the chi-square distribution $\chi^2_n$ with $n$ degrees of freedom. We deal with the problem of estimating the mean vector $\theta$ relative to the quadratic loss function $\|\delta - \theta\|^2/\sigma^2$. The usual minimax estimator $X$ is inadmissible for $p \ge 3$. James and Stein [6] constructed the improved estimator $\delta^{JS} = [1 - ((p-2)/(n+2))\,S/\|X\|^2]\,X$, which is also dominated by the James-Stein positive-part estimator $\delta^{JS}_+ = \max(0, \delta^{JS})$ as shown in Baranchik [2]. We note that shrinkage estimators such as James-Stein's procedure are derived by using the vague prior information that $\lambda = \|\theta\|^2/\sigma^2$ is close to 0. It goes without saying that we would like to get significant improvement of risk when the prior information is accurate. Though $\delta^{JS}_+$ improves on $\delta^{JS}$ at $\lambda = 0$, it is not analytic and thus inadmissible. Kubokawa [7] showed that one minimax generalized Bayes estimator derived by Lin and Tsai [10] dominates $\delta^{JS}$.