More Usable Recommendation Systems for Improving Software Quality

Total Pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

Servoy Stuff Browser Suite FAQ

Servoy Stuff Browser Suite FAQ

Please read carefully: the following contains important information about the use of the Browser Suite in its current version.

What is it? It is a suite of native bean components and plugins, all Servoy-aware and tightly integrated with the Servoy platform:
- a browser bean
- a Flash player bean
- an HTML editor bean
- a client plugin to rule them all
- a server plugin to set up a few admin flags
- an installer to help deployment

What version of Servoy is supported?
- Servoy 5+
Servoy 4.1.8+ will be supported, starting with version 0.9.40+, except for Mac OS X developer (the client will work though).

What OS/platforms are currently supported?
In Developer:
- Windows, all platforms
- Mac OS X 10.5+
- Linux, GTK based
In Smart client:
- Windows, all platforms
- Mac OS X 10.5+
- Linux, GTK based
The web client is not currently supported, although limited support might come in the future if there is enough demand for it…

What Java versions are currently supported?
- Windows: Java 5 or Java 6 (except updates 10 to 14) – 32 and 64-bit
- Mac OS X: Java 5 or Java 6 – 32 and 64-bit
- Linux: Java 6 (except updates 10 to 14) – 32 and 64-bit

Where can I get it? http://www.servoyforge.net/projects/browsersuite

What is it made of? It is built on the powerful DJ-NativeSwing project ( http://djproject.sourceforge.net/ns ) v0.9.9. This library uses the Eclipse SWT project ( http://www.eclipse.org/swt ) v3.6.1 and various other projects like the JNA project ( https://jna.dev.java.net/ ) v3.2.4. The XULRunner -

Chapter 1 Introduction to Computers, Programs, and Java

Chapter 1 Introduction to Computers, Programs, and Java

1.1 Introduction
• The central theme of this book is to learn how to solve problems by writing a program.
• This book teaches you how to create programs by using the Java programming language.
• Java is the Internet programming language.
• Why Java? The answer is that Java enables users to deploy applications on the Internet for servers, desktop computers, and small hand-held devices.

1.2 What is a Computer?
• A computer is an electronic device that stores and processes data.
• A computer includes both hardware and software.
o Hardware is the physical aspect of the computer that can be seen.
o Software is the invisible instructions that control the hardware and make it work.
• Computer programming consists of writing instructions for computers to perform.
• A computer consists of the following hardware components:
o CPU (Central Processing Unit)
o Memory (main memory)
o Storage devices (hard disk, floppy disk, CDs)
o Input/output devices (monitor, printer, keyboard, mouse)
o Communication devices (modem, NIC (Network Interface Card))

[FIGURE 1.1: A computer consists of a CPU, memory, hard disk, floppy disk, monitor, printer, and communication devices, all connected by a bus.]

CMPS161 Class Notes (Chap 01), Kuo-pao Yang

1.2.1 Central Processing Unit (CPU)
• The central processing unit (CPU) is the brain of a computer.
• It retrieves instructions from memory and executes them. -

Programming Languages and Methodologies

Personal Information: Şekip Kaan EKİN, Game Developer at Alictus. Mobile: +90 (532) 624 44 55 E-mail: [email protected] Website: www.kaanekin.com GitHub: https://github.com/sekin72 LinkedIn: www.linkedin.com/in/%C5%9Fekip-kaan-ekin-326646134/

Education: 2014 – 2019 B.Sc. Computer Engineering, Bilkent University, Ankara, Turkey (Language of Education: English). 2011 – 2014 Macide – Ramiz Taşkınlar Science High School, Akhisar/Manisa, Turkey. 2010 – 2011 Manisa Science High School, Manisa, Turkey. 2002 – 2010 Misak-ı Milli Ali Şefik Primary School, Akhisar/Manisa, Turkey.

Languages: Turkish: Native Speaker. English: Proficient.

Programming Languages and Methodologies: I am proficient and experienced in C#, Java, NoSQL and SQL, and wrote most of my projects in these languages. I am also experienced with the Scrum development methodology. I have some experience in C++, Python and HTML; the rest of my projects are written in them. I have less experience in MATLAB, SystemVerilog, MIPS Assembly and MARS.

Courses Taken: Algorithms and Programming I-II (CS 101-102); Fundamental Structures of Computer Science I-II (CS 201-202); Algorithms I (CS 473); Object-Oriented Software Engineering (CS 319); Introduction to Machine Learning (CS 464); Artificial Intelligence (CS 461); Database Systems (CS 353); Game Design and Research (COMD 354); Software Engineering Project Management (CS 413); Software Product Line Engineering (CS 415); Application Lifecycle Management (CS 453); Software Verification and Validation (CS 458); Animation and Film/Television Graphics I-II (GRA 215-216); Automata Theory and Formal -

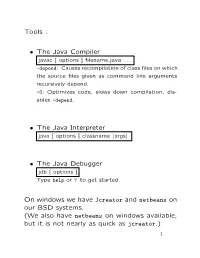

The Java Compiler • the Java Interpreter • the Java Debugger On

Tools:
• The Java Compiler: javac [ options ] filename.java
  -depend: Causes recompilation of class files on which the source files given as command line arguments recursively depend.
  -O: Optimizes code, slows down compilation, disables -depend.
• The Java Interpreter: java [ options ] classname <args>
• The Java Debugger: jdb [ options ]. Type help or ? to get started.

On Windows we have JCreator, and on our BSD systems NetBeans. (We also have NetBeans available on Windows, but it is not nearly as quick as JCreator.)

The simplest program (Hello.java):
public class Hello { public static void main(String[] args) { System.out.println("Hello."); } }
Executing this program prints the sentence “Hello.” to the console.

A bit more complex (Second.java):
public class Second { public static void main(String[] input) { for (int i = input.length - 1; i >= 0; i--) System.out.print(input[i] + " "); System.out.print("\n"); } }
Executing this program prints the command-line arguments to the console in reverse order, each followed by one blank space.

Primitive data types:
• byte : 8-bit signed integer, [-128 , 127]
• char : 16-bit unsigned integer, [0 , 65535]. This character set offers 65536 distinct Unicode code units.
• short : 16-bit signed integer, [-32768 , 32767]
• int : 32-bit signed integer, [-2147483648 , 2147483647]
• long : 64-bit signed integer, [-9223372036854775808 , 9223372036854775807]
• float : 32-bit float, positive values from 1.40129846e–45 to 3.40282347e+38
• double : 64-bit float, positive values from 4.94065645841246544e–324 to 1.79769313486231570e+308
• boolean : such a variable can take on -
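The ranges in the primitive-type list above do not have to be memorized: the standard wrapper classes in java.lang expose them as constants. The following is a minimal sketch, not part of the original notes; the class name PrimitiveRanges and its helper methods are made up for illustration.

```java
public class PrimitiveRanges {

    // Range of char as numeric values; the cast to int avoids printing
    // the characters themselves.
    public static String charRange() {
        return "[" + (int) Character.MIN_VALUE + ", " + (int) Character.MAX_VALUE + "]";
    }

    // Range of long, read from the wrapper-class constants.
    public static String longRange() {
        return "[" + Long.MIN_VALUE + ", " + Long.MAX_VALUE + "]";
    }

    public static void main(String[] args) {
        System.out.println("byte   : [" + Byte.MIN_VALUE + ", " + Byte.MAX_VALUE + "]");
        System.out.println("char   : " + charRange());
        System.out.println("short  : [" + Short.MIN_VALUE + ", " + Short.MAX_VALUE + "]");
        System.out.println("int    : [" + Integer.MIN_VALUE + ", " + Integer.MAX_VALUE + "]");
        System.out.println("long   : " + longRange());
        // For float and double, MIN_VALUE is the smallest positive value,
        // not the most negative one.
        System.out.println("float  : " + Float.MIN_VALUE + " to " + Float.MAX_VALUE);
        System.out.println("double : " + Double.MIN_VALUE + " to " + Double.MAX_VALUE);
    }
}
```

Compile and run it with the tools described above: javac PrimitiveRanges.java, then java PrimitiveRanges.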

Step by Step Tutorial: How to Build a Plugin for Servoy

How to build a plugin for Servoy - A step by step tutorial brought to you by Servoy Stuff

PART 3

J. The IScriptObject interface

In the first part of this tutorial we went through the tedious details of configuring our Servoy/Eclipse environment and set up our project “WhoisPlugin”; now it’s time to actually build the plugin. Then in the second part, we implemented the IScriptPlugin using (and describing as we went along) the Eclipse IDE; we learned to rely on the API and to use the interface in an efficient way. Now it is (finally!) time to implement our second main class, the “WhoisPluginProvider” class, for which we already have a stub nicely prepared for us by Eclipse. As you remember, all we have to do is to follow the “TODO” task trail and implement each method one after the other. Or so it seems… But first, we need to take a good look at the IScriptObject interface that our WhoisPluginProvider class is going to implement, so let’s browse to the public API and click on the relevant link to get the interface definition. We’re in luck because this interface doesn’t extend any other, so we only have to implement the methods above. Now, let’s look at them in detail:
• getAllReturnedTypes() should return a Class array (“Class” is a Java class that defines the type of class). -
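To make "return a Class array" concrete, here is a small standalone sketch. It deliberately does not import or implement the real Servoy IScriptObject interface, so it compiles without the Servoy libraries; the class ReturnedTypesSketch and the nested WhoisResult type are hypothetical names invented for this illustration, and only the method name getAllReturnedTypes() comes from the excerpt above.

```java
import java.util.Arrays;

public class ReturnedTypesSketch {

    // Hypothetical value type that a plugin might hand back to scripts.
    public static class WhoisResult {
        public final String domain;

        public WhoisResult(String domain) {
            this.domain = domain;
        }
    }

    // Mirrors the shape described in the excerpt: a method returning an
    // array of Class objects, one entry per type the plugin exposes.
    public Class<?>[] getAllReturnedTypes() {
        return new Class<?>[] { WhoisResult.class };
    }

    public static void main(String[] args) {
        Class<?>[] types = new ReturnedTypesSketch().getAllReturnedTypes();
        System.out.println(Arrays.toString(types));
    }
}
```

A class literal such as WhoisResult.class is the usual way to obtain the Class object for a known type at compile time.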

Curriculum Vitae

Curriculum Vitae

PERSONAL DETAILS
● Name: Juan Andrés Moreno Rubio
● Date of birth: 02-03-1980
● Place of birth: Plasencia (Cáceres)
● Nationality: Spanish
● Phone: 676875420
● E-mail: [email protected]
● Lives in: Barcelona.
● Experience: 12 years

EDUCATION
● UNIVERSITY DEGREE
○ Degree in Computer Engineering (Licenciado en Ingeniería Informática) from the Universidad de Extremadura (Escuela Politécnica de Cáceres).
○ Period: 1999 - February 2008. (The last 2 years on the final-year project (PFC) only.)
● SECONDARY EDUCATION
○ Curso de Orientación Universitaria (university-preparation course) completed in 1999 and Bachiller diploma obtained in 1998 at the Instituto Valle del Jerte (Plasencia).

SKILLS
● COMPUTER SKILLS
○ CMSs, tools and web production environments (Advanced): WordPress, Joomla, Magnolia CMS, Tridion, Macromedia Dreamweaver, FrontPage and various Eclipse plugins for web development. RapidWeaver (Mac), XWiki.
○ JavaScript frameworks (Advanced): NodeJS, ReactJS (redux/reflux), webpack2, jQuery, Prototype, MooTools, ember.js. Mustache and Jade for templating.
○ Development IDEs (Advanced): Atom, Cloud9, WebRatio, Eclipse, Aptana Studio, LAMP, IBM Rational Software Architect, Servoy, NetBeans, IntelliJ IDEA, Coda.
○ Operating systems (Advanced): Windows, Linux and Mac OS.
○ Application servers (Advanced): Tomcat, JBoss, WebLogic, Resin, Jetty, Nginx and Apache. Amazon WS and Google Cloud Platform.
○ Programming (Advanced): Assembly, Visual Basic, Pascal FC, C, C++, Java. Shell script and PHP scripting. ES5 and ES6 (JavaScript).
○ Web development (Advanced): HTML, JavaScript, CSS, LESS, SASS, Velocity, PHP, JSP, XML, XSLT, J2EE and ActionScript.
○ Databases (Intermediate): Knowledge of the design, administration and maintenance of Oracle, MySQL, PostgreSQL and Hypersonic databases, and command of the SQL language. Redis. Mongo.

CHRONOLOGICAL CURRICULUM VITAE. Juan Andrés Moreno Rubio
○ Java frameworks (Intermediate/Advanced): Spring, Struts, FreeMarker, Hibernate, iBATIS, JEE,. -

2008 BZ Research Eclipse Adoption Study

5th Annual Eclipse Adoption Study, November 2008 (With comparisons to November 2007, November 2006, November 2005 and September 2004 Studies)

7 High Street, Suite 407, Huntington, NY 11743. 631-421-4158. www.bzresearch.com. © BZ Research November 2008

Table of Contents
Methodology
Universe Selection
Question 1. Do the developers within your organization use Eclipse or Eclipse-based tools?
Question 2. Which version(s) of Eclipse are you using?
Question 3. How long have you been using Eclipse or Eclipse-based tools and technologies (either at work, or for your personal projects)?
Question 4. What type of software are you (or your organization) developing using Eclipse-based tools and technologies? (Note: OSI refers to Open Source Initiative, see www.opensource.org for more information.) -

Brief Outline of Contents This Course Is Designed to Familiarize The

Brief Outline of Contents
This course is designed to familiarize the student with the advanced concepts and techniques in Java programming. Topics include: comprehensive coverage of object-oriented programming with cooperating classes; implementation of polymorphism with inheritance and interfaces; programming with generics and Java collections; programming with exceptions, stream input/output, and graphical AWT and Swing.

Prerequisites
Programming in Java or its equivalent.

Learning Goals
Understand the following: the relationships between classes; specification vs. implementation.
Be able to: specify programs; implement many of the features of Java, Standard Edition; test Java programs.

Required Textbook
Y. Daniel Liang, "Introduction to Java Programming," Comprehensive Version, 7th Ed., Prentice Hall, 2007. ISBN-10: 0136012671 ISBN-13: 978-0136012672

Software Required
JRE: Java Runtime Environment
JDK: Any version >= 1.5 (J2SE JDK is fine)
IDE: Eclipse is fine (or any other IDE you are comfortable with, like JCreator or NetBeans)

Evaluation of Student Work
Student work will be evaluated as follows. Assignments: 60% Quizzes: 10% Final: 30%
There will be an assignment approximately every two weeks; possibly one week at times. Quizzes will be introduced as needed with approximately a week's notice. They will count for 10% of the grade. One quiz does not count, allowing you one absence from class. Otherwise: All pass: A; 80-89% passed: B; 70-79% passed: C; 60-69% passed: D.
Late homework will not be accepted unless there is a reason why it was not reasonably possible to perform the work in time given work and emergency conditions. In that case, the e-mailed written reason should be attached to the homework, which will be graded on a pass/fail basis if the reason is accepted by me. -

Ch1: Introduction

Ch1: Introduction. 305172 Computer Programming Laboratory. Jiraporn Pooksook, Naresuan University

Class Information
• Instructor: Jiraporn Pooksook (จิราพร พุกสุข)
• TA: Wanarat Juraphanthong (วนารัตน์)
• Lecture time & location: G1: Thurs 9-12 a.m., EN609; G2: Thurs 5-8 p.m., EN609
• Office: EE 214
• Office hour: By appointment
• Email: [email protected]
• Website: www.ecpe.nu.ac.th/jirapornpook

Assignment & Grading
• Homework: 10%
• Class Participation: 10%
• Midterm: 30%
• Final: 30%
• Project: 20%

Books & References
• Python for Kids: A Playful Introduction To Programming, by Jason R. Briggs. Retrieved from: https://books.google.co.th/books
• Python programming books written in Thai.
• Python tutorial: https://docs.python.org/3/tutorial/
• Video lecture of CU: https://www.youtube.com/watch?v=U2l1xgpVsuo

Tools
• Python 3.7.0: https://www.python.org/downloads/
• IDLE

Python Exercises
• Register at Code.org: https://studio.code.org/s/express/
• Finish tasks and report every week which level you have completed.

How to write a program
• Decide which language you want to code in.
• Find a good compiler/interpreter.
• Start writing code.

What are these words? (Programming language; compiler/interpreter; editors)
• C; GNU GCC 9.1; Turbo C, Borland C++, Visual C++, Visual Studio Code, Codeblocks
• C++; GNU GCC 9.1; Turbo C++, Borland C++, Visual C++, Visual Studio Code, Codeblocks
• Java; Java SE 12.0.1; JCreator, NetBeans, Eclipse
• Ruby; Ruby 2.6.3; Atom, Visual Studio Code
• Python; Python 3.7.3; Visual Studio Code, IDLE, PyCharm

Types of Programming Languages
• Static programming languages: Java, C, C++, etc.
– Declare the type of variables before use.
– Need a compiler to read all instructions first and then run them all at once. -

IDE's for Java, C

IDEs for Java, C, C++. David Rey - DREAM

Overview
• Introduction to IDEs
• Eclipse example: overview
• Eclipse: editing
• Eclipse: using a version control tool
• Eclipse: compiling/building/generating doc
• Eclipse: running/debugging
• Eclipse: testing
• Eclipse: tools to easily re-write code
• Conclusion

What is an IDE
• IDE = Integrated Development Environment
• IDE = EDI (Environnement de Développement Intégré) in French
• Generally language dependent (C/C++ specific IDEs, Java specific IDEs, no good ones yet for Fortran)
• Typical integrated development tools:
• editor (with auto-indent, auto-completion, colorization, …);
• version control;
• compiler/builder;
• documentation extractor;
• debugger;
• testing tool;
• refactoring tools.

What is not an IDE
• Just a great text editor
• A code generator
• A GUI designer
• A forge (e.g. GForge)

IDE examples - Java
• Eclipse (http://www.eclipse.org)
• JBuilder (http://www.borland.com/us/products/jbuilder/index.html - free for personal and non-commercial use)
• NetBeans (http://www.netbeans.org/)
• JCreator (http://www.jcreator.com/)
• …

IDE examples – C/C++
• Visual C++ - commercial license (http://msdn.microsoft.com/visualc)
• C++ Builder - commercial license (http://www.borland.com/us/products/cbuilder/index.html)
• Quincy (http://www.codecutter.net/tools/quincy/)
• Anjuta (http://anjuta.sourceforge.net/)
• KDevelop (http://www.kdevelop.org/)
• Code::Blocks (http://www.codeblocks.org/)
• BVRDE (http://bvrde.sourceforge.net/)
• RHIDE (http://www.rhide.com/)
• …

Overview
• Introduction -

Stanford University Medical Center Department of Pathology

STANFORD PATHOLOGY RESIDENT/FELLOW HANDBOOK 2011-12

Stanford University Medical Center, Department of Pathology. Resident and Clinical Fellow Handbook 2012-2013. 300 Pasteur Drive, Lane Bldg., Room L-235, Stanford, CA 93205-5324. Ph (650) 725-8383 Fx (650) 725-6902

TABLE OF CONTENTS
Overview
Trainee Selection
Anatomic Pathology (AP) Training
Clinical Pathology (CP) Training
Combined Anatomic & Clinical Pathology (AP/CP) Training
Combined Anatomic & Neuropathology (AP/NP) Training
Goals of the Residency Program

GENERAL INFORMATION
Regular Work Hours & Availability
Call Guidelines
Anatomic Pathology
Clinical Pathology
MedHub Residency Management System
Conferences -

Principles of Operating Systems

PROGRAMMING TECHNIQUES (Programovací techniky). STUDY MATERIALS INTENDED FOR STUDENTS OF FEI VŠB-TU OSTRAVA. PREPARED BY: MAREK BĚHÁLEK. OSTRAVA 2006

© This material was prepared as a study aid for students of the Programming Techniques course at FEI VŠB-TU Ostrava. Any other use is not permitted. Some parts are based on, or directly copy, the original teaching materials for the Programming Techniques course created by doc. Ing. Miroslav Beneš, Ph.D.

CONTENTS
1 Study guide
1.1 Characteristics of the Programming Techniques course
1.2 Further information on the Programming Techniques course
2 Tools for program development
2.1 Application development
2.2 Editor
2.3 Compiler
2.3.1 Compiling source files
2.3.2 Types of compiler
2.4 Linker
2.5 Version control tools
2.5.1 Concurrent Version System (CVS) .........................................