Aligning Design of Embodied Interfaces to Learner Goals

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Chapter 1 Introduction to Computers, Programs, and Java

1.1 Introduction
• The central theme of this book is to learn how to solve problems by writing a program.
• This book teaches you how to create programs by using the Java programming language.
• Java is the Internet programming language.
• Why Java? The answer is that Java enables users to deploy applications on the Internet for servers, desktop computers, and small hand-held devices.

1.2 What is a Computer?
• A computer is an electronic device that stores and processes data.
• A computer includes both hardware and software.
  o Hardware is the physical aspect of the computer that can be seen.
  o Software is the invisible instructions that control the hardware and make it work.
• Computer programming consists of writing instructions for computers to perform.
• A computer consists of the following hardware components:
  o CPU (Central Processing Unit)
  o Memory (main memory)
  o Storage devices (hard disk, floppy disk, CDs)
  o Input/output devices (monitor, printer, keyboard, mouse)
  o Communication devices (modem, NIC (Network Interface Card))

FIGURE 1.1 A computer consists of a CPU, memory, hard disk, floppy disk, monitor, printer, and communication devices. (Diagram: a bus connecting storage devices (e.g., disk, CD, and tape), memory, the CPU, communication devices (e.g., modem and NIC), input devices (e.g., keyboard, mouse), and output devices (e.g., monitor, printer).)

CMPS161 Class Notes (Chap 01), Kuo-pao Yang

1.2.1 Central Processing Unit (CPU)
• The central processing unit (CPU) is the brain of a computer.
• It retrieves instructions from memory and executes them.

Programming Languages and Methodologies

Personnel Information: Şekip Kaan EKİN Game Developer at Alictus Mobile: +90 (532) 624 44 55 E-mail: [email protected] Website: www.kaanekin.com GitHub: https://github.com/sekin72 LinkedIn: www.linkedin.com/in/%C5%9Fekip-kaan-ekin-326646134/ Education: 2014 – 2019 B.Sc. Computer Engineering Bilkent University, Ankara, Turkey (Language of Education: English) 2011 – 2014 Macide – Ramiz Taşkınlar Science High School, Akhisar/Manisa, Turkey 2010 – 2011 Manisa Science High School, Manisa, Turkey 2002 – 2010 Misak-ı Milli Ali Şefik Primary School, Akhisar/Manisa, Turkey Languages: Turkish: Native Speaker English: Proficient Programming Languages and Methodologies: I am proficient and experienced in C#, Java, NoSQL and SQL, wrote most of my projects using these languages. I am also experienced with Scrum development methodology. I have some experience in C++, Python and HTML, rest of my projects are written with them. I have less experience in MATLAB, System Verilog, MIPS Assembly and MARS. Courses Taken: Algorithms and Programming I-II CS 101-102 Fundamental Structures of Computer Science I-II CS 201-202 Algorithms I CS 473 Object-Oriented Software Engineering CS319 Introduction to Machine Learning CS 464 Artificial Intelligence CS 461 Database Systems CS 353 Game Design and Research COMD 354 Software Engineering Project Management CS 413 Software Product Line Engineering CS 415 Application Lifecycle Management CS 453 Software Verification and Validation CS 458 Animation and Film/Television Graphics I-II GRA 215-216 Automata Theory and Formal -

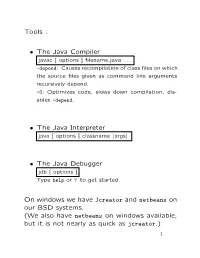

The Java Compiler • the Java Interpreter • the Java Debugger On

Tools:
• The Java Compiler: javac [ options ] filename.java
  o -depend: Causes recompilation of class files on which the source files given as command line arguments recursively depend.
  o -O: Optimizes code, slows down compilation, disables -depend.
• The Java Interpreter: java [ options ] classname <args>
• The Java Debugger: jdb [ options ]. Type help or ? to get started.

On Windows we have JCreator, and on our BSD systems we have NetBeans. (NetBeans is also available on Windows, but it is not nearly as quick as JCreator.)

The simplest program (Hello.java):

    public class Hello {
        public static void main(String[] args) {
            System.out.println("Hello.");
        }
    }

Executing this program would print to the console the sentence "Hello.".

A bit more complex (Second.java):

    public class Second {
        public static void main(String[] input) {
            for (int i = input.length - 1; i >= 0; i--)
                System.out.print(input[i] + " ");
            System.out.print("\n");
        }
    }

Executing this program would print to the console the arguments in reverse order, each followed by one blank space.

Primitive data types:
• byte: 8-bit signed integer, [-128, 127]
• char: 16-bit unsigned integer, [0, 65535]. This character set offers 65536 distinct Unicode characters.
• short: 16-bit signed integer, [-32768, 32767]
• int: 32-bit signed integer, [-2147483648, 2147483647]
• long: 64-bit signed integer, [-9223372036854775808, 9223372036854775807]
• float: 32-bit float, 1.40129846e-45 to 3.40282347e+38
• double: 64-bit float, 4.94065645841246544e-324 to 1.79769313486231570e+308
• boolean: such a variable can take on …
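The ranges of the primitive types can be checked against the constants that the standard wrapper classes expose (Byte.MIN_VALUE, Long.MAX_VALUE, and so on). The following is a minimal sketch rather than part of the original notes: the class name PrimitiveRanges and the helper describe are hypothetical names chosen for illustration.

```java
public class PrimitiveRanges {
    // Hypothetical helper: formats the inclusive range of an integral type.
    // byte, short, char, and int arguments are widened to long automatically.
    static String describe(String name, long min, long max) {
        return name + ": [" + min + ", " + max + "]";
    }

    public static void main(String[] args) {
        // Integral types: the bounds come from the standard wrapper classes.
        System.out.println(describe("byte", Byte.MIN_VALUE, Byte.MAX_VALUE));
        System.out.println(describe("short", Short.MIN_VALUE, Short.MAX_VALUE));
        System.out.println(describe("char", Character.MIN_VALUE, Character.MAX_VALUE));
        System.out.println(describe("int", Integer.MIN_VALUE, Integer.MAX_VALUE));
        System.out.println(describe("long", Long.MIN_VALUE, Long.MAX_VALUE));

        // Floating-point types: MIN_VALUE is the smallest positive value,
        // not the most negative one.
        System.out.println("float: " + Float.MIN_VALUE + " to " + Float.MAX_VALUE);
        System.out.println("double: " + Double.MIN_VALUE + " to " + Double.MAX_VALUE);
    }
}
```

Compile it with javac PrimitiveRanges.java and run it with java PrimitiveRanges. Note that for float and double, MIN_VALUE is the smallest positive nonzero value, which is why both ranges in the list start at a tiny positive number.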

Songs by Title Karaoke Night with the Patman

Songs By Title Karaoke Night with the Patman Title Versions Title Versions 10 Years 3 Libras Wasteland SC Perfect Circle SI 10,000 Maniacs 3 Of Hearts Because The Night SC Love Is Enough SC Candy Everybody Wants DK 30 Seconds To Mars More Than This SC Kill SC These Are The Days SC 311 Trouble Me SC All Mixed Up SC 100 Proof Aged In Soul Don't Tread On Me SC Somebody's Been Sleeping SC Down SC 10CC Love Song SC I'm Not In Love DK You Wouldn't Believe SC Things We Do For Love SC 38 Special 112 Back Where You Belong SI Come See Me SC Caught Up In You SC Dance With Me SC Hold On Loosely AH It's Over Now SC If I'd Been The One SC Only You SC Rockin' Onto The Night SC Peaches And Cream SC Second Chance SC U Already Know SC Teacher, Teacher SC 12 Gauge Wild Eyed Southern Boys SC Dunkie Butt SC 3LW 1910 Fruitgum Co. No More (Baby I'm A Do Right) SC 1, 2, 3 Redlight SC 3T Simon Says DK Anything SC 1975 Tease Me SC The Sound SI 4 Non Blondes 2 Live Crew What's Up DK Doo Wah Diddy SC 4 P.M. Me So Horny SC Lay Down Your Love SC We Want Some Pussy SC Sukiyaki DK 2 Pac 4 Runner California Love (Original Version) SC Ripples SC Changes SC That Was Him SC Thugz Mansion SC 42nd Street 20 Fingers 42nd Street Song SC Short Dick Man SC We're In The Money SC 3 Doors Down 5 Seconds Of Summer Away From The Sun SC Amnesia SI Be Like That SC She Looks So Perfect SI Behind Those Eyes SC 5 Stairsteps Duck & Run SC Ooh Child SC Here By Me CB 50 Cent Here Without You CB Disco Inferno SC Kryptonite SC If I Can't SC Let Me Go SC In Da Club HT Live For Today SC P.I.M.P. -

New Products 04 Final

Winter 2004
Made by guitar fanatics hiding in the rock 'n' roll underground of Salt Lake City
www.digitech.com, 801.566.8800, #18-0249
The information and specs contained in this brochure are preliminary and subject to change without notice.

New Products
Built-in 8-track recorder, USB I/O, MP3 playback, phantom-powered mic preamp...

With the new GNX4 we didn't just raise the bar, we KICKED it some…

Meet the GNX Series' top-of-the-line model. The GNX4 doesn't replace the award-winning GNX3. It just takes the GNX3's groundbreaking guitar workstation concept to the next level.

A built-in MIDI drum machine that rivals standalone drum machines. The GNX4's MIDI drum machine sounds so realistic you'll look for the drum stick chips. It has a vast library of high quality kits and patterns or you can use your own MIDI files. Plus, it can even play back your favorite MP3s.

…ture that magic riff, just press the record footswitch and save the moment onto the Compact Flash memory card for transfer later to computer.

Professional recording software from Lexicon®. Connect to your PC via the USB port and unleash the real power of the GNX4's recording capabilities using the included Pro Tracks Plus™ recording software for your PC or Bias® Deck™ 3.5 SE for your Mac. Stream four channels of 24-bit audio to the computer simultaneously while listening to two channels playback with zero latency. For the finishing touch, process your work through world-class Lexicon® reverbs courtesy of the Pantheon™

Coordination of Organic Curriculum Development in the Public Schools of Boulder, Colorado. Final Report

DOCUMENT RESUME
ED 055 368 08 EA 003 872
AUTHOR: Reed, William H.
TITLE: Coordination of Organic Curriculum Development in the Public Schools of Boulder, Colorado. Final Report.
INSTITUTION: Boulder Valley School District RE-2, Boulder, Colo.
SPONS AGENCY: National Center for Educational Research and Development (DHEW/OE), Washington, D.C.
BUREAU NO: BR-8-0225
PUB DATE: Aug 71
GRANT: OEG-0-8-080225-2671(085)
NOTE: 168p.
EDRS PRICE: MF-$0.65 HC-$6.58
DESCRIPTORS: Behavioral Objectives; Change Agents; Curriculum Design; Curriculum Development; Curriculum Planning; English Curriculum; Program Administration; Reading Instruction; Secondary Education; Self Actualization; Social Studies; Student Centered Curriculum; Vocational Education
IDENTIFIERS: Boulder; Colorado; Learning Packages

ABSTRACT: This document describes the efforts of program administrators to implement an organic curriculum in a Boulder, Colorado, high school. The chief program administrator coordinated efforts to develop instructional materials and a curricular program in English, reading, and social studies based on the needs and experiences of local students. Organic curriculum is a learner- rather than a teacher-centered course of study utilizing learning packages specifying behavioral objectives. Appendixes present learning packages for a 10th grade English program and for writing behavioral objectives. (RA)

U.S. DEPARTMENT OF HEALTH, EDUCATION & WELFARE, OFFICE OF EDUCATION. THIS DOCUMENT HAS BEEN REPRODUCED EXACTLY AS RECEIVED FROM THE PERSON OR ORGANIZATION ORIGINATING IT. POINTS OF VIEW OR OPINIONS STATED DO NOT NECESSARILY REPRESENT OFFICIAL OFFICE OF EDUCATION POSITION OR POLICY.

FINAL REPORT
Project No. 8-0225
Grant No. OEG-0-8-080225-2671(085)
COORDINATION OF ORGANIC CURRICULUM DEVELOPMENT IN THE PUBLIC SCHOOLS OF BOULDER, COLORADO
William H. …

The Ticker, December 4, 2000

VOLUME 78, ISSUE 8. For the Students and the Community. December 4, 2000

The Bathroom Solution
By Vanessa Wite-ko, News Editor

It has been two months since the article was published on the unsanitary conditions of the women's bathrooms. There have been some improvements, and an extra service was added to the women's bathrooms; however, certain conditions remain in deadlock.

The following improvements have occurred. The neglecting of OSHA health code, section 1910.141(d)(2)(iii), "Each lavatory shall be provided with hot and cold running water, or tepid running water," was fixed. The hot water has been turned on, and all sinks are functioning properly.

Additional trashcans were placed in the bathrooms of the 18th Street building to help control the level of trash. Also, the soap was replaced in the women's bathroom on the 14th floor of 360 PAS.

Stress Busters: Free Massages at Stress Management Workshop
By Adam Ostaszewski, News Editor

In preparation for another grueling finals week, Baruch will be holding a "Stress Busters" workshop on December [illegible] and 14th. The program, which is co-sponsored by the Counseling Center and the Health Center, will be held in room 1704 of the 360 PAS building from 12:00 to 3:30.

The Health and Counseling Centers have arranged for two independent massage therapists, Shakti Tatlan and Nelle Davis, to come in and give massages to anyone that chooses to participate. Light refreshments will also be served.

Aurora Ocampo of the Center for Health and Healing at Beth Israel Hospital will be speaking at the workshop. Ocampo is a clinical nurse specializing in therapeutic touch; she is also …

Robotics Irobot Corp. (IRBT)

Robotics: iRobot Corp. (IRBT)
This report is published for educational purposes only by students competing in the Boston Security Analysts Society (BSAS) Boston Investment Research Challenge.
12/13/2010
Ticker: IRBT. Recommendation: Sell. Price: $22.84. Price Target: $15.20-16.60

Market Profile
Shares O/S: 25 mm
Current price: $22.84
52 wk price range: $14.45-$23.00
Beta: 1.86
3 mo ADTV: 0.14 mm
Short interest: 2.1 mm
Market cap: $581mm
Debt: 0
P/10E: 25.2x
EV/10 EBITDA: 13.5x
Instl holdings: 57.8%
Insider holdings: 12.5%

Figure 1.1: iRobot historical stock price (iRobot vs. S&P 500, benchmarked Jan-09; Jan-09 through Oct-10)

Valuation Ranges (figure; row labels: 52 wk range, Street targets, 20-25x P/2011E, 8-12x Terminal)

iRobot is a SELL: The company's high valuation reflects overly optimistic expectations for home and military robot sales. While we are bullish on robotics, iRobot's growth story has run out of steam.
• Consensus is overestimating future home robot sales: Rapid yoy growth in 2010 home robot sales is the result of one-time penetration of international markets, which has concealed declining domestic sales. Entry into new markets drove growth in home robot sales in Q4 2009 and Q1 2010, but international sales have fallen 4% since Q1 this year, and we believe the street is overestimating international growth in 2011 (30% vs. our estimate of 18%). Domestic sales were down 29% in 2009 and are up only 2.5% ytd.

Packaging Specifications and Design

ECE 477 Digital Systems Senior Design Project, Rev 8/09
Homework 4: Packaging Specifications and Design
Team Code Name: 2D-MPR
Group No. 12
Team Member Completing This Homework: Tyler Neuenschwander
E-mail Address of Team Member: tcneuens@purdue.edu

NOTE: This is the first in a series of four "design component" homework assignments, each of which is to be completed by one team member. The body of the report should be 3-5 pages, not including this cover page, references, attachments or appendices.

Evaluation:
SCORE  DESCRIPTION
10  Excellent – among the best papers submitted for this assignment. Very few corrections needed for version submitted in Final Report.
9  Very good – all requirements aptly met. Minor additions/corrections needed for version submitted in Final Report.
8  Good – all requirements considered and addressed. Several noteworthy additions/corrections needed for version submitted in Final Report.
7  Average – all requirements basically met, but some revisions in content should be made for the version submitted in the Final Report.
6  Marginal – all requirements met at a nominal level. Significant revisions in content should be made for the version submitted in the Final Report.
*  Below the passing threshold – major revisions required to meet report requirements at a nominal level. Revise and resubmit.
* Resubmissions are due within one week of the date of return, and will be awarded a score of "6" provided all report requirements have been met at a nominal level.

Comments: Comments from the grader will be inserted here.

1.0 Introduction
The 2D-MPR is an autonomous robot designed to collect data required to map a room.

Irobot: ROBOTS for the HOME

CASE 5
iROBOT: ROBOTS FOR THE HOME

Robots have been around for a long time. Detroit has used robots for four decades to build cars, and manufacturers of all kinds of goods use some form of robotics to achieve efficiencies and productivity. But until iRobot brought its battery-powered vacuum cleaner to the market, no one had successfully used robots in the home as an appliance. The issue was not whether it was possible to use robots in the home, but could they be produced at a price customers would pay. Until the introduction of Roomba, iRobot's intelligent vacuum cleaner, robots for the home cost tens of thousands of dollars. At Roomba's price point of $199, it was now possible for the average consumer to afford to have a robot clean the house. That in itself is an interesting story, but even more interesting is the entrepreneurial journey of Colin Angle and his company, iRobot.

… appeared on the cover of Popular Science. It was clear by then that his future lay in inventions.

The Opportunity

In 1990, Brooks and Angle, who by then had become close working partners with their MIT colleague Helen Greiner, borrowed from their credit cards and used bank debt to found iRobot in a tiny apartment in Somerville, Massachusetts. The goal of the company was to build robots that would affect how people lived their lives. At that time, no one could conceive of a way to bring robots into domestic life in an affordable way, and iRobot was still years away from discovering the one application that would …

Guitar Magazine Master Spreadsheet

Master 10 Years Through the Iris G1 09/06 10 years Wasteland GW 4/06 311 Love Song GW 7/04 AC/DC Back in Black + lesson GW 12/05 AC/DC Dirty Deeds Done Dirt Cheap G1 1/04 AC/DC For Those About to Rock GW 5/07 AC/DC Girls Got Rhythm G1 3/07 AC/DC Have a Drink On Me GW 12/05 ac/dc hell's bells G1 9/2004 AC/DC hell's Bells G 3/91 AC/DC Hells Bells GW 1/09 AC/DC Let There Be Rock GW 11/06 AC/DC money talks GW 5/91 ac/DC shoot to thrill GW 4/10 AC/DC T.N.T GW 12/07 AC/DC Thunderstruck 1/91 GS AC/DC Thunderstruck GW 7/09 AC/DC Who Made Who GW 1/09 AC/DC Whole Lotta Rosie G1 10/06 AC/DC You Shook Me All Night Long GW 9/07 Accept Balls to the Wall GW 11/07 Aerosmith Back in the Saddle GW 12/98 Aerosmith Dream On GW 3/92 Aerosmith Dream On G1 1/07 Aerosmith Love in an Elevator G 2/91 Aerosmith Train Kept a Rollin’ GW 11/08 AFI Miss Murder GW 9/06 AFI Silver and Cold GW 6/04 Al DiMeola Egyptian Danza G 6/96 Alice Cooper No More Mr. Nice Guy G 9/96 Alice Cooper School's Out G 2/90 alice cooper school’s out for summer GW hol 08 alice in chains Dam That River GW 11/06 Alice in Chains dam that river G1 4/03 Alice In Chains Man in the Box GW 12/09 alice in chains them bones GW 10/04 All That Remains Two Weeks GW 1/09 All-American Rejects Dirty Little Secret GW 6/06 Allman Bros Midnight Rider GW 12/06 Allman Bros Statesboro Blues GW 6/04 Allman Bros Trouble no More (live) GW 4/07 Allman Bros. -

Irobot Create 2 Tethered Driving Using Serial Communication and Python Nick Saxton April 2, 2015

Executive Summary: This application note will detail the necessary steps to write a simple Python script to control an iRobot Create 2 through serial port communication. Following an introduction to the topic and the technology used, a detailed walkthrough will be provided showing the reader how to write a user controlled tethered driving script. This walkthrough will start with a blank file and show all of the required steps to reach the final script. Additionally, automated driving code will be discussed, including comments on how it is used in the Automated 3D Environment Modeling project sponsored by Dr. Daniel Morris of the Michigan State University 3D Vision Lab.

Keywords: iRobot Create, Tethered Driving, Serial Communication, Python Programming

1 Introduction: This section will introduce the technology and concepts used in this application note. First, an overview of the iRobot Create 2 Programmable Robot will be provided. Then, the Python programming language will be examined, including a brief history of the language and some of its key features. Finally, serial port communication will be discussed. Following this introduction, the objective of this application note will be looked at.

The iRobot Create 2 Programmable Robot is a fully programmable version of iRobot's popular Roomba vacuum cleaning robot. It weighs under 8 lbs, measures a little over 13 inches in diameter and approximately 3.5 inches tall, and has a serial port on its top side, accessible after removing the top cover. An image of the Create 2 can be seen below in Figure 1.