Elastic Highly Available Cloud Computing

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

OpenICRA: Toward a Generic Model for Automated Deployment of Applications in the Cloud

ÉCOLE DE TECHNOLOGIE SUPÉRIEURE, UNIVERSITÉ DU QUÉBEC. THESIS PRESENTED TO THE ÉCOLE DE TECHNOLOGIE SUPÉRIEURE IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE MASTER'S DEGREE IN ENGINEERING, CONCENTRATION: TELECOMMUNICATIONS NETWORKS, M. Ing. BY Ridha GADHGADHI. OPENICRA: TOWARD A GENERIC MODEL FOR AUTOMATED DEPLOYMENT OF APPLICATIONS IN THE CLOUD. MONTRÉAL, SEPTEMBER 6, 2013. © All rights reserved, Ridha Gadhgadhi, 2013. © All rights reserved. This licence means that it is forbidden to reproduce, record, or distribute this document in whole or in part. Readers who wish to print or keep a substantial part of this document on another medium must request the author's permission. PRESENTATION OF THE JURY. THIS THESIS WAS EVALUATED BY A JURY COMPOSED OF: Mr. Mohamed Cheriet, thesis supervisor, Department of Automated Manufacturing Engineering, École de technologie supérieure; Mr. Stéphane Coulombe, president of the jury, Department of Software and IT Engineering, École de technologie supérieure; Mr. Chamseddine Talhi, member of the jury, Department of Software and IT Engineering, École de technologie supérieure; Mr. Ali Kanso, external examiner, Ericsson Research, Ericsson Inc. IT WAS DEFENDED BEFORE THE JURY AND THE PUBLIC ON AUGUST 23, 2013 AT THE ÉCOLE DE TECHNOLOGIE SUPÉRIEURE. ACKNOWLEDGEMENTS. I would first like to warmly thank my research supervisor, Professor Mohamed Cheriet, for his expertise, his advice, and his unconditional support. I also thank Mathieu Lemay of Inocybe Technologies Inc. for his technical advice. I wish to thank all the members of the jury for doing me the honour of agreeing to evaluate my thesis: • Mr. Stéphane Coulombe, ÉTS, Université du Québec; • Mr. Chamseddine Talhi, ÉTS, Université du Québec; • Mr. Ali Kanso, Ericsson Montréal, Canada. -

High Availability in Clouds: Systematic Review and Research Challenges

Endo et al. Journal of Cloud Computing: Advances, Systems and Applications (2016) 5:16. DOI 10.1186/s13677-016-0066-8. REVIEW, Open Access. High availability in clouds: systematic review and research challenges. Patricia T. Endo, Moisés Rodrigues, Glauco E. Gonçalves, Judith Kelner, Djamel H. Sadok and Calin Curescu. Abstract: Cloud Computing has been used by different types of clients because it has many advantages, including the minimization of infrastructure resources costs, and its elasticity property, which allows services to be scaled up or down according to the current demand. From the Cloud provider point-of-view, there are many challenges to be overcome in order to deliver Cloud services that meet all requirements defined in Service Level Agreements (SLAs). High availability has been one of the biggest challenges for providers, and many services can be used to improve the availability of a service, such as checkpointing, load balancing, and redundancy. Beyond services, we can also find infrastructure and middleware solutions. This systematic review has as its main goal to present and discuss highly available (HA) solutions for Cloud Computing, and to introduce some research challenges in this area. We hope this work can be used as a starting point to understanding and coping with HA problems in Cloud. Keywords: Cloud computing, High availability, Systematic review, Research challenges. Introduction: Cloud Computing emerged as a novel technology at the end of the last decade, and it has been a trending topic ever […] in $336,000 less revenue per hour. Paypal, the online payment system, experiences a revenue loss of $225,000 per hour. -

Kubernetes As an Availability Manager for Microservice Based Applications Leila Abdollahi Vayghan

Kubernetes as an Availability Manager for Microservice Based Applications. Leila Abdollahi Vayghan. A Thesis in the Department of Computer Science and Software Engineering Presented in Partial Fulfillment of the Requirements for the Degree of Master of Computer Science at Concordia University, Montreal, Quebec, Canada. August 2019. © Leila Abdollahi Vayghan, 2019. CONCORDIA UNIVERSITY SCHOOL OF GRADUATE STUDIES. This is to certify that the thesis prepared By: Leila Abdollahi Vayghan, Entitled: Kubernetes as an Availability Manager for Microservice Based Applications, and submitted in partial fulfillment of the requirements for the degree of Master in Computer Science complies with the regulations of the University and meets the accepted standards with respect to originality and quality. Signed by the final examining committee: Chair: Dr. P. Rigby; Internal Examiner: Dr. D. Goswami; Internal Examiner: Dr. J. Rilling; Co-Supervisor: Dr. F. Khendek; Co-Supervisor: Dr. M. Toeroe. Approved by: Dr. L. Narayanan, Chair, Department of Computer Science and Software Engineering; Dr. Amir Asif, Dean, Faculty of Engineering and Computer Science. 2019. ABSTRACT Kubernetes as an Availability Manager for Microservice Based Applications Leila -

System Design for Telecommunication Gateways

SYSTEM DESIGN FOR TELECOMMUNICATION GATEWAYS. Alexander Bachmutsky, Nokia Siemens Networks, USA. A John Wiley and Sons, Ltd., Publication. This edition first published 2011. © 2011 John Wiley & Sons, Ltd. Registered office: John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex, PO19 8SQ, United Kingdom. For details of our global editorial offices, for customer services and for information about how to apply for permission to reuse the copyright material in this book please see our website at www.wiley.com. The right of the author to be identified as the author of this work has been asserted in accordance with the Copyright, Designs and Patents Act 1988. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, except as permitted by the UK Copyright, Designs and Patents Act 1988, without the prior permission of the publisher. -

A New Domain Specific Language for Generating and Validating Middleware Configurations for Highly Available Applications

ÉCOLE DE TECHNOLOGIE SUPÉRIEURE, UNIVERSITÉ DU QUÉBEC. THESIS PRESENTED TO ÉCOLE DE TECHNOLOGIE SUPÉRIEURE IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR A MASTER’S DEGREE WITH THESIS IN INFORMATION TECHNOLOGY ENGINEERING, M. A. Sc. BY Maxime TURENNE. A NEW DOMAIN SPECIFIC LANGUAGE FOR GENERATING AND VALIDATING MIDDLEWARE CONFIGURATIONS FOR HIGHLY AVAILABLE APPLICATIONS. MONTREAL, October 6th, 2015. Maxime Turenne 2015. This Creative Commons licence allows readers to download this work and share it with others as long as the author is credited. The content of this work may not be modified in any way or used commercially. BOARD OF EXAMINERS. THIS THESIS HAS BEEN EVALUATED BY THE FOLLOWING BOARD OF EXAMINERS: Mr. Abdelouahed Gherbi, Thesis Supervisor, Department of software and IT engineering at École de technologie supérieure; Mr. Ali Kanso, Thesis Co-supervisor, Researcher at Ericsson Canada; Mr. Mohamed Cheriet, Chair, Board of Examiners, Department of software and IT engineering at École de technologie supérieure; Mr. Sègla Kpodjedo, Member of the jury, Department of software and IT engineering at École de technologie supérieure. THIS THESIS WAS PRESENTED AND DEFENDED IN THE PRESENCE OF A BOARD OF EXAMINERS AND THE PUBLIC ON SEPTEMBER 4TH, 2015 AT ÉCOLE DE TECHNOLOGIE SUPÉRIEURE. ACKNOWLEDGMENTS. I would like to express my sincere gratitude to: • My supervisor, Dr. Abdelouahed Gherbi, not only for giving me the chance to benefit from his large and substantial knowledge, but also for his human approach, from which I have learned a lot. • Dr. Ali Kanso, from whom I learned almost everything about high availability, for his inspiring example as a scientist and colleague. -

Kubernetes As an Availability Manager for Microservice Applications

Kubernetes as an Availability Manager for Microservice Applications. Leila Abdollahi Vayghan, Mohamed Aymen Saied, and Ferhat Khendek (Engineering and Computer Science, Concordia University, Montreal, Canada); Maria Toeroe (Ericsson Inc., Montreal, Canada). Abstract: The move towards the microservice based architecture is well underway. In this architectural style, small and loosely coupled modules are developed, deployed, and scaled independently to compose cloud-native applications. However, for carrier-grade service providers to migrate to the microservices architectural style, availability remains a concern. Kubernetes is an open source platform that defines a set of building blocks which collectively provide mechanisms for deploying, maintaining, scaling, and healing containerized microservices. Thus, Kubernetes hides the complexity of microservice orchestration while managing their availability. In a preliminary work we evaluated Kubernetes, using its default configuration, from the availability perspective in a private cloud setting. […] services that can be deployed and scaled independently by fully automated deployment machinery, with minimum centralized management [2]. Microservices are built around separate business functionalities. Each microservice runs in its own process and communicates through lightweight mechanisms, often using APIs [3]. Microservices address the drawbacks of monolithic applications. They are small and can restart faster at the time of upgrade or failure recovery. Microservices are loosely coupled, and failure of one microservice will not affect other microservices of the system. The fine granularity of this architectural style makes the scaling more flexible and more -

Introduction Minimizing Planned Downtime of SAP Systems with the Virtualization Technologies & Systematic Review and Resear

Introduction: Minimizing Planned Downtime of SAP Systems with the Virtualization Technologies & systematic review and research challenges in cloud computing. Mrs. Priyanka Hariom Singh, In-charge Principal of K.S.E.T’S COLLEGE OF COMPUTER APPLICATIONS. ABSTRACT: Cloud Computing has been used by different types of clients because it has many advantages, including the minimization of infrastructure resources costs, and its elasticity property, which allows services to be scaled up or down according to the current demand. From the Cloud provider point-of-view, there are many challenges to be overcome in order to deliver Cloud services that meet all requirements defined in Service Level Agreements (SLAs). High availability has been one of the biggest challenges for providers, and many services can be used to improve the availability of a service, such as SAP infrastructures on which hundreds of users depend for their work, especially when systems such as materials management, supply chain management (SCM), and customer relationship management (CRM) run concurrently and interact with each other. We can also find infrastructure and middleware solutions. This systematic review has as its main goal to present and discuss highly available (HA) solutions and new technology implementations introduced by Oracle, i.e., Minimizing Planned Downtime of SAP Systems with the Virtualization Technologies for Cloud Computing, and to introduce some research challenges in this area. Keywords: Cloud computing, biggest challenges for providers, SAP infrastructures, Service Level Agreements (SLAs), High availability, Systematic review. I. INTRODUCTION: Cloud Computing emerged as a novel technology at the end of the last decade, and it has been a trending topic ever since. -

OpenSAF and VMware from the Perspective of High Availability

OpenSAF and VMware from the Perspective of High Availability. Ali Nikzad. A Thesis in The Department of Computer Science and Software Engineering Presented in Partial Fulfillment of the Requirements for the Degree of Master of Applied Science (Software Engineering) at Concordia University, Montreal, Quebec, Canada. November 2013. © Ali Nikzad, 2013. CONCORDIA UNIVERSITY School of Graduate Studies. This is to certify that the thesis prepared By: Ali Nikzad, Entitled: OpenSAF and VMware from the Perspective of High Availability, and submitted in partial fulfillment of the requirements for the degree of complies with the regulations of the University and meets the accepted standards with respect to originality and quality. Signed by the final examining committee: Dr. T. Popa, Chair; Dr. J. Rilling, Examiner; Dr. J. Paquet, Examiner; Dr. F. Khendek, Supervisor; Dr. M. Toeroe, Co-Supervisor. Approved by Dr. S. P. Mudur, Chair of Department; Dr. C. Trueman, Dean of Faculty. Date: November 2013. Abstract: OpenSAF and VMware from the Perspective of High Availability. Ali Nikzad. Cloud is becoming one of the most popular means of delivering computational services to users who demand services with higher availability. Virtualization is one of the key enablers of the cloud infrastructure. Availability of the virtual machines along with the availability of the hosted software components are the fundamental ingredients for achieving highly available services in the cloud. There are some availability solutions developed by virtualization vendors like VMware HA and VMware FT. At the same time the SAForum specifications and OpenSAF as a compliant implementation offer a standard based open solution for service high availability. -

Combining OpenSAF with VMware Architecture, Measurement

OpenSAF and VMware from the Perspective of High Availability. Ali Nikzad, Ferhat Khendek (Concordia University); Maria Toeroe (Ericsson). SVM’2013 – Zurich – October 2013. Friday, October 18, 13. Outline: • Introduction & Objectives • Some Background • Testbed, Failures and Metrics • The baseline architectures • Measurements • Analysis • Architectures combining OpenSAF and virtualization • Conclusion. Introduction & Objectives: • Service availability and continuity have become important requirements in several domains • High availability (demanded in some domains) is defined as at least 99.999% availability, which is a maximum of 5.26 minutes of downtime in a year • Including scheduled downtime, for upgrades for instance • The computing world is moving toward cloud services and cloud computing • Virtualization is an important aspect • Virtualization has many advantages. Introduction & Objectives: • Evaluate and position virtualization and the SAForum middleware with respect to each other • Using OpenSAF and VMware in the test-bed • Analyzing the measurements • Determine pros and cons of using virtualization in/for high availability (HA) • Propose a solution(s) that combines the benefits from each. Background – SAForum and OpenSAF: SAForum is a consortium of telecommunication and computing companies developing standards for enabling highly available services and applications. [Figure labels: AMF, Checkpointing, Notification, Log, etc.; logically grouped components; Applications; Application Interface; OpenSAF] -
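The five-nines figure quoted in the slides above (99.999% availability, at most about 5.26 minutes of downtime per year) follows from simple arithmetic. A minimal Python sketch of that calculation, assuming a 365-day year; the function name `max_downtime_minutes` and the three-nines and four-nines comparison rows are illustrative and do not come from the slides:

```python
# Assumption: a 365-day year, i.e. 525,600 minutes.
MINUTES_PER_YEAR = 365 * 24 * 60


def max_downtime_minutes(availability: float,
                         period_minutes: float = MINUTES_PER_YEAR) -> float:
    """Return the downtime budget (in minutes) allowed by an availability target.

    `availability` is a fraction, e.g. 0.99999 for "five nines".
    """
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be a fraction between 0 and 1")
    # Unavailable fraction of the period, converted to minutes.
    return (1.0 - availability) * period_minutes


if __name__ == "__main__":
    targets = [("three nines", 0.999),
               ("four nines", 0.9999),
               ("five nines", 0.99999)]
    for label, target in targets:
        budget = max_downtime_minutes(target)
        print(f"{label}: {budget:.2f} minutes of downtime per year")
```

For the five-nines target this gives roughly 5.26 minutes per year, matching the slide. As the slide notes, scheduled downtime (for upgrades, for instance) counts against the same budget.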

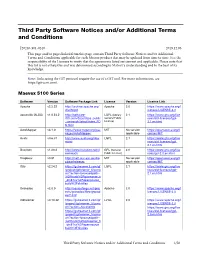

Third Party Software Notices And/Or Additional Terms and Conditions

Third Party Software Notices and/or Additional Terms and Conditions. F20240-501-0240, 2019.12.06. This page and/or pages linked from this page contain Third Party Software Notices and/or Additional Terms and Conditions applicable for each Matrox product that may be updated from time to time. It is the responsibility of the Licensee to verify that the agreements listed are current and applicable. Please note that this list is not exhaustive and was determined according to Matrox’s understanding and to the best of its knowledge. Note: Links using the GIT protocol require the use of a GIT tool. For more information, see https://git-scm.com/.

Maevex 5100 Series
Software | Version | Software Package Link | License | License Version | License Link
Apache | v2.2.22 | http://archive.apache.org/dist/httpd | Apache | 2.0 | https://www.apache.org/licenses/LICENSE-2.0
asoundlib (ALSA) | v1.0.24.2 | http://software-dl.ti.com/dsps/dsps_public_sw/ezsdk/latest/index_FDS.html | LGPL (Library General Public License) | 2.1 | https://www.gnu.org/licenses/old-licenses/lgpl-2.1.en.html
AutoMapper | v3.1.0 | https://www.nuget.org/packages/AutoMapper/ | MIT | No version applicable | https://opensource.org/licenses/MIT
Avahi | v0.6.31 | http://www.avahi.org/download | LGPL | 2.1 | https://www.gnu.org/licenses/old-licenses/lgpl-2.1.en.html
Busybox | v1.20.2 | http://www.busybox.net/downloads | GPL (General Public License) | 2.0 | https://www.gnu.org/licenses/gpl-2.0.en.html
Dropbear | v0.51 | http://matt.ucc.asn.au/dropbear/releases | MIT | No version applicable | https://opensource.org/licenses/MIT
Glib | v2.24.2 | https://gstreamer.ti.com/gf -

IP Multimedia for Municipalities

IP Multimedia for Municipalities: The supporting architecture. DAN PETERSTRÖM. KTH Information and Communication Technology. Master of Science Thesis, Stockholm, Sweden 2009. TRITA-ICT-EX-2009:103. IP Multimedia for Municipalities: The supporting architecture. Dan Peterström, Aug 18, 2009. Examiner and academic advisor: Prof. G. Q. Maguire Jr., KTH. Industrial advisor: Per Ljungberg, Ericsson. Abstract: Fiber deployment is becoming popular and is seen as a way to increase a community’s attractiveness to new inhabitants and companies. A new Open Access network is emerging, leading to a more horizontal network architecture. Combining this architecture with IMS enables developers to easily develop new and attractive services. To facilitate the development of new IMS services there needs to be an easy to use development environment and a reliable hardware/software platform upon which to deploy them. This thesis project will explore the design, development, and evaluation of new IMS applications targeted at municipal networks as well as the service platforms they are deployed on. The thesis will also examine what role IMS plays in Municipalities and why they may need a tailored IMS solution. During the thesis a reference network for Municipalities was put together and tested. Different service platforms were tested and evaluated. Summary (Sammanfattning, translated from Swedish): Fiber deployment has become very popular and is seen as a way to increase a city's attractiveness to new inhabitants and companies. A new open network is emerging, which leads to a more horizontal network architecture. Combining this architecture with IMS lets developers more easily develop new and attractive services. To facilitate the development of new IMS services there must be an easy-to-use development environment and a reliable hardware/software platform on which to install them. -

OpenSAF Release 4 Overview “The Architecture Release”

OpenSAF Release 4 Overview “The Architecture Release”. Mario Angelic, Expert Middleware Architectures, Ericsson; OpenSAF TLC. Presentation Outline: • Background Information – OpenSAF Release 3 Architecture Overview • Release 4, “Architecture Release” – Functionality – Architecture Improvement • Streamlining • Modularity • Architecture alignment. First, a chart: [Chart: time axis from jan-08 to apr-10, spanning Rel 2, Rel 3, and Rel 4; data series not recoverable]. OpenSAF 3.0 Key Features: [Architecture diagram, labels include: HA Applications; SNMP Subagent + Command Line Interface; Management Access; System Resource Monitor; XML; Distributed Tracing; SA Forum Information Model Management Service; SA Forum Availability Management Framework; SA Forum Cluster Membership Service; SA Forum Checkpoint Service; SA Forum Message Service; SA Forum Log Service; SA Forum Notification Service; SA Forum Lock Service; SA Forum Event Service; Message Based Checkpoint Service; Persistent Store Service; Virtual IP; LEAP (Utility Library); Message Distribution Service (MDS over TIPC); HW Platform Porting Layer; Linux (RHEL, SUSE, WRS PNE LE, Fedora, Mvista, Ubuntu)/SOLARIS; Hardware Platform]. OpenSAF 3.0 key services: AMF Application • Availability