Python Interpreter Performance Deconstructed

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Structure of Programming Languages – Lecture 2A

Structure of Programming Languages – Lecture 2a. CSCI 6636 – 4536, February 4, 2020.

Outline: 1 Overview of Languages; 2 Language Properties; 3 Good or Bad: Fundamental Considerations; 4 Homework.

Outline: The Language Landscape; Languages Come in Many Flavors; Possible Design Goals; Design Examples; Representation Issues.

Overview of Languages: What is a Program? We can view a program two ways. Developer's view: a program is the implementation of a design (a model) for a piece of software. Coder's view: a program is a description of a set of actions that we want a computer to carry out on some data. Similarly, we can view a language more than one way. High level: it permits us to express a model. Low level: it permits us to define a correct set of instructions for the computer.

Overview of Languages: Aspects of a Language. To use a language effectively we must learn these four aspects: Syntax is the set of legal forms that a sentence or program unit may take. During translation, if the syntax of a program unit is legal (correct), then we can talk about the semantics of the unit. Semantics is the science of meaning. Operational semantics is the set of actions when a program is executed. Style is a set of human factors.

Multiparadigm Programming with Python 3 Chapter 5

Multiparadigm Programming with Python 3, Chapter 5. H. Conrad Cunningham, 7 September 2018.

Contents
5 Python 3 Types
  5.1 Chapter Introduction
  5.2 Type System Concepts
    5.2.1 Types and subtypes
    5.2.2 Constants, variables, and expressions
    5.2.3 Static and dynamic
    5.2.4 Nominal and structural
    5.2.5 Polymorphic operations
    5.2.6 Polymorphic variables
  5.3 Python 3 Type System
    5.3.1 Objects
    5.3.2 Types
  5.4 Built-in Types
    5.4.1 Singleton types
      5.4.1.1 None
      5.4.1.2 NotImplemented
    5.4.2 Number types
      5.4.2.1 Integers (int)
      5.4.2.2 Real numbers (float)
      5.4.2.3 Complex numbers (complex)
      5.4.2.4 Booleans (bool)
      5.4.2.5 Truthy and falsy values
    5.4.3 Sequence types
      5.4.3.1 Immutable sequences
      5.4.3.2 Mutable sequences
    5.4.4 Mapping types
    5.4.5 Set Types
      5.4.5.1 set
      5.4.5.2 frozenset
    5.4.6 Other object types
  5.5 What Next?
  5.6 Exercises
  5.7 Acknowledgements
  5.8 References
  5.9 Terms and Concepts

Copyright (C) 2018, H. Conrad Cunningham, Professor of Computer and Information Science, University of Mississippi, 211 Weir Hall, P.O. Box 1848, University, MS 38677, (662) 915-5358. Browser Advisory: The HTML version of this textbook requires a browser that supports the display of MathML.

Object-Oriented Type Inference

Object-Oriented Type Inference. Jens Palsberg and Michael I. Schwartzbach, Computer Science Department, Aarhus University, Ny Munkegade, DK-8000 Århus C, Denmark.

Abstract. We present a new approach to inferring types in untyped object-oriented programs with inheritance, assignments, and late binding. It guarantees that all messages are understood, annotates the program with type information, allows polymorphic methods, and can be used as the basis of an optimizing compiler. Types are finite sets of classes and subtyping is set inclusion. Using a trace graph, our algorithm constructs a set of conditional type constraints and computes the least solution by least fixed-point derivation.

1 Introduction. Untyped object-oriented languages with assignments and late binding allow rapid prototyping because classes inherit implementation and not specification. […] The algorithm guarantees that all messages are understood, annotates the program with type information, allows polymorphic methods, and can be used as the basis of an optimizing compiler. Types are finite sets of classes and subtyping is set inclusion. Given a concrete program, the algorithm constructs a finite graph of type constraints. The program is typable if these constraints are solvable. The algorithm then computes the least solution in worst-case exponential time. The graph contains all type information that can be derived from the program without keeping track of nil values or flow analyzing the contents of instance variables. This makes the algorithm capable of checking most common programs; in particular, it allows for polymorphic methods. The algorithm is similar to previous work on type inference [18, 14, 27, 1, 2, 19, 12, 10, 9] in using type constraints, but it differs in handling late binding by conditional constraints and in re-

Type Inference for Late Binding. The SmallEiffel Compiler. Suzanne Collin, Dominique Colnet, Olivier Zendra

Type Inference for Late Binding. The SmallEiffel Compiler. Suzanne Collin, Dominique Colnet, Olivier Zendra.

To cite this version: Suzanne Collin, Dominique Colnet, Olivier Zendra. Type Inference for Late Binding. The SmallEiffel Compiler. Joint Modular Languages Conference (JMLC), 1997, Linz, Austria. pp. 67–81. inria-00563353. HAL Id: inria-00563353, https://hal.inria.fr/inria-00563353. Submitted on 4 Feb 2011.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Type Inference for Late Binding. The SmallEiffel Compiler. Suzanne COLLIN, Dominique COLNET and Olivier ZENDRA. Campus Scientifique, Bâtiment LORIA, Boîte Postale 239, 54506 Vandœuvre-lès-Nancy Cedex, France. Tel. +33 03 83.59.20.93. Centre de Recherche en Informatique de Nancy.

Abstract. The SmallEiffel compiler uses a simple type inference mechanism to translate Eiffel source code to C code. The most important aspect in our technique is that many occurrences of late binding are replaced by static binding. Moreover, when dynamic dispatch cannot be removed, inlining is still possible. The advantage of this approach is that it speeds up execution time and decreases considerably the amount of generated code.

Introduction to Object-Oriented Programming (OOP)

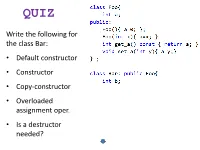

QUIZ: Write the following for the class Bar: • Default constructor • Constructor • Copy-constructor • Overloaded assignment operator • Is a destructor needed? Or Foo(x), depending on how we want the initialization to be made. Criticize!

Ch. 15: Polymorphism & Virtual Functions. Virtual functions enhance the concept of type. There is no analog to the virtual function in a traditional procedural language. As a procedural programmer, you have no referent with which to think about virtual functions, as you do with almost every other feature in the language. Features in a procedural language can be understood on an algorithmic level, but, in an object-oriented language, virtual functions can be understood only from a design viewpoint.

Remember upcasting. Draw the UML diagram! We would like the more specific version of play() to be called, since flute is a Wind object, not a generic Instrument.

Ch. 1: Introduction to Objects. Remember from Ch. 1: early binding vs. late binding. "The C++ compiler inserts a special bit of code in lieu of the absolute call. This code calculates the address of the function body, using information stored in the object at runtime!"

Ch. 15: Polymorphism & Virtual Functions. Virtual functions: to cause late binding to occur for a particular function, C++ requires that you use the virtual keyword when declaring the function in the base class. All derived-class functions that match the signature of the base-class declaration will be called using the virtual mechanism. Example: it is legal to also use the virtual keyword in the derived-class declarations, but it is redundant and can be confusing.

Run Time Polymorphism Against Virtual Function in Object Oriented Programming

Devendra Gahlot et al. / (IJCSIT) International Journal of Computer Science and Information Technologies, Vol. 2 (1), 2011, 569-571.

Run Time Polymorphism Against Virtual Function in Object Oriented Programming. Devendra Gahlot (Department of Computer Application, Govt. Engineering College Bikaner, Bikaner, Rajasthan, India), S. S. Sarangdevot (Department of IT & CS, Janardan Rai Nagar Rajasthan Vidyapeeth University, Pratap Nagar, Udaipur, Rajasthan, India), Sanjay Tejasvee (Department of Computer Application, Govt. Engineering College Bikaner, Bikaner, Rajasthan, India).

Abstract. Polymorphism is the main feature of object-oriented programming. Run-time polymorphism is the concept of late binding: the thread of the program is executed dynamically, according to the determination of the compiler. In this paper, we discuss the role of run-time polymorphism and how it can be used effectively to increase the efficiency of the application and to overcome the complexity of overridden functions and pointer objects during inheritance in object-oriented programming.

1. INTRODUCTION TO POLYMORPHISM IN OBJECT-ORIENTED PROGRAMMING. […] same name and the same parameter sets in all the super classes, subclasses and interfaces. In principle, the object types may be unrelated, but since they share a common interface, they are often implemented as subclasses of the same super class. Though it is not required, it is understood that the different methods will also produce similar results (for example, returning values of the same type). Polymorphism is not the same as method overloading or method overriding.[1] Polymorphism is only concerned with the application of specific implementations to an interface or a more generic base class. Method overloading refers to methods that have the same name but different signatures inside the same class.

TAPS Working Group T. Pauly, Ed. Internet-Draft Apple Inc. Intended Status: Standards Track B

TAPS Working Group. Internet-Draft. Intended status: Standards Track. Expires: 13 January 2022. Dated 12 July 2021.

Authors: T. Pauly, Ed. (Apple Inc.); B. Trammell, Ed. (Google Switzerland GmbH); A. Brunstrom (Karlstad University); G. Fairhurst (University of Aberdeen); C. Perkins (University of Glasgow); P. Tiesel (SAP SE); C.A. Wood (Cloudflare).

An Architecture for Transport Services, draft-ietf-taps-arch-11.

Abstract. This document describes an architecture for exposing transport protocol features to applications for network communication, the Transport Services architecture. The Transport Services Application Programming Interface (API) is based on an asynchronous, event-driven interaction pattern. It uses messages for representing data transfer to applications, and it describes how implementations can use multiple IP addresses, multiple protocols, and multiple paths, and provide multiple application streams. This document further defines common terminology and concepts to be used in definitions of Transport Services APIs and implementations.

Status of This Memo. This Internet-Draft is submitted in full conformance with the provisions of BCP 78 and BCP 79. Internet-Drafts are working documents of the Internet Engineering Task Force (IETF). Note that other groups may also distribute working documents as Internet-Drafts. The list of current Internet-Drafts is at https://datatracker.ietf.org/drafts/current/. Internet-Drafts are draft documents valid for a maximum of six months and may be updated, replaced, or obsoleted by other documents at any time. It is inappropriate to use Internet-Drafts as reference material or to cite them other than as "work in progress." This Internet-Draft will expire on 13 January 2022.

Imperative Programming

Naming, scoping, binding, etc. Instructor: Dr. B. Cheng, Fall 2004. Organization of Programming Languages-Cheng (Fall 2004)

Imperative Programming
• Variables are the central feature of imperative languages
• Variables are abstractions for memory cells in a von Neumann architecture computer
• Attributes of variables: Name, Type, Address, Value, …
• Other important concepts: binding and binding times; strong typing; type compatibility rules; scoping rules

Preliminaries
• Name: representation for something else (e.g., identifiers, some symbols)
• Binding: association between two things: a name and the thing that it names
• Scope of binding: the part of the (textual) program in which the binding is active
• Binding time: the point at which a binding is created; more generally, the point at which any implementation decision is made

Names (Identifiers)
• Names are not only associated with variables; they are also associated with labels, subprograms, formal parameters, and other program constructs
• Design issues for names: Maximum length? Are connector characters allowed ("_")? Are names case sensitive? Are the special words reserved words or keywords?

Names
• Length: if too short, names will not be connotative
• Language examples: FORTRAN I: maximum 6; COBOL: maximum 30; FORTRAN 90 and ANSI C (1989): maximum 31; ANSI C (1989): no length limitation, but only first 31 chars significant

Open Implementation and Flexibility in CSCW Toolkits

Open Implementation and Flexibility in CSCW Toolkits. James Paul Dourish. A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy of the University of London. Department of Computer Science, University College London, June 1996. ProQuest Number: 10017199. Published by ProQuest LLC (2016). Copyright of the Dissertation is held by the Author.

Abstract. The design of Computer-Supported Cooperative Work (CSCW) systems involves a variety of disciplinary approaches, drawing as much on sociological and psychological perspectives on group and individual activity as on technical approaches to designing distributed systems. Traditionally, these have been applied independently: the technical approaches focussing on design criteria and implementation strategies, the social approaches focussing on the analysis of working activity with or without technological support. However, the disciplines are more strongly related than this suggests. Technical strategies, such as the mechanisms for data replication, distribution and coordination, have a significant impact on the forms of interaction in which users can engage, and therefore on how their work proceeds.

Efficient Late Binding of Dynamic Function Compositions

Efficient Late Binding of Dynamic Function Compositions. Lars Schütze and Jeronimo Castrillon, Chair for Compiler Construction, Technische Universität Dresden, Dresden, Germany.

Abstract. Adaptive software becomes more and more important as computing is increasingly context-dependent. Runtime adaptability can be achieved by dynamically selecting and applying context-specific code. Role-oriented programming has been proposed as a paradigm to enable runtime adaptive software by design. Roles change the objects' behavior at runtime and thus allow adapting the software to a given context. However, this increased variability and expressiveness has a direct impact on performance and memory consumption. We found a high overhead in the steady-state performance of executing compositions of adaptations. This paper presents a new approach to use run-time information to construct a dispatch plan that can be executed efficiently by the JVM.

1 Introduction. Ubiquitous computing leads to new challenges where context-dependent software is more and more important. Developing such software requires approaches that focus on objects, their context-dependent behavior and relations. Object-oriented programming (OOP) is the de facto standard approach to those problems today. This is because of the comprehensibility of object-oriented models and code which enables an intuitive representation of aspects of the real world. That is how classes, objects, functions and inheritance originated. For example, an aspect of the real world is that an object may appear in different roles at different times (i.e., contexts). To reflect the different roles of entities, design patterns have

Programming Languages Session 2 – Main Theme Imperative Languages

Programming Languages, Session 2 – Main Theme: Imperative Languages: Names, Scoping, and Bindings. Dr. Jean-Claude Franchitti, New York University, Computer Science Department, Courant Institute of Mathematical Sciences. Adapted from course textbook resources: Programming Language Pragmatics (3rd Edition), Michael L. Scott, Copyright © 2009 Elsevier.

Agenda: 1 Session Overview; 2 Imperative Languages: Names, Scoping, and Bindings; 3 Conclusion.

What is the course about? Course description and syllabus: » http://www.nyu.edu/classes/jcf/g22.2110-001 » http://www.cs.nyu.edu/courses/fall10/G22.2110-001/index.html Textbook: » Programming Language Pragmatics (3rd Edition), Michael L. Scott, Morgan Kaufmann, ISBN-10: 0-12374-514-4, ISBN-13: 978-0-12374-514-4, (04/06/09).

Review: BNF, Concrete and Abstract Syntax Trees
expr ::= expr "+" term | expr "-" term | term
term ::= term "*" factor | term "/" factor | factor
factor ::= number | identifier | "(" expr ")"
[Figure: concrete and abstract syntax trees for ( A + B * C ) * D]

Icons / Metaphors: Information; Common Realization; Knowledge/Competency Pattern; Governance; Alignment; Solution Approach.

Imperative Languages: Names, Scoping, and Bindings - Sub-Topics: Use of Types; Name, Scope,

CS307: Principles of Programming Languages

CS307: Principles of Programming Languages. LECTURE 1: INTRODUCTION TO PROGRAMMING LANGUAGES. CS307 : Principles of Programming Languages - Tony Mione [Copyright 2017]

LECTURE OUTLINE
• INTRODUCTION
• EVOLUTION OF LANGUAGES
• WHY STUDY PROGRAMMING LANGUAGES?
• PROGRAMMING LANGUAGE CLASSIFICATION
• LANGUAGE TRANSLATION
• COMPILATION VS INTERPRETATION
• OVERVIEW OF COMPILATION

INTRODUCTION
• WHAT MAKES A LANGUAGE SUCCESSFUL?
• EASY TO LEARN (PYTHON, BASIC, PASCAL, LOGO)
• EASE OF EXPRESSION/POWERFUL (C, JAVA, COMMON LISP, APL, ALGOL-68, PERL)
• EASY TO IMPLEMENT (JAVASCRIPT, BASIC, FORTH)
• EFFICIENT [COMPILES TO EFFICIENT CODE] (FORTRAN, C)
• BACKING OF POWERFUL SPONSOR (JAVA, VISUAL BASIC, COBOL, PL/1, ADA)
• WIDESPREAD DISSEMINATION AT MINIMAL COST (JAVA, PASCAL, TURING, ERLANG)

INTRODUCTION
• WHY DO WE HAVE PROGRAMMING LANGUAGES? WHAT IS A LANGUAGE FOR?
• WAY OF THINKING – WAY TO EXPRESS ALGORITHMS
• LANGUAGES FROM THE USER'S POINT OF VIEW
• ABSTRACTION OF VIRTUAL MACHINE – WAY TO SPECIFY WHAT YOU WANT HARDWARE TO DO WITHOUT GETTING INTO THE BITS
• LANGUAGES FROM THE IMPLEMENTOR'S POINT OF VIEW

EVOLUTION OF LANGUAGES
• EARLY COMPUTERS PROGRAMMED DIRECTLY WITH MACHINE CODE
• PROGRAMMER HAND WROTE BINARY CODES
• PROGRAM ENTRY DONE WITH TOGGLE SWITCHES
• SLOW. VERY ERROR-PRONE
• WATCH HOW TO PROGRAM A PDP-8!
• HTTPS://WWW.YOUTUBE.COM/WATCH?V=DPIOENTAHUY