Clustering Outline

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Ncbr (Fastlane)

APPLICANT CONTACT Port of Moses Lake Jeffrey Bishop, Executive Director 7810 Andrews N.E. Suite 200 Moses Lake, WA 98837 Port of Moses Lake www.portofmoseslake.com [email protected] Project Name Northern Columbia Basin Railroad Project Was a FASTLANE application for this project submitted previously? Yes If yes, what was the name of the project in the previous application? Northern Columbia Basin Railroad Project Previously Incurred Project Costs $2.1 million Future Eligible Project Costs $30.3 million Total Project Costs $32.4 million Total Federal Funding (including FASTLANE) $9.9 million Are matching funds restricted to a specific project component? If so, No which one? Is the project of a portion of the project currently located on Yes National Highway Freight Network? Is the project of a portion on the project located on the NHS? This project crosses under the NHS as well is it runs adjacent to the NHS Does the project add capacity to the Interstate system? Yes, by diverting VMT to rail Is the project in a national scenic area? No Does the project components include a railway-highway grade No crossing or grade separation project? The project includes crossing If so, please include the grade crossing ID improvements. Do the project components include an intermodal or freight rail Yes project, or freight project within the boundaries of a public or private freight rail, water (including ports, or intermodal facility? If answered yes to either of the two component questions above, $9.9 million how much of requested FASTLANE -

The Popular Culture Studies Journal

THE POPULAR CULTURE STUDIES JOURNAL VOLUME 6 NUMBER 1 2018 Editor NORMA JONES Liquid Flicks Media, Inc./IXMachine Managing Editor JULIA LARGENT McPherson College Assistant Editor GARRET L. CASTLEBERRY Mid-America Christian University Copy Editor Kevin Calcamp Queens University of Charlotte Reviews Editor MALYNNDA JOHNSON Indiana State University Assistant Reviews Editor JESSICA BENHAM University of Pittsburgh Please visit the PCSJ at: http://mpcaaca.org/the-popular-culture- studies-journal/ The Popular Culture Studies Journal is the official journal of the Midwest Popular and American Culture Association. Copyright © 2018 Midwest Popular and American Culture Association. All rights reserved. MPCA/ACA, 421 W. Huron St Unit 1304, Chicago, IL 60654 Cover credit: Cover Artwork: “Wrestling” by Brent Jones © 2018 Courtesy of https://openclipart.org EDITORIAL ADVISORY BOARD ANTHONY ADAH FALON DEIMLER Minnesota State University, Moorhead University of Wisconsin-Madison JESSICA AUSTIN HANNAH DODD Anglia Ruskin University The Ohio State University AARON BARLOW ASHLEY M. DONNELLY New York City College of Technology (CUNY) Ball State University Faculty Editor, Academe, the magazine of the AAUP JOSEF BENSON LEIGH H. EDWARDS University of Wisconsin Parkside Florida State University PAUL BOOTH VICTOR EVANS DePaul University Seattle University GARY BURNS JUSTIN GARCIA Northern Illinois University Millersville University KELLI S. BURNS ALEXANDRA GARNER University of South Florida Bowling Green State University ANNE M. CANAVAN MATTHEW HALE Salt Lake Community College Indiana University, Bloomington ERIN MAE CLARK NICOLE HAMMOND Saint Mary’s University of Minnesota University of California, Santa Cruz BRIAN COGAN ART HERBIG Molloy College Indiana University - Purdue University, Fort Wayne JARED JOHNSON ANDREW F. HERRMANN Thiel College East Tennessee State University JESSE KAVADLO MATTHEW NICOSIA Maryville University of St. -

Enterprise Best Practices for Ios Devices On

White Paper Enterprise Best Practices for iOS devices and Mac computers on Cisco Wireless LAN Updated: January 2018 © 2018 Cisco and/or its affiliates. All rights reserved. This document is Cisco Public. Page 1 of 51 Contents SCOPE .............................................................................................................................................. 4 BACKGROUND .................................................................................................................................. 4 WIRELESS LAN CONSIDERATIONS .................................................................................................... 5 RF Design Guidelines for iOS devices and Mac computers on Cisco WLAN ........................................................ 5 RF Design Recommendations for iOS devices and Mac computers on Cisco WLAN ........................................... 6 Wi-Fi Channel Coverage .................................................................................................................................. 7 ClientLink Beamforming ................................................................................................................................ 10 Wi-Fi Channel Bandwidth ............................................................................................................................. 10 Data Rates .................................................................................................................................................... 12 802.1X/EAP Authentication .......................................................................................................................... -

2020 WWE Finest

BASE BASE CARDS 1 Angel Garza Raw® 2 Akam Raw® 3 Aleister Black Raw® 4 Andrade Raw® 5 Angelo Dawkins Raw® 6 Asuka Raw® 7 Austin Theory Raw® 8 Becky Lynch Raw® 9 Bianca Belair Raw® 10 Bobby Lashley Raw® 11 Murphy Raw® 12 Charlotte Flair Raw® 13 Drew McIntyre Raw® 14 Edge Raw® 15 Erik Raw® 16 Humberto Carrillo Raw® 17 Ivar Raw® 18 Kairi Sane Raw® 19 Kevin Owens Raw® 20 Lana Raw® 21 Liv Morgan Raw® 22 Montez Ford Raw® 23 Nia Jax Raw® 24 R-Truth Raw® 25 Randy Orton Raw® 26 Rezar Raw® 27 Ricochet Raw® 28 Riddick Moss Raw® 29 Ruby Riott Raw® 30 Samoa Joe Raw® 31 Seth Rollins Raw® 32 Shayna Baszler Raw® 33 Zelina Vega Raw® 34 AJ Styles SmackDown® 35 Alexa Bliss SmackDown® 36 Bayley SmackDown® 37 Big E SmackDown® 38 Braun Strowman SmackDown® 39 "The Fiend" Bray Wyatt SmackDown® 40 Carmella SmackDown® 41 Cesaro SmackDown® 42 Daniel Bryan SmackDown® 43 Dolph Ziggler SmackDown® 44 Elias SmackDown® 45 Jeff Hardy SmackDown® 46 Jey Uso SmackDown® 47 Jimmy Uso SmackDown® 48 John Morrison SmackDown® 49 King Corbin SmackDown® 50 Kofi Kingston SmackDown® 51 Lacey Evans SmackDown® 52 Mandy Rose SmackDown® 53 Matt Riddle SmackDown® 54 Mojo Rawley SmackDown® 55 Mustafa Ali Raw® 56 Naomi SmackDown® 57 Nikki Cross SmackDown® 58 Otis SmackDown® 59 Robert Roode Raw® 60 Roman Reigns SmackDown® 61 Sami Zayn SmackDown® 62 Sasha Banks SmackDown® 63 Sheamus SmackDown® 64 Shinsuke Nakamura SmackDown® 65 Shorty G SmackDown® 66 Sonya Deville SmackDown® 67 Tamina SmackDown® 68 The Miz SmackDown® 69 Tucker SmackDown® 70 Xavier Woods SmackDown® 71 Adam Cole NXT® 72 Bobby -

Better Buildings Residential Network Peer Exchange Call Series

2_Title Slide Better Buildings Residential Network Peer Exchange Call Series: Addressing Barriers to Upgrade Projects at Affordable Multifamily Properties (201) March 10, 2016 Call Slides and Discussion Summary Call Attendee Locations 2 Call Participants – Network Members . Alabama Energy Doctors . Greater Cincinnati Energy Alliance . Alaska Housing Finance Corporation . green|spaces . American Council for an Energy-Efficient . GRID Alternatives Economy (ACEEE) . International Center for Appropriate and . Austin Energy Sustainable Technology (ICAST) . BlueGreen Alliance Foundation . Johnson Environmental . Bridging The Gap . Metropolitan Washington Council of . CalCERTS, Inc. Governments (MWCOG) . Center for Sustainable Energy . Michigan Saves . City of Aspen Utilities and Environmental . National Grid (Rhode Island) Initiatives . National Housing Trust/Enterprise . City of Kansas City, Missouri . PUSH Buffalo . City of Plano . Research Into Action, Inc. CLEAResult . Solar and Energy Loan Fund (SELF) . District of Columbia Sustainable Energy . Southface Utility . Stewards of Affordable Housing for the . Duke Carbon Offsets Initiative Future . EcoWorks . Vermont Energy Investment Corporation . Elevate Energy (VEIC) . Energize New York . Wisconsin Energy Conservation . Energy Efficiency Specialists Corporation (WECC) . Fujitsu General America Inc. Yolo County Housing 3 Call Participants – Non-Members (1 of 3) . Affordable Community Energy . City of Chicago . AppleBlossom Energy Inc. City of Minneapolis . Architectural Nexus . City of Orlando -

2016 FASTLANE Grant Program

IH-35 Laredo Bundle FASTLANE Grant Application i. COVER PAGE Project Name: IH-35 – Laredo Bundle Previously Incurred Project Cost $0 Future Eligible Project Cost $58,600,000 Total Project Cost $58,600,000 NSFHP Request $35,160,000 Total Federal Funding (including NSFHP) $46,880,000 Are matching funds restricted to a specific project component? No If so, which one? Is the project or a portion of the project currently located on National Highway Yes Freight Network? Is the project or a portion of the project located on the National Highway System Yes Does the project add capacity to the Interstate system? No Is the project in a national scenic area? Do the project components include a railway-highway grade crossing or grade Yes separation project? Do the project components include an intermodal or freight rail project, No or freight project within the boundaries of a public or private freight rail, water (including ports), or intermodal facility? If answered yes to either of the two component questions above, how much of $0.0 requested NSFHP funds will be spent on each of these projects components? State(s) in which project is located. Texas Small or large project Small Also submitting an application to TIGER for this project? No Urbanized Area in which project is located, if applicable. Laredo Population of Urbanized Area. 636,520 Is the project currently programmed in the: (please specify in which plans the project is currently programmed) TIP No STIP No MPO Long Range Transportation Plan Yes State Long Range Transportation Plan Yes State Freight Plan No i ii. -

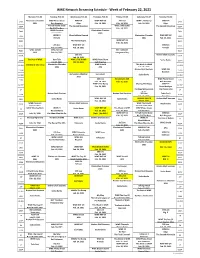

WWE Network Streaming Schedule - Week of February 22, 2021

WWE Network Streaming Schedule - Week of February 22, 2021 Monday, Feb 22 Tuesday, Feb 23 Wednesday, Feb 24 Thursday, Feb 25 Friday, Feb 26 Saturday, Feb 27 Sunday, Feb 28 Elimination Chamber WWE Photo Shoot WWE 24 WWE NXT UK 205 Live WWE's The Bump WWE 24 6AM 6AM 2021 Ron Simmons Edge Feb. 18, 2021 Feb. 19, 2021 Feb. 24, 2021 Edge R-Truth Game Show WWE's The Bump 6:30A The Second Mountain The Second Mountain 6:30A Big E & The Boss Feb. 24, 2021 WWE Chronicle Elimination Chamber 7AM 7AM Bianca Belair 2021 WWE 24 WrestleMania Rewind Elimination Chamber WWE NXT UK 7:30A 7:30A R-Truth 2021 Feb. 25, 2021 WWE NXT UK 8AM The Mania Begins 8AM Feb. 25, 2021 205 Live WWE NXT UK WWE 24 8:30A 8:30A Feb. 19, 2021 Feb. 18, 2021 R-Truth WWE Untold WWE NXT UK NXT TakeOver 9AM 9AM APA Feb. 18, 2021 Vengeance Day 205 Live Broken Skull Sessions 9:30A 9:30A Feb. 19, 2021 The Best of WWE Raw Talk WWE's THE BUMP WWE Photo Shoot 10AM Sasha Banks 10AM Feb 22, 2021 FEB 24, 2021 Kofi Kingston Elimination Chamber WWE Untold This Week in WWE 10:30A The Best of John Cena 10:30A 2021 APA Feb. 25, 2021 Broken Skull Sessions WWE 365 11AM 11AM Ricochet Elimination Chamber Liv Forever 11:30A Sasha Banks 11:30A 2021 205 Live SmackDown 549 WWE Photo Shoot 12N 12N Feb. 19, 2021 Feb. 26, 2010 Kofi Kingston WWE's The Bump Chasing The Magic WWE 24 12:30P 12:30P Feb. -

State Funds Keep Lynn and Peabody Moving

DEALS OF THE $DAY$ PG. 3 MONDAY, JULY 16, 2018 DEALS OF THE Dumpster day $DAY$ lets residents PG. 3 lighten their load DEALS By Bella diGrazia Now that we only have DEALS ITEM STAFF one barrel, I can’t put ev- OF THE erything into that so all LYNN — Dumpster days the trash accumulates,” $ $ are not a dime a dozen in DAY said Perry Guanci, a Lynn PG. 3 the city. But with a sharp resident since 1963. increase in demand, they The line of junk-laden could be. cars formed on Commer- For the past two decades, cial Street extension off there are three Saturdays the Lynnway Saturday at a year that are dedicated 6 a.m. even through dump- DEALS to helping Lynn residents ster day didn’t officially get rid of their oversized start until 7a.m. Guanci OF THE household junk. The city’s and his daughter, Lisa, trash collection program, made their first dumpster $DAY$ which began in 2014, only run of the day, loading PG. 3 provides residents with their car up with trash one 64 gallon barrel for they were eager to get rid trash. of. The father-daughter “We used to put all our ITEM PHOTO | SPENSER HASAK trash on the sidewalk. DUMPSTER, A7 Gabriel and Maria Morillo team up to push part of their couch into a dumpster.DEALS OF THE Swampscott$DAY$ native divesPG. 3 into challenge By Gayla Cawley 10 p.m. and midnight ITEM STAFF and swim throughout the night. SWAMPSCOTT — “I’m excited for the chal- Swampscott native Craig lenge and am just going to Lewin will undertake the try to enjoy it as much as grueling 21-mile Cata- possible,” said 32-year-old lina Channel Swim on Lewin, who moved to Can- Thursday, swimming the ton three years ago. -

I Am Number Four Wrestlemania Quizlet Space Race Life As a Slave

Page 4 ~ THE VILLAGER/April 7, 2017 YOUTH BRIGADE www.theaustinvillager.com Kappa Alpha Psi Fraternity, Inc Youth of Today Hope of Tomorrow Quizlet I am Number Four WrestleMania you in subjects that maybe book was incredible, from wrestling world. difficult. the characters to the writ- In 1988, WWE pre- My favorite part ing style, the battle scenes pared a small festival to about quizlet is match. to the sweet romantic celebrate WrestleMania Match is so fun because ones. It literally has every- IV, which included auto- you get to compare your thing: first love, loss, dra- graph signings by Brutus score against your class- matic fights, alien beings Beefcake, Rick Rude and mates. My friends and I with the most mind-blow- Bret Hart. There was a like to see who will be the ing powers – called "Lega- brunch, and a 5K run. Af- best one. cies" – and the most evil ter they had success with Another thing that I super-villain-aliens I've Kevin Parish the first one they decided like about quizlet is that it ever read about.I adored Park Crest M.S. to make it an every year helps me memorize the all the characters in this thing, called WWE Kennedy George I have been watch- WrestleMania Axxess. Joshua Moore vocabulary words we have book, Number Four espe- Pflugerville Cele M. S. ing wrestling for 11 years WrestleMania 32 was at KIPP Austin Academy been learning and it feels cially. He was an amaz- good to know that I'm get- ingly strong boy, and he and I enjoy it. -

Department of Education

Vol. 81 Friday, No. 161 August 19, 2016 Part III Department of Education 34 CFR Parts 367, 369, 370, et al. Workforce Innovation and Opportunity Act, Miscellaneous Program Changes; Final Rule VerDate Sep<11>2014 17:03 Aug 18, 2016 Jkt 238001 PO 00000 Frm 00001 Fmt 4717 Sfmt 4717 E:\FR\FM\19AUR3.SGM 19AUR3 mstockstill on DSK3G9T082PROD with RULES3 55562 Federal Register / Vol. 81, No. 161 / Friday, August 19, 2016 / Rules and Regulations DEPARTMENT OF EDUCATION program, 34 CFR part 371 (formerly Finally, as part of this update, the known as ‘‘Vocational Rehabilitation Secretary removes regulations that are 34 CFR Parts 367, 369, 370, 371, 373, Service Projects for American Indians superseded or obsolete and consolidates 376, 377, 379, 381, 385, 386, 387, 388, with Disabilities’’); regulations, where appropriate. In 389, 390, and 396 • The Rehabilitation National addition to removing portions of 34 CFR [Docket No. 2015–ED–OSERS–0002] Activities program, 34 CFR part 373 part 369 pertaining to specific programs (formerly known as ‘‘Special whose statutory authority was repealed RIN 1820–AB71 Demonstration Projects’’); under WIOA (i.e., Migrant Workers • The Protection and Advocacy of program, the Recreational Programs, and Workforce Innovation and Opportunity Individual Rights (PAIR) program, 34 the Projects With Industry program), the Act, Miscellaneous Program Changes CFR part 381; Secretary is removing the remaining AGENCY: Office of Special Education and • The Rehabilitation Training portions of the Part 369 regulations. The Rehabilitative Services, Department of program, 34 CFR part 385; Secretary is also removing parts 376, Education. • The Rehabilitation Long-Term 377, and 389. -

FASTLANE NEXT GENERATION User Guide

Developed by TEI Software Development for Louisiana Economic Development FASTLANE NEXT GENERATION User Guide Contents About FASTLANE Next Generation ............................................................................................................... 4 Available Incentive Programs.................................................................................................................... 4 FASTLANE Next Generation Features ....................................................................................................... 4 How to Use FASTLANE Next Generation ....................................................................................................... 5 Account Registration ................................................................................................................................. 5 Login after new Registration ..................................................................................................................... 7 Log in with an account created in Old FASTLANE ..................................................................................... 8 Forgot Password ..................................................................................................................................... 10 Search Advance Data .............................................................................................................................. 12 Search Project Data ................................................................................................................................ -

A2 6 Falling from Hell (In a Cell)

Journal of Physics Special Topics An undergraduate physics journal A2 6 Falling From Hell (In a Cell) R. Bradley-Evans, R. Gafur, R. Heath, M. Hough Department of Physics and Astronomy, University of Leicester, Leicester, LE1 7RH December 2, 2017 Abstract We take a look at some of the physics involved in the WWE Wrestlemania 32 Hell in a Cell match between The Undertaker and Shane McMahon. By resolving forces and momentum involved in Shane McMahon's fall from the top of the cell and through a table, we calculate the effective impact he feels and compare this to an instantaneous impact (a situation where the table does not break). The reduction in momentum is found to be 943.9 Ns. It is concluded that the table he goes through drastically reduces the impact he would have felt, had it been instantaneous. Introduction fall that Shane McMahon actually experiences. Professional wrestling is a scripted form of Theory sports entertainment, but there are still many The physics involved in this scenario requires match types and situations which often see resolving forces. As Shane McMahon begins his wrestlers putting their bodies through high im- fall from the top of the cell, his initial velocity pact manoeuvres. In this paper, we are going to is 0 ms−1. At this point, the only forces acting take a look at some of the physics involved in upon him are gravity and drag, so the force can the WWE Wrestlemania 32 Hell in a Cell match be calculated by that took place between The Undertaker and Shane McMahon.