Scientific Integrity and Transparency Hearing

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Request for Information to Improve Federal Scientific Integrity Policies (86 FR 34064)

July 27, 2021 Office of Science and Technology Policy Executive Office of the President Eisenhower Executive Office Building 1650 Pennsylvania Avenue Washington, DC 20504 Submitted electronically to [email protected] Re: Request for Information to Improve Federal Scientific Integrity Policies (86 FR 34064) The American Association for the Advancement of Science (AAAS), Association of American Medical Colleges (AAMC), Association of American Universities (AAU), Association of Public and Land-grant Universities (APLU), and Council on Governmental Relations (COGR), collectively the “Associations,” appreciate the opportunity to provide feedback to the White House Office of Science and Technology Policy (OSTP) to help improve the effectiveness of Federal scientific integrity policies in enhancing public trust in science. The Associations strongly support the efforts of the White House in addressing issues of federal scientific integrity and public trust in science. We further appreciate the swift issuance of the Presidential Memorandum on Restoring Trust in Government Through Scientific Integrity and Evidence-Based Policymaking and establishment of the Scientific Integrity Task Force. These actions seek to formalize and standardize scientific integrity through an all-of-government approach. Protecting the integrity of science and ensuring the use of evidence in policymaking should be a national priority across administrations. These efforts come at a critical time for the United States. Public trust in federal science has been shaken by anti-science rhetoric, lack of transparency, and questions about the integrity of science conducted and supported by the federal government. At the same time, building and maintaining trust in this science has never been more important as we confront continuing threats, including a global pandemic and climate change. -

Huda Y. Zoghbi, MD, Howard Hughes Medical Institute, Jan and Dan Duncan Neurological Research Institute at Texas Children’s Hospital and Baylor College of Medicine

Investigator Spotlight: Huda Y. Zoghbi, MD, Howard Hughes Medical Institute, Jan and Dan Duncan Neurological Research Institute at Texas Children’s Hospital and Baylor College of Medicine

It is hard to believe that the 7th World Rett Syndrome Congress has come and gone with great success! Now with summer upon us, IRSF continues to move the spotlight to the committed scientists who made the World Congress an impressive, high-quality meeting with outstanding presentations and discussions. This month we are honored to focus on Dr. Huda Zoghbi, who co-chaired the Basic Research Symposium at the World Congress along with Dr. Gail Mandel. Together, they produced an exciting lineup of speakers who were encouraged to present new, unpublished data in an effort to foster new ideas that will help chart the course for Rett syndrome research.

Dr. Zoghbi is a Howard Hughes Medical Institute (HHMI) Investigator, the Director of the Jan and Dan Duncan Neurological Research Institute at Texas Children’s Hospital, and a Professor at Baylor College of Medicine in Houston, TX. She received her medical degree from Meharry Medical College and completed residency training in pediatrics and neurology at Baylor College of Medicine, where she encountered her first Rett syndrome patient in 1983. Dr. Zoghbi was inspired to pursue additional research training in the area of molecular genetics, and upon completion she joined the faculty of Baylor College of Medicine. In 1999, Dr. Zoghbi and collaborators, including research fellow Ruthie Amir, made a major breakthrough for Rett syndrome. They discovered that mutations in MECP2, the gene encoding methyl-CpG-binding protein 2, cause Rett syndrome.

UC San Diego UC San Diego Electronic Theses and Dissertations

UC San Diego Electronic Theses and Dissertations

Title: Spinocerebellar Ataxia Type 7 is Characterized by Defects in Mitochondrial and Metabolic Function

Permalink: https://escholarship.org/uc/item/02b7m809

Author: Ward, Jacqueline Marie

Publication Date: 2016

Supplemental Material: https://escholarship.org/uc/item/02b7m809#supplemental

Peer reviewed | Thesis/dissertation

UNIVERSITY OF CALIFORNIA, SAN DIEGO. Spinocerebellar Ataxia Type 7 is Characterized by Defects in Mitochondrial and Metabolic Function. A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Biomedical Sciences by Jacqueline Marie Ward. Committee in charge: Professor Albert La Spada, Chair; Professor Eric Bennett; Professor Lawrence Goldstein; Professor Alysson Muotri; Professor Miles Wilkinson. 2016.

Copyright Jacqueline Marie Ward, 2016. All rights reserved.

The Dissertation of Jacqueline Marie Ward is approved, and it is acceptable in quality and form for publication on microfilm and electronically. University of California, San Diego, 2016.

DEDICATION: This work is dedicated to my grandfather, Dr. Wayne Ward, the kindest person I’ve ever known. His memory inspires me to be a better person daily.

TABLE OF CONTENTS: Signature Page; Dedication

Extensions of Remarks E1775 HON. SAM GRAVES HON

December 10, 2014, CONGRESSIONAL RECORD, Extensions of Remarks, E1775

On a personal note, Aubrey was a dear friend and loyal supporter. I will always remember his kindness and his concern for people who deserved a second chance. I will always remember him as a kind, gentle, loving, and brilliant human being who gave so much to others. Today, California’s 13th Congressional District salutes and honors an outstanding individual, Dr. Aubrey O’Neal Dent. His dedication and efforts have impacted so many lives throughout the state of California. I join all of

son to ever serve in the House of Representatives, the oldest person ever elected to a House term and the oldest House member ever to a cast a vote. Mr. HALL is also the last remaining Congressman who served our nation during World War II. And for all of these accomplishments, I would like to thank and congratulate RALPH one more time for his service to the country and his leadership in the Texas Congressional Delegation. Born in Fate, Texas on May 3, 1923, HALL

U.S. Senator Kay Bailey Hutchison, U.S. Senator Lloyd Bentsen, T. Boone Pickens, H. Ross Perot, Red Adair, Bo Derek, Chuck Norris, Ted Williams, Tom Hanks and The Ink Spots. He works well with both Republicans and Democrats, but he ‘‘got religion,’’ in 2004, and became a Republican. Never forgetting his Democrat roots, he commented, ‘‘Being a Democrat was more fun.’’ RALPH HALL always has a story and a new, but often used joke.

Program Book

PARKINSON’S DISEASE 2014: ADVANCING RESEARCH, IMPROVING LIVES. PROGRAM MATERIALS. January 6 – 7, 2014, Natcher Conference Center, National Institutes of Health, Bethesda, MD

About our cover: The program cover image is a stylized version of the Parkinson’s Disease Motor-Related Pattern (PDRP), an abnormal pattern of regional brain function observed in MRI studies which shows increased metabolism indicated by red in some brain regions (pallidothalamic, pontine, and motor cortical areas), and decreased metabolism indicated by blue in others (associated lateral premotor and posterior parietal areas). Original image used with permission of David Eidelberg, M.D. For further information see: Hirano et al., Journal of Neuroscience 28 (16): 4201-4209.

Welcome Message from Dr. Story C. Landis

Welcome to the National Institute of Neurological Disorders and Stroke (NINDS) conference, “Parkinson’s Disease 2014: Advancing Research, Improving Lives.” Remarkable new discoveries and technological advances are rapidly changing the way we study the biological mechanisms of Parkinson’s disease, identify paths to improved treatments, and design effective clinical trials. Elucidating mechanisms and developing and testing effective interventions require a diverse set of approaches and perspectives. The NINDS has organized this conference with the primary goal of seeking consensus on, and prioritizing, research recommendations spanning clinical, translational, and basic Parkinson’s disease research that we support. We have assembled a stellar and dedicated group of session chairs and panelists who have worked collaboratively to identify emerging research opportunities in Parkinson’s research. While we have divided our working groups into three main research areas, we expect each will inform the others over the course of the next two days, and we look forward to both complementary and unique perspectives.

Speakers of the House: Elections, 1913-2021

Speakers of the House: Elections, 1913-2021. Updated January 25, 2021. Congressional Research Service, https://crsreports.congress.gov, RL30857

Summary

Each new House elects a Speaker by roll call vote when it first convenes. Customarily, the conference of each major party nominates a candidate whose name is placed in nomination. A Member normally votes for the candidate of his or her own party conference but may vote for any individual, whether nominated or not. To be elected, a candidate must receive an absolute majority of all the votes cast for individuals. This number may be less than a majority (now 218) of the full membership of the House because of vacancies, absentees, or Members answering “present.”

This report provides data on elections of the Speaker in each Congress since 1913, when the House first reached its present size of 435 Members. During that period (63rd through 117th Congresses), a Speaker was elected six times with the votes of less than a majority of the full membership.

If a Speaker dies or resigns during a Congress, the House immediately elects a new one. Five such elections have occurred since 1913. In the earlier two cases, the House elected the new Speaker by resolution; in the more recent three, the body used the same procedure as at the outset of a Congress.

If no candidate receives the requisite majority, the roll call is repeated until a Speaker is elected. Since 1913, this procedure has been necessary only in 1923, when nine ballots were required before a Speaker was elected.

Huda Zoghbi: Taking Genetic Inquiry to the Next Level

Spectrum | Autism Research News, https://www.spectrumnews.org

NEWS, PROFILES. Huda Zoghbi: Taking genetic inquiry to the next level. BY RACHEL ZAMZOW, 25 JUNE 2021

Most mornings, Huda Zoghbi, 67, climbs a glass-encased, curling staircase to reach her lab on the top and 13th floor of the Jan and Dan Duncan Neurological Research Institute in Houston, Texas. The twisting glass tower, which she designed with a team of architects, echoes the double helix of DNA, a structure that has been central to her career-long quest to uncover genes underlying neurological conditions. As the institute’s director, and as a scientist, she is known for going beyond the standard job description.

Genetics researchers often cast a wide net and sequence thousands of genes at a time. But in her prolific career, Zoghbi has focused on a handful of genes, methodically building up an understanding of their function one careful step at a time. Thanks to that approach, Zoghbi has made a number of landmark discoveries, including identifying the genetic roots of Rett syndrome, an autism-related condition that primarily affects girls, as well as the genetic mutations that spur spinocerebellar ataxia, a degenerative motor condition. She has authored more than 350 journal articles. Her accomplishments have earned her almost every major biology and neuroscience research award, including the prestigious Breakthrough Prize in 2017 and the Brain Prize in 2020.

“She’s clearly the international leader in the field,” said the late Stephen Warren, professor of human genetics at Emory University in Atlanta, Georgia. Zoghbi never set out to lead a large research center, she says; her heart is in the lab.

CQ Committee Guide

SPECIAL REPORT: Committee Guide. Complete House and Senate Rosters: 113th Congress, Second Session

THE PEOPLE'S BUSINESS: The House Energy and Commerce Committee, in its Rayburn House Office Building home, marks up bills on Medicare and the Federal Communications Commission in July 2013. (Photo: Douglas Graham/CQ Roll Call)

www.cq.com | MARCH 24, 2014 | CQ WEEKLY

Senate Leadership: 113th Congress, Second Session

President of the Senate: Vice President Joseph R. Biden Jr. President Pro Tempore: Patrick J. Leahy, D-Vt.

DEMOCRATIC LEADERS. Majority Leader: Harry Reid, Nev.; Majority Whip: Richard J. Durbin, Ill.; Conference Vice Chairman: Charles E. Schumer, N.Y.; Policy Committee Chairman: Charles E. Schumer, N.Y.; Conference Secretary: Patty Murray, Wash.; Steering and Outreach Committee Chairman: Mark Begich, Alaska; Chief Deputy Whip: Barbara Boxer, Calif.; Democratic Senatorial Campaign Committee Chairman: Michael Bennet, Colo.

REPUBLICAN LEADERS. Minority Leader: Mitch McConnell, Ky.; Minority Whip: John Cornyn, Texas; Conference Chairman: John Thune, S.D.; Conference Vice Chairman: Roy Blunt, Mo.; Policy Committee Chairman: John Barrasso, Wyo.; Chief Deputy Whip: Michael D. Crapo, Idaho; National Republican Senatorial Committee Chairman: Jerry Moran, Kan.

House Leadership: 113th Congress, Second Session

Speaker of the House: John A. Boehner, R-Ohio

REPUBLICAN LEADERS. Majority Leader: Eric Cantor, Va.; Majority Whip: Kevin McCarthy, Calif.; Conference Chairwoman: Cathy McMorris Rodgers, Wash.; Conference Vice Chairwoman: Lynn Jenkins, Kan.; Conference Secretary: Virginia Foxx, N.C.; Policy Committee Chairman: James Lankford, Okla.; Chief Deputy Whip: Peter Roskam, Ill.; National Republican Congressional Committee Chairman: Greg Walden, Ore.

BUSINESS BRIEFS Spring 2013

BAY AREA HOUSTON ECONOMIC PARTNERSHIP’S BUSINESS BRIEFS, Volume 6, Number 1, Spring 2013

IN THIS ISSUE: I. Special Honors; II. Business Assistance Programs; III. Marketing; IV. Advertising, Public Relations & Media Communications; V. Preserve NASA Funding Levels; VI. Bay Area Houston Advanced Technology Consortium (BayTech); VII. Space Alliance

The amount of economic activity we’ve seen over the first quarter of 2013 may not be unprecedented, but it certainly has kept us busy at BAHEP. We’re currently working on 52 project leads across various industry sectors. It seems that many companies, national and international, are paying attention to the incentives and well-educated workforce that Texas and Bay Area Houston have to offer.

Along with our community partners, we have also been heavily involved in advocacy work. We’ve traveled to Washington, D.C., to meet with freshmen members of Congress to help educate them about NASA and the importance of America’s human space program. We’ve been to Austin so much that it’s starting to become a home away from home. Legislators have heard about the priorities this community places on education, the state franchise tax, recreational maritime jobs preservation, tort reform, and telecommunications. We joined our aerospace partners during Space Week Texas to inform the Texas legislature once again about the huge economic impact that NASA Johnson Space Center has on this state and to stress the importance of the Texas Aerospace Scholars program and Technology Outreach Program. We also traveled to Austin to participate in Chemical Day in support of this hugely important industry sector.

Employees of Northrop Grumman Political Action Committee (ENGPAC) 2017 Contributions

Employees of Northrop Grumman Political Action Committee (ENGPAC) 2017 Contributions

| Name | Candidate | Office | Total |
| --- | --- | --- | --- |
| ALABAMA | | | $69,000 |
| American Security PAC | Rep. Michael Dennis Rogers (R) | Leadership PAC | $5,000 |
| Byrne for Congress | Rep. Bradley Roberts Byrne (R) | Congressional District 01 | $5,000 |
| BYRNE PAC | Rep. Bradley Roberts Byrne (R) | Leadership PAC | $5,000 |
| Defend America PAC | Sen. Richard Craig Shelby (R) | Leadership PAC | $5,000 |
| Martha Roby for Congress | Rep. Martha Roby (R) | Congressional District 02 | $10,000 |
| Mike Rogers for Congress | Rep. Michael Dennis Rogers (R) | Congressional District 03 | $6,500 |
| MoBrooksForCongress.Com | Rep. Morris Jackson Brooks, Jr. (R) | Congressional District 05 | $5,000 |
| Reaching for a Brighter America PAC | Rep. Robert Brown Aderholt (R) | Leadership PAC | $2,500 |
| Robert Aderholt for Congress | Rep. Robert Brown Aderholt (R) | Congressional District 04 | $7,500 |
| Strange for Senate | Sen. Luther Strange (R) | United States Senate | $15,000 |
| Terri Sewell for Congress | Rep. Terri Andrea Sewell (D) | Congressional District 07 | $2,500 |
| ALASKA | | | $14,000 |
| Sullivan For US Senate | Sen. Daniel Scott Sullivan (R) | United States Senate | $5,000 |
| Denali Leadership PAC | Sen. Lisa Ann Murkowski (R) | Leadership PAC | $5,000 |
| True North PAC | Sen. Daniel Scott Sullivan (R) | Leadership PAC | $4,000 |
| ARIZONA | | | $29,000 |
| Committee To Re-Elect Trent Franks To Congress | Rep. Trent Franks (R) | Congressional District 08 | $4,500 |
| Country First Political Action Committee Inc. (COUNTRY FIRST PAC) | Sen. John Sidney McCain, III (R) | Leadership PAC | $3,500 |
| Gallego for Arizona | Rep. Ruben M. Gallego (D) | Congressional District 07 | $5,000 |
| McSally for Congress | Rep. Martha Elizabeth McSally (R) | Congressional District 02 | $10,000 |
| Sinema for Arizona | Rep. | | |

Congressional Record: United States of America, Proceedings and Debates of the 113th Congress, Second Session

Congressional Record, United States of America. PROCEEDINGS AND DEBATES OF THE 113th CONGRESS, SECOND SESSION. Vol. 160, WASHINGTON, WEDNESDAY, NOVEMBER 12, 2014, No. 137

House of Representatives

The House met at 2 p.m. and was called to order by the Speaker.

PRAYER

The Chaplain, the Reverend Patrick J. Conroy, offered the following prayer: God of the universe, we give You thanks for giving us another day. A full week later, we are thankful that we live in a nation where a peaceful change or readjustment of government is not only expected, but achieved. May it ever be so. Bless the Members of this assembly as they return to the work facing them, work that needs to be done.

Ms. FOXX led the Pledge of Allegiance as follows: I pledge allegiance to the Flag of the United States of America, and to the Republic for which it stands, one nation under God, indivisible, with liberty and justice for all.

MESSAGE FROM THE PRESIDENT

A message in writing from the President of the United States was communicated to the House by Mr. Brian Pate, one of his secretaries.

MAKING IN ORDER CONSIDERATION OF MOTIONS TO SUSPEND

H.R. 3716, to ratify a water settlement agreement affecting the Pyramid Lake Paiute Tribe, and for other purposes;

H.R. 5062, to amend the Consumer Financial Protection Act of 2010 to specify that privilege and confidentiality are maintained when information is shared by certain nondepository covered persons with Federal and State financial regulators, and for other purposes;

H.R. 5404, to amend title 38, United States Code, to extend certain expiring provisions of law administered by the Secretary of Veterans Affairs, and for other purposes;

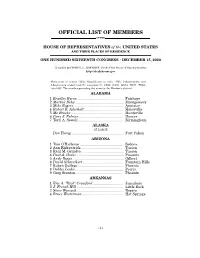

Official List of Members

OFFICIAL LIST OF MEMBERS OF THE HOUSE OF REPRESENTATIVES of the UNITED STATES AND THEIR PLACES OF RESIDENCE. ONE HUNDRED SIXTEENTH CONGRESS, DECEMBER 15, 2020. Compiled by CHERYL L. JOHNSON, Clerk of the House of Representatives, http://clerk.house.gov

Democrats in roman (233); Republicans in italic (195); Independents and Libertarians underlined (2); vacancies (5) CA08, CA50, GA14, NC11, TX04; total 435. The number preceding the name is the Member's district.

ALABAMA: 1 Bradley Byrne, Fairhope; 2 Martha Roby, Montgomery; 3 Mike Rogers, Anniston; 4 Robert B. Aderholt, Haleyville; 5 Mo Brooks, Huntsville; 6 Gary J. Palmer, Hoover; 7 Terri A. Sewell, Birmingham

ALASKA: AT LARGE Don Young, Fort Yukon

ARIZONA: 1 Tom O'Halleran, Sedona; 2 Ann Kirkpatrick, Tucson; 3 Raúl M. Grijalva, Tucson; 4 Paul A. Gosar, Prescott; 5 Andy Biggs, Gilbert; 6 David Schweikert, Fountain Hills; 7 Ruben Gallego