Chess Neighborhoods, Function Combination, and Reinforcement Learning

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Multilinear Algebra and Chess Endgames

Games of No Chance, MSRI Publications, Volume 29, 1996. Multilinear Algebra and Chess Endgames. Lewis Stiller.

Abstract. This article has three chief aims: (1) To show the wide utility of multilinear algebraic formalism for high-performance computing. (2) To describe an application of this formalism in the analysis of chess endgames, and results obtained thereby that would have been impossible to compute using earlier techniques, including a win requiring a record 243 moves. (3) To contribute to the study of the history of chess endgames, by focusing on the work of Friedrich Amelung (in particular his apparently lost analysis of certain six-piece endgames) and that of Theodor Molien, one of the founders of modern group representation theory and the first person to have systematically numerically analyzed a pawnless endgame.

1. Introduction

Parallel and vector architectures can achieve high peak bandwidth, but it can be difficult for the programmer to design algorithms that exploit this bandwidth efficiently. Application performance can depend heavily on unique architecture features that complicate the design of portable code [Szymanski et al. 1994; Stone 1993]. The work reported here is part of a project to explore the extent to which the techniques of multilinear algebra can be used to simplify the design of high-performance parallel and vector algorithms [Johnson et al. 1991]. The approach is this:

• Define a set of fixed, structured matrices that encode architectural primitives of the machine, in the sense that left-multiplication of a vector by this matrix is efficient on the target architecture.
• Formulate the application problem as a matrix multiplication.

Super Human Chess Engine

Super Human Chess Engine. FIDE Master / FIDE Trainer Charles Storey, PGCE. World Tour Young Masters Training Program.

Contents
Introduction
Power Principles
Human Opening Book
‘The Core’ Super Human Chess Engine 2020
Acronym Algorithms that make The Storey Human Chess Engine
4Ps: Prioritise Poorly Placed Pieces
CCTV: Checks / Captures / Threats / Vulnerabilities
CCTV 2.0: Checks / Checkmate Threats / Captures / Threats / Vulnerabilities
DAFiii: Attack / Features / Initiative / I for tactics / Ideas (crazy)
The Fruit Tree analysis process

Chess-Training-Guide.Pdf

Chess Training Guide for Teachers and Parents. Created by Grandmaster Susan Polgar:

• U.S. Chess Hall of Fame Inductee
• President and Founder of the Susan Polgar Foundation
• Director of SPICE (Susan Polgar Institute for Chess Excellence) at Webster University
• FIDE Senior Chess Trainer
• 2006 Women’s World Chess Cup Champion
• Winner of 4 Women’s World Chess Championships
• The only World Champion in history to win the Triple-Crown (Blitz, Rapid and Classical)
• 12 Olympic Medals (5 Gold, 4 Silver, 3 Bronze)
• 3-time US Open Blitz Champion
• #1 ranked woman player in the United States
• Ranked #1 in the world at age 15 and in the top 3 for about 25 consecutive years
• 1st woman in history to qualify for the Men’s World Championship
• 1st woman in history to earn the Grandmaster title
• 1st woman in history to coach a Men's Division I team to 7 consecutive Final Four Championships
• 1st woman in history to coach the #1 ranked Men's Division I team in the nation

Get Smart! Play Chess! www.ChessDailyNews.com www.twitter.com/SusanPolgar www.facebook.com/SusanPolgarChess www.instagram.com/SusanPolgarChess www.SusanPolgar.com www.SusanPolgarFoundation.org

SPF Chess Training Program for Teachers ©, 7/2/2019

Lesson 1

Lesson goals:

• Excite kids about the fun game of chess
• Relate the cool history of chess
• Incorporate chess with education: Learning about India and Persia
• Incorporate chess with education: Learning about the chess board and its coordinates

Who invented chess and why? Talk about India / Persia – connects to Geography. Tell the story of “seed”.

Chess Masterpieces: Highlights from the Dr. George and Vivian Dean Collection

Chess Masterpieces: Highlights from the Dr. George and Vivian Dean Collection. Exhibition Checklist. World Chess Hall of Fame, Saint Louis, Missouri. September 9, 2011-February 12, 2012.

1.1. Neresheimer French vs. Germans Set and Castle Board, Hanau, Germany, 1905-10. Silver and gilded silver, ivory, diamonds, sapphires, pearls, amethysts, rubies, and marble.

Before WWI, Neresheimer, of Hanau, Germany, was a leading producer of ornate silverware and decorative

(later, in Europe, replaced by a queen). These were typically flanked by elephants (later to become bishops), though in this case, they are camels with drummers; cavalrymen (later to become knights); and chariots or elephants, (later to become rooks or “castles”). A frontline of eight foot soldiers (pawns) completed each side.

2.1. Abstract Bead and Dart Style Set with Board, India, 1700s. Natural and green-stained ivory, black lacquer-work folding board with silver and mother-of-pearl.

This classical Indian style is influenced by the Islamic trend toward total abstraction of the design. The pieces are all lathe-turned. The black lacquer finish, made in India from the husks of the lac insect, was first developed by the Chinese. The intricate inlaid silver grid pattern traces alternating squares filled with lacy inscribed fern leaf designs and inlaid mother-of-pearl disks. These decorations combine a grid of squares, common to Western forms of chess, with another grid of inlaid center points, found in Japanese and Chinese

2.3. Mogul Style Set with Presentation Case, India, 1800s. Beryl with inset diamonds, rubies, and gold, wooden presentation case clad in maroon velvet and silk-lined.

Stalemate to Checkmate: New Data and Multivariate Methods Transform E-underwriting

Stalemate to Checkmate: New Data and Multivariate Methods Transform E-underwriting

Bruce Bosco, Vice President, Business Development, AURA

Businesspeople often describe competition in terms of chess, and rightly so. It’s a game of strategy and skill, but not of ambiguous outcomes: Only two things can happen in a chess game – a checkmate or a stalemate. The analogy effectively captures the challenges confronting U.S. life insurers as carriers seek to expand in seemingly mature markets.

Consider the stalemate: the point during a chess game in which the player is left with no legal move, and the game ends in a draw. The same term could describe the state of the U.S. life insurance market, where carriers confront a coverage gap worth an estimated $16 trillion. While this vast pool of under-protected potential policyholders seems to represent a tremendous opportunity, on the whole it has remained stubbornly out of reach. After investing mightily in improving the purchasing experience, insurance leaders may understandably feel as if there are no moves left. Relax underwriting assessment any further, and carriers rightly fear that a streamlined sale today may lead to widening losses tomorrow. Until recently this stalemate seemed destined to continue indefinitely. To move from stalemate to checkmate, insurers must first understand that the rules of the game have changed.

New Game, New Rules

Today’s life insurance consumers are looking for a buying experience befitting the digital age. They want to make quick, convenient, and affordable purchases, but this expectation is often thwarted by onerous underwriting requirements. Thankfully, the combination of new data sources and automated underwriting technologies has enabled insurers to change the rules of the game.

Double Fianchetto – the Modern Chess Lifestyle

Double-Fianchetto: The Modern Chess Lifestyle, by Daniel Hausrath. www.thinkerspublishing.com

Managing Editor: Romain Edouard. Assistant Editor: Daniel Vanheirzeele. Graphic Artist: Philippe Tonnard. Cover design: Iwan Kerkhof. Typesetting: i-Press ‹www.i-press.pl›. First edition 2020 by Thinkers Publishing.

Double-Fianchetto – the Modern Chess Lifestyle. Copyright © 2020 Daniel Hausrath. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior written permission from the publisher. ISBN 978-94-9251-075-4. D/2020/13730/3. All sales or enquiries should be directed to Thinkers Publishing, 9850 Landegem, Belgium. e-mail: [email protected] website: www.thinkerspublishing.com

Table of Contents
Key to Symbols
Preface
Part 1. Double Fianchetto with White
Chapter 1. Double fianchetto against the King’s Indian and Grünfeld
Chapter 2. Double fianchetto structures against the Dutch
Chapter 3. Double fianchetto against the Queen’s Gambit and Tarrasch
Chapter 4. Different move orders to reach the Double Fianchetto
Chapter 5. Different resulting positions from the Double Fianchetto and theoretically-important nuances
Part 2. Double Fianchetto with Black
Chapter 1. Double fianchetto in the Accelerated Dragon
Chapter 2. Double fianchetto in the Caro Kann
Chapter 3. Double fianchetto in the Modern
Chapter 4. Double fianchetto in the “Hippo”
Chapter 5. Double fianchetto against 1.d4
Chapter 6. Double fianchetto in the Fischer System
Chapter 7.

First Steps: the French, Cyrus Lakdawala

First Steps: the French, Cyrus Lakdawala. www.everymanchess.com

About the Author

Cyrus Lakdawala is an International Master, a former National Open and American Open Champion, and a six-time State Champion. He has been teaching chess for over 30 years, and coaches some of the top junior players in the U.S.

Also by the Author: Play the London System; A Ferocious Opening Repertoire; The Slav: Move by Move; 1...d6: Move by Move; The Caro-Kann: Move by Move; The Four Knights: Move by Move; Capablanca: Move by Move; The Modern Defence: Move by Move; Kramnik: Move by Move; The Colle: Move by Move; The Scandinavian: Move by Move; Botvinnik: Move by Move; The Nimzo-Larsen Attack: Move by Move; Korchnoi: Move by Move; The Alekhine Defence: Move by Move; The Trompowsky Attack: Move by Move; Carlsen: Move by Move; The Classical French: Move by Move; Larsen: Move by Move; 1...b6: Move by Move; Bird’s Opening: Move by Move; Petroff Defence: Move by Move; Fischer: Move by Move; Anti-Sicilians: Move by Move

Contents
About the Author
Bibliography
Introduction
1. The Main Line Winawer
2. The Winawer: Fourth Move Alternatives
3. The Classical Variation
4. The Tarrasch Variation
5. The Advance Variation
6. The Exchange Variation
7. Other Lines
Index of Variations
Index of Complete Games

Introduction

What makes a French player?

[Diagram: the position after 1 e4 e6]

The French is an opening so vast in scale that it almost defies classification.

Rules for Chess

Rules for Chess

Object of the Chess Game

It's rather simple; there are two players, one having 16 black or dark-colored chess pieces and the other having 16 white or light-colored chess pieces. The chess players move on a square chessboard made up of 64 individual squares, consisting of 32 dark squares and 32 light squares. Each chess piece has a defined starting square, with the dark chess pieces aligned on one side of the board and the light pieces on the other. There are 6 different types of chess pieces, each with its own unique method of moving on the chessboard. The chess pieces are used both to attack and to defend against attack from the other player's chessmen. Each player has one chess piece called the king. The ultimate objective of the game is to capture the opponent's king. Having said this, the king will never actually be captured. When either side's king is trapped so that it cannot move without being taken, it's called "checkmate" or, in the shortened version, "mate". At this point, the game is over. The object of playing chess is really quite simple, but mastering the game is a totally different story.

Chess Board Setup

Now that you have a basic concept of the object of the chess game, the next step is to get the chessboard and chess pieces set up according to the rules of playing chess. Let's start with the chess pieces. The 16 chess pieces are made up of 1 king, 1 queen, 2 bishops, 2 knights, 2 rooks, and 8 pawns.
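
The counts quoted in this excerpt (64 squares split 32/32, and 16 pieces of 6 types per side) can be checked with a few lines of Python. This is only an illustrative sketch: the names `PIECES_PER_SIDE` and `square_colors` are hypothetical, and the coloring rule assumes the standard convention that the corner square a1 is dark.

```python
from collections import Counter

# Piece counts for one side, as listed in the rules excerpt.
PIECES_PER_SIDE = {
    "king": 1, "queen": 1, "bishop": 2,
    "knight": 2, "rook": 2, "pawn": 8,
}


def square_colors(size: int = 8) -> Counter:
    """Count dark and light squares on a size x size board.

    Assumption: the corner square a1 (file 0, rank 0) is dark,
    so a square is dark when file + rank is even.
    """
    colors = Counter()
    for rank in range(size):
        for file in range(size):
            key = "dark" if (file + rank) % 2 == 0 else "light"
            colors[key] += 1
    return colors


counts = square_colors()
total_pieces = sum(PIECES_PER_SIDE.values())
print(counts["dark"], counts["light"], total_pieces)
```

Because adjacent squares always differ in `file + rank` parity, the same rule also guarantees that no two neighboring squares share a color.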

1500 Forced Mates

1500 Forced Mates, Jakov Geller

Contents
Introduction
Part 1. Basic Skills
Chapter 1. Checkmate with One Piece
Chapter 2. Checkmate with More Than One Piece
Chapter 3. Captures
Part 2. Capabilities of Your Own Pieces
Chapter 4. Sacrifices
Chapter 5. Pawn Promotion
Chapter 6. Vacation
Chapter 7. Attraction
Chapter 8. Combinations
Part 3. Overpowering Your Opponent’s Pieces
Chapter 9. Elimination
Chapter 10. Combination: Attraction + Elimination
Chapter 11. Combinations

Glossary of Chess

Glossary of chess

See also: Glossary of chess problems, Index of chess articles and Outline of chess

This page explains commonly used terms in chess in alphabetical order. Some of these have their own pages, like fork and pin. For a list of unorthodox chess pieces, see Fairy chess piece; for a list of terms specific to chess problems, see Glossary of chess problems; for a list of chess-related games, see Chess variants.

Contents: A, B, C, D, E, F, G, H, I, J, K, L, M, N, O, P, Q, R, S, T, U, V, W, X, Z, References

A

absolute pin: A pin against the king is called absolute since the pinned piece cannot legally move (as moving it would expose the king to check). Cf. relative pin.

active: 1. Describes a piece that controls a number of squares, or a piece that has a number of squares available for its next move. 2. An “active defense” is a defense employing threat(s) or counterattack(s). Antonym: passive.

[Figure: Envelope used for the adjournment of a match game, Efim Geller vs. Bent Larsen, Copenhagen 1966]

adjournment: Suspension of a chess game with the intention to finish it later. It was once very common in high-level competition, often occurring soon after the first time control, but the practice has been abandoned due to the advent of computer analysis. See sealed move.

adjudication: Decision by a strong chess player (the adjudicator) on the outcome of an unfinished game. This practice is now uncommon in over-the-board events, but does happen in online chess when one player refuses to continue after an adjournment.

[...] are often pawn moves; since pawns cannot move backwards to return to squares they have left, their

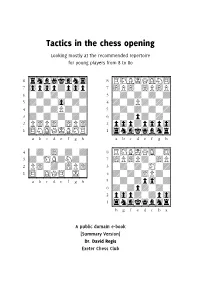

Tactics in the Chess Opening

Tactics in the chess opening. Looking mostly at the recommended repertoire for young players from 8 to 80.

[Chess diagrams]

A public domain e-book [Summary Version]. Dr. David Regis, Exeter Chess Club.

Contents
Introduction
Playing White with 1. e4 e5
Scotch Gambit
Italian Game
Evans' Gambit
Italian Game
Two Knights'
Petroff Defence
Elephant Gambit
Latvian Gambit
Philidor's Defence

Cyrus Lakdawala Tactical Training

Cyrus Lakdawala, Tactical Training. www.everymanchess.com

About the Author

Cyrus Lakdawala is an International Master, a former National Open and American Open Champion, and a six-time State Champion. He has been teaching chess for over 30 years, and coaches some of the top junior players in the U.S.

Also by the Author: 1...b6: Move by Move; 1...d6: Move by Move; A Ferocious Opening Repertoire; Anti-Sicilians: Move by Move; Bird’s Opening: Move by Move; Botvinnik: Move by Move; Capablanca: Move by Move; Carlsen: Move by Move; Caruana: Move by Move; First Steps: the Modern; Fischer: Move by Move; Korchnoi: Move by Move; Kramnik: Move by Move; Larsen: Move by Move; Opening Repertoire: ...c6; Opening Repertoire: Modern Defence; Opening Repertoire: The Sveshnikov; Petroff Defence: Move by Move; Play the London System; The Alekhine Defence: Move by Move; The Caro-Kann: Move by Move; The Classical French: Move by Move; The Colle: Move by Move; The Four Knights: Move by Move; The Modern Defence: Move by Move; The Nimzo-Larsen Attack: Move by Move; The Scandinavian: Move by Move; The Slav: Move by Move; The Trompowsky Attack: Move by Move

Contents
About the Author
Bibliography
Introduction
1. Various Mating Patterns
2. Shorter Mates
3. Longer Mates
4. Annihilation of Defensive Barrier/Obliteration/Demolition of Structure
5. Clearance/Line Opening
6. Decoy/Attraction/Removal of the Guard
7. Defensive Combinations
8. Deflection
9. Desperado
10. Discovered Attack
11. Double Attack
12. Drawing Combinations
13. Fortress
14. Greek Gift Sacrifice