A New Approach to Switch Fabrics Based on Mini-Router Grids and Output Queueing

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Transmit Processing in MIMO Wireless Systems

IEEE 6th CAS Symp. on Emerging Technologies: Mobile and Wireless Comm Shanghai, China, May 31-June 2, 2004 Transmit Processing in MIMO Wireless Systems Josef A. Nossek, Michael Joham, and Wolfgang Utschick Institute for Circuit Theory and Signal Processing, Munich University of Technology, 80333 Munich, Germany Phone: +49 (89) 289-28501, Email: [email protected]

Abstract: By restricting the receive filter to be scalar, we derive the optimizations for linear transmit processing from the respective joint optimizations of transmit and receive filters. We can identify three filter types similar to receive processing: the transmit matched filter (TxMF), the transmit zero-forcing filter (TxZF), and the transmit Wiener filter (TxWF). The TxWF has similar convergence properties as the receive Wiener filter, i.e. it converges to the matched filter and the zero-forcing filter for low and high signal to noise ratio, respectively. Additionally, the mean square error (MSE) of the TxWF is a lower bound for the MSEs of the TxMF and the TxZF. The optimizations for the linear transmit filters are extended to obtain the respective Tomlinson-Harashima precoding (THP) solutions.

weighted by a scalar in Section IV. In Section V, we compare linear transmit and receive processing. A popular nonlinear transmit processing scheme is Tomlinson Harashima precoding (THP) originally proposed for dispersive single input single output (SISO) systems in [10], [11], but can also be applied to MIMO systems [12], [13]. THP is similar to decision feedback equalization (DFE), but contrary to DFE, THP feeds back the already transmitted symbols to reduce the interference caused by these symbols at the receivers. Although THP is based on the application of nonlinear modulo operators at the receivers and the transmitter, -

Download the Basketballplayer.Ngql File Here

Nebula Graph Database Manual v1.2.1 Min Wu, Amber Zhang, XiaoDan Huang 2021 Vesoft Inc. Table of contents: 1. Overview: 1.1 About This Manual, 1.2 Welcome to Nebula Graph 1.2.1 Documentation, 1.3 Concepts, 1.4 Quick Start, 1.5 Design and Architecture. 2. Query Language: 2.1 Reader, 2.2 Data Types, 2.3 Functions and Operators, 2.4 Language Structure, 2.5 Statement Syntax. 3. Build Develop and Administration: 3.1 Build, 3.2 Installation, 3.3 Configuration, 3.4 Account Management Statement, 3.5 Batch Data Management, 3.6 Monitoring and Statistics, 3.7 Development and API. 4. Data Migration: 4.1 Nebula Exchange. 5. Nebula Graph Studio: 5.1 Change Log, 5.2 About Nebula Graph Studio, 5.3 Deploy and connect, 5.4 Quick start, 5.5 Operation guide. 6. Contributions: 6.1 Contribute to Documentation, 6.2 Cpp Coding Style, 6.3 How to Contribute, 6.4 Pull Request and Commit Message Guidelines. 7. Appendix: 7.1 Comparison Between Cypher and nGQL, 7.2 Comparison Between Gremlin and nGQL, 7.3 Comparison Between SQL and nGQL, 7.4 Vertex Identifier and Partition. 1. Overview 1.1 About This Manual This is the Nebula Graph User Manual. -

Three Methods of Finding Remainder on the Operation of Modular

Zin Mar Win, Khin Mar Cho; International Journal of Advance Research and Development (Volume 5, Issue 5) Available online at: www.ijarnd.com Three methods of finding remainder on the operation of modular exponentiation by using calculator 1Zin Mar Win, 2Khin Mar Cho 1University of Computer Studies, Myitkyina, Myanmar 2University of Computer Studies, Pyay, Myanmar ABSTRACT The primary purpose of this paper is to serve as a learning aid for learners. Learning aids enhance one's learning abilities and help to increase one's learning potential. They may include books, diagrams, computers, recordings, notes, strategies, or any other appropriate items. In this paper we review the types of modulo operations and present what we know in the easiest ways. The modulo operation finds the remainder of a division. The modulus operator, abbreviated “mod” or “%” in many programming languages, is useful in a variety of circumstances. It is commonly used to take a randomly generated number and reduce that number to a random number on a smaller range, and it can also quickly tell us if one number is a factor of another. If we wanted to know if a number was odd or even, we could use modulus to quickly tell us by asking for the remainder of the number when divided by 2. Modular exponentiation is a type of exponentiation performed over a modulus. The operation of modular exponentiation calculates the remainder c when an integer b raised to the e-th power, bᵉ, is divided by a positive integer m, that is, c = bᵉ mod m [1]. -
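The definition c = bᵉ mod m quoted in this abstract can be illustrated with a short sketch. The function name `mod_pow` is hypothetical, and the square-and-multiply method shown here is a standard textbook technique, not necessarily one of the three calculator methods the paper describes.

```python
def mod_pow(b: int, e: int, m: int) -> int:
    """Return b**e mod m for e >= 0 and m > 0 using square-and-multiply."""
    if e < 0 or m <= 0:
        raise ValueError("expected e >= 0 and m > 0")
    result = 1 % m              # 1 % m is 0 when m == 1, the only possible remainder
    base = b % m                # reduce the base once up front
    while e > 0:
        if e & 1:               # lowest bit of the exponent is set
            result = (result * base) % m
        base = (base * base) % m
        e >>= 1                 # move to the next bit of the exponent
    return result


print(mod_pow(4, 13, 497))      # 445
```

Python's built-in three-argument `pow(b, e, m)` computes the same value, so it can serve as a cross-check.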

The Best of Both Worlds?

The Best of Both Worlds? Reimplementing an Object-Oriented System with Functional Programming on the .NET Platform and Comparing the Two Paradigms Erik Bugge Thesis submitted for the degree of Master in Informatics: Programming and Networks 60 credits Department of Informatics Faculty of mathematics and natural sciences UNIVERSITY OF OSLO Autumn 2019 © 2019 Erik Bugge The Best of Both Worlds? http://www.duo.uio.no/ Printed: Reprosentralen, University of Oslo Abstract Programming paradigms are categories that classify languages based on their features. Each paradigm category contains rules about how the program is built. Comparing programming paradigms and languages is important, because it lets developers make more informed decisions when it comes to choosing the right technology for a system. Making meaningful comparisons between paradigms and languages is challenging, because the subjects of comparison are often so dissimilar that the process is not always straightforward, or does not always yield particularly valuable results. Therefore, multiple avenues of comparison must be explored in order to get meaningful information about the pros and cons of these technologies. This thesis looks at the difference between the object-oriented and functional programming paradigms on a higher level, before delving in detail into a development process that consisted of reimplementing parts of an object-oriented system into functional code. Using results from major comparative studies, exploring high-level differences between the two paradigms’ tools for modular programming and general program decompositions, and looking at the development process described in detail in this thesis in light of the aforementioned findings, a comparison on multiple levels was done. -

Functional C

Functional C Pieter Hartel Henk Muller January 3, 1999 University of Southampton University of Bristol Revision: 6.7 To Marijke Pieter To my family and other sources of inspiration Henk © 1995, 1996 Pieter Hartel & Henk Muller, all rights reserved. Preface The Computer Science Departments of many universities teach a functional language as the first programming language. Using a functional language with its high level of abstraction helps to emphasize the principles of programming. Functional programming is only one of the paradigms with which a student should be acquainted. Imperative, Concurrent, Object-Oriented, and Logic programming are also important. Depending on the problem to be solved, one of the paradigms will be chosen as the most natural paradigm for that problem. This book is the course material to teach a second paradigm: imperative programming, using C as the programming language. The book has been written so that it builds on the knowledge that the students have acquired during their first course on functional programming, using SML. The prerequisite of this book is that the principles of programming are already understood; this book does not specifically aim to teach ‘problem solving’ or ‘programming’. This book aims to: • Familiarise the reader with imperative programming as another way of implementing programs. The aim is to preserve the programming style, that is, the programmer thinks functionally while implementing an imperative program. • Provide understanding of the differences between functional and imperative programming. Functional programming is a high level activity. -

Fast Integer Division – a Differentiated Offering from C2000 Product Family

Application Report SPRACN6, July 2019 Fast Integer Division – A Differentiated Offering From C2000™ Product Family Prasanth Viswanathan Pillai, Himanshu Chaudhary, Aravindhan Karuppiah, Alex Tessarolo ABSTRACT This application report provides an overview of the different division and modulo (remainder) functions and their associated properties. Later, the document describes how the different division functions can be implemented using the C28x ISA and intrinsics supported by the compiler. Contents: 1 Introduction; 2 Different Division Functions; 3 Intrinsic Support Through TI C2000 Compiler; 4 Cycle Count; 5 Summary; 6 References. List of Figures: 1 Truncated Division Function; 2 Floored Division Function; 3 Euclidean -
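The three division functions named in this report's figure list (truncated, floored, Euclidean) differ only in how they treat negative operands. The sketch below uses the standard mathematical definitions; the function names are hypothetical and the code is unrelated to the C28x intrinsics the report covers.

```python
def truncated_divmod(a: int, b: int) -> tuple[int, int]:
    """Quotient rounds toward zero; the remainder takes the sign of the dividend."""
    q = abs(a) // abs(b)
    if (a < 0) != (b < 0):      # operands have opposite signs
        q = -q
    return q, a - b * q


def floored_divmod(a: int, b: int) -> tuple[int, int]:
    """Quotient rounds toward negative infinity; the remainder takes the sign of the divisor."""
    return a // b, a % b        # Python's own integer operators are floored


def euclidean_divmod(a: int, b: int) -> tuple[int, int]:
    """The remainder is always in the range 0 <= r < |b|."""
    r = a % abs(b)              # non-negative because the modulus is positive
    q = (a - r) // b            # exact division, since b divides a - r
    return q, r


for name, fn in [("truncated", truncated_divmod),
                 ("floored", floored_divmod),
                 ("euclidean", euclidean_divmod)]:
    print(name, fn(-7, 2), fn(7, -2))
```

All three satisfy a = b·q + r; they agree whenever both operands are positive and diverge only once a sign is negative.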

Strength Reduction of Integer Division and Modulo Operations

Strength Reduction of Integer Division and Modulo Operations Saman Amarasinghe, Walter Lee, Ben Greenwald M.I.T. Laboratory for Computer Science Cambridge, MA 02139, U.S.A. {saman,walt,beng}@lcs.mit.edu http://www.cag.lcs.mit.edu/raw Abstract Integer division, modulo, and remainder operations are expressive and useful operations. They are logical candidates to express complex data accesses such as the wrap-around behavior in queues using ring buffers, array address calculations in data distribution, and cache locality compiler-optimizations. Experienced application programmers, however, avoid them because they are slow. Furthermore, while advances in both hardware and software have improved the performance of many parts of a program, few are applicable to division and modulo operations. This trend makes these operations increasingly detrimental to program performance. This paper describes a suite of optimizations for eliminating division, modulo, and remainder operations from programs. These techniques are analogous to strength reduction techniques used for multiplications. In addition to some algebraic simplifications, we present a set of optimization techniques which eliminates division and modulo operations that are functions of loop induction variables and loop constants. The optimizations rely on number theory, integer programming and loop transformations. 1 Introduction This paper describes a suite of optimizations for eliminating division, modulo, and remainder operations from programs. In addition to some algebraic simplifications, we present a set of optimization techniques which eliminates division and modulo operations that are functions of loop induction variables and loop constants. These techniques are analogous to strength reduction techniques used for multiplications. Integer division, modulo, and remainder are expressive and useful operations. -
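The ring-buffer wrap-around mentioned in this abstract gives a simple picture of what removing a modulo from a loop can look like. The following is an illustrative sketch only, with hypothetical function names; it is not taken from the paper, whose optimizations are performed by a compiler rather than by hand.

```python
def ring_indices_with_mod(start: int, count: int, size: int) -> list[int]:
    """Baseline: one modulo operation per loop iteration."""
    return [(start + i) % size for i in range(count)]


def ring_indices_reduced(start: int, count: int, size: int) -> list[int]:
    """Same index sequence, with the per-iteration modulo replaced by a compare and reset."""
    out = []
    idx = start % size          # a single modulo, hoisted out of the loop
    for _ in range(count):
        out.append(idx)
        idx += 1
        if idx == size:         # wrap around instead of recomputing (start + i) % size
            idx = 0
    return out


print(ring_indices_reduced(6, 5, 8))    # [6, 7, 0, 1, 2]
```

The rewrite works because the index depends only on the loop induction variable and the loop-constant `size` (assumed positive here), so each value can be derived from the previous one.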

Computing Mod Without Mod

Computing Mod Without Mod Mark A. Will and Ryan K. L. Ko Cyber Security Lab The University of Waikato, New Zealand fwillm,[email protected] Abstract. Encryption algorithms are designed to be difficult to break without knowledge of the secrets or keys. To achieve this, the algorithms require the keys to be large, with some algorithms having a recommended size of 2048 bits or more. However, most modern processors only support computation on 64 bits at a time. Therefore standard operations with large numbers are more complicated to implement. One operation that is particularly challenging to implement efficiently is modular reduction. In this paper we propose a highly efficient algorithm for solving large modulo operations; it has several advantages over current approaches as it supports the use of a variable sized lookup table, has good spatial and temporal locality allowing data to be streamed, and only requires basic processor instructions. Our proposed algorithm is theoretically compared to widely used modular algorithms, before being practically compared against the state-of-the-art GNU Multiple Precision (GMP) large number library. 1 Introduction Modular reduction, also known as the modulo or mod operation, yields a value smaller than Y, namely the remainder after Euclidean division of X by Y. This operation is heavily used in encryption algorithms [6][8][14], since it can “hide” values within large prime numbers, often called keys. Encryption algorithms also make use of the modulo operation because it involves more computation when compared to other operations like add, meaning that computing the modulo operation is not as simple as just adding bits. -

Haskell-Like S-Expression-Based Language Designed for an IDE

Department of Computing Imperial College London MEng Individual Project Haskell-Like S-Expression-Based Language Designed for an IDE Author: Michal Srb Supervisor: Prof. Susan Eisenbach June 2015 Abstract The state of the programmers’ toolbox is abysmal. Although substantial effort is put into the development of powerful integrated development environments (IDEs), their features often lack capabilities desired by programmers and target primarily classical object oriented languages. This report documents the results of designing a modern programming language with its IDE in mind. We introduce a new statically typed functional language with strong metaprogramming capabilities, targeting JavaScript, the most popular runtime of today; and its accompanying browser-based IDE. We demonstrate the advantages resulting from designing both the language and its IDE at the same time and evaluate the resulting environment by employing it to solve a variety of nontrivial programming tasks. Our results demonstrate that programmers can greatly benefit from the combined application of modern approaches to programming tools. I would like to express my sincere gratitude to Susan, Sophia and Tristan for their invaluable feedback on this project, my family, my parents Michal and Jana and my grandmothers Hana and Jaroslava for supporting me throughout my studies, and to all my friends, especially to Harry whom I met at the interview day and seem to not be able to get rid of ever since. Contents: Abstract; Contents; 1 Introduction: 1.1 Objectives, 1.2 Challenges, 1.3 Contributions; 2 State of the Art: 2.1 Languages: 2.1.1 Haskell -

Introduction to Python - Day 1/3

#### Introduction to Python - Day 1/3 #### # Today we will go over the format of the workshop, Spyder, IPython and Python, variables and assignments, mathematical and logical operations, variable reassignments, floats and integers, object types and coercion, text (string) operations and methods, if/elif/else statements and defining functions. # This workshop aims to teach you some of the basics of coding in Python. Note that QCB Collaboratory offers other workshops focusing on different aspects of Python (graphing and data analytics, digital image processing, etc.) - you can find the current list of workshops on the QCB Workshops page: https://qcb.ucla.edu/collaboratory-2/workshops/ # Each day the workshop is divided into two parts. The first part will introduce different aspects of Python. During this part you will be following instructions in the .py files (or the instructor) and typing code along with the instructor. The second part will let you use the new techniques. Each day I will e-mail you copies of the tutorials with my own code written into the blanks. But don't let that demotivate you from typing anything. Most of programming is troubleshooting, so I encourage you to type along, and we will address any questions and errors as they arise. My own coding results in errors every single day; it is a natural part of programming. # So, what is Spyder and how does it communicate with Python? The Spyder Integrated Development Environment (IDE) is a computer program that has both IPython and a text editor. You are reading from the text editor. # IPython is an interpreter for Python. -

Typing the Numeric Tower

Typing the Numeric Tower Vincent St-Amour1, Sam Tobin-Hochstadt1, Matthew Flatt2, and Matthias Felleisen1 1 Northeastern University {stamourv,samth,matthias}@ccs.neu.edu 2 University of Utah [email protected] Abstract. In the past, the creators of numerical programs had to choose between simple expression of mathematical formulas and static type checking. While the Lisp family and its dynamically typed relatives support the straightforward expression via a rich numeric tower, existing statically typed languages force programmers to pollute textbook formulas with explicit coercions or unwieldy notation. In this paper, we demonstrate how the type system of Typed Racket accommodates both a textbook programming style and expressive static checking. The type system provides a hierarchy of numeric types that can be freely mixed as well as precise specifications of sign, representation, and range information, all while supporting generic operations. In addition, the type system provides information to the compiler so that it can perform standard numeric optimizations. 1 Designing the Numeric Tower From the classic two-line factorial program to financial applications to scientific computation to graphics software, programs rely on numbers and numeric computations. Because of this spectrum of numeric applications, programmers wish to use a wide variety of numbers: the inductively defined natural numbers, fixed-width integers, floating-point numbers, complex numbers, etc. Supporting this variety demands careful attention to the design of programming languages that manipulate numbers. Most languages have taken one of two approaches to numbers. Many untyped languages, drawing on the tradition of Lisp and Smalltalk, provide a hierarchy of numbers whose various levels can be freely used together, known as the numeric tower. -

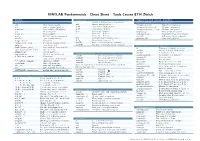

MATLAB Fundamentals - Cheat Sheet - Tools Course ETH Zürich

MATLAB Fundamentals - Cheat Sheet - Tools Course ETH Zürich

Basics, Workspace: ans: Most recent answer; clc: clear command window; clear var: clear variables Workspace; clf: Clear all plots; close all: Close all plots; ctrl-c: Kill the current calculation; doc fun: open documentation; disp('text'): Print text; format short|long: Set output display format; help fun: open in-line help; load filename {vars}: load variables from .mat file; save {-append} file {vars}: save var to file; addpath path: include path to ..; iskeyword arg: Check if arg is keyword; % This is a comment: Comments; ...: connect lines (with break)

Arithmetics: +, -: Addition, Subtraction (elementwise); A*B: Matrix multiplication; A.*B: elementwise multiplication; A./B: elementwise division; B.\A: Left array division; /: Solve xA = B for x; \: Solve Ax = B for x; A^n: normal/(square) matrix power; A.^n: Elementwise power of A; sum(X): Sum of elements (along columns); prod(X): Product of elements (along columns)

Elementary Functions: sin(A): Sine of argument in radians; sind(A): Sine of argument in degrees

Numerics and Linear Algebra, Numerical Integration and Differentiation: integral(f,a,b): Numerical integration; integral2(f,a,b,c,d): 2D num. integration; integral3(f,a,b,..,r,s): 3D num. integration; trapz(x,y): Trapezoidal integration; cumtrapz(x,y): Cumulative trapez integration; diff(X): Differences (along columns); gradient(X): Numerical gradient

Matrix Functions/Linear Algebra: A': Transpose of matrix or vector; inv(A): inverse of A (use with care!); det(A): determinant of A; eig(A), eigs(A): eigenvalues of A (subset); cross(A,B): Cross product