Language and Domain Independent Entity Linking with Quantified

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

2010 Html Master File

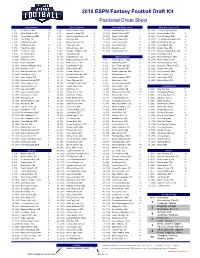

2010 ESPN Fantasy Football Draft Kit Positional Cheat Sheet Quarterbacks Running Backs Running Backs (ctn'd) Wide Receivers (ctn'd) 1. (9) Drew Brees, NO 10 1. (1) Chris Johnson, TEN 9 73. (214) Jonathan Dwyer, PIT 5 63. (195) Jerricho Cotchery, NYJ 7 2. (13) Aaron Rodgers, GB 10 2. (2) Adrian Peterson, MIN 4 74. (216) Maurice Morris, DET 7 64. (200) Chris Chambers, KC 4 3. (21) Peyton Manning, IND 7 3. (3) Maurice Jones-Drew, JAC 9 75. (219) Albert Young, MIN 4 65. (201) Chaz Schilens, OAK 10 4. (23) Tom Brady, NE 5 4. (4) Ray Rice, BAL 8 76. (225) Danny Ware, NYG 8 66. (202) T.J. Houshmandzadeh, BAL 8 5. (39) Matt Schaub, HOU 7 5. (5) Steven Jackson, STL 9 77. (227) Julius Jones, SEA 5 67. (204) Dexter McCluster, KC 4 6. (40) Philip Rivers, SD 10 6. (6) Frank Gore, SF 9 78. (228) Lex Hilliard, MIA 5 68. (209) Lance Moore, NO 10 7. (45) Tony Romo, DAL 4 7. (8) Michael Turner, ATL 8 79. (231) Deji Karim, JAC 9 69. (210) Golden Tate, SEA 5 8. (67) Brett Favre, MIN 4 8. (11) DeAngelo Williams, CAR 6 80. (238) Isaac Redman, PIT 5 70. (220) Darrius Heyward-Bey, OAK 10 9. (77) Joe Flacco, BAL 8 9. (14) Ryan Grant, GB 10 71. (223) Deon Butler, SEA 5 10. (87) Jay Cutler, CHI 8 10. (16) Cedric Benson, CIN 6 Wide Receivers 72. (224) Nate Washington, TEN 9 11. (93) Eli Manning, NYG 8 11. -

BAFFL 2 2011 Transactions 29-Feb-2012 10:11 AM Eastern Week 1

www.rtsports.com BAFFL 2 2011 Transactions 29-Feb-2012 10:11 AM Eastern Week 1 Wed Mar 2 8:49 am ET The Barnegat Bengals Acquired Drew Brees NOR QB Owner Thu Aug 25 6:31 pm ET Keyport Red Raiders Acquired Jamaal Charles KAN RB Commissioner Thu Aug 25 6:33 pm ET samaa Acquired Chris Johnson TEN RB Commissioner Thu Aug 25 7:43 pm ET Cirrhosis of the River Acquired Tony Romo DAL QB Commissioner Thu Aug 25 7:43 pm ET Cirrhosis of the River Released Percy Harvin MIN WR Commissioner Thu Aug 25 10:40 pm ET CreamPuffs Acquired New York Jets NYJ Special TeamCommissioner Thu Aug 25 10:49 pm ET The Barnegat Bengals Acquired Toby Gerhart MIN RB Commissioner Thu Aug 25 10:49 pm ET The Barnegat Bengals Released Mikel Leshoure DET RB Commissioner Sat Aug 27 12:32 pm ET Loosey Goosey Moosies Released Ryan Williams ARI RB Owner Sat Aug 27 9:31 pm ET The Barnegat Bengals Acquired Antonio Brown PIT WR Owner Sat Aug 27 9:31 pm ET The Barnegat Bengals Released Donald Brown IND RB Owner Sat Aug 27 9:53 pm ET The Barnegat Bengals Acquired Darren Sproles NOR RB Owner Sat Aug 27 9:53 pm ET The Barnegat Bengals Released Toby Gerhart MIN RB Owner Sun Aug 28 9:14 am ET Neptune Titans Acquired Nate Washington TEN WR Owner Sun Aug 28 9:14 am ET Neptune Titans Released Robert Meachem NOR WR Owner Tue Aug 30 12:08 am ET The Barnegat Bengals Acquired Bernard Berrian --- WR Owner Tue Aug 30 12:08 am ET The Barnegat Bengals Released Greg Little CLE WR Owner Wed Aug 31 6:19 pm ET Pork Roll Egg & Craig Released Jerricho Cotchery PIT WR Owner Fri Sep 2 4:05 pm ET The Barnegat -

Miami Dolphins Weekly Release

Miami Dolphins Weekly Release Preseason Game 3: Miami Dolphins (0-2) vs. Atlanta Falcons (1-1) Saturday, Aug. 29 • 7 p.m. ET • Sun Life Stadium • Miami Gardens, Fla. TABLE OF CONTENTS 2015 MIAMI DOLPHINS SEASON SCHEDULE 4-5 GAME INFORMATION 6-7 STEPHEN ROSS BIO 8 JOE PHILBIN BIO 9 2015 MIAMI DOLPHINS OFFENSIVE/SPECIAL TEAMS COACHES BIOS 10 2015 MIAMI DOLPHINS DEFENSIVE/STRENGTH COACHES BIOS 11 2014 NFL RANKINGS 12 2015 NFL PRESEASON RANKINGS 13 2014 DOLPHINS LEADERS AND STATISTICS 14 DOLPHINS PLAYERS VS. FALCONS/DOLPHINS-FALCONS SERIES FAST FACTS 15 LAST WEEK VS. CAROLINA 16-17 WHAT TO LOOK FOR IN 2015 18-19 2015 MIAMI DOLPHINS DRAFT CLASS 20 2015 MIAMI DOLPHINS OFF-SEASON RECAP 21 POSITION-BY-POSITION BREAKDOWN 22-24 DOLPHINS RECORD BOOK 25 SUN LIFE STADIUM MODERNIZATION 26-27 2015 MIAMI DOLPHINS ROOKIES IN THE COMMUNITY 28-30 MIAMI DOLPHINS 50TH SEASON 31 DOLPHINS PRESEASON TELEVISION 32 DOLPHINS ON THE AIR THIS SEASON 33 FLASHBACK...TO DOLPHINS TRAINING CAMP HISTORY 34 MAIMI DOLPHINS MEDIA WORKROOM ACCESS/PARKING INFO 35 GAME RECAPS AND POSTGAME NOTES 36-37 2015 MIAMI DOLPHINS TRANSACTIONS 38-39 2015 MIAMI DOLPHINS ALPHA ROSTER 40 2015 MIAMI DOLPHINS NUMERICAL ROSTER 41 2014 MIAMI DOLPHINS REGULAR SEASON STATISTICS 42 2015 MIAMI DOLPHINS TENTATIVE DEPTH CHART/PRONUNCIATION GUIDE 43 DOLPHINS-PANTHERS FLIP CARD DOLPHINS-PANTHERS GAME BOOK 2015 PRESEASON SUPPLEMENTAL STATS NFL IMPORTANT DATES MID-JULY NFL Training Camps Open SEPTEMBER 10, 13–14 AUG SEP 3 Kickoff Weekend 13 2015 @ Chicago Bears Tampa Bay Buccaneers OCTOBER 4 Soldier Field Sun Life Stadium International Series (London) Chicago 27, Miami 10 WFOR 7:00 P.M. -

Izfs4uwufiepfcgbigqm.Pdf

PANTHERS 2018 SCHEDULE PRESEASON Thursday, Aug. 9 • 7:00 pm Friday, Aug. 24 • 7:30 pm (Panthers TV) (Panthers TV) GAME 1 at BUFFALO BILLS GAME 3 vs NEW ENGLAND PATRIOTS Friday, Aug. 17 • 7:30 pm Thursday, Aug. 30 • 7:30 pm (Panthers TV) (Panthers TV) & COACHES GAME 2 vs MIAMI DOLPHINS GAME 4 at PITTSBURGH STEELERS ADMINISTRATION VETERANS REGULAR SEASON Sunday, Sept. 9 • 4:25 pm (FOX) Thursday, Nov. 8 • 8:20 pm (FOX/NFLN) WEEK 1 vs DALLAS COWBOYS at PITTSBURGH STEELERS WEEK 10 ROOKIES Sunday, Sept. 16 • 1:00 pm (FOX) Sunday, Nov. 18 • 1:00 pm* (FOX) WEEK 2 at ATLANTA FALCONS at DETROIT LIONS WEEK 11 Sunday, Sept. 23 • 1:00 pm (CBS) Sunday, Nov. 25 • 1:00 pm* (FOX) WEEK 3 vs CINCINNATI BENGALS vs SEATTLE SEAHAWKS WEEK 12 2017 IN REVIEW Sunday, Sept. 30 Sunday, Dec. 2 • 1:00 pm* (FOX) WEEK 4 BYE at TAMPA BAY BUCCANEERS WEEK 13 Sunday, Oct. 7 • 1:00 pm* (FOX) Sunday, Dec. 9 • 1:00 pm* (FOX) WEEK 5 vs NEW YORK GIANTS at CLEVELAND BROWNS WEEK 14 RECORDS Sunday, Oct. 14 • 1:00 pm* (FOX) Monday, Dec. 17 • 8:15 pm (ESPN) WEEK 6 at WASHINGTON REDSKINS vs NEW ORLEANS SAINTS WEEK 15 Sunday, Oct. 21 • 1:00 pm* (FOX) Sunday, Dec. 23 • 1:00 pm (FOX) WEEK 7 at PHILADELPHIA EAGLES vs ATLANTA FALCONS WEEK 16 TEAM HISTORY Sunday, Oct. 28 • 1:00 pm* (CBS) Sunday, Dec. 30 • 1:00 pm* (FOX) WEEK 8 vs BALTIMORE RAVENS at NEW ORLEANS SAINTS WEEK 17 Sunday, Nov. -

Week 4 - Thursday, October 1, 2015

WEEK 4 - THURSDAY, OCTOBER 1, 2015 BALTIMORE RAVENS (0-3) AT PITTSBURGH STEELERS (2-1) SERIES RAVENS STEELERS THURS. RECORD 4-4 10-15 SERIES LEADER 21-17 STREAKS 6 of past 10 3 of past 5 COACHES VS. OPP. John Harbaugh: 8-9 Mike Tomlin: 10-9 LAST WEEK L 28-24 vs. Bengals W 12-6 at Rams LAST GAME 11/2/14: Ravens 23 at Steelers 43. Pittsburgh QB Ben Roethlisberger throws for 340 yards & 6 TDs (136.3 rating). Steelers WR Antonio Brown has 11 catches for 144 yards & TD. LAST GAME AT SITE 11/2/14 REFEREE Clete Blakeman BROADCAST CBS/NFLN (8:25 PM ET): Jim Nantz, Phil Simms, Tracy Wolfson (field reporter). Westwood One: Kevin Kugler, Tony Boselli. SIRIUS: 83 (Bal.), 93 (Pit.). XM: 226 (Pit.). STATS PASSING Flacco: 82-126-863-4-4-82.2 Vick: 5-6-38-0-0-93.1 RUSHING Forsett: 39-124-3.2-0 Bell: 19-62-3.3-1 RECEIVING Smith, Sr.: 25-349 (3L)-14.0-2 Brown: 29 (T3L)-436 (1C)-15.0-2 OFFENSE 354.7 392.0 TAKE/GIVE -1 0 DEFENSE 375.0 342.7 SACKS Mosley: 2 Tuitt: 2.5 INTs J. Smith: 2 (T1L) Allen: 1 PUNTING Koch: 48.0 Berry: 47.2 KICKING Tucker: 28 (2C) (7/7 PAT; 7/8 FG) Scobee: 16 (4/5 PAT; 4/6 FG) NOTES RAVENS: In past 5 vs. Pit. (incl. playoffs), QB JOE FLACCO has completed 117 of 172 (68 pct.) for 1,194 yards with 8 TDs vs. -

New England Patriots 2009 Stats Pack Patriots Regular Season Record When …

NEW ENGLAND PATRIOTS 2009 STATS PACK PATRIOTS REGULAR SEASON RECORD WHEN … 1994 1995 1996 1997 1998 1999 2000 2001 2002 2003 2004 2005 2006 2007 2008 2009 Total W L W L W L W L W L W L W L W L W L W L W L W L W L W L W L W L W L Overall Record 10 6 6 10 11 5 10 6 9 7 8 8 5 11 11 5 9 7 14 2 14 2 10 6 12 4 16 0 11 5 7 3 163 87 Home 5 3 3 5 6 2 6 2 6 2 5 3 3 5 6 2 5 3 8 0 8 0 5 3 5 3 8 0 5 3 6 0 90 36 Away 5 3 3 5 5 3 4 4 3 5 3 5 2 6 5 3 4 4 6 2 6 2 5 3 7 1 8 0 6 2 1 3 73 51 0 0 By Month 0 0 August 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 September 2 2 1 2 2 2 3 0 2 1 3 0 0 4 1 2 3 1 2 2 2 0 2 1 2 1 3 0 2 1 2 1 32 20 October 1 3 1 4 3 1 1 3 2 2 3 2 2 2 2 2 0 3 4 0 4 1 2 2 4 0 5 0 3 1 3 1 40 27 November 3 1 3 1 3 1 3 2 3 2 0 3 1 3 3 1 4 1 4 0 4 0 2 2 2 2 3 0 2 3 2 1 42 23 December 4 0 1 3 3 1 2 1 2 2 1 3 2 2 4 0 2 2 4 0 3 1 4 0 4 1 5 0 4 0 45 16 January 0 0 0 0 0 0 0 0 0 0 1 0 0 0 1 0 0 0 0 0 1 0 0 1 0 0 0 0 0 0 3 1 0 0 vs. -

NFL Fantasy Football Depth Chart. Updated

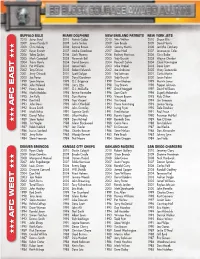

NFL Fantasy Football Depth Chart. Updated: August 27th Key: [R]-Rookie, AFC East Division Buffalo Bills Miami Dolphins New England Patriots New York Jets Pos Name Pos Name Pos Name Pos Name QB Ryan Fitzpatrick QB Chad Henne QB Tom Brady QB Mark Sanchez Brian Brohm Pat White Brian Hoyer Kellen Clemens RB Fred Jackson RB Ronnie Brown RB Laurence Maroney RB Shonn Greene C.J. Spiller [R] Ricky Williams Sammy Morris Ladainian Tomlinson WR1 Lee Evans WR1 Brandon Marshall WR1 Randy Moss WR1 Braylon Edwards WR2 Steve Johnson WR2 Davone Bess WR2 Wes Welker WR2 Santonio Holmes James Hardy Brian Hartline Julian Edelman Jerricho Cotchery Chad Jackson Patrick Turner Brandon Tate David Clowney TE Shawn Nelson TE Anthony Fasano TE Rob Gronkowski [R] TE Dustin Keller Jonathan Stupar Joey Haynos Aaron Hernandez [R] Ben Hartsock K Rian Lindell K Dan Carpenter K Stephen Gostkowski K Nick Folk AFC North Division Cincinnati Bengals Baltimore Ravens Pittsburgh Steelers Cleveland Browns Pos Name Pos Name Pos Name Pos Name QB Carson Palmer QB Joe Flacco QB Ben Roethlisberger QB Jake Delhomme J.T. O'Sullivan Marc Bulger D. Dixon/B. Leftwich Seneca Wallace RB Cedric Benson RB Ray Rice RB Rashard Mendenhall RB Jerome Harrison Bernard Scott Willis McGahee Mewelde Moore Montario Hardesty WR1 Chad Ochocinco WR1 Anquan Boldin WR1 Hines Ward WR1 Mohamed Massaquoi WR2 Terrel Owens WR2 Derrick Mason WR2 Mike Wallace WR2 Brian Robiskie Antonio Bryant Mark Clayton Arnaz Battle Chansi Stuckey Jerome Simpson Donte' Stallworth Antwaan Randle El Joshua Cribbs TE Jermaine Gresham -

Fantasy Football for Blood and Profit

FANTASY FOOTBALL FOR BLOOD AND PROFIT 2ND EDITION – 2007 SEASON By Pete Smits Senior Writer for Copyright © 2007 by Pete Smits. All rights reserved. You may not reproduce this document for any reason other than your own personal use. You are hereby advised that individuals re-selling, publicly posting or otherwise distributing this document without the express authorization and written permission of the author will be pursued to the fullest extent that the law allows. We will do everything in our power to ensure the value of this document for those who have purchased it. Pete Smits Page 1 8/3/2007 Copyright © 2007 by Pete Smits Table of Contents A comprehensive systematic approach that consistently provides playoff opportunities in any league Preface to the Second Edition ........................................... 5 “So what’s new this year?” .......................................................................................... 5 INTRODUCTION................................................................... 6 “How can I make the playoffs (almost) every year in every league?” ..................... 6 OVERVIEW of METHODS ................................................... 8 “So, what should I do differently?”............................................................................. 8 “Will I start this player in the upcoming week?” ...................................................... 9 STARTING CONSIDERATIONS......................................... 12 “How badly do you want to win?” ........................................................................... -

Patriots Vs. Cardinals

PATRIOTS VS . C ARDINALS SERIES HISTORY PATRIOTS VS. NFC The Patriots and Cardinals will meet for the 12th The Patriots have been successful against NFC teams in recent time and for the first time at Gillette Stadium. seasons, compiling a 30-5 (.857) record against the conference After a 1-6 series start against Arizona, New since 2001, including a 3-1 record in Super Bowls. The Patriots England has won the last four meetings to have won 14 straight regular-season games over NFC teams bring its record against the Cardinals to 5-6. dating back to 2005. New England has not lost a regular-season In their last meeting, the Patriots claimed a game to an NFC team since Sept. 18, 2005, falling on the road 23-12 victory at Sun Devil Stadium on Sept. to the Carolina Panthers, 27-17. The Patriots are 3-0 against 19, 2004. The Patriots have only faced the NFC competition this season, defeating the San Francisco 49ers Cardinals once during Bill Belichick’s tenure as head 30-21 on Oct. 5, beating the St. Louis Rams 23-16 on Oct. 26 coach (2000-present). The Patriots have faced and defeating the Seattle Seahawks 24-21 on Dec. 7. the other 30 NFL teams at least twice. Of the 11 previous meetings in the series, only three TALE OF THE TAPE have been in played in Foxborough. The last 2008 Regular Season New England Arizona time the Cardinals traveled to Foxborough was Record 9-5 8-6 on Sept. 15, 1996 when the Patriots shut out Divisional Standings T-1st 1st the Cardinals by a 31-0 score. -

Afc East Afc West Afc East Afc

BUFFALO BILLS MIAMI DOLPHINS NEW ENGLAND PATRIOTS NEW YORK JETS 2010 Jairus Byrd 2010 Patrick Cobbs 2010 Wes Welker 2010 Shaun Ellis 2009 James Hardy III 2009 Justin Smiley 2009 Tom Brady 2009 David Harris 2008 Chris Kelsay 2008 Ronnie Brown 2008 Sammy Morris 2008 Jerricho Cotchery 2007 Kevin Everett 2007 Andre Goodman 2007 Steve Neal 2007 Laveranues Coles 2006 Takeo Spikes 2006 Zach Thomas 2006 Rodney Harrison 2006 Chris Baker HHH 2005 Mark Campbell 2005 Yeremiah Bell 2005 Tedy Bruschi 2005 Wayne Chrebet 2004 Travis Henry 2004 David Bowens 2004 Rosevelt Colvin 2004 Chad Pennington 2003 Pat Williams 2003 Jamie Nails 2003 Mike Vrabel 2003 Dave Szott 2002 Tony Driver 2002 Robert Edwards 2002 Joe Andruzzi 2002 Vinny Testaverde 2001 Jerry Ostroski 2001 Scott Galyon 2001 Ted Johnson 2001 Curtis Martin 2000 Joe Panos 2000 Daryl Gardener 2000 Tedy Bruschi 2000 Jason Fabini 1999 Sean Moran 1999 O.J. Brigance 1999 Drew Bledsoe 1999 Marvin Jones 1998 John Holecek 1998 Larry Izzo 1998 Troy Brown 1998 Pepper Johnson 1997 Henry Jones 1997 O.J. McDuffie 1997 David Meggett 1997 David Williams 1996 Mark Maddox 1996 Bernie Parmalee 1996 Sam Gash 1996 Siupeli Malamala 1995 Jim Kelly 1995 Dan Marino 1995 Vincent Brown 1995 Kyle Clifton 1994 Kent Hull 1994 Troy Vincent 1994 Tim Goad 1994 Jim Sweeney AFC EAST 1993 John Davis 1993 John Offerdahl 1993 Bruce Armstrong 1993 Lonnie Young 1992 Bruce Smith 1992 John Grimsley 1992 Irving Fryar 1992 Dale Dawkins 1991 Mark Kelso 1991 Sammie Smith 1991 Fred Marion 1991 Paul Frase 1990 Darryl Talley 1990 Liffort Hobley -

Indianapolis Colts Cleveland Browns No

INDIANAPOLIS COLTS CLEVELAND BROWNS NO. NAME POS. No. Name Pos. 1 PAT MCAFEE . .P INDIANAPOLIS COLTS (0-1) VS. CLEVELAND BROWNS (0-1) 4 Phil Dawson . .K 4 ADAM VINATIERI . .K 6 Seneca Wallace . .QB 5 KERRY COLLINS . .QB SUNDAY, SEPTEMBER 18, 2011 - 1:00 PM - LUCAS OIL STADIUM 7 Brad Maynard . .P 7 CURTIS PAINTER . .QB 9 Thaddeus Lewis . .QB 11 ANTHONY GONZALEZ . .WR 10 Jordan Norwood . .WR 15 BLAIR WHITE . .WR 11 Mohamed Massaquoi . .WR 17 AUSTIN COLLIE . .WR COLTS OFFENSE COLTS DEFENSE 12 Colt McCoy . .QB 18 PEYTON MANNING . .QB WR 87 Reggie Wayne 17 Austin Collie LE 98 Robert Mathis 90 Jamaal Anderson 15 Greg Little . .WR 20 JUSTIN TRYON . .DB LT 74 Anthony Castonzo LDT 95 Fili Moala 94 Drake Nevis 16 Joshua Cribbs . .WR 21 KEVIN THOMAS . .DB LG 76 Joe Reitz 73 Seth Olsen RDT 99 Antonio Johnson 68 Eric Foster 18 Carlton Mitchell . .WR 23 TERRENCE JOHNSON . .DB 20 Mike Adams . .DB C 63 Jeff Saturday 78 Mike Pollak RE 93 Dwight Freeney 96 Tyler Brayton 92 Jerry Hughes 25 JERRAUD POWERS . .DB 21 Dimitri Patterson . .DB RG 71 Ryan Diem SLB 51 Pat Angerer 50 Phillip Wheeler 27 JACOB LACEY . .DB 22 Buster Skrine . .DB 29 JOSEPH ADDAI . .RB RT 72 Jeff Linkenbach 79 Ben Ijalana MLB 58 Gary Brackett 54 Nate Triplett 23 Joe Haden . .DB 30 DAVID CALDWELL . .DB TE 44 Dallas Clark 81 Brody Eldridge 84 Jacob Tamme WLB 53 Kavell Conner 57 Adrian Moten 55 Ernie Simms 24 Sheldon Brown . .DB 31 DONALD BROWN . .RB WR 85 Pierre Garcon 11 Anthony Gonzalez 15 Blair White LCB 27 Jacob Lacey 23 Terrence Johnson 36 Chris Rucker 27 Eric Hagg . -

2010 Football Season

22010010 FFootball:ootball: TThehe RRoadoad TToo TThehe OOrangerange BBowlowl GGoesoes TThroughhrough CCharlotteharlotte The Atlantic Coast Conference What’s Inside ACC Standings: 2009 Final Standings Release No. 1, Friday, August 27, 2010 ACC Games Overall ACC SIDs, ACC Communications .........2 Atlantic Division ..W L ..For Opp Hm Rd ..W L ....For Opp Hm Rd Nu Div. ... Streak Media Schedule, Digital News............3 #Clemson ...............6 2 ..268 169 4-0 2-2 ....9 5 ....436 286 6-1 2-3 1-1 4-1 ......Won 1 ACC National and Satellite Radio ........4 Boston College .......5 3 ..174 196 3-1 2-2 ....8 5 ....322 257 6-1 2-3 0-1 4-1 ......Lost 1 2010 Composite Schedule .................5 Florida State ...........4 4 ..268 278 2-2 2-2 ....7 6 ....391 390 3-3 3-3 1-0 3-2 ......Won 1 ACC By The Numbers Notes ...............6 Wake Forest ...........3 5 ..226 254 2-2 1-3 ....5 7 ....316 315 4-3 1-4 0-0 2-3 ......Won 1 Atlantic, Coastal Division Notes ...... 7-8 NC State .................2 6 ..213 315 2-2 0-4 ....5 7 ....364 374 5-3 0-4 0-0 1-4 ......Won 1 Week 1 Game Previews ................9-11 Maryland ................1 7 ..161 222 1-3 0-4 ....2 10 ....256 375 2-5 0-5 0-0 1-4 ......Lost 7 Team Schedule and Results......... 12-14 Noting ACC Football .................. 15-25 Coastal Division . W L ..For Opp Hm Rd ..W L ....For Opp Hm Rd Nu Div. ... Streak 2010 Dr Pepper ACC Championship ..