Use Cases: Impermissible AI and Fundamental Rights Breaches

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Gloucestershire Constabulary

PEEL: Police effectiveness, efficiency and legitimacy 2018/19. An inspection of Gloucestershire Constabulary. Contents: What this report contains; Force in context; Overall summary; Effectiveness; Force in context; How effectively does the force reduce crime and keep people safe?; Summary; Preventing crime and tackling anti-social behaviour; Investigating crime; Protecting vulnerable people; Tackling serious and organised crime; Armed policing; Efficiency; Force in context; How efficiently does the force operate and how sustainable are its services?; Summary; Meeting current demands and using resources; Planning for the future; Legitimacy; Force in context; How legitimately does the force treat the public and its workforce?; Summary; Treating the public fairly; Ethical and lawful workforce behaviour; Treating the workforce fairly; Annex A – About the data. What this report contains: This report is structured in four parts: 1. Our overall assessment of the force’s 2018/19 performance. 2. Our judgments and summaries of how effectively, efficiently and legitimately the force keeps people safe and reduces crime. 3. Our judgments and any areas for improvement and causes of concern for each component of our inspection. 4. Our detailed findings for each component. Our inspection approach: In 2018/19, we adopted an integrated PEEL assessment (IPA) approach to our existing PEEL (police effectiveness, efficiency and legitimacy) inspections. IPA combines into a single inspection the effectiveness, efficiency and legitimacy areas of PEEL. These areas had previously been inspected separately each year. As well as our inspection findings, our assessment is informed by our analysis of: • force data and management statements; • risks to the public; • progress since previous inspections; • findings from our non-PEEL inspections; • how forces tackle serious and organised crime locally and regionally; and • our regular monitoring work.

The Surveillance Dimensions of the Use of Social Media by UK Police Forces

Article: The Surveillance Dimensions of the Use of Social Media by UK Police Forces. Elena M. Egawhary, Columbia University, USA. Abstract: This paper explores the various surveillance practices involved in the use of social media for communication and investigation purposes by UK police forces. In doing so, it analyses internal policy documents and official guidance obtained through freedom of information (FOI) requests sent to 46 police forces in the United Kingdom. This analysis finds that UK police forces advise their staff to simultaneously engage in both surveillance and counter-surveillance strategies in their use of social media as a policing tool. Introduction: The use of social media by UK police forces falls into two broad categories: communication (or engagement) and investigation (or operational use). UK police forces began registering corporate accounts on Twitter and Facebook for communication purposes for the first time in 2008. However, UK police forces’ use of the internet for investigative purposes dates back to April 2001 with the creation of the National Hi-Tech Crime Unit (NHTCU) to “investigate attacks on the Critical National Infrastructure; major internet based offences of paedophilia, fraud or extortion; information from seized electronic media and gather intelligence on cybercrime and cybercriminals” (Corbitt 2001: 29). Prior to 2001, most of the responsibility for using social networking sites to investigate crime fell to a small number of digital evidence recovery officers who were “swamped and learning on the job” (Thomas 2005) resulting in a reportedly “huge workload” (Goodwin 2005). This suggests that the use of social media in UK policing began in an unstructured way and “on the basis of initiatives by individual officers and subsequently with varying degrees of official support” (Crump 2011: 1).

Successful Bids to the Police Innovation Fund 2016 to 2017

SUCCESSFUL BIDS TO THE POLICE INNOVATION FUND 2016/17 Bid 2016/17 Lead Force Other partners Bid Name / Details No. Award National Centre for Cyberstalking Research (NCCR) – University of Bedfordshire Cyberharassment: University of Liverpool Bedfordshire Platform for Evidence Nottingham Trent University £461,684.00 47 Gathering, Assessing Police Victim Support Risk & Managing Hampshire Stalking Policing Consultancy Clinic Paladin Greater Manchester Police Dyfed-Powys PCC Cambridgeshire Constabulary University of Cambridge BeNCH Community Rehabilitation Company Crown Prosecution Service Evidence-based Local authorities Cambridgeshire approach to deferred Health system £250,000.00 36 prosecution linked to Constabulary Criminal Justice Board devolution in West Midlands Police Cambridgeshire. Hampshire Constabulary Hertfordshire Constabulary Leicestershire Police Staffordshire Police West Yorkshire Police Ministry of Justice/NOMS Warwickshire Police Cheshire Integrated Force West Mercia Police £303,000.00 122 Communications Constabulary West Mercia Fire and Rescue Solution Cheshire Fire and Rescue Fire and Rescue Services Cheshire (FRS) through the Chief Fire National Air Service for 140 £120,100.00 Constabulary Officers’ Association (CFOA) emergency services Association of Ambulance (Category 1 and 2) Chief Executives (AACE) City of London Metropolitan Police Service False identity data £525,000.00 62 Warwickshire Police Police capture and sharing Barclays Bank Metropolitan Police Service Serious Fraud Office Public/private Crown Prosecution -

Durham Constabulary Baseline Assessment October 2006

Durham Constabulary – Baseline Assessment October 2006. ISBN 13: 978-1-84726-024-6. ISBN 10: 1-84726-024-1. Crown copyright. First published 2006. Contents: Introduction to Baseline Assessment. Force Overview and Context. Findings. Summary of Judgements. 1 Citizen Focus (Domain A): Fairness and Equality in Service Delivery; Neighbourhood Policing and Problem Solving; Customer Service and Accessibility; Professional Standards. 2 Reducing Crime (Domain 1): Volume Crime Reduction. 3 Investigating Crime (Domain 2): Managing Critical Incidents and Major Crime; Tackling Serious and Organised Criminality; Volume Crime Investigation; Improving Forensic Performance; Criminal Justice Processes. 4 Promoting Safety (Domain 3): Reducing Anti-Social Behaviour; Protecting Vulnerable People. 5 Providing Assistance (Domain 4): Contact Management; Providing Specialist Operational Support; Strategic Roads Policing. 6 Resource Use (Domain B): Human Resource Management; Training, Development and Organisational Learning; Race and Diversity; Managing Financial and Physical Resources; Information Management; National Intelligence Model. 7 Leadership and Direction: Leadership; Performance Management and Continuous Improvement. Appendix 1: Glossary of Terms and Abbreviations. Baseline Assessment 2006 Frameworks: 1 Citizen Focus (PPAF Domain A) 1A Fairness and 1B Neighbourhood 1C Customer

Data-Driven Policing and Public Value (Ian Kearns and Rick Muir, March 2019)

DATA-DRIVEN POLICING AND PUBLIC VALUE. IAN KEARNS AND RICK MUIR, MARCH 2019. Acknowledgments: The authors would like to thank all those who contributed to this research. In particular we are grateful to Accenture, BT and the Institute for Policy Research at the University of Bath who generously provided the funding for this project. We are also grateful to the members of our Project Advisory Group, including David Darch, Allan Fairley, Lord Toby Harris, Giles Herdale, Simon Kempton and Professor Tom Kirchmaier. We would like to thank all those police forces we visited as part of the research, in particular Avon and Somerset Police and Hampshire Constabulary. Authors: Dr Ian Kearns is a Senior Associate Fellow of the Police Foundation and during the period in which he was working on this report was also a Visiting Fellow at the Institute for Policy Research at the University of Bath. He has 25 years of experience working in the public, private and NGO sectors. He is a former Deputy Director and Acting Director of the Institute for Public Policy Research (IPPR) where he provided strategic direction on digital government, the new digital economy, national security and crime. Prior to this, Ian was a Director in the Global Government Industry Practice of Electronic Data Systems (EDS), an IT services firm with a $20bn turnover. Ian also co-founded and served as the first Director of the European Leadership Network, a network of former Prime Ministers, foreign and defence ministers and other senior figures focused on security issues.

Durham Constabulary Glossary of Terms – APPENDIX E

Durham Constabulary Glossary of Terms – APPENDIX E. A: ABE – Achieving Best Evidence; ABELS – Achieving Best Evidence Language Screen; ACC – Assistant Chief Constable; ACO – Assistant Chief Officer; ACRO – Criminal Records Office; ADE – Adverse Childhood Experiences; AEP – Attenuating energy projectile; AIMs – Assessment, Intervention, Move On; ALO – Architectural Liaison Officer; APP – Authorised Professional Practice; ANPR – Automatic Number Plate Recognition; APSTRA – Armed Policing Strategic Threat and Risk Assessment; ARMS – Active Risk Management System; ARV – Armed Response Vehicle. C: CJRT – Criminal Justice Reform Team; CJS – Criminal Justice System; CJU – Criminal Justice Unit; CL – County Lines; CLEP – College Level Examination Programme; CLICM – County Lines Intelligence Collection Matrix; CMP – Covert Monitoring Post; COMET – Client Offender Management Engagement Tracker; CoP – College of Policing; CPC – Central Point of Contact; CPD – Continuous Professional Development; CPIA – Criminal Procedures and Investigations Act; CPM – Community Peer Mentor; CPO – Crime Prevention Officer; CPO – Close Protection Officer. D: DMS – Digital Management System; DO – Detention Officer; DS or D/SGT – Detective Sergeant; DSE – Display Screen Equipment; D/SUPT – Detective Superintendent; DUBS – Durham University Business School; DV – Domestic Violence; DVI – Disaster Victim Identification; DVPO – Domestic Violence Prevention Order. E: EAP – Employee Assistance Programme; ECU – Economic Crime Unit; EDI – Equality Diversity Inclusion; EDMR – Eye Movement Desensitisation and Reprocessing; EFS -

Overview of Public Sector Procurement in the North East March 2017

Overview of Public Sector Procurement in the North East, March 2017. Contents: Background. North East Procurement Organisation (NEPO). NEPO Member Authorities: Darlington Borough Council; Durham County Council; Gateshead Council; Hartlepool Borough Council; Middlesbrough Council; Newcastle City Council; North Tyneside Council; Redcar & Cleveland Borough Council; South Tyneside Council; Stockton-On-Tees Borough Council; Sunderland City Council. Police Authorities: Durham Police; Northumbria Police. Fire and Rescue Services: County Durham and Darlington Fire and Rescue Service; Tyne and Wear Fire and Rescue Service. Universities: Northumbria University. Background: The “Overview of Public Sector Procurement in the North East” has been developed by NEPO’s Stakeholder Engagement Group to help the North East supply base identify the main public sector procurement bodies in the region with a view to competing for public sector business. NEPO will regularly review and update this document and welcomes input from any North East public sector organisation wishing to be included in future iterations. NEPO’s Stakeholder Engagement Group was established in January 2015 with the following objectives: 1. Create and develop connections and engagement that will support and improve economic growth across the whole of the North East. 2. Effectively harness the expertise and market intelligence vested in business representative, private sector and VCS organisations to assist NEPO’s activities. 3. Give a greater focus on social value and an appreciation of the wider benefits that can be achieved through, for example, opportunities for public sector contracts to support employment and create apprenticeships in local labour markets.

Police, Crime and Victims' Plan

POLICE, CRIME & VICTIMS’ PLAN FOR COUNTY DURHAM & DARLINGTON 2018 - 2021. Putting victims first in County Durham & Darlington. Contents: Foreword; My Plan; Your Community; My Role; Achieving the Outcomes; Service Delivery; Plan on a Page; Governance and Accountability; Resources; Appendix: How the PCVC Plan links to other partnerships. Foreword: In 2016 I was delighted to be re-elected as your Police, Crime and Victims’ Commissioner. Now, halfway through my current term of office, I am taking the opportunity to refresh my Police, Crime and Victims’ Plan for the period to 2021. The Plan sets out what I hope to achieve during that time, and lays foundations for the longer-term. In 2016, I added the word ‘victims’ to my job title, to make it ‘Police, Crime and Victims’ Commissioner’. The needs of victims are at the heart of my work. Victims don’t ask to be victims. For the vast majority, the experience is completely unexpected. Many are vulnerable; some have mental health problems. All of them should be offered [...] and influence the work of others to meet local needs. It will always be important to me to be visible and accessible so that you can raise issues with me directly, and I will continue to use your money as efficiently as possible, to maintain Durham’s position as the most efficient Police force in the country. My Plan continues to be based firmly on the issues you have told me that you are facing. Crime has changed over the past few decades, and it will continue to change in the future.

Predictive Policing

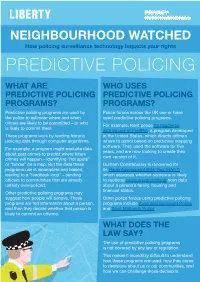

NEIGHBOURHOOD WATCHED: How policing surveillance technology impacts your rights. PREDICTIVE POLICING. WHAT ARE PREDICTIVE POLICING PROGRAMS? Predictive policing programs are used by the police to estimate where and when crimes are likely to be committed – or who is likely to commit them. These programs work by feeding historic policing data through computer algorithms. For example, a program might evaluate data about past crimes to predict where future crimes will happen – identifying “hot spots” or “boxes” on a map. But the data these programs use is incomplete and biased, leading to a “feedback loop” – sending officers to communities that are already unfairly over-policed. Other predictive policing programs may suggest how people will behave. These programs are fed information about a person, and then they decide whether that person is likely to commit an offence. WHO USES PREDICTIVE POLICING PROGRAMS? Police forces across the UK use or have used predictive policing programs. For example, Kent police hit headlines with its use of PredPol, a program developed in the United States, which directs officers where to patrol based on predictive mapping software. They used the software for five years, and are now looking to create their own version of it. Durham Constabulary is renowned for its Harm Assessment Risk Tool (HART), which assesses whether someone is likely to reoffend using crude profiling from data about a person’s family, housing and financial status. Other police forces using predictive policing programs include Avon and Somerset Police and West Midlands Police. WHAT DOES THE LAW SAY? The use of predictive policing programs is not covered by any law or regulation.

SentrySIS by Durham Constabulary

SentrySIS by Durham Constabulary. T/Insp 2570 Adam Norris; D/Supt 203 Kevin Weir. Durham Constabulary, Police HQ, Aykley Heads, Durham, United Kingdom, DH1 5TT. Tel: 101 ext 754989. SUMMARY (396 words): Retired Police Chief Mike Barton once said of technology that “it is the greatest enabler” – this is certainly true in 21st Century Policing. After nearly a decade of austerity and a general increase in demand on policing service, Policing UK PLC must adapt to work smarter not harder in order to manage demand to ensure that it remains a viable public service moving forward. Durham Constabulary has capitalised on the improvement in connectivity and digital technology to modernise our working practices and to increase the speed of investigations to achieve this aim. Durham Constabulary utilises a centralised local authority CCTV centre. This posed logistical problems for officers progressing investigations: at its most extreme, officers would either have to drive up to 20 miles to collect a CCTV disc and complete the bureaucratic data protection form or wait up to 2 weeks for the disc to arrive at their police station. Further to this, victim and witness statements were taken in paper format face to face as a matter of course – this again provided logistical problems in obtaining evidence from victims and witnesses in a global world where long distance travel is as easy as catching a bus and people move around with far greater ease. Analysis of these officer outputs showed that fuel was being burned unnecessarily, travelling time was essentially “dead time” in which officers couldn’t do anything productive and their investigations were hindered by the delay in obtaining key evidence because of outdated practices.

Police Funding 2019/20

Police Funding 2019/20. Consultation document, December 2018 – January 2019. Durham Constabulary is an outstanding police force. I know that because HM Inspectorate of Constabulary and Fire and Rescue Services have rated the force as the most effective and efficient force in the country for three years in a row – and because of the great feedback I get from local people. I am committed to ensuring that the Constabulary invests in people, innovation and IT to find further efficiencies so that people who live, work and visit the area are protected, and the Force remains ‘outstanding’. Since 2010 Government funding for policing in Durham and Darlington has been cut in real terms by 32%. About 75% of the money spent by the force comes from a Government grant, and the rest of it is paid by Council tax payers through the policing precept. I am determined to protect neighbourhood policing, so that there is an effective police presence in your area. However, the long-term reduction in Government funding, in real terms, means that front-line policing will be at risk unless I increase the policing precept. You may have seen in the press that Government has increased its direct grant allocation to Police forces, for one year only. However, most of that increase will have to pay for an increase in the pension deficit for policing – an issue which affects every force in the country, following a Government re-calculation of the size of the deficit. “It’s my job to make sure that the very best use is made of your money, so that policing in County Durham

Repeat Victims of Domestic Burglary (2003)

TILLEY AWARD 2003. REPEAT VICTIMISATION DOMESTIC BURGLARY PROJECT. PARTNERSHIP PROJECT: Cleveland Police, Safe in Tees Valley and the Crime and Disorder Partnerships of: Middlesbrough, Stockton, Hartlepool, Redcar and Cleveland, Darlington. Endorsing Chief Officer(s): Cleveland Police; Safe in Tees Valley; Crime and Disorder Reduction Partnership. Contact Details: CONTENTS: 1. Summary 2. Scanning 3. Analysis 4. Response 5. Assessment 6. Conclusion. APPENDICES: 1. Table 15 - Predictive Characteristics by District 2. Victim Survey Questionnaires 3. Data Protection Forms 4. Letters to Victim 5. Literature Review 6. Letters of Thanks 7. Press Articles. Map illustrating the Tees Valley Region. REPEAT VICTIMS OF DOMESTIC BURGLARY: The government has put in place targets to reduce domestic burglary by 25% over the six year period April 1999 to March 2005. Central to addressing 'repeat' incidents should be a strategy to avoid becoming a repeat. Research by Safe in Tees Valley in 2001/2002 revealed the approach to domestic burglary repeats across the Cleveland Police area and the Darlington Division of Durham Constabulary was inconsistent and ineffective. This resulted in a poor service to victims and an inaccurate recording of crime details. Responses were often hampered by a lack of basic, timely information and no resources to address the problem. The project outline developed the initial work and aimed to: develop a way forward to prevent a first time burglary victim becoming a repeat victim; reduce the number of domestic burglaries through highlighting circumstances of repeats and the predictive characteristics; and provide timely and effective intervention with repeats, thereby improving service to victims.