Intelligent Vision-Driven Robot for Sample Detection and Return - A Study in Artificial Intelligence

Recommended publications

Control in Robotics

Mark W. Spong and Masayuki Fujita

Introduction

The interplay between robotics and control theory has a rich history extending back over half a century. We begin this section of the report by briefly reviewing the history of this interplay, focusing on fundamentals: how control theory has enabled solutions to fundamental problems in robotics and how problems in robotics have motivated the development of new control theory. We focus primarily on the early years, as the importance of new results often takes considerable time to be fully appreciated and to have an impact on practical applications. Progress in robotics has been especially rapid in the last decade or two, and the future continues to look bright.

Robotics was dominated early on by the machine tool industry. As such, the early philosophy in the design of robots was to design mechanisms to be as stiff as possible with each axis (joint) controlled independently as a single-input/single-output (SISO) linear system. Point-to-point control enabled simple tasks such as materials transfer and spot welding. Continuous-path tracking enabled more complex tasks such as arc welding and spray painting. Sensing of the external environment was limited or nonexistent. Consideration of more advanced tasks such as assembly required regulation of contact forces and moments. Higher speed operation and higher payload-to-weight ratios required an increased understanding of the complex, interconnected nonlinear dynamics of robots. This requirement motivated the development of new theoretical results in nonlinear, robust, and adaptive control, which in turn enabled more sophisticated applications. Today, robot control systems are highly advanced with integrated force and vision systems.

An Abstract of the Dissertation Of

AN ABSTRACT OF THE DISSERTATION OF

Austin Nicolai for the degree of Doctor of Philosophy in Robotics presented on September 11, 2019.

Title: Augmented Deep Learning Techniques for Robotic State Estimation

Abstract approved: Geoffrey A. Hollinger

While robotic systems may have once been relegated to structured environments and automation style tasks, in recent years these boundaries have begun to erode. As robots begin to operate in largely unstructured environments, it becomes more difficult for them to effectively interpret their surroundings. As sensor technology improves, the amount of data these robots must utilize can quickly become intractable. Additional challenges include environmental noise, dynamic obstacles, and inherent sensor nonlinearities. Deep learning techniques have emerged as a way to efficiently deal with these challenges. While end-to-end deep learning can be convenient, challenges such as validation and training requirements can be prohibitive to its use. In order to address these issues, we propose augmenting the power of deep learning techniques with tools such as optimization methods, physics based models, and human expertise.

In this work, we present a principled framework for approaching a problem that allows a user to identify the types of augmentation methods and deep learning techniques best suited to their problem. To validate our framework, we consider three different domains: LIDAR based odometry estimation, hybrid soft robotic control, and sonar based underwater mapping.

First, we investigate LIDAR based odometry estimation which can be characterized with both high data precision and availability; ideal for augmenting with optimization methods. We propose using denoising autoencoders (DAEs) to address the challenges presented by modern LIDARs.

Curriculum Reinforcement Learning for Goal-Oriented Robot Control

MEng Individual Project
Imperial College London, Department of Computing

CuRL: Curriculum Reinforcement Learning for Goal-Oriented Robot Control

Author: Harry Uglow
Supervisor: Dr. Ed Johns
Second Marker: Dr. Marc Deisenroth
June 17, 2019

Abstract

Deep Reinforcement Learning has risen to prominence over the last few years as a field making strong progress tackling continuous control problems, in particular robotic control, which has numerous potential applications in industry. However, Deep RL algorithms alone struggle on complex robotic control tasks where obstacles need to be avoided in order to complete a task. We present Curriculum Reinforcement Learning (CuRL) as a method to help solve these complex tasks by guided training on a curriculum of simpler tasks. We train in simulation, manipulating a task environment in ways not possible in the real world to create that curriculum, and use domain randomisation in an attempt to train pose estimators and end-to-end controllers for sim-to-real transfer. To the best of our knowledge this work represents the first example of reinforcement learning with a curriculum of simpler tasks on robotic control problems.

Acknowledgements

I would like to thank:

• Dr. Ed Johns for his advice and support as supervisor. Our discussions helped inform many of the project’s key decisions.
• My parents, Mike and Lyndsey Uglow, whose love and support has made the last four years possible.

Contents

1 Introduction
1.1 Objectives
1.2 Contributions
1.3 Report Structure
2 Background
2.1 Machine learning (ML)
2.2 Artificial Neural Networks (ANNs)
2.2.1 Strengths of ANNs

Upgrading a Legacy Outdoors Robotic Vehicle

Theodosis Ntegiannakis (1), Odysseas Mavromatakis (1), Savvas Piperidis (2), and Nikos C. Tsourveloudis (3)

(1) Theodosis Ntegiannakis and Odysseas Mavromatakis are graduate students at the School of Production Engineering and Management, Technical University of Crete, Hellas.
(2) Corresponding author: Intelligent Systems and Robotics Laboratory, School of Production Engineering and Management, Technical University of Crete, 73100 Chania, Hellas, [email protected], www.robolab.tuc.gr
(3) School of Production Engineering and Management, Technical University of Crete, Hellas, [email protected], www.robolab.tuc.gr

Abstract. The ATRV-mini was a popular, commercially available outdoors robot of the 2000s. The successful upgrade procedure of a decommissioned ATRV-mini is presented in this paper. Its robust chassis construction, skid steering ability, and optional wifi connectivity were the major reasons for its commercial success, mainly for educational and research purposes. However, the robot's manufacturer did not keep up with the advances in electronics, microcontrollers and software of the last decades. As a result, the robot became obsolete and practically useless despite its good characteristics. The upgrade used up-to-date, off-the-shelf components and open source software tools. There was a major enhancement in the robot's processing power, energy consumption, weight and autonomy time. Experimental testing proved the upgraded robot's operational integrity and capability of undertaking educational, research and other typical robotic tasks.

Keywords: all terrain autonomous robotic vehicle, educational robotics, ROS, robot upgrade, solar panels.

1 Introduction

This work deals with the upgrading procedure of a decommissioned Real World Interface (RWI) ATRV-mini all-terrain robotic vehicle.

Final Program of CCC2020

The 39th Chinese Control Conference (CCC2020)
Final Program

Sponsoring Organizations: Technical Committee on Control Theory, Chinese Association of Automation; Chinese Association of Automation; Systems Engineering Society of China
Organizer: Northeastern University, China

July 27-29, 2020, Shenyang, China

Proceedings of CCC2020
IEEE Catalog Number: CFP2040A-USB
ISBN: 978-988-15639-9-6

CCC2020 Copyright and Reprint Permission: This material is permitted for personal use. For any other copying, reprint, republication or redistribution permission, please contact TCCT Secretariat, No. 55 Zhongguancun East Road, Beijing 100190, P. R. China. All rights reserved. Copyright@2020 by TCCT.

Contents

Welcome Address
Conference Committees
Important Information
Instruction for Oral and Poster Presentations
Plenary Lectures

Real-Time Vision, Tracking and Control

Proceedings of the 2000 IEEE International Conference on Robotics & Automation, San Francisco, CA, April 2000

Peter I. Corke, CSIRO Manufacturing Science & Technology, Pinjarra Hills, AUSTRALIA 4069. [email protected]
Seth A. Hutchinson, Beckman Institute for Advanced Technology, University of Illinois at Urbana-Champaign, Urbana, Illinois, USA 61801. [email protected]

Abstract

This paper, which serves as an introduction to the mini-symposium on Real-Time Vision, Tracking and Control, provides a broad sketch of visual servoing, the application of real-time vision, tracking and control for robot guidance. It outlines the basic theoretical approaches to the problem, describes a typical architecture, and discusses major milestones, applications and the significant vision sub-problems that must be solved.

1 Introduction

Visual servoing is a maturing approach to the control of robots in which tasks are defined visually, rather than in terms of previously taught Cartesian coordinates. [...] considered the fusion of computer vision, robotics and control and has been a distinct field for over 10 years, though the earliest work dates back close to 20 years. Over this period several major, and well understood, approaches have evolved and been demonstrated in many laboratories around the world. Fairly comprehensive overviews of the basic approaches, current applications, and open research issues can be found in a number of recent sources, including [1-4].

The next section, Section 2, describes three basic approaches to visual servoing. Section 3 provides a ‘walk around’ the main functional blocks in a typical visual servoing system. Some major milestones and proposed applications are discussed in Section 4. Section 5 then expands on the various vision sub-problems that must be solved for the different approaches to visual servoing.

The Personal Rover Project: the Comprehensive Design of A

Robotics and Autonomous Systems 42 (2003) 245–258

The Personal Rover Project: The comprehensive design of a domestic personal robot

Emily Falcone∗, Rachel Gockley, Eric Porter, Illah Nourbakhsh
The Robotics Institute, Carnegie Mellon University, Newell-Simon Hall 3111, 5000 Forbes Ave., Pittsburgh, PA 15213, USA

Abstract

In this paper, we summarize an approach for the dissemination of robotics technologies. In a manner analogous to the personal computer movement of the early 1980s, we propose that a productive niche for robotic technologies is as a long-term creative outlet for human expression and discovery. To this end, this paper describes our ongoing efforts to design, prototype and test a low-cost, highly competent personal rover for the domestic environment.

© 2003 Elsevier Science B.V. All rights reserved.

Keywords: Social robots; Educational robot; Human–robot interaction; Personal robot; Step climbing

1. Introduction

Robotics occupies a special place in the arena of interactive technologies. It combines sophisticated computation with rich sensory input in a physical embodiment that can exhibit tangible and expressive behavior in the physical world.

In this regard, a central question that occupies our research group pertains to the social niche of robotic artifacts in the company of the robotically uninitiated public-at-large: What is an appropriate first role for intelligent human–robot interaction in the daily human [...]

[...] duration of interaction and the roles played by human and robot participants. In cases where the human caregiver provides short-term, nurturing interaction to a robot, research has demonstrated the development of effective social relationships [5,12,21]. Anthropomorphic robot design can help prime such interaction experiments by providing immediately comprehensible social cues for the human subjects [6,17].

In contrast, our interest lies in long-term human–robot relationships, where a transient suspension of disbelief will prove less relevant than long-term social engagement and growth.

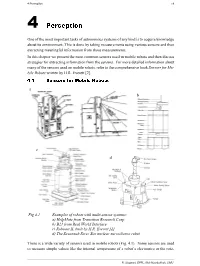

4 Perception

R. Siegwart, EPFL, and Illah Nourbakhsh, CMU. Autonomous Mobile Robots.

One of the most important tasks of autonomous systems of any kind is to acquire knowledge about its environment. This is done by taking measurements using various sensors and then extracting meaningful information from those measurements. In this chapter we present the most common sensors used in mobile robots and then discuss strategies for extracting information from the sensors. For more detailed information about many of the sensors used on mobile robots, refer to the comprehensive book Sensors for Mobile Robots written by H.R. Everett [2].

4.1 Sensors for Mobile Robots

Fig 4.1 Examples of robots with multi-sensor systems: a) HelpMate from Transition Research Corp. b) B21 from Real World Interface c) Roboart II, built by H.R. Everett [2] d) The Savannah River Site nuclear surveillance robot

There is a wide variety of sensors used in mobile robots (Fig. 4.1). Some sensors are used to measure simple values like the internal temperature of a robot’s electronics or the rotational speed of the motors. Other, more sophisticated sensors can be used to acquire information about the robot’s environment or even to directly measure a robot’s global position. In this chapter we focus primarily on sensors used to extract information about the robot’s environment. Because a mobile robot moves around, it will frequently encounter unforeseen environmental characteristics, and therefore such sensing is particularly critical. We begin with a functional classification of sensors.

Pixy Hardware Interface

Striker

EEL 4914 Senior Design I, Group 8
Cruz, Efrain; Decamp, Loubens; Narvaez, Luis; Thomas, Brian

Competitive Autonomous Air-Hockey Gaming system

Table of Contents

1 Executive Summary
2 Project Description
2.1 Motivation
2.2 Goals and Objectives
2.3 Requirements and Specifications
2.3.1 User Interface
2.3.2 Audio and Visual Effects
2.3.3 Tracking System
2.3.4 Software
2.3.5 System Hardware
2.3.6 Puck Return Mechanism

Botball Kit for Teaching Engineering Computing

David P. Miller, School of AME, University of Oklahoma, Norman, OK 73019
Charles Winton, Department of CIS, University of N. Florida, Jacksonville, FL 32224

Abstract

Many engineering classes can benefit from hands on use of robots. The KISS Institute Botball kit has found use in many classes at a number of universities. This paper outlines how the kit is used in a few of these different classes at a couple of different universities. This paper also introduces the Collegiate Botball Challenge, and how it can be used as a class project.

1 Introduction

Introductory engineering courses are used to teach general principles while introducing the students to all of the engineering disciplines. Robotics, as a multi-disciplinary application can be an ideal subject for projects that stress the different engineering fields. A major consideration in establishing a robotics course emphasizing mobile robots is the type of hands-on laboratory experience that will be incorporated into the course of instruction. Most electrical engineering schools lack the machine shops and expertise needed to create the mechanical aspects of a robot system. Most mechanical engineering schools lack the electronics labs and expertise needed for the actuation, sensing and computational aspects required to support robotics work. The situation is even more dire for most computer science schools. Computer science departments typically do not have the support culture for the kind of laboratories that are more typically associated with engineering programs. On the other hand, it is recognized that computer science students need courses which provide closer to real world experiences via representative hands-on exercises.

arXiv:2011.00554v1 [cs.RO] 1 Nov 2020

Can a Robot Trust You? A DRL-Based Approach to Trust-Driven Human-Guided Navigation

Vishnu Sashank Dorbala, Arjun Srinivasan, and Aniket Bera
University of Maryland, College Park, USA

Supplemental version including Code, Video, Datasets at https://gamma.umd.edu/robotrust/

Abstract: Humans are known to construct cognitive maps of their everyday surroundings using a variety of perceptual inputs. As such, when a human is asked for directions to a particular location, their wayfinding capability in converting this cognitive map into directional instructions is challenged. Owing to spatial anxiety, the language used in the spoken instructions can be vague and often unclear. To account for this unreliability in navigational guidance, we propose a novel Deep Reinforcement Learning (DRL) based trust-driven robot navigation algorithm that learns humans’ trustworthiness to perform a language guided navigation task. Our approach seeks to answer the question as to whether a robot can trust a human’s navigational guidance or not. To this end, we look at training a policy that learns to navigate towards a goal location using only trustworthy human guidance, driven by its own robot trust metric. We look at quantifying various affective features from language-based instructions and incorporate them into our policy’s observation space in the form of a human trust metric. We utilize both these trust metrics into an optimal cognitive reasoning scheme that decides when and when not to trust the given guidance. Our results show that the learned policy can navigate the environment in an optimal, [...]

Fig. 1: We look at whether humans can be trusted on the navigational guidance they give to a robot.

A Multi-Robot Search and Retrieval System

From: AAAI Technical Report WS-02-18. Compilation copyright © 2002, AAAI (www.aaai.org). All rights reserved.

MinDART: A Multi-Robot Search & Retrieval System

Paul E. Rybski, Amy Larson, Heather Metcalf, Devon Skyllingstad, Harini Veeraraghavan and Maria Gini
Department of Computer Science and Engineering, University of Minnesota, 4-192 EE/CS Building, Minneapolis, MN 55455
{rybski,larson,hmetcalf,dsky,harini,gini}@cs.umn.edu

Abstract

We are interested in studying how environmental and control factors affect the performance of a homogeneous multi-robot team doing a search and retrieval task. We have constructed a group of inexpensive robots called the Minnesota Distributed Autonomous Robot Team (MinDART) which use simple sensors and actuators to complete their tasks. We have upgraded these robots with the CMUCam, an inexpensive camera system that runs a color segmentation algorithm. The camera allows the robots to localize themselves as well as visually recognize other robots. We analyze how the team’s performance is affected by target distribution (uniform or clumped), size of the team, and whether search with explicit localization is more beneficial than random search.

Introduction

Cooperating teams of robots have the potential to outperform a single robot attempting an identical task. Increasing task or environmental knowledge may also improve performance, but increased performance comes at a price. In addition to the monetary concerns of building multiple robots, the complexity of the control strategy and the processing overhead can outweigh the benefits.

[...] AAAI 2002 Mobile Robot Competition and Exhibition. Finally, we analyze how we would expect these robots to per[...]

Figure 1: The robots with their infrared targets and colored landmarks.