Improving the Programmability of GPU Architectures

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Tesla K80 GPU Accelerator

TESLA K80 GPU ACCELERATOR, BD-07317-001_v05 | January 2015, Board Specification. DOCUMENT CHANGE HISTORY (Version, Date, Authors, Description of Change): 01, June 23, 2014, GG, SM: Preliminary Information (information contained within this board specification is subject to change). 02, October 8, 2014, GG, SM: updated product name; minor change to Table 2. 03, October 31, 2014, GG, SM: added “8-Pin CPU Power Connector” section; updated Figure 2. 04, November 14, 2014, GG, SM: removed preliminary and NDA; updated boost clocks; minor edits throughout document. 05, January 30, 2015, GG, SM: updated Table 2 with MTBF data. TABLE OF CONTENTS: Overview; Key Features; NVIDIA GPU Boost on Tesla K80; Environmental Conditions; Configuration; Mechanical Specifications; PCI Express System; Tesla K80 Bracket; 8-Pin CPU Power Connector -

AMD Radeon E8860

Components for AMD’s Embedded Radeon™ E8860 GPU (coreavi.com, [email protected], Revision 13Nov2020). INTRODUCTION: The E8860 Embedded Radeon GPU available from CoreAVI comprises temperature-screened GPUs, safety certifiable OpenGL®-based drivers, and safety certifiable GPU tools which have been pre-integrated and validated together to significantly de-risk the challenges typically faced when integrating hardware and software components. The platform is an off-the-shelf foundation upon which safety certifiable applications can be built with confidence. Figure 1: CoreAVI Support for E8860 GPU. EXTENDED TEMPERATURE RANGE: CoreAVI provides extended temperature versions of the E8860 GPU to facilitate its use in rugged embedded applications. CoreAVI functionally tests the E8860 over -40C Tj to +105C Tj, increasing the manufacturing yield for hardware suppliers while reducing supply delays to end customers. E8860 GPU LONG TERM SUPPLY AND SUPPORT: CoreAVI has provided consistent and dedicated support for the supply and use of the AMD embedded GPUs within the rugged Mil/Aero/Avionics market segment for over a decade. With the E8860, CoreAVI will continue that focused support to ensure that the software, hardware and long-life support are provided to meet the needs of customers’ system life cycles. CoreAVI has extensive environmentally controlled storage facilities which are used to store the GPUs supplied to the Mil/Aero/Avionics marketplace, ensuring that a ready supply is available for the duration of any program. CoreAVI also provides post Last Time Buy storage of GPUs and is often able to provide additional quantities of components when COTS hardware partners receive increased volume for existing products or systems requiring additional inventory. -

HP Graphics Workstations: HP Z1, HP Z2, HP Z4, ……

ARES RAČUNALNIŠTVO d.o.o., Tržaška cesta 330, 1000 Ljubljana, Slovenia, EU. Tel: 01-256 21 50, mobile: 041-662 508, e-mail: [email protected], www.ares-rac.si. Sections: HP Z1, HP Z2, HP Z4, Graphics cards. 29.09.2021. VAT = 22% (factor 1,22). Graphics workstations. HP graphics workstations: HP Z1, HP Z2, HP Z4, …….. STOCK: We also offer other models that are not yet in the price list (check stock). Options are listed at the end of the price list: graphics cards. Other options on quotation. We publish the net price and the price including VAT (list price and promotional price). Final price and delivery time according to the specific quotation. Columns: Code, HP Z1 TWR graphics workstation / workstation, Stock, Net price (EUR), Price incl. VAT (EUR). HP Z1 G8 TWR. 29Y17AV: HP Z1 G8 TWR, Core i5-11500, 16GB, SSD 512GB, nVidia GeForce RTX 3070 8GB, USB-C, Win10Pro #71595950. Code: 29Y17AV#71595950. HP workstation, HP graphics workstation. Net price 1.735,18, price incl. VAT 2.116,92. PROMOTIONAL PRICE: net 1.657,00, incl. VAT 2.021,54. Processor: Intel Core i5-11500 (2,7 - 4,6 GHz), 12MB, 6 cores/12 threads. Chipset: Intel Q570. Memory: 16 GB DDR4 3200 MHz (1x16GB), 3 free slots, up to 128 GB. SSD drive: 512 GB M.2 2280 PCIe NVMe TLC. Optical drive: none. HDD drive: none. Drive bays: 2x 3,5'', 1x 2,5''. RAID: supported. Graphics card: nVidia GeForce RTX 3070 8GB GDDR6, 256bit, 5888 CUDA cores. Wired connectivity: Intel I219-LM Gigabit Network. Wireless connectivity: none. Expansion: 1x M.2 2230; 1x PCIe 3.0 x16; 2x PCIe 3.0 x16 (wired as x4); 2x M.2 2230/2280; 2x PCIe 3.0 x1. Card reader: none. Front ports: 1x USB-C, 2x -

Desktop and Mobile Workstations, Monitors, USB-C Hubs, Docking

TAKE CARE OF YOUR BUSINESS; WE WILL TAKE CARE OF YOUR COMPUTERS AND IT PROJECTS. 16-09-21 16. DESKTOP AND MOBILE WORKSTATIONS, MONITORS, USB-C HUBS, DOCKING STATIONS. Cremonaufficio is an IT company that has been present in the Cremona area since 1986. From its earliest years it has stood out for professionalism and skill, recording a steady growth trend year after year. More than twenty-five years of selling and servicing IT products, digital photocopiers, telephone systems, voice and data networks, and video surveillance have consolidated and established Cremonaufficio as a supplier of high-quality products and services. Technical support is provided by in-house technicians who specialize in the various sectors and have their own vehicles. Cremonaufficio is a multi-brand operator specializing in Information Technology and Office Automation and, as such, relies on an ISO 9001 certified operational management system. Renting computers, servers, IT and network devices can be a good alternative to buying: this practice offers many advantages, and the way it works is very simple. Here are some of these advantages. Tax advantages: renting makes it possible to obtain several tax benefits, which is always of great interest to companies. Renting computers, servers and network devices reduces annual taxation, and the rental cost is fully deductible. Operating lease, not financial lease: unlike leasing, renting does not involve registration with a central credit register; as a result it improves the credit rating and eases relations with banks and access to financing. -

Radeon™ E8860 (Adelaar) Video & Graphics XMC Aitech

M596 Radeon™ E8860 (Adelaar) Video & Graphics XMC, Aitech. • Single Width XMC • PCIe x8 Gen 2.0 Host Interface • AMD Radeon E8860 (Adelaar) GPU: 6 Independent Graphics Heads, 2 GB GDDR5 • Analog and Digital Video Inputs • Analog and Digital Video Outputs: Full Video Switching Capabilities • Video Capture and Overlay • Video Resize and Customized Formats • Full 2D/3D Processing Capabilities • Universal VPWR 5V/12V • Enhanced Graphics & Video Support: DirectX® 11.1, Shader 5.0, OpenGL 4.2, OpenCL 1.1 • Operating System Support: Windows™, Linux®, VxWorks®, INTEGRITY®. Aitech Defense Systems, Inc., a member of the Aitech Rugged Group, 19756 Prairie Street, Chatsworth, CA 91311. Tel: (888) Aitech-8 (248-3248), Fax: (818) 407-1502, e-mail: [email protected], web: www.rugged.com. Powerful Six Head Processing and Multiple Video Outputs: Aitech's M596 6-Head Multiple Output Graphics XMC provides a high-performance, highly versatile embedded video and graphics solution for harsh environment applications. Designed around the AMD E8860 Six Head Graphics Processing Unit with its 2 GB of GDDR5, the M596 can simultaneously drive several independent video streams in a wide variety of output formats. It integrates multiple supporting hardware engines such as graphics language accelerators, parallel processing engines, video and audio de-compression units, and more. The M596 supports the most advanced graphics and video standards including DirectX, OpenGL, and H.264, as well as multiple and versatile graphics and video output protocols. Many of the standard M596 output video channels are provided through E8860 native integrated video ports. Additional video protocols/formats and signal conditioning are provided by an optional sophisticated FPGA residing alongside the E8860 GPU, to complement the GPU's capabilities. -

Contributions of Hybrid Architectures to Depth Imaging: a CPU, APU and GPU Comparative Study

Contributions of hybrid architectures to depth imaging: a CPU, APU and GPU comparative study. Issam Said. To cite this version: Issam Said. Contributions of hybrid architectures to depth imaging: a CPU, APU and GPU comparative study. Hardware Architecture [cs.AR]. Université Pierre et Marie Curie - Paris VI, 2015. English. NNT: 2015PA066531. tel-01248522v2. HAL Id: tel-01248522, https://tel.archives-ouvertes.fr/tel-01248522v2, submitted on 20 May 2016. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. DOCTORAL THESIS OF UNIVERSITÉ PIERRE ET MARIE CURIE, specialty: Computer Science, Doctoral School of Computer Science, Telecommunications and Electronics (Paris), presented and publicly defended by Issam SAID to obtain the degree of DOCTOR OF SCIENCE of UNIVERSITÉ PIERRE ET MARIE CURIE. Apports des architectures hybrides à l’imagerie profondeur : étude comparative entre CPU, APU et GPU (Contributions of hybrid architectures to depth imaging: a CPU, APU and GPU comparative study). Thesis supervised by Jean-Luc Lamotte and Pierre Fortin, defended on Monday, 21 December 2015, after review by the rapporteurs Mr. François Bodin, Professor, Université de Rennes 1, and Mr. Christophe Calvin, Project Leader, CEA, before the jury composed of Mr. François Bodin, Professor, Université de Rennes 1; Mr. -

Tesla K8 GPU Active Accelerator

TESLA K8 GPU ACTIVE ACCELERATOR, BD-07228-001_v03 | September 2014, Board Specification. DOCUMENT CHANGE HISTORY (Version, Date, Authors, Description of Change): 01, May 1, 2014, HV, SM: Preliminary Information (information contained within this board specification is subject to change). 02, July 2, 2014, GG, SM: updated “Overview”; updated Figure 2. 03, September 16, 2014, GG, SM: removed all preliminary and confidential markings (this document is no longer preliminary); updated GPU minimum clock speed. TABLE OF CONTENTS: Overview; Key Features; NVIDIA GPU Boost; NVIDIA GPU Boost on Tesla K8 Active; API for NVIDIA GPU Boost on Tesla; Tesla K8 Block Diagram; Configuration; Mechanical Specifications; PCI Express System; Standard I/O Connector Placement; Internal -

Hardware Selection and Configuration Guide DaVinci Resolve 15

Hardware Selection and Configuration Guide, DaVinci Resolve 15 PUBLIC BETA. The world’s most popular and advanced on set, offline and online editing, color correction, audio post production and visual effects system. Contents: Introduction; Getting Started; Guidelines for selecting your OS and system hardware; Media storage selection and file systems; Hardware Selection and Setup; DaVinci Resolve for Mac; DaVinci Resolve for Windows; DaVinci Resolve for Linux; Shopping Guide; Mac systems; Windows systems; Linux systems; Media storage; GPU selection; Expanders; Accessories; Third Party Audio Consoles; Third Party Color Grading Panels; Windows and Linux Systems: PCIe Slot Configurations; ASUS PCIe configuration; GIGABYTE PCIe configuration; HP PCIe configuration; DELL PCIe configuration; Supermicro PCIe configuration; PCIe expanders: Slot configurations; Panel Information; Regulatory Notices and Safety Information; Warranty. Introduction: Building a professional all in one solution for offline and online editing, color correction, audio post production and visual effects. DaVinci Resolve has evolved over a decade from a high-end color correction system used almost exclusively for the most demanding film and TV products to become the world’s most popular and advanced professional all in one solution for offline and online editing, color correction, audio post production and now visual effects. It’s a scalable and resolution independent finishing tool for Mac, Windows and Linux which natively supports an extensive list of image, audio and video formats and codecs so you can mix various sources on the timeline at the same time. -

GPU Computing: More Exciting Times for HPC! Paul G

GPU Computing: More exciting times for HPC! Paul G. Howard, Ph.D., Chief Scientist, Microway, Inc. Copyright 2009 by Microway, Inc. Introduction: In the never-ending quest in the High Performance Computing world for more and more computing speed, there's a new player in the game: the general purpose graphics processing unit, or GPU. The GPU is the processor used on a graphics card, optimized to perform simple but intensive processing on many pixels simultaneously. Taking a step back from its use in graphics, the GPU can be thought of as a high-performance SPMD (single-program multiple-data) processor capable of extremely high computational throughput, at low cost and with a far higher computational throughput-to-power ratio than is possible with CPUs. Performance speedups between 10x and 1000x have been reported using GPUs on real-world applications. For most people, a performance improvement of even one order of magnitude does not simply increase productivity; it allows previously impractical projects to be completed in a timely manner. With three orders of magnitude improvement, a one minute simulation may be viewed real-time at 15 frames per second. GPU computing will change the way you think about your research! In late 2006, NVIDIA and ATI (acquired by AMD in 2006) began marketing GPU boards as streaming coprocessor boards. Both companies released software to expose the processor to application programmers. Since then the hardware has grown more powerful and the available software has become more high-level and developer-friendly. Hardware specifications and comparisons: The GPU cards currently on the market are available from NVIDIA and AMD. -
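
The frame-rate claim in the Microway excerpt above can be checked with a few lines of arithmetic. The sketch below assumes that "a one minute simulation" means one minute of compute per displayed frame; the function name `frames_per_second` is illustrative and not taken from the source.

```python
def frames_per_second(seconds_per_frame: float, speedup: float) -> float:
    """Frame rate obtained when the per-frame runtime is divided by `speedup`."""
    return speedup / seconds_per_frame


# Assumption: the baseline simulation needs 60 s of compute per frame.
BASELINE_SECONDS = 60.0

if __name__ == "__main__":
    # 10x and 1000x are the two ends of the speedup range quoted in the excerpt.
    for speedup in (10.0, 1000.0):
        fps = frames_per_second(BASELINE_SECONDS, speedup)
        print(f"{speedup:>6.0f}x speedup -> {fps:.2f} frames/s")
```

Under that reading, a 1000x speedup brings each frame down to 0.06 s, which is enough to sustain the 15 frames per second mentioned in the text, while a 10x speedup still leaves six seconds per frame.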

EVGA GeForce GTX 960 Driver Download Windows 10 DRIVER EVGA GTX 960 WINDOWS 8.1

evga geforce gtx 960 driver download windows 10 DRIVER EVGA GTX 960 WINDOWS 8.1. Straight Power 400W Recorded with Nvidia Shadowplay Edited in. GeForce graphics cards deliver advanced DX12 features such as ray tracing and variable rate shading, bringing games to life with ultra-realistic visual effects and faster frame rates. Free delivery and returns on eBay Plus items for Plus members. Drivers Compro Videomate Action Ntsc Windows 8 Download (2020). I thought my A8-6500 APU would be a severe bottleneck, but my games are very smooth! EVGA GTX960 SSC - Is the GTX960 Worth it? I installed the ASUS Turbo GTX 280. Get the best deals on EVGA NVIDIA GeForce GTX 960 Computer Graphics Cards and find everything you'll need to improve your home office setup at. Powered by innovative Pascal architecture and equipped with the latest. Should be 1253 MHz through a 30. Buy EVGA NVIDIA GeForce GTX 960 NVIDIA Computer Graphics & Video Cards and get the best deals at the lowest prices on eBay! Ethan Gunderson Cape Coral. Designed to meet the requirements of immersive, next-generation displays including virtual reality, 4k, and multiple-monitor game-play, the GeForce GTX 1080 by NVIDIA is the future of gaming. Small yet nippy, just the way GTX 1060 ought to be? Placa video EVGA GeForce GTX 960 SSC GAMING ACX 2.0+ 4GB. The GTX 960 should at least be recognized in the bios as a standard vid card. Get the latest edition of GDDR5. Another ASUS product reviewed today, the Strix edition of the GTX 960. -

Data Sheet FUJITSU PRIMERGY NVIDIA® Tesla® K40 GPU

Data Sheet FUJITSU PRIMERGY NVIDIA® Tesla® K40 GPU. PRIMERGY servers meet the requirements for HPC GPU clusters. With the ever-increasing demand for more computing performance, systems based on CPUs alone can no longer keep up. CPU-only systems can only get faster by adding thousands of individual computers; this method consumes too much power and makes supercomputers very expensive. A different strategy is parallel computing, and the HPC industry is moving toward a hybrid computing model, where co-processors and CPUs work together to perform general purpose computing tasks. As parallel processors, GPUs excel at tackling large amounts of similar data because the problem can be split into hundreds or thousands of pieces and calculated simultaneously. PRIMERGY NVIDIA® Tesla® K40 GPU: Through the application-acceleration cores per board, Tesla processors offload parallel computations from the CPU to dramatically accelerate the floating point calculation performance. By adding a Tesla processor, engineers, designers, and content creation professionals accelerate some of the most complex tools exponentially faster than by adding a second CPU. Equipped with 12 GB of memory, the Tesla K40 GPU accelerator is ideal for the most demanding HPC and big data problem sets. It outperforms CPUs by up to 10x and includes a Tesla GPU Boost feature that enables power headroom to be converted into a user-controlled performance boost. Main Features and Benefits: K40 = 2880 Cores: Tesla K40 delivers up to 4.29 teraflops of single-precision floating point performance and 1.43 teraflops of double-precision floating point performance. ECC Memory: Meets a critical requirement for computing accuracy and reliability in datacenters and supercomputing centers. -
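
The teraflops figures in the data sheet excerpt above are consistent with the usual peak-throughput formula (execution units × FLOPs per cycle × clock). The sketch below relies on values that are not in the excerpt and should be read as assumptions: a 745 MHz base clock, one fused multiply-add (2 FLOPs) per unit per cycle, and one double-precision unit for every three single-precision cores. The function name `peak_tflops` is illustrative.

```python
def peak_tflops(units: int, clock_mhz: float, flops_per_cycle: int = 2) -> float:
    """Theoretical peak in teraflops: units x FLOPs per cycle x clock rate."""
    return units * flops_per_cycle * clock_mhz * 1e6 / 1e12


CUDA_CORES = 2880             # from the data sheet excerpt
BASE_CLOCK_MHZ = 745.0        # assumption: base clock, not stated in the excerpt
DP_UNITS = CUDA_CORES // 3    # assumption: 1 double-precision unit per 3 cores

if __name__ == "__main__":
    single = peak_tflops(CUDA_CORES, BASE_CLOCK_MHZ)
    double = peak_tflops(DP_UNITS, BASE_CLOCK_MHZ)
    print(f"single precision: {single:.2f} TFLOPS")
    print(f"double precision: {double:.2f} TFLOPS")
```

These are theoretical peaks; sustained application performance depends on memory bandwidth, occupancy, and the instruction mix.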

Technical Details Specs

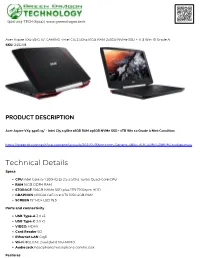

(501) 203-TECH (8324) | www.greendragon.tech. Acer Aspire VX5-591G 15" GAMING - Intel CI5 2.5GHz, 16GB RAM, 256GB NVMe SSD + 1TB, Win 10, Grade A. SKU: 245208. PRODUCT DESCRIPTION: Acer Aspire VX5-591G 15" - Intel CI5 2.5GHz, 16GB RAM, 256GB NVMe SSD + 1TB, Win 10, Grade A, Mint Condition. https://greendragon.tech/wp-content/uploads/2021/07/ShortForm-Generic-480p-16-9-1409173089793-rpcbe5.mp4 Technical Details Specs. CPU: Intel Core i5-7300HQ @ 2.5-3.5GHz Turbo Quad-Core CPU. RAM: 16GB DDR4 RAM. STORAGE: 256GB NVMe SSD plus 1TB 7200rpm HDD. GRAPHICS: NVIDIA GeForce GTX 1050 4GB RAM. SCREEN: 15” HD+ LED 16:9. Ports and connectivity: USB Type-A 3.0 x3; USB Type-C 3.0 x1; VIDEO: HDMI; Card Reader: SD; Ethernet LAN: GigE; Wi-Fi: 802.11AC Dual Band MU-MIMO; Audio jack: headphone/microphone combo jack. Features: Iron Red Backlit Keyboard; Web Camera; Microphone; Security Lock Slot. Includes: A/C Power Adapter (New). Aggressive Gaming Design: In the case of the Aspire VX 15, looks can kill! This soon-to-be battlefield gaming icon utilizes a hard-edged futuristic design with sleek lines and angles, and an impressive 15.6” Full HD Display, to put you in total command of all the action. It combines a powerful 7th Gen Intel Core i5-7300HQ processor with high-performance NVIDIA GeForce GTX 1050 graphics driven by the new NVIDIA Pascal architecture. The advanced cooling and stellar audio capabilities support intense gaming sessions while the illuminating iron-red keyboard adds to the drama.