Numerical Methods for the Accelerated Resolution of Large Scale Linear Systems on Massively Parallel Hybrid Architecture Abal-Kassim Cheik Ahamed

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Jül Spez 0467.Pdf

EMULATING SIMULA IN TURBO PASCAL by Morton John Canty EMULATING SIMULA IN TURBO PASCAL M. J. Canty 1. lntroduction Although the computer language SIMULA /1,2/ is now over 20 years old it remains an excellent general purpose programming tool as well as a popular and powerfuf simulation language, the purpose for which it was originally developed. SIMULA is an extension of ALGOL 60, which it contains as a true subset, and its advanced concepts have served as a model for modern object-oriented languages such as SMALLTALK. The properties which make SIMULA especially suitable for simulation tasks are 1. a hierarchic cfass concept with inheritance, 2. sophisticated list handfing facilities and 3. concurrent programming capability. Of these three, the most essential property to allow for programming of discrete time simulation tasks is the third one, concurrent programming. By this is meant the ability to sustain parallel autonomous entities (called processes or co-routines) in memory. Allowing an arbitrary number of such processes to interact with each other along a time axis forms the basis of SIMULA's model for discrete time simulation. Although virtually all major programming languages have been implemented in one form or another on personal computers, SIMULA is a notable exception. Perhaps the main reason for this is that, while enjoying great popularity in Europe, SIMULA is not as well known on the North American continent. Pascal belongs to the same Afgol famify as SIMULA, and the dialect Turbo Pascal of the firm Borland Interna tional has become one bf the most wide spread high-levef languages for MS-DOS personal computers. -

A Domain-Specific Embedded Language for Probabilistic

AN ABSTRACT OF THE THESIS OF Steven Kollmansberger for the degree of Master of Science in Computer Science presented on December 12, 2005. Title: A Domain-Specific Embedded Language for Probabilistic Programming Abstract approved: Martin Erwig Functional programming is concerned with referential transparency, that is, given a certain function and its parameter, that the result will always be the same. However, it seems that this is violated in applications involving uncertainty, such as rolling a dice. This thesis defines the background of probabilistic programming and domain-specific languages, and builds on these ideas to construct a domain- specific embedded language (DSEL) for probabilistic programming in a purely functional language. This DSEL is then applied in a real-world setting to develop an application in use by the Center for Gene Research at Oregon State University. The process and results of this development are discussed. c Copyright by Steven Kollmansberger December 12, 2005 All Rights Reserved A Domain-Specific Embedded Language for Probabilistic Programming by Steven Kollmansberger A THESIS submitted to Oregon State University in partial fulfillment of the requirements for the degree of Master of Science Presented December 12, 2005 Commencement June 2006 Master of Science thesis of Steven Kollmansberger presented on December 12, 2005 APPROVED: Major Professor, representing Computer Science Director of the School of Electrical Engineering and Computer Science Dean of the Graduate School I understand that my thesis will become part of the permanent collection of Ore- gon State University libraries. My signature below authorizes release of my thesis to any reader upon request. Steven Kollmansberger, Author ACKNOWLEDGMENTS This thesis would not be possible without the ideas, drive and commitment of many people. -

Harmless Advice∗

Harmless Advice ∗ Daniel S. Dantas David Walker Princeton University Princeton University [email protected] [email protected] Abstract number of calls to each method: The what in this case is the com- when This paper defines an object-oriented language with harmless putation that does the recording and the is the instant of time aspect-oriented advice. A piece of harmless advice is a compu- just prior to execution of each method body. In aspect-oriented ter- what advice tation that, like ordinary aspect-oriented advice, executes when minology, the specification of to do is called and the when pointcut control reaches a designated control-flow point. However, unlike specification of to do it is called a . A collection of ordinary advice, harmless advice is designed to obey a weak non- pointcuts and advice organized to perform a coherent task is called aspect interference property. Harmless advice may change the termination an . behavior of computations and use I/O, but it does not otherwise in- Many within the AOP community adhere to the tenet that as- oblivious fluence the final result of the mainline code. The benefit of harmless pects are most effective when the code they advise is to advice is that it facilitates local reasoning about program behavior. their presence [11]. In other words, aspects are effective when a More specifically, programmers may ignore harmless advice when programmer is not required to annotate the advised code (hence- mainline code reasoning about the partial correctness properties of their programs. forth, the ) in any particular way. When aspect- In addition, programmers may add new pieces of harmless advice oriented languages are oblivious, all control over when advice to pre-existing programs in typical “after-the-fact” aspect-oriented is applied rests with the aspect programmer as opposed to the style without fear they will break important data invariants used by mainline programmer. -

Poker, a Process-Interaction Simulator and Controller for Use in Collaborative Simulation

POKer, a Process-Interaction Simulator and Controller for use in Collaborative Simulation Andriy Levytskyy, Eugene J.H. Kerckhoffs Delft University of Technology Faculty of Information Technology and Systems Mediamatica Department Zuidplantsoen 4, 2628 BZ Delft, The Netherlands e-mail: [email protected] and a subset of it, a process interaction world-view Keywords [20], which is of particular interest to us. This world- Discrete-Event simulation, Process-Interaction view gained its popularity due to the easiness of world-view, operational semantics, scripting mapping real-world processes into this formalism, languages, Python. and easiness of comprehending the essence of the process by a human. The above, as well as special application Abstract requirements for the simulation system we seek, In this paper we present the ideas and inspired us to develop our own special-purpose implementation of a small process-interaction kernel simulation software while trying to preserve its and cover its evolution from operational semantics connection to the formal background. In this paper of process-oriented simulation languages to the we present the ideas and implementation of a small current state of the kernel as (simulation) core of a process-interaction kernel and cover its evolution collaborative environment controller. from operational semantics of process oriented simulation languages to the current state of the kernel as (simulation) core of a collaborative 1. Introduction environment controller. Simulation as problem-solving methodology has been widely adopted in many practical domains. 2. View on the environment Users are free to select from a number of available general-purpose simulation software [14, 4] or, in 2.1. -

Writing Cybersecurity Job Descriptions for the Greatest Impact

Writing Cybersecurity Job Descriptions for the Greatest Impact Keith T. Hall U.S. Department of Homeland Security Welcome Writing Cybersecurity Job Descriptions for the Greatest Impact Disclaimers and Caveats • Content Not Officially Adopted. The content of this briefing is mine personally and does not reflect any position or policy of the United States Government (USG) or of the Department of Homeland Security. • Note on Terminology. Will use USG terminology in this brief (but generally translatable towards Private Sector equivalents) • Job Description Usage. For the purposes of this presentation only, the Job Description for the Position Description (PD) is used synonymously with the Job Opportunity Announcement (JOA). Although there are potential differences, it is not material to the concepts presented today. 3 Key Definitions and Concepts (1 of 2) • What do you want the person to do? • Major Duties and Responsibilities. “A statement of the important, regular, and recurring duties and responsibilities assigned to the position” SOURCE: https://www.opm.gov/policy-data- oversight/classification-qualifications/classifying-general-schedule-positions/classifierhandbook.pdf • Major vs. Minor Duties. “Major duties are those that represent the primary reason for the position's existence, and which govern the qualification requirements. Typically, they occupy most of the employee's time. Minor duties generally occupy a small portion of time, are not the primary purpose for which the position was established, and do not determine qualification requirements” SOURCE: https://www.opm.gov/policy-data- oversight/classification-qualifications/classifying-general-schedule-positions/positionclassificationintro.pdf • Tasks. “Activities an employee performs on a regular basis in order to carry out the functions of the job.” SOURCE: https://www.opm.gov/policy-data-oversight/assessment-and-selection/job-analysis/job_analysis_presentation.pdf 4 Key Definitions and Concepts (2 of 2) • What do you want to see on resumes that qualifies them to do this work? • Competency. -

Comparative Programming Languages CM20253

We have briefly covered many aspects of language design And there are many more factors we could talk about in making choices of language The End There are many languages out there, both general purpose and specialist And there are many more factors we could talk about in making choices of language The End There are many languages out there, both general purpose and specialist We have briefly covered many aspects of language design The End There are many languages out there, both general purpose and specialist We have briefly covered many aspects of language design And there are many more factors we could talk about in making choices of language Often a single project can use several languages, each suited to its part of the project And then the interopability of languages becomes important For example, can you easily join together code written in Java and C? The End Or languages And then the interopability of languages becomes important For example, can you easily join together code written in Java and C? The End Or languages Often a single project can use several languages, each suited to its part of the project For example, can you easily join together code written in Java and C? The End Or languages Often a single project can use several languages, each suited to its part of the project And then the interopability of languages becomes important The End Or languages Often a single project can use several languages, each suited to its part of the project And then the interopability of languages becomes important For example, can you easily -

Answers to Selected Exercises

Programming Languages - Principles and Practice 2nd Edition by Kenneth C. Louden Thomson Brooks/Cole 2002 Answers to Selected Exercises Copyright Kenneth C. Louden 2002 Chapter 1 1.2. (a) function numdigits(x: integer): integer; var t,n: integer; begin n := 1; t := x; while t >= 10 do begin n := n + 1; t := t div 10; end; numdigits := n; end; (c) numdigits x = if x < 10 then 1 else numdigits(x / 10) + 1 (e) function numdigits(x: Integer) return Integer is t: Integer := x; n: Integer := 1; begin while t >= 10 loop n := n + 1; t := t / 10; end loop; return n; end numdigits; (g) class NumDigits { public static int numdigits(int x) { int t = x, n = 1; while (t >= 10) { n++; t = t / 10; } return n; } } Kenneth C. Louden Programming Languages – Principles and Practice 2nd Ed. Answers - 2 1.5. (a) The remainder function, which we shall write here as % (some languages use rem or remainder), is defined by the integer equation a = (a / b) * b + a % b. The modulo function, which we shall write here as mod (some languages use modulo) is defined by the properties 1. a mod b is an integer between b and 0 not equal to b, and 2. there exists an integer n such that a = n * b + a mod b. When both a and b are non-negative, it can be shown that a % b = a mod b. But when either (or both) of a and b are negative these definitions can produce different results. For example, –10 % 3 = –1, since – 10 / 3 = –3 and –3 * 3 – 1 = –10. -

M. Craig Weaver Society for Worldwide Interbank Financial

M. Craig Weaver 106 Lakewood Drive Coatesville, PA 19320 (484) 712-0479 [email protected] S UMMARY Software Engineering professional with extensive experience in the design and development of system networking software and layered network architectures. A team-oriented communicator with the ability to solve complex problems using strong analytical skills. Effectively analyzed, designed and developed global networking software products on platforms ranging from micro, server, and mid-range to mainframe computers. Core strengths: • Software design, specification, and programming • Team Management • Software Quality Assurance Testing • Network troubleshooting and problem solution • Software problem analysis and solution • Burroughs Network Architecture expert • Complex protocol design and implementation • Networking and layered network architectures T E C H N I C A L S KILLS LANGUAGES JavaScript, C++, C, ALGOL, FORTRAN, HTML, Intel Assembler, Pascal, and others. PLATFORMS UNIX Systems; Microsoft Windows based systems (WINNT/WIN2000/WINXP); Unisys A-Series; Unisys V-Series; Unisys Clearpath; Unisys ES7000; Burroughs Large Systems; Burroughs Medium systems; Intel Processor based communications processor cards; Burroughs CP9500/B900. P ROFESSIONAL E XPERIENCE Society for Worldwide Interbank Financial Telecommunication (SWIFT) 2008 – 2014 International Member Owned Banking Cooperative Manassas, Virginia SENIO R SOFTWARE ENG INEER – CONTRACT FIN Renewal Project – Moving the SWIFT financial application software from Unisys machines (ALGOL) to HP UNIX machines (C++) • Tested ALTOS conversion tool. Created test cases from canonical ALGOL forms, converting them using ALTOS, then comparing the ALGOL and C++ test results. Diagnosed discrepancies and either designed solutions or required changes to the tool. • Converted Unisys ALGOL into UNIX C++ code. Tested, debugged and maintained the converted C++ code. -

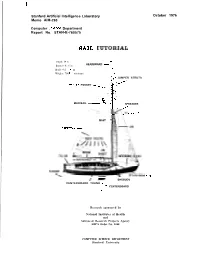

Sail Tutorial

I i Stanford Artificial Intelligence Laboratory October 1976 Memo AIM-290 Computer Science Department Report No. STAN-B-760575 SAIL TUTORIAL Length: 19 ft. I HEADBOARD &y Beam: 6 ft. 6 in. / Draft: 4 ft. llY2 in. ;’ /: Weight: 700 Ibs. minimum / - ,;’ it JUMPER STRUTS .i’ BA-ITEN POCKET <~>hI . f I . / . : ~ / ..I . ! '\ t : i : MAINSAIL .L%.. i b; SPREADER JIBSTAY .> ‘--~ MAST - BACKSTAY - . ,RDS SHROUDS CENTERBOARD TRUNK &‘ ,:- CENTERBOARD 4 Research sponsored by National Institutes of Health and Advanced Research Projects Agency ARPA Order No. 2494 COMPUTER SCIENCE DEPARTMENT Stanford University St anford Artificial Intelligence Laboratory October 1976 Memo AIM-290 Computer Science Department Report No. STAN-G-76-575 . SAIL TUTORIAL bY Nanoy W. Smith SUMEX-AIM Computer Project Department of Genetic6 Stanford University Medic&l Center ABSTRACT This TUTORIAL is designed for a beginning user of Sail, an ALGOL-like language for the PDP 10. The first part covers the basic statements and expressions of the language; remaining topics include macros, records, conditional compilation, and input/output. Detailed examples of Sail programming are included throughout, and only a mini,mum of programming background is assumed. This -manual was prepared as part of the SUMEX-AIM computing resoutce supported by the Biotechdogy Resources Program of the National Institutes of Health under grant RR- . 00735. Printing and preparation for publication were supported by ARPA under Contract M DA903076-C-0206. The views and conclusions contained in this document are those of the author(s) and should not be interpreted as necessarily representing the oficial policies, either expressed or implied, of Stanford *University, NIH, ARPA, or the V. -

Acp 113(Ah) Call Sign Book for Ships September 2008

UNCLASSIFIED ACP 113(AH) ACP 113(AH) CALL SIGN BOOK FOR SHIPS SEPTEMBER 2008 I UNCLASSIFIED UNCLASSIFIED ACP 113(AH) FOREWORD 1. ACP 113(AH), CALL SIGN BOOK FOR SHIPS, is an UNCLASSIFIED publication. Periodic accounting is not required. 2. ACP 113(AH) will be effective for National, Service, or Allied use when directed by the appropriate Implementing Agency and, when effective, will supersede ACP 113(AG) which is to be destroyed in accordance with current security regulations. 3. This publication now incorporates as ANNEX B, the information formerly contained in ACP 130 NATO SUPP-1 Section 2: Decoding of Visual Callsigns (pennant numbers) allocated to Ships and Certain Aurtorities of European NATO Countires and of the Countires of the British Commonwealth of Nations (Excluding Canada). 4. This publication contains Allied military information and is furnished for official purposes only. 5. It is permitted to copy or make extracts from this publication. 6. This publication may be carried in aircraft for use therein. 7. This ACP is to be maintained in accordance with provisions of the current version of ACP 198. II UNCLASSIFIED UNCLASSIFIED ACP 113(AH) THE COMBINED COMMUNICATION-ELECTRONICS BOARD LETTER OF PROMULGATION FOR ACP 113(AH) 1. The purpose of this Combined Communication Electronics Board (CCEB) Letter of Promulgation is to implement ACP 113(AH), CALL SIGN BOOK FOR SHIPS. 2. ACP 113(AH) is effective on receipt for CCEB Nations and effective for NATO nations and strategic Commands when promulgated by the NATO Military Committee (NAMILCOM). When effective, will supersede ACP 113(AG) which shall be destroyed in accordance with National regulations. -

ALGOL and MCP Interfaces to POSIX Features

Product Information Announcement o New Release • Revision o Update o New Mail Code Title MCP/AS ALGOL and MCP Interfaces to POSIXȵ Features Programming Reference Manual (7011 8351–002) ȵ This announces a retitling and reissue of the A Series ALGOL and MCP Interfaces to POSIX Features Programming Reference Manual. No new technical changes have been introduced since the HMP 1.0 and SSR 43.2 release in June 1996. This manual describes functions used to obtain certain POSIX-related features in programs not written in the C language. Essentially, each function mimics a C language POSIX function. Most functions call library procedures exported by the MCPSUPPORT library. The POSIX interface was developed by the Institute of Electrical and Electronics Engineers, Inc. (IEEE). • United States customers, call Unisys Direct at 1-800-448-1424. • Customers outside the United States, contact your Unisys sales office. • Unisys personnel, order through the electronic Book Store at http://iwww.bookstore.unisys.com. Comments about documentation can be sent through e-mail to [email protected]. Announcement only: Announcement and attachments: System: MCP/AS Release: HMP 4.0 and SSR 45.1 Date: June 1998 Part number: 7011 8351–002 MCP/AS ALGOL AND MCP INTERFACES ȵ TO POSIX FEATURES Programming Reference Manual Copyright ȴ 1998 Unisys Corporation. All rights reserved. Unisys and ClearPath are registered trademarks of Unisys Corporation. HMP 4.0 and SSR 45.1 June1998 Printed in USA Priced Item 7011 8351–002 The names, places, and/or events used in this publication are not intended to correspond to any individual, group, or association existing, living, or otherwise. -

February 1965 \

This simple little box o can make a very big improvement in the system you're planning. If you are a system designer, and if you are planning a 1 in 10,000,000. One small CDR-1 tape cartridge stores process control system, machine tool control, commercial as much data as 7000 feet of punched paper. And it can data acquisition, computer input/output, business system, be erased and used again. With all these advantages, banking system or just about any system for industry over·all operating costs of the CDR·1 are bound to be read how the new Ampex incremental Commercial Data lower than present systems. With all these advantages, Recorder can give you a lot more capability, for a lot less initial cost is competitive- right down to the penny-with money, than anything else in its field. most paper tape systems. Designed to meet a wide range of applications. The CDR-1 Greater capability, lower cost. What makes the new Ampex can be interfaced with many different types of data trans· CDR-! so much better? It's a magnetic tape recorder. For mission control and computer equipment. the first time, designers and manufacturers of systems that use other inputj output devices can enjoy all the Literally fool-proof. Operating the CDR·1 is as simple as inherent advantages of magnetic tape. The CDR·1 records slipping the magnetic tape cartridge into a slot. There's up to 2400 bits of information per second - incrementally, no threading, no rewinding. And the cartridge keeps data from random or continuous sources.