Streamflow Forecasting Without Models

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Install and Maintain Stream Gauges

Proposition 68 Projects 2019 Update. California Department of Water Resources, Sustainable Groundwater Management Program.

This project focuses on inventorying, installation, and maintenance of stream gauges in high- and medium-priority basins. Building on the knowledge and successful track record of DWR’s Regional and Statewide Integrated Water Management technical assistance programs, this project supports and is aligned with the Governor’s Water Resilience Portfolio (Executive Order N-10-19), the Open and Transparent Data Act (AB 1755), and Sen. Bill Dodd’s Stream Gauges Bill (SB 19).

What is Proposition 68? The California Drought, Water, Parks, Climate, Coastal Protection and Outdoor Access for All Fund (Senate Bill 5, Proposition 68) authorized $4 billion in general obligation bonds for state and local parks, environmental protection and restoration projects, water infrastructure projects, and flood protection projects. The Install and Maintain Stream Gauge project utilizes $4.95 million on data, tools, and analysis efforts for drought and groundwater investments to achieve regional sustainability in support of the Sustainable Groundwater Management Act (SGMA) over a period of five years.

How Does This Project Support SGMA? SGMA requires Groundwater Sustainability Agencies (GSAs) to monitor and assess stream depletion, water ... will provide fast and reliable data. Additionally, this project supports GSP development, implementation, and evaluation, as well as groundwater recharge projects. This new surface water data provides multiple benefits to programs within a variety of local, state, and federal agencies, including the State Water Resources Control Board and the Department of Fish and Wildlife.

What is New in 2019? Five stream gauges were recently installed throughout the state at Bear River, Ash Creek, Owens Creek, Tuolumne River, and San Luis Rey River.

What are the Next Steps? In the next two years, DWR plans to install approximately 24 additional stream gauges in high- and medium-priority basins.

U.S. Geological Survey Streamflow Data in Michigan Using the USGS NWIS Database MDOT Bridge Scour Conference October 5, 2017

MDOT Bridge Scour Conference, October 5, 2017. Tom Weaver, Eastern Hydrologic Data Chief, Upper Midwest Water Science Center.

In Michigan, USGS operates gage sites to monitor hydrologic conditions including streamflow, surface water and groundwater levels, and water quality. In October 2017, the network includes: 166 real-time continuous-record streamgages; 10 crest-stage gages (CSG), including 5 real-time; 10 continuous-record lake-level gages; 11 miscellaneous streamflow sites; 32 continuous-record water-quality sites; and 24 groundwater wells, including 6 USGS real-time Climate Response Network sites.

How do we monitor surface water? Gage height (stage) and streamflow are measured at gaging stations through a range of conditions. At most sites a stage-discharge relation is constructed. An outside staff gage indicates water level. In 2017, most gaging stations are being constructed with non-submersible pressure transducers and GOES satellite transmitters. This is station number 04032000, Presque Isle River near Tula: https://waterdata.usgs.gov/mi/nwis/uv/?site_no=04032000&PARAmeter_cd=00065,00060

Accessing the National Water Information System (NWIS) is easy: https://mi.water.usgs.gov/. Click the interactive map twice to expand it, then hover the cursor over the gage of interest. Alternatively, go to the Statewide Streamflow Current Conditions Table, or one of the other tables, and click it instead; we will visit that option after a few slides. Clicking on the Daily Streamflow Conditions Map again brings up an interactive view in which each colored dot indicates the location of, and streamflow conditions at, a streamgage.
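The station link quoted in this excerpt follows a simple pattern: a site number plus a comma-separated list of parameter codes (00065 is gage height, 00060 is discharge). A minimal sketch of assembling such a link is given here, assuming the base path and query keys exactly as they appear in the slide text; the helper name `nwis_uv_url` is hypothetical, and the 2017 URL layout may no longer be served in this form.

```python
from urllib.parse import urlencode

# Base path copied from the slide text (2017); it may have changed since.
NWIS_UV_BASE = "https://waterdata.usgs.gov/mi/nwis/uv/"


def nwis_uv_url(site_no, parameter_codes):
    """Build a unit-values page URL for one station (hypothetical helper).

    site_no         -- station number as a string, e.g. "04032000"
    parameter_codes -- list of code strings, e.g. ["00065", "00060"]
    """
    query = urlencode(
        {"site_no": site_no, "PARAmeter_cd": ",".join(parameter_codes)},
        safe=",",  # keep the commas between parameter codes unescaped
    )
    return f"{NWIS_UV_BASE}?{query}"


# Presque Isle River near Tula: gage height (00065) and discharge (00060)
url = nwis_uv_url("04032000", ["00065", "00060"])
print(url)
```

Keeping the station number as a string preserves its leading zero, which an integer would drop.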

Who Uses Stream Gauge Data?

By Janet Thigpen, CFM, Flood Mitigation Specialist, Southern Tier Central Regional Planning & Development Board. Presentation at “Stream Gauges ~ Follow the Flow” Congressional Briefings on May 2, 2014 (hosted by the US Geological Survey).

USGS Streamgauge Network in New York State. The current US Geological Survey (USGS) stream gauge sites in New York State are shown in yellow. This network changes over time as new gauges are added and others are discontinued. Many of the gauges discontinued because of funding difficulties are important sites because they have a long period of record. The red sites on this map represent gauges that have 30 or more years of record but are no longer active. When these gauges were discontinued, the value of that long-term record was lost forever.

I am going to share some examples of how people in my region use stream gauge information, but first let’s look at some data. This shows 45 years of annual high flows for the Vltava River in Prague, Czech Republic (courtesy of Bo Juza, DHI). We would generally consider this to be a pretty good period of record for understanding and analyzing the high flows. If we have 85 years of data, that’s even better. We see that there were some floods during that time. 135 years is more data than we have for any site in the United States. The longest period of record for any gauge in New York State is 111 years.

Streamflow Measurement and Analysis

CHAPTER 6: Streamflow Measurement and Analysis

INTRODUCTION. Streamflow is the primary mechanism by which water moves from upland watersheds to ocean basins. Streamflow is the principal source of water for municipal water supplies, irrigated agricultural production, industrial operations, and recreation. Sediments, nutrients, heavy metals, and other pollutants are also transported downstream in streamflow. Flooding occurs when streamflow discharge exceeds the capacity of the channel. Therefore, streamflow measurements or methods for predicting streamflow characteristics are needed for various purposes. This chapter discusses the measurement of streamflow, methods and models used for estimating streamflow characteristics, and methods of streamflow-frequency analysis.

MEASUREMENT OF STREAMFLOW. Streamflow discharge, the quantity of flow per unit of time, is perhaps the most important hydrologic information needed by a hydrologist and watershed manager. Peak-flow data are needed in planning for flood control and designing engineering structures including bridges and road culverts. Streamflow data during low-flow periods are required to estimate the dependability of water supplies and for assessing water quality conditions for aquatic organisms (see Chapter 11). Total streamflow and its variation must be known for design purposes such as downstream reservoir storage. The stage or height of water in a stream channel can be measured with a staff gauge or water-level recorder at some location on a stream reach. The problem then becomes converting a measurement of the stage of a stream to discharge of the stream. This problem ...

Hydrology and the Management of Watersheds, Fourth Edition. Kenneth N. ...

Stormwater Management Detention Pond Design Within Floodplain Areas

Transportation Research Record 1017, p. 31. PAUL H. SMITH and JACK S. COOK.

ABSTRACT. A unique approach to stormwater management for projects requiring mitigation of additional runoff caused by increases in paved surface areas is presented in this paper. Based on a design project developed for the General Foods Corporate Headquarters site in Rye, New York, a stormwater detention pond has been implemented within the floodplain of an adjacent watercourse. Encroachment of construction activities within a floodplain required the development of a detention pond that was capable of controlling excess runoff from adjacent areas while providing continued floodplain storage volume capacity. This methodology minimized the impacts of flooding on adjacent properties and provided suitable land areas for development in accordance with the intended use of the property. Occurrence of peak flooding along the watercourse did not coincide with peak stormwater runoff conditions from the smaller adjacent drainage area. By utilizing flood hydrograph principles and analyses that were developed by the Soil Conservation Service, U.S. Department of Agriculture, it was possible to develop a detention pond to provide a stormwater management phase and a flood control phase. Computerized analyses were compared for pre- and postdevelopment conditions using stormwater runoff and flood flow data on the basis of storms with return period frequencies of 10, 25, 50, and 100 years. By providing inlet pipes and outlet structures to control detention pond storage, peak flows from the pond to the watercourse and peak flood flows on the watercourse were reduced.

Estimating Design Stream Flows at Road-Stream Crossings

Stream Simulation, Appendix D: Estimating Design Stream Flows at Road-Stream Crossings. Contents: D.1 Introduction; D.2 Design Flow Estimates; D.3 Verifying Flow Estimates at Ungauged Streams.

D.1 INTRODUCTION. Assessing the stability of any crossing structure requires estimating design peak flows for the site. This appendix provides guidance and resources for estimating peak flows at gauged and ungauged sites. It is intended as a desk reference rather than an introduction to hydrologic analysis. Two types of design flows apply to stream-simulation design: (1) structural-design flows, for evaluating the structural integrity and stability of the culvert, bridge, etc., during flood events; and (2) bed-design flows, for evaluating the stability of the particles intended to be permanent inside a drainage structure. Design flows are the flows that, if exceeded, may cause failure of the structure or the bed. The two design flows may be different if the consequences of bed failure are different from those of complete structural failure (see risk discussion in section 6.5.2.1). For example, if the acceptable risk of bed failure is 4 percent in any one year, the bed-design flow would be the flow that is exceeded on average only once every 25 years: the 25-year flow. The acceptable risk of losing the structure might be lower, perhaps only 1 percent per year, in which case the 100-year flow would be the structural-design flow. These design flows are often taken to be the same in real applications, but it is important to understand that design flows are determined based on acceptable risks and consequences.
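The arithmetic behind the example in this excerpt is that the return period is the reciprocal of the acceptable annual exceedance probability. A minimal sketch follows; the function name `return_period_years` is hypothetical and is not taken from the appendix.

```python
def return_period_years(annual_exceedance_probability):
    """Average recurrence interval, in years, for a given annual risk.

    annual_exceedance_probability -- chance (0 < p <= 1) that the design
    flow is exceeded in any one year.
    """
    p = annual_exceedance_probability
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must be in the interval (0, 1]")
    return 1.0 / p


# The two cases from the text: 4 percent and 1 percent annual risk.
bed_design_T = return_period_years(0.04)         # the 25-year flow
structural_design_T = return_period_years(0.01)  # the 100-year flow
print(round(bed_design_T), round(structural_design_T))
```

The sketch only converts an accepted risk into a recurrence interval; estimating the discharge that corresponds to that interval is the subject of the rest of the appendix.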

Manual on Procedures in Operational Hydrology

MINISTRY OF WATER, ENERGY AND MINERALS, TANZANIA, and NORWEGIAN AGENCY FOR INTERNATIONAL DEVELOPMENT (NORAD). MANUAL ON PROCEDURES IN OPERATIONAL HYDROLOGY, VOLUME 1: ESTABLISHMENT OF STREAM GAUGING STATIONS. ØSTEN A. TILREM, 1979. © Norwegian Agency for International Development (NORAD) 1979. Printed by Fotosats Vs - Oslo. Oslo 1979.

PREFACE. This Manual on Procedures in Operational Hydrology has been prepared jointly by the Ministry of Water, Energy and Minerals of Tanzania and the Norwegian Agency for International Development (NORAD). The author is Østen A. Tilrem, senior hydrologist at the Norwegian Water Resources and Electricity Board, who for a period served as the Project Manager of the project Hydrometeorological Survey of Western Tanzania. The Manual consists of five Volumes dealing with: 1. Establishment of Stream Gauging Stations; 2. Operation of Stream Gauging Stations; 3. Stream Discharge Measurements by Current Meter and Relative Salt Dilution; 4. Stage-Discharge Relations at Stream Gauging Stations; 5. Sediment Transport in Streams: Sampling, Analysis and Computation. The author has drawn on many sources for information contained in this Volume and is indebted to these. It is hoped that suitable acknowledgement is made in the form of references to these works. The author would like to thank his colleagues at the Water Resources and Electricity Board for kindly reading and criticising the manuscript. Special credit is due to W. Balaile, Principal Hydrologist at the Ministry of Water, Energy and Minerals of Tanzania, for his review and suggestions.

Measuring River Discharge from Space

Measuring streamflow from space: a choose your own adventure. Tamlin Pavelsky, UNC Department of Geological Sciences.

Why do we care about streamflow? Water resources; flood hazards; transportation; hydropower generation; ecology; understanding the water cycle.

River Discharge Measurement Locations in the Global Runoff Data Centre Database. We don’t have good-quality, current data on rivers for much of the world. Lakes are even more poorly measured.

Measuring River Discharge. Fundamental parameters in river discharge ($Q$, m$^3$/s): depth ($d$), velocity ($v$), and width ($w$), with $Q = wdv$. Each of these variables can also be individually related to discharge: $w = aQ^b$, $d = cQ^f$, $v = kQ^m$. These power-law relationships have been recognized for well over a century but were fully explored by famous hydrologists Luna Leopold and Thomas Maddock in the 1950s. Which path will you choose: width, depth, or velocity?

River Width. Monitoring River Discharge. Traditionally, we monitor discharge on the ground using rating curves between discharge and river depth, $d = cQ^f$ (stream gauge: http://www.dgs.udel.edu/delaware-geology/stream-gages-usgs). Can we monitor discharge via river widths?

Estimating River Discharge From Widths. Smith et al., WRR, 1996: first demonstration of width-discharge measurements from radar satellite images. Ashmore and Sauks, WRR, 2006: demonstration that ground-based optical imagery can be used to estimate discharge. Smith and Pavelsky, WRR, 2008: used daily satellite-derived optical imagery to track river width/discharge.

Estimating River Discharge From Widths. It is possible to estimate discharge from space using the same approach we use on the ground: rating curves. Requirements: on-the-ground discharge training data, ...
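The power-law relations in this excerpt can be sketched numerically. Because discharge is the product of width, depth, and velocity, the coefficients must multiply to 1 and the exponents must sum to 1. The example below is a minimal sketch under that constraint; every coefficient and exponent value in it is hypothetical, chosen only so the constraint holds, and none is fitted to a real river.

```python
import math

# Hypothetical hydraulic-geometry parameters (not from the slides).
A, B = 10.0, 0.26   # width:    w = A * Q**B
C, F = 0.5, 0.40    # depth:    d = C * Q**F
K, M = 0.2, 0.34    # velocity: v = K * Q**M

# Q = w * d * v requires A*C*K == 1 and B + F + M == 1.
assert math.isclose(A * C * K, 1.0) and math.isclose(B + F + M, 1.0)


def width(q):
    return A * q ** B


def depth(q):
    return C * q ** F


def velocity(q):
    return K * q ** M


def discharge_from_width(w):
    """Invert the width rating curve: Q = (w / A) ** (1 / B)."""
    return (w / A) ** (1.0 / B)


q_true = 50.0                      # discharge in m^3/s
w_obs = width(q_true)              # width a sensor might observe, in m
q_est = discharge_from_width(w_obs)
print(f"width {w_obs:.1f} m -> estimated discharge {q_est:.1f} m^3/s")
```

In practice the width coefficient and exponent would be fitted to paired width and discharge observations at a site, which is why the slides list on-the-ground discharge training data as a requirement.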

Spatial and Temporal Variations in Streambed Hydraulic Conductivity Quantified with Time-Series Thermal Methods

Journal of Hydrology 389 (2010) 276–288.

Spatial and temporal variations in streambed hydraulic conductivity quantified with time-series thermal methods. Christine E. Hatch (a), Andrew T. Fisher (a, b), Chris R. Ruehl (a), Greg Stemler (a). (a) Earth and Planetary Sciences Department, University of California, Santa Cruz, E&MS A232, 1156 High Street, Santa Cruz, CA 95064, USA. (b) Institute for Geophysics and Planetary Physics, University of California, 1156 High Street, Santa Cruz, CA 95064, USA.

Article history: Received 27 October 2009; received in revised form 16 March 2010; accepted 28 May 2010. This manuscript was handled by Philippe Baveye, Editor-in-Chief, with the assistance of C. Corradini, Associate Editor.

Summary. We gauged and instrumented an 11.42-km long experimental reach of the Pajaro River, central coastal California, to determine rates of streambed seepage (loss and hyporheic exchange) using reach-averaged and point-specific methods. We used these data to assess changes in streambed hydraulic conductivity with time, as a function of channel discharge and associated changes in sediment scour and deposition. Discharge loss along the experimental reach was generally 0.1–0.3 $\mathrm{m^3\,s^{-1}}$ when channel discharge was $\le 2\ \mathrm{m^3\,s^{-1}}$, with most of the loss occurring along the lower part of the experimental reach. Point-specific seepage rates, determined using time-series analysis of streambed thermal records, indicate seepage rates as great as $-1.4\ \mathrm{m\,day^{-1}}$ (downward into the streambed). Seepage rates varied spatially and with time, with greater seepage occurring along the lower part of the reach and during the summer and fall.

Near Real-Time Runoff Estimation Using Spatially Distributed Radar

Near Real-Time Runoff Estimation Using Spatially Distributed Radar Rainfall Data. Jennifer Hadley, 22 April 2003.

Introduction. Water availability has become a major issue in Texas in the last several years, and the population is expected to double in the next 50 years (Texas Water Development Board, 2000). Thus, there is a need for real-time weather data processing and hydrologic modeling which can provide information useful for planning, flood and drought mitigation, reservoir operation, and watershed and water resource management practices. Traditionally, hydrologic models have used rain gauge networks, which are generally sparse and insufficient to capture the spatial variability across large watersheds and are unable to provide data in real time. Obtaining accurate rainfall data, in particular, is extremely important in hydrologic modeling because rainfall is the driving force in the hydrologic process. NEXRAD radar can provide data for planning and management with spatial and temporal variability in real time over large areas.

Purpose. The objective of this study is to evaluate several variations of the Natural Resource Conservation Service (NRCS, formerly known as the Soil Conservation Service, SCS) curve number (CN) method for estimating near real-time runoff, using high-resolution radar rainfall data for watersheds in various agro-climatic regions of Texas.

Issues with CN Assignment. Hawkins (1998) and Hawkins and Woodward (2002) state that CN tables should be used as guidelines and that actual CNs should be determined based on local and regional data. Price (1998) determined that CN could be variable due to seasonal changes. Ponce and Hawkins (1996) stated that values for initial abstractions (Ia) could be interpreted as a regional parameter to improve runoff estimates.
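The excerpt names the curve number method and the initial abstraction Ia without stating the equations. For orientation, a minimal sketch of the commonly published baseline form (depths in inches) is shown here: potential retention S = 1000/CN - 10, initial abstraction Ia = 0.2 S, and runoff Q = (P - Ia)^2 / (P - Ia + S) when rainfall P exceeds Ia. This is the textbook form, not necessarily any of the variations the study evaluates; the function names and the `ia_ratio` parameter are hypothetical.

```python
def potential_retention_in(cn):
    """Potential maximum retention S in inches for curve number cn."""
    if not 0.0 < cn <= 100.0:
        raise ValueError("curve number must be in the interval (0, 100]")
    return 1000.0 / cn - 10.0


def runoff_depth_in(rainfall_in, cn, ia_ratio=0.2):
    """Direct runoff depth Q in inches for an event rainfall depth P.

    ia_ratio is the initial-abstraction ratio Ia / S; 0.2 is the
    conventional default and is exposed as a parameter because the
    excerpt notes Ia may be treated as a regional parameter.
    """
    s = potential_retention_in(cn)
    ia = ia_ratio * s
    if rainfall_in <= ia:
        return 0.0  # all rainfall is absorbed before runoff begins
    return (rainfall_in - ia) ** 2 / (rainfall_in - ia + s)


# Example: 3 inches of rain on a watershed with CN = 80
q_example = runoff_depth_in(3.0, 80.0)
print(f"runoff depth: {q_example:.2f} in")
```

With gridded radar rainfall, the same function could be applied cell by cell, each cell carrying its own CN.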

Bureau of Land Management Soil, Water, and Air Program Highlights Fiscal Year 2015

Alaska’s Mosquito Fork Wild and Scenic River and fireweed on a nearby hillside. Production services were provided by the BLM National Operations Center’s Information and Publishing Services Section in Denver, Colorado. BLM/WO/GI-17/007+7000

Table of Contents

Introduction

Soil Highlights
BLM and USGS Study Geologic Processes and Sediment Deposition on River Islands – Eastern States
Cooperative Efforts Increase Grass and Forb Cover, Control Cheatgrass, and Reduce Erosion – New Mexico
Land Management Agencies Implement MOU and Make Notable Progress – Nevada
BLM Colorado Continues Badger Wash Study – Colorado
Project Reduces Airborne Particulate Matter (PM10) – Arizona

Water Highlights
BLM and State of Oregon Coordinate on Water Rights Data Entry – Oregon/Washington
Partnership with Trout Unlimited Improves Conditions for Trout in Goodenough Creek – Idaho
BLM Nevada State Office Hosts Interagency Drought Training and Tour – Nevada

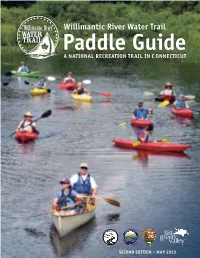

Willimantic River Water Trail Paddle Guide: A National Recreation Trail in Connecticut

Second Edition, May 2013.

Welcome! The Willimantic River Water Trail provides more than 21 miles of paddling enjoyment and challenges between Stafford Springs and Windham, Connecticut, with only one short portage. This guide describes public-access launches and landing areas, as well as river features you will find along the way. Like all streams and rivers, the Willimantic changes character every day, even hourly. While this guide cannot possibly predict your experiences on the water, it does provide basic information and sources for real-time information. The guide encourages you to ask questions, learn from others and make careful decisions before you head out. Ultimately, it’s up to you to make good choices based on the weather, the river, your paddling expertise and equipment. More specific safety tips are suggested below.

What is a Water Trail? It is the combination of a waterway with paddle routes and segments, clearly described in maps and guides, managed for public access and conserved to protect land and water resources, the very resources paddlers come here to enjoy. The Last Green Valley, Inc. is teaming up with paddlers, outfitters and river groups like the Willimantic River Alliance to create water trails on the rivers of the National Heritage Corridor. For more information, or to join The Last Green Valley or the Willimantic River ...

CAUTION: Do not use this guide as your only source of navigational information. Conditions on the river change constantly, sometimes drastically. It is your responsibility to be aware of changing conditions and the abilities of your group so your decisions lead to a safe trip.