Understanding Data Communication from Fundamentals to Networking.Pdf

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Strategic Use of the Internet and E-Commerce: Cisco Systems

Journal of Strategic Information Systems 11 (2002) 5–29 www.elsevier.com/locate/jsis Strategic use of the Internet and e-commerce: Cisco Systems Kenneth L. Kraemer*, Jason Dedrick Graduate School of Management and Center for Research on Information Technology and Organizations, University of California, Irvine, 3200 Berkeley Place, Irvine, CA 92697-4650, USA Accepted 3 October 2001 Abstract Information systems are strategic to the extent that they support a firm's business strategy. Cisco Systems has used the Internet and its own information systems to support its strategy in several ways: (1) to create a business ecology around its technology standards; (2) to coordinate a virtual organization that allows it to concentrate on product innovation while outsourcing other functions; (3) to showcase its own use of the Internet as a marketing tool. Cisco's strategy and execution enabled it to dominate key networking standards and sustain high growth rates throughout the 1990s. In late 2000, however, Cisco's market collapsed and the company was left with billions of dollars in unsold inventory, calling into question the ability of its information systems to help it anticipate and respond effectively to a decline in demand. © 2002 Elsevier Science B.V. All rights reserved. Keywords: Internet; e-commerce; Cisco Systems; Virtual Organization; Business Ecology 1. Introduction Information systems are strategic to the extent that they are used to support or enable different elements of a firm's business strategy (Porter and Millar, 1985). Cisco Systems, the world's largest networking equipment company, has used the Internet, electronic commerce (e-commerce), and information systems as part of its broad strategy of establishing a dominant technology standard in the Internet era. -

N94-22776 the AGRHYMET Data Communications Project

N94-22776 The AGRHYMET Data Communications Project G. R. Mah Hughes STX EROS Data Center Sioux Falls, SD 57198, USA D. P. Salpini USAID/Information Resource Management 1100 Wilson Boulevard Arlington, VA 22209, USA ABSTRACT The U.S. Geological Survey (USGS) and the U.S. Agency for International Development (USAID) are providing technical assistance to the AGRHYMET program in West Africa. AGRHYMET staff use remote sensing technology to produce satellite image maps of the Sahel region of West Africa. These image maps may show vegetation greenness, sea surface temperatures, or processed weather satellite imagery. The image maps must be distributed from the AGRHYMET Regional Center in Niger to national AGRHYMET centers in the member countries of Burkina Faso, Cape Verde, Chad, Gambia, Guinea-Bissau, Mali, Mauritania, Niger, and Senegal. After consideration of a number of land- and space-based solutions for image map ... clude supplying food production advice to government ministries, locust plague prediction and control, and assistance to the Famine Early Warning System program. To accomplish its mission the AGRHYMET program has set up the AGRHYMET regional center (ARC) in Niamey, Niger, and national AGRHYMET centers (NAC) in each of the member nations. A receiving station for satellite images from NOAA's Advanced Very High Resolution Radiometer (AVHRR) instrument was installed at the ARC by the French Government as part of its foreign aid program. The U.S. Agency for International Development (USAID), in cooperation with the U.S. Geological Survey's EROS Data Center (EDC), has set up a system to process the AVHRR data to make image maps that indicate the relative "greenness" of the area. -

Non-IBM Parts Catalog

Non-IBM Parts Catalog Document Number SA38-0041-04 © Copyright IBM Corp. 1989, 1992 Notices This Feature pamphlet is a component of PS/2 Bill of Forms number SB0F-2480-00. Edition Notice Fifth Edition (November 1992) This major revision obsoletes SA38-0041-03. The drawings and specifications contained herein shall not be reproduced in whole or in part without written permission. IBM has prepared this publication for the use of customer engineers in the installation, maintenance, or repair of the specific machines indicated. IBM makes no representations that it is suitable for any other purpose. This publication could include technical inaccuracies or typographical errors. Changes are periodically made to the information herein; these changes will be incorporated in new editions of the publication. IBM may make improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time. Requests for copies of IBM publications should be made to your IBM representative or to the IBM branch office servicing your locality. Address comments concerning the content of this publication to IBM Corporation, Dept. 90A, Bldg. 234-2, Internal Zip 4307, 951 NW 51st St., Boca Raton, Florida, U.S.A. 33432. IBM may use or distribute whatever information you supply in any way it believes appropriate without incurring any obligation to you. © Copyright International Business Machines Corporation 1989, 1992. All rights reserved. -

Digital Radio Technology and Applications

DIGITAL RADIO TECHNOLOGY AND APPLICATIONS Proceedings of an International Workshop organized by the International Development Research Centre, Volunteers in Technical Assistance, and United Nations University, held in Nairobi, Kenya, 24-26 August 1992 Edited by Harun Baiya (VITA, Kenya) David Balson (IDRC, Canada) Gary Garriott (VITA, USA) INTERNATIONAL DEVELOPMENT RESEARCH CENTRE Ottawa Cairo Dakar Johannesburg Montevideo Nairobi New Delhi Singapore Preface The International Workshop on Digital Radio Technology and Applications was a milestone event. For the first time, it brought together many of those using low-cost radio systems for development and humanitarian-based computer communications in Africa and Asia, in both terrestrial and satellite environments. Ten years ago the prospect of seeing all these people in one place to share their experiences was only a far-off dream. At that time no one really had a clue whether there would be interest, funding and expertise available to exploit these technologies for relief and development applications. VITA and IDRC are pleased to have been involved in various capacities in these efforts right from the beginning. As mentioned in VITA's welcome at the Workshop, we can all be proud to have participated in a pioneering effort to bring the benefits of modern information and communications technology to those that most need and deserve it. But now the Workshop is history. We hope that the next ten years will take these technologies beyond the realm of experimentation and demonstration into the mainstream of development strategies and programs. -

Telebit T3000

Telebit T3000 Fast Start Guide 90202-01 Rev. A When connectivity counts. PREFACE This guide is provided to help you quickly install and configure the T3000 modem. If you carefully follow the instructions in this booklet, you may not need to refer to the T3000 Reference Manual unless you have special requirements or need more specific instructions. However, we recommend that you spend some time reviewing that manual for additional information that is not covered in this guide. Copyright © 1991 Telebit Corporation. Telebit is a registered trademark of Telebit Corporation. Other brands or product names are trademarks or registered trademarks of their respective holders. 1 Installation Equipment Checklist Before installing the modem, make sure that you have the following equipment: • An AC power cord and transformer provided with your modem. • The 7-foot telephone cable supplied with your modem. • A shielded RS-232D cable with a DB-25 male connector for the modem. Your computer dealer can assist you in obtaining the appropriate cable for your requirements. • A small flat-blade screwdriver. If you do not have the items listed above, obtain them before proceeding. Connecting the Modem If you will be using the T3000 modem with a minicomputer or mainframe, you may need to first connect it to a terminal (or personal computer with a terminal emulation program) to preconfigure the modem before installing it in its final position. Make sure that the power switch (I/0) on the rear of the modem is turned off (0). Connect the power cord provided with your modem to the POWER connector on the back of the modem. -

1992-Telebit-T3000-V32bis-Modem

TELEBIT T3000 The Industry's Fastest Modem. With transmission speeds of up to 57,600 bps, Telebit has reached a new milestone in V.32bis modem capabilities. This raw speed permits faster file transfers, screen fills, and network connections, and in many applications a dial-up modem may be used to replace a 56K/64K leased line. This can dramatically reduce costs and increase productivity. Fully V.32bis, V.32, V.22bis, V.22, V.23, V.21, and Bell 212/103 compatible. Operates full duplex at 14,400, 12,000, 9600, 7200, 4800, 2400, 1200 and 300 bps. Supports DTE speeds up to 57,600 bps. DISTINCTIVE FEATURES Fastest Throughput. The T3000 is a full duplex modem that combines V.32bis trellis coded modulation with V.42 error control and ... Data Compression. CCITT V.42bis data compression, for modems incorporating V.42 LAP-M, provides compression capabilities of up to 4:1. The T3000 is also downwardly compatible with modems using MNP 5. User Friendly. Equipped with 15 pre-set configurations that are permanently stored in the modem, the T3000 is easily configured for specific user applications. Additionally, the T3000 provides 10 front panel LEDs, which display the status of all major EIA leads. Modem Security. The T3000 protects host computers against unauthorized access with two types of dial-access security. -
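The headline figures in this datasheet excerpt fit together arithmetically: the 14,400 bps V.32bis line rate multiplied by the best-case 4:1 compression ratio gives the 57,600 bps DTE speed. The sketch below works through that calculation. The function name and the 1.5:1 ratio in the second call are illustrative assumptions, not values from the datasheet, and real throughput depends on how compressible the data is.

```python
def effective_throughput_bps(line_rate_bps, compression_ratio, dte_limit_bps):
    """Best-case user data rate for a compressing modem.

    The modem carries line_rate_bps on the phone line; compression lets the
    computer (DTE) side move up to compression_ratio times that much user
    data, capped by the speed of the DTE port itself.
    """
    if line_rate_bps <= 0 or dte_limit_bps <= 0 or compression_ratio < 1:
        raise ValueError("rates must be positive and the ratio at least 1")
    return min(line_rate_bps * compression_ratio, dte_limit_bps)


# Figures quoted in the excerpt: 14,400 bps line rate, up to 4:1 compression,
# DTE speeds up to 57,600 bps.
best_case = effective_throughput_bps(14_400, 4.0, 57_600)

# Hypothetical ratio for data that compresses poorly (assumed, not from the source).
poorly_compressible = effective_throughput_bps(14_400, 1.5, 57_600)
```

With the quoted figures the best case lands exactly on the DTE limit, which suggests why the port speed is specified at four times the fastest line rate.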

Devadas V. Patil

Business Development Trends and Analysis for the Data Networking Market By Devadas V. Patil B.E. Mechanical Engineering, National Institute of Technology, Tiruchirapalli, India, 1990 M.S. Mechanical Engineering, University of Hawaii at Manoa, 1992 M.S. Information and Computer Science, University of Hawaii at Manoa, 1994 SUBMITTED TO MIT SLOAN SCHOOL OF MANAGEMENT AND SCHOOL OF ENGINEERING IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR SYSTEM DESIGN AND MANAGEMENT PROGRAM FOR THE DEGREE OF MASTER OF SCIENCE IN ENGINEERING AND MANAGEMENT AT THE MASSACHUSETTS INSTITUTE OF TECHNOLOGY FEBRUARY 2011 © 2011 Devadas V. Patil. All rights reserved. The author hereby grants to MIT permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in whole or in part in any medium now known or hereafter created. Signature of Author: DEVADAS PATIL System Design and Management Program, December 2010 Certified by: HOWARD ANDERSON, THESIS SUPERVISOR Bill Porter (1967) Distinguished Senior Lecturer of Entrepreneurship Accepted by: PAT HALE Director, System Design and Management Program Business Development Trends and Analysis for the Data Networking Market By Devadas V. Patil Submitted to the System Design and Management Program on December 15, 2010 in Partial Fulfillment of the Requirements for the Degree of Master of Science in Engineering and Management ABSTRACT The Internet has come a long way after the widely reported invention by Sandra Lerner and Leonard Bosack of the router, a device that can transmit data from one network to another based on certain protocols and principles. -

TELEBIT® When Connectivity Counts

Modem Reference Manual for the Telebit T3000 and WorldBlazer Family of Products 90238-01 TELEBIT® When connectivity counts. How to Use This Manual About This Manual This manual is a reference guide for using any of the Telebit T3000 and WorldBlazer modems. It is designed for both new and experienced modem users. To help you install and configure the modem, refer to the User's Guide for your particular modem. It is a separate booklet provided with your modem. If you have special requirements or experience any problems while using your User's Guide, refer to this manual for additional information. Note: Read your User's Guide before reading this reference manual. Whether you are a first-time or experienced user, install your modem using the instructions in your User's Guide. This manual contains six chapters and four appendices: • Chapter 1, Introduction, describes the main features of the modem. • Chapter 2, Modem Operation and Special Features, describes command mode operations, flow control, RS-232 control signal interpretations, error control, data compression, protocol support, and synchronous support. • Chapter 3, AT Command Descriptions, describes the commands and explains the possible parameters, the range of parameters, and the default settings. • Chapter 4, S Register Descriptions, describes the registers used to operate the modem and explains the possible parameters, the range of parameters, and the default settings. • Chapter 5, Troubleshooting, describes the diagnostic tests performed by the modem after it is powered up. -

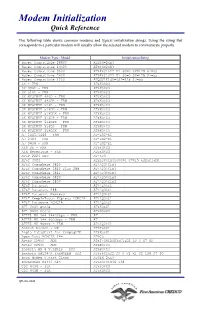

Modem Initialization Quick Reference

The following table shows common modems and typical initialization strings. Using the string that corresponds to a particular modem will usually allow the selected modem to communicate properly.

| Modem Type / Model | Initialization String |
| --- | --- |
| Hayes Compatible 28800 | ATS46=2&K3 |
| Hayes Compatible 14400 | ATS46=2&K3 |
| Hayes Compatible 9600 | AT&F&C1&D2 V1 S0=0 S2=128 S7=55 |
| Hayes Compatible 2400 | AT&F&C1&D2 V1 S0=0 S2=128 S7=55 |
| Hayes Compatible 1200 | ATQ0V1E1S0=0S2=128 S7=55 |
| 3X - FRN | AT&K3%C3 |
| 3X 396D - FRN | AT&K3%C3 |
| 3X 514D - FRN | AT&K3%C3 |
| 3X WYSIWYF 496D - FRN | AT&K3%C3 |
| 3X WYSIWYF 496DB - FRN | AT&K3%C3 |
| 3X WYSIWYF 514D - FRN | AT&K3%C3 |
| 3X WYSIWYF 514DB - FRN | AT&K3%C3 |
| 3X WYSIWYF 514DBX - FRN | AT&K3%C3 |
| 3X WYSIWYF 514DP - FRN | AT&K3%C3 |
| 3X WYSIWYF 514DPX - FRN | AT&K3%C3 |
| 3X WYSIWYF 514DS - FRN | AT&K3%C3 |
| 3X WYSIWYF 514DSX - FRN | AT&K3%C3 |
| AJ 1445/1446 - FRN | AT*LG2*E1 |
| AJ 2423 - FRN | AT*LG2*E1 |
| AJ 9636 - FRN | AT*LG2*E1 |
| ARN DX - FRN | AT&K3%C3 |
| ARN Generique - FRN | AT&K3%C3 |
| AT&T 2224 CEO | AT\\Q3 |
| AT&T 4024 | ATE1Q0V1X4Y0S0=0 S7=15 &D2&C1&M0 |
| AT&T Comsphere 3810 | AT\\Q3%C1H3 |
| AT&T Comsphere 3810 Plus 288 | AT\\Q3%C1H3 |
| AT&T Comsphere 3811 | AT\\Q3%C1H3 |
| AT&T Comsphere 3820 | AT\\Q3%C1H3 |
| AT&T Comsphere 3830 | AT\\Q3%C1H3 |
| AT&T Dataport | AT\\Q3%C1 |
| AT&T Dataport 288 | AT\\Q3%C1 |
| AT&T Dataport Express | AT\\Q3%C1 |
| AT&T KeepInTouch Express PCMCIA | AT\\Q3%C1 |
| AT&T Paradyne PCMCIA | AT\\Q3%C1 |
| ATI 2400 etc/e | AT&K3&U1 |
| ATI 9600 etc/e | AT&K3&U0 |
| ATTEL MX 144_14400bps - FRN | AT |
| ATTEL MX 144_9600bps - FRN | AT |
| ATTEL MX 96xxx - FRN | AT\\Q2%C1 |
| Andest Rocket - UK | AT$F4$C1 |

Angia DataStart for CompaqLTE -
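A quick reference like this is typically consumed as a lookup from modem model to the string sent when the modem is opened. The sketch below shows one way that lookup might work, assuming the usual Hayes convention that a command line ends with a carriage return. The dictionary holds only three entries copied from the list above, and the helper names `init_command` and `s_registers` are hypothetical, not part of any modem or vendor API.

```python
import re

# A few entries copied from the quick reference; a real table would hold them all.
INIT_STRINGS = {
    "Hayes Compatible 28800": "ATS46=2&K3",
    "Hayes Compatible 9600": "AT&F&C1&D2 V1 S0=0 S2=128 S7=55",
    "AT&T 4024": "ATE1Q0V1X4Y0S0=0 S7=15 &D2&C1&M0",
}


def init_command(model):
    """Return the bytes to write to the serial port for the given model.

    Raises KeyError for an unknown model rather than guessing a default.
    """
    return (INIT_STRINGS[model] + "\r").encode("ascii")


def s_registers(init_string):
    """Extract the S-register assignments (Sn=value) from an init string."""
    return {int(reg): int(val) for reg, val in re.findall(r"S(\d+)=(\d+)", init_string)}


command = init_command("Hayes Compatible 28800")
registers = s_registers(INIT_STRINGS["Hayes Compatible 9600"])
```

Pulling the S-register settings out separately makes it easier to compare two strings, for instance to see that the 9600 and 2400 Hayes entries set the same registers.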

Cisco Systems

Cisco Systems By Gediminas Sumyla Company overview Cisco Systems, Inc. is the worldwide leader in networking for the Internet. Today, networks are an essential part of business, education, government and home communications, and Cisco Internet Protocol-based (IP) networking solutions are the foundation of these networks. Cisco hardware, software, and service offerings are used to create Internet solutions that allow individuals, companies, and countries to increase productivity, improve customer satisfaction and strengthen competitive advantage. The Cisco name has become synonymous with the Internet, as well as with the productivity improvements that Internet business solutions provide. Mission and vision “At Cisco, our vision is to change the way people work, live, play and learn” – Cisco The company's mission is to enable people to make powerful connections, whether in business, education, philanthropy, or creativity. Cisco Systems also strove to deliver a wide range of new products, expand its offerings through internal and external efforts, enhance customer support, and increase its presence around the world. Organizations started to recognize the value of their information networks and the Internet as a source of business advantage; all of this drove the company's mission and vision. Cisco saw the need for a multiservice network and was looking for ways to integrate voice, video and data networks. Executive leadership team John T. Chambers - Chairman and Chief Executive Officer Gregory Akers - Senior Vice President -

Cisco Modem User Interface

Cisco Modem User Interface Feature History: Release 12.2(4)T, this feature was introduced. This document describes the Asynchronous Line Monitoring feature in Cisco IOS Release 12.2(4)T. It includes the following sections: • Feature Overview • Supported Platforms • Supported Standards, MIBs, and RFCs • Configuration Tasks • Monitoring and Maintaining the Asynchronous Line Monitoring feature • Configuration Examples • Command Reference • Glossary Feature Overview The Asynchronous Line Monitoring feature enables Cisco routers to behave like a modem and be configured using standard Hayes modem commands. Figure 1 shows a legacy data transfer configuration using X.25, modems, and telephone lines. Americas Headquarters: Cisco Systems, Inc., 170 West Tasman Drive, San Jose, CA 95134-1706 USA [Figure 1: Legacy Data Transfer Equipment (POS terminal, modem, PSTN, modem, host system)] With the Asynchronous Line Monitoring feature, a point of sale (POS) terminal, such as those used by gasoline service stations to charge customers for merchandise and services, can use high-speed Internet connections rather than slow-speed telephone connections to transfer data (see Figure 2). [Figure 2: Data Transfers Using the Asynchronous Line Monitoring feature (POS terminal, client router, Internet, host system)] The user interface to the higher speed connection will not change when the Asynchronous Line Monitoring feature is used; the user interface will still appear as if the connection on the POS terminal is through a modem and a telephone line. Although there is a wide variety of proprietary extensions to the Hayes modem commands, the Asynchronous Line Monitoring feature supports only a subset of the commands. -

TCP/IP and Data Communications Administration Guide

TCP/IP and Data Communications Administration Guide Sun Microsystems, Inc. 2550 Garcia Avenue Mountain View, CA 94043-1100 U.S.A. Part No: 802-5753 August 1997 Copyright 1997 Sun Microsystems, Inc. 901 San Antonio Road, Palo Alto, California 94303-4900 U.S.A. All rights reserved. This product or document is protected by copyright and distributed under licenses restricting its use, copying, distribution, and decompilation. No part of this product or document may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Third-party software, including font technology, is copyrighted and licensed from Sun suppliers. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd. Sun, Sun Microsystems, the Sun logo, SunSoft, SunDocs, SunExpress, and Solaris are trademarks, registered trademarks, or service marks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc. The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. Sun holds a non-exclusive license from Xerox to the Xerox Graphical User Interface, which license also covers Sun’s licensees who implement OPEN LOOK GUIs and otherwise comply with Sun’s written license agreements.