Scalability Evaluation of VPN Technologies for Secure Container Networking

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


FlexGW IPsec VPN Image User Guide

FlexGW IPsec VPN Image User Guide. Zhuyun Information Technology Co., Ltd. www.cloudcare.cn

Contents: 1 Introduction; 1.1 Software Components; 1.2 Login Description; 1.3 Function Description; 1.4 Typical Scenarios Description; 1.5 Program Description; 1.6 Software Operation Command Summary; 2 IPSec Site-to-Site VPN User Guide (VPC network scenario); 2.1 Start IPSec VPN service; 2.2 Add new tunnel

FreeNAS® 11.0 User Guide

FreeNAS® 11.0 User Guide, June 2017 Edition. FreeNAS® is © 2011-2017 iXsystems. FreeNAS® and the FreeNAS® logo are registered trademarks of iXsystems. FreeBSD® is a registered trademark of the FreeBSD Foundation. Written by users of the FreeNAS® network-attached storage operating system. Version 11.0. Copyright © 2011-2017 iXsystems (https://www.ixsystems.com/)

Contents: Welcome; Typographic Conventions; 1 Introduction; 1.1 New Features in 11.0; 1.2 Hardware Recommendations; 1.2.1 RAM; 1.2.2 The Operating System Device; 1.2.3 Storage Disks and Controllers; 1.2.4 Network Interfaces; 1.3 Getting Started with ZFS; 2 Installing and Upgrading; 2.1 Getting FreeNAS®; 2.2 Preparing the Media; 2.2.1 On FreeBSD or Linux; 2.2.2 On Windows; 2.2.3 On OS X; 2.3 Performing the Installation; 2.4 Installation Troubleshooting; 2.5 Upgrading; 2.5.1 Caveats:

Uila Supported Apps

Uila Supported Applications and Protocols, updated Oct 2020

| Application/Protocol Name | Full Description |
| --- | --- |
| 01net.com | 01net website, a French high-tech news site. |
| 050 plus | 050 plus is a Japanese embedded smartphone application dedicated to audio-conferencing. |
| 0zz0.com | 0zz0 is an online solution to store, send and share files |
| 10050.net | China Railcom group web portal. |
| 10086.cn | This protocol plug-in classifies the http traffic to the host 10086.cn. It also classifies the ssl traffic to the Common Name 10086.cn. |
| 104.com | Web site dedicated to job research. |
| 1111.com.tw | Website dedicated to job research in Taiwan. |
| 114la.com | Chinese web portal operated by YLMF Computer Technology Co. |
| 115.com | Chinese cloud storing system of the 115 website. It is operated by YLMF Computer Technology Co. |
| 118114.cn | Chinese booking and reservation portal. |
| 11st.co.kr | Korean shopping website 11st. It is operated by SK Planet Co. |
| 1337x.org | Bittorrent tracker search engine |
| 139mail | 139mail is a chinese webmail powered by China Mobile. |
| 15min.lt | Lithuanian news portal |
| 163.com | Chinese web portal 163. It is operated by NetEase, a company which pioneered the development of Internet in China. |
| 17173.com | Website distributing Chinese games. |
| 17u.com | Chinese online travel booking website. |
| 20minutes | 20 minutes is a free, daily newspaper available in France, Spain and Switzerland. This plugin classifies websites. |
| 24h.com.vn | Vietnamese news portal |
| 24ora.com | Aruban news portal |
| 24sata.hr | Croatian news portal |
| 24SevenOffice | 24SevenOffice is a web-based Enterprise resource planning (ERP) systems. |
| 24ur.com | Slovenian news portal |
| 2ch.net | Japanese adult videos web site |
| 2Shared | 2shared is an online space for sharing and storage. |


Measuring I2P Censorship at a Global Scale (arXiv:1907.07120v1 [cs.CY], 16 Jul 2019)

Measuring I2P Censorship at a Global Scale. Nguyen Phong Hoang (Stony Brook University), Sadie Doreen (The Invisible Internet Project), Michalis Polychronakis (Stony Brook University)

Abstract. The prevalence of Internet censorship has prompted the creation of several measurement platforms for monitoring filtering activities. An important challenge faced by these platforms revolves around the trade-off between depth of measurement and breadth of coverage. In this paper, we present an opportunistic censorship measurement infrastructure built on top of a network of distributed VPN servers run by volunteers, which we used to measure the extent to which the I2P anonymity network is blocked around the world. This infrastructure provides us with not only numerous and geographically diverse vantage points, but also the ability to conduct in-depth measurements across all levels of the network stack.

1 Introduction. ... required flexibility for conducting fine-grained measurements on demand. We demonstrate these benefits by conducting an in-depth investigation of the extent to which the I2P (invisible Internet project) anonymity network is blocked across different countries.

Due to the prevalence of Internet censorship and online surveillance in recent years [7, 34, 62], many pro-privacy and censorship circumvention tools, such as proxy servers, virtual private networks (VPN), and anonymity networks have been developed. Among these tools, Tor [23] (based on onion routing [39, 71]) and I2P [85] (based on garlic routing [24, 25, 33]) are widely used by privacy-conscious and censored users, as they provide a higher level of privacy and anonymity [42]. In response, censors often hinder access to these services ...

Implementation Single Account PDC VPN Based on LDAP

IMPLEMENTATION SINGLE ACCOUNT PDC VPN BASED ON LDAP. Gregorius Hendita Artha Kusuma, Teknik Informatika, Fakultas Teknik, Universitas Pancasila, [email protected]

Abstract. Data is an important asset for a company. Centralized data storage makes it easier for users to access data in the company; the data is stored centrally with a PDC (Primary Domain Controller). Building communication between the head office and a branch office requires a high cost for each connection, and that alone is not enough to ensure the safety and security of the data. Data exchanged between head office and branch office should be kept confidential. A VPN (Virtual Private Network) makes this communication more efficient: not only is the connection affordable, but security and safety are the primary features of a VPN. The services established in the system are integrated using LDAP (Lightweight Directory Access Protocol) to create a single account across services such as the PDC (Primary Domain Controller) and the VPN (Virtual Private Network). The purpose of this final project is to design and implement a centralized data storage system and to build communication between head office and branch office, both integrated with LDAP (Lightweight Directory Access Protocol). Hopefully this system can give more advantages to each network user.

Keywords: PDC, VPN, LDAP, Single Account.

I. Introduction. Centralized data storage makes it easy for users to access data. Many companies need a centralized storage system, because the data is ... previous workstations. To support the performance of its employees, a company of course has a variety of network services, such as ftp, mail server, file sharing, etc. These services of course have their respective accounts.

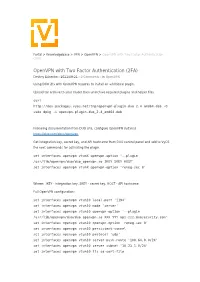

OpenVPN with Two-Factor Authentication (2FA)

Dmitriy Eshenko - 2021-09-21 - in OpenVPN

Using DUO 2FA with OpenVPN requires installing an additional plugin. Upload the tar archive to your router, then unarchive the required plugins and helper files:

    curl http://dev.packages.vyos.net/tmp/openvpn-plugin-duo_2.4_amd64.deb -O
    sudo dpkg -i openvpn-plugin-duo_2.4_amd64.deb

Following the documentation on the DUO site (https://duo.com/docs/openvpn), configure the OpenVPN instance. Get the integration key, secret key, and API hostname from the DUO control panel and add the following commands to VyOS to activate the plugin:

    set interfaces openvpn vtunX openvpn-option '--plugin /usr/lib/openvpn/duo/duo_openvpn.so IKEY SKEY HOST'
    set interfaces openvpn vtunX openvpn-option 'reneg-sec 0'

Where: IKEY - integration key, SKEY - secret key, HOST - API hostname.

Full OpenVPN configuration:

    set interfaces openvpn vtun10 local-port '1194'
    set interfaces openvpn vtun10 mode 'server'
    set interfaces openvpn vtun10 openvpn-option '--plugin /usr/lib/openvpn/duo/duo_openvpn.so XXX YYY api-zzz.duosecurity.com'
    set interfaces openvpn vtun10 openvpn-option 'reneg-sec 0'
    set interfaces openvpn vtun10 persistent-tunnel
    set interfaces openvpn vtun10 protocol 'udp'
    set interfaces openvpn vtun10 server push-route '100.64.0.0/24'
    set interfaces openvpn vtun10 server subnet '10.23.1.0/24'
    set interfaces openvpn vtun10 tls ca-cert-file '/config/auth/ovpn/ca.crt'
    set interfaces openvpn vtun10 tls cert-file '/config/auth/ovpn/central.crt'
    set interfaces openvpn vtun10 tls crl-file '/config/auth/ovpn/crl.pem'
    set interfaces openvpn vtun10 tls dh-file '/config/auth/ovpn/dh.pem'
    set interfaces openvpn vtun10 tls key-file '/config/auth/ovpn/central.key'

How to generate the cryptographic materials is described at the following link: https://support.vyos.io/en/kb/articles/using-easy-rsa-to-generate-x-509-certificates-and-keys -2.
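As a rough illustration of how the IKEY, SKEY and HOST placeholders slot into the two plugin commands quoted in this excerpt, the following Python sketch renders them for a given interface. The function name `duo_openvpn_commands` is hypothetical and not part of VyOS or DUO; it only formats strings and does not talk to a router.

```python
# Hypothetical helper (not a VyOS or DUO API): it only builds the two
# `set` command strings quoted in the excerpt, for copy-and-paste use.
DUO_PLUGIN_PATH = "/usr/lib/openvpn/duo/duo_openvpn.so"  # path as given in the excerpt


def duo_openvpn_commands(interface, ikey, skey, host):
    """Return the two VyOS commands that activate the DUO plugin.

    interface: OpenVPN interface name, e.g. "vtun10"
    ikey, skey, host: integration key, secret key and API hostname
    taken from the DUO control panel
    """
    prefix = f"set interfaces openvpn {interface} openvpn-option"
    return [
        f"{prefix} '--plugin {DUO_PLUGIN_PATH} {ikey} {skey} {host}'",
        f"{prefix} 'reneg-sec 0'",
    ]


if __name__ == "__main__":
    # Placeholder values copied from the excerpt's full configuration.
    for command in duo_openvpn_commands(
        "vtun10", "XXX", "YYY", "api-zzz.duosecurity.com"
    ):
        print(command)
```

Keeping the secret key out of shell history is left to the reader; the sketch simply mirrors the command layout shown in the excerpt.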

Enabling TPM Based System Security Features

Enabling TPM based system security features. Andreas Fuchs <[email protected]>

Who am I? 13 years on/off TPMs. Fraunhofer SIT: Trustworthy Platforms. TCG member: TPM Software Stack WG. Maintainer of tpm2-tss (the libraries), tpm2-tss-engine (the openssl engine), and tpm2-totp (computer-to-user attestation; mjg's tpm-totp reimplemented for 2.0).

The hardware stack. Trusted Platform Module (TPM) 2.0: smartcard-like capabilities but soldered in; remote attestation capabilities; available as a separate chip (LPC, SPI, I²C), in the southbridge / firmware, or via TEEs/TrustZone, etc.; thanks to Windows logos, in every PC. CPU: OS, TSS 2.0, where the fun is...

The TPM Software Stack 2.0. The kernel exposes /dev/tpm0 with byte buffers. tpm2-tss is like the mesa of TCG specs. TCG specifications: the TPM spec for functionality, the TSS spec for the software API. tpm2-tss implements the glue. Then comes core module / application integration: think GDK, but OpenSSL; think godot, but pkcs11; think wayland, but cryptsetup.

The TSS APIs.
System API (sys): 1:1 to TPM2 cmds; Cmd / Rsp serialization; no file I/O; no crypto; no heap / malloc.
Enhanced SYS (esys): automate crypto for HMAC / encrypted sessions; dynamic TCTI loading; memory allocations; no file I/O.
Feature API (FAPI): spec in draft form; TBimplemented; no custom typedefs; JSON interfaces; provides policy language; provides keystore.
TPM Command Transmission Interface (tss2-tcti): abstract command / response mechanism; no crypto, heap, file I/O; decouple APIs

Master Thesis

Master's Programme in Computer Network Engineering, 60 credits. MASTER THESIS: Connect street light control devices in a secure network. Andreas Kostoulas, Efstathios Lykouropoulos, Zainab Jumaa. Network security, 15 credits. Halmstad 2015-02-16

“Connect street light control devices in a secure network”. Master’s Thesis in Computer Network Engineering, 2014. Authors: Andreas Kostoulas, Efstathios Lykouropoulos, Zainab Jumaa. Supervisor: Alexey Vinel. Examiner: Tony Larsson.

Preface. This thesis is submitted in partial fulfilment of the requirements for a Master’s Degree in Computer Network Engineering at the Department of Information Science - Computer and Electrical Engineering, at University of Halmstad, Sweden. The research and implementation described herein was conducted under the supervision of Professor Alexey Vinel and in cooperation with Greinon engineering. This was a challenging trip with both ups and downs, but we were accompanied by an extended team of experts, always willing to coach, sponsor, help and motivate us. For this we would like to thank them. We would like to thank our parents and family for their financial and motivational support, although the distance between us was more than 1500 kilometres. Last but not least, we would like to thank our fellow researchers and friends in our department for useful discussions, comments, suggestions and thoughts, and also for the creative and fun moments we spent together.

Abstract. Wireless communication is a constantly progressing technology in the network engineering community, creating an environment full of opportunities aimed at financial growth, quality of life and human prosperity. Wireless security is the science whose goal is to provide safe data communication between authorized users and to prevent unauthorized users from gaining access, denying access, or damaging or counterfeiting data in a wireless environment.

N2N: a Layer Two Peer-To-Peer VPN

N2N: A Layer Two Peer-to-Peer VPN. Luca Deri (ntop.org, Pisa, Italy), Richard Andrews (Symstream Technologies, Melbourne, Australia). {deri, andrews}@ntop.org

Abstract. The Internet was originally designed as a flat data network delivering a multitude of protocols and services between equal peers. Currently, after an explosive growth fostered by enormous and heterogeneous economic interests, it has become a constrained network severely enforcing client-server communication where addressing plans, packet routing, security policies and users’ reachability are almost entirely managed and limited by access providers. From the user’s perspective, the Internet is not an open transport system, but rather a telephony-like communication medium for content consumption. This paper describes the design and implementation of a new type of peer-to-peer virtual private network that can allow users to overcome some of these limitations. N2N users can create and manage their own secure and geographically distributed overlay network without the need for central administration, typical of most virtual private network systems.

Keywords: Virtual private network, peer-to-peer, network overlay.

1. Motivation and Scope of Work. Irony pervades many pages of history, and computing history is no exception. Once personal computing had won the market battle against mainframe-based computing, the commercial evolution of the Internet in the nineties stepped the computing world back to a substantially rigid client-server scheme. While it is true that today’s Internet serves as a good transport system for supplying a plethora of data interchange services, virtually all of them are delivered by a client-server model, whether they are centralised or distributed, pay-per-use or virtually free [1].

Trinity IoT Product Catalogue

IoT Devices. TRINITY IOT PRODUCT CATALOGUE. Teltonika Device Range 2021

Table of contents: RUT950; RUT955; RUTX09; RUTX11; TRB141; RUT230; RUT240; RUT850; RUT900; GH5200; TRB245; TSW100; TRB140; TRB142; TRB145; RUTX10; RUTX12; RUTX08; TRB255; FMB920; RUTXR1; FM130; BLUE SLIM ID; BLUE COIN MAG; BLUE COIN T

PRODUCT SHEET / RUT950 ROUTER. INTRODUCING RUT950. TRINITY APPROVED & SMART™ COMPATIBLE

RUT950 is a highly reliable and secure LTE router for professional applications. The router delivers high performance, mission-critical cellular communication. RUT950 is equipped with connectivity redundancy through dual SIM failover. External antenna connectors make it possible to attach desired antennas and to easily find the best signal location.

Features: LTE Cat 4 with speeds up to 150 Mbps; Dual SIM – significantly reduce roaming costs; WAN failover – automatic switch to available backup connection; 4x Ethernet ports with VLAN functionality; Wireless Access Point with Hotspot functionality; Linux Powered.

Simply order, and we’ll take care of the rest: Source; Import; Test; ICASA; Management Platform; Onboarding; 24/7 Support. www.trinity.co.za

Connectors: LAN Ethernet ports; WAN Ethernet port; LTE antenna connectors; power socket; WiFi antenna connectors; SIM card slots.

| Hardware | |
| --- | --- |
| Weight | 256 g |
| CPU | Atheros Wasp, MIPS 74Kc, 550 MHz |
| Memory | 16 MBytes Flash, 128 MBytes DDR2 RAM |
| Ethernet | 4 x 10/100 Ethernet ports: 1 x WAN (configurable as LAN), 3 x LAN ports |
| Power supply | 9 |

Applications Log Viewer

Sophos web console, printed 4/1/2017 (https://192.168.110.3:4444/webconsole/webpages/index.jsp#71826). Section: PROTECT > Applications. Tabs: Application List, Application Filter, Traffic Shaping Default.

Application filter policy. Name: Mike App Filter. Description: Based on Block filter avoidance apps. Option: Enable Micro App Discovery.

| Application | Application Filter Criteria | Schedule | Action |
| --- | --- | --- | --- |
| FTPS-Data, FTP-DataTransfer, FTP-Control, FTP Delete Request, FTP Upload Request, FTP Base, FTPS, FTP Download Request | Category = Infrastructure, Netw... Risk = 1-Very Low, 2-Low, 4... Characteristics = Prone to misuse, Tra... Technology = Client Server, Netwo... | All the Time | Allow |
| TeamViewer Conferencing, TeamViewer FileTransfer | Category = File Transfer, Confe... Risk = 3-Medium Characteristics = Excessive Bandwidth,... Technology = Client Server | All the Time | Allow |
| Facebook Applications, Docstoc Website, Facebook Plugin, MySpace Website, MySpace.cn Website, Twitter Website, Facebook Website, Bebo Website, Classmates Website, LinkedIN Compose Webmail, Digg Web Login, Flickr Website, Flickr Web Upload, Friendfeed Web Login, Hootsuite Web Login, Friendster Web Login, Hi5 Website, Facebook Video | | | |

Test-Beds and Guidelines for Securing IoT Products and for Secure Set-Up Production Environments

IoT4CPS – Trustworthy IoT for CPS. FFG - ICT of the Future, Project No. 863129. Deliverable D7.4: Test-beds and guidelines for securing IoT products and for secure set-up production environments

The IoT4CPS Consortium: AIT – Austrian Institute of Technology GmbH; AVL – AVL List GmbH; DUK – Donau-Universität Krems; IFAT – Infineon Technologies Austria AG; JKU – JK Universität Linz / Institute for Pervasive Computing; JR – Joanneum Research Forschungsgesellschaft mbH; NOKIA – Nokia Solutions and Networks Österreich GmbH; NXP – NXP Semiconductors Austria GmbH; SBA – SBA Research GmbH; SRFG – Salzburg Research Forschungsgesellschaft; SCCH – Software Competence Center Hagenberg GmbH; SAGÖ – Siemens AG Österreich; TTTech – TTTech Computertechnik AG; IAIK – TU Graz / Institute for Applied Information Processing and Communications; ITI – TU Graz / Institute for Technical Informatics; TUW – TU Wien / Institute of Computer Engineering; XNET – X-Net Services GmbH

© Copyright 2020, the Members of the IoT4CPS Consortium. For more information on this document or the IoT4CPS project, please contact: Mario Drobics, AIT Austrian Institute of Technology, mario.drobics@ait.ac.at

Dissemination level: PUBLIC

Document Control. Title: Test-beds and guidelines for securing IoT products and for secure set-up production environments. Type: Public. Editor(s): Katharina Kloiber. E-mail: kk@x-net.at. Author(s): Katharina Kloiber, Nikolaus Dürk, Silvio Stern. Reviewer(s): Stephanie von Rüden, Violeta Damjanovic, Leo Happ-Botler. Doc ID: D7.4

Amendment History

| Version | Date | Author | Description/Comments |
| --- | --- | --- | --- |
| V0.1 | 13.01.2020 | Silvio Stern | Technology Analysis |
| V0.2 | 10.03.2020 | Silvio Stern | Possible Research Fields for the SBI-System |
| V0.3 | 31.08.2020 | Katharina Kloiber | Initial version prepared. |