ARM Trustzone-Based Mobile Peripheral Control

Recommended publications

Device Tree 101

Device Tree 101. Organized in partnership with ST, February 9, 2021. Thomas Petazzoni, embedded Linux and kernel engineering, [email protected]. © Copyright 2004-2021, Bootlin. Creative Commons BY-SA 3.0 license. Corrections, suggestions, contributions and translations are welcome! Kernel, drivers and embedded Linux - Development, consulting, training and support - https://bootlin.com
Who is speaking? Thomas Petazzoni; Chief Technical Officer at Bootlin; joined in 2008, employee #1; embedded Linux and Linux kernel engineer, open-source contributor; author of the Device Tree for Dummies talk in 2013/2014; Buildroot co-maintainer; Linux kernel contributor: ≈ 900 contributions; member of the Embedded Linux Conference (Europe) program committee; based in Toulouse, France.
Agenda: Bootlin introduction; STM32MP1 introduction; Why the Device Tree?; Basic Device Tree syntax; Device Tree inheritance; Device Tree specifications and bindings; Device Tree and Linux kernel drivers; Common properties and examples.
Bootlin: in business since 2004; team based in France; serving customers worldwide; 18% revenue from France; 44% revenue from EU except France; 38% revenue outside EU; highly focused and recognized expertise; embedded Linux; Linux kernel; embedded Linux build systems; activities

Devicetree BOF

Devicetree BOF Open Source Summit Japan 2018 Tokyo Frank Rowand, Sony June 20, 2018 180618_0351 My Goal Do NOT show all of the slides Agenda - Past events - Future events - New since elc 2017 (February 2017) - Tools status - dtc compiler - questions, comments, issues, concerns from the crowd - commit statistics Plumbers 2017 Summary September 2017 Los Angeles Was not scheduled -- not enough interest / commitment Devicetree Workshop Oct 2017 Prague, Czech Republic https://elinux.org/Device_tree_future #Kernel_Summit_2017.2C_Devicetree_Workshop - slides - notes === Validation Tools & Schema === Runtime usage === DTS maintenance issues === More stuff Devicetree Workshop Oct 2017 Prague, Czech Republic Devicetree Workshop 2017 9:30 Welcome and Schedule bashing 9:40 Encoding and Schema checking: Framing the problem 9:45 DT YAML encoding overview 10:00 YAML encoding discussion 10:20 DT Schema format - option 1 10:35 DT Schema format - option 2 10:50 DT Schema discussion - what should go in the spec? 11:50 Code Generation from DT 12:10 Runtime memory consumption 14:30 Overlay maintenance plan 14:45 Avoiding duplicate descriptions 15:00 Criteria for accepting board files 15:15 Location for maintaining bindings - how to handle foreign bindings 15:30 Sharing Generic bindings 15:45 ABI Stability 16:00 [break and overflow discussion] 16:30 DT health check 16:50 devicetree.org update 17:05 EBBR Discussion 17:20 Closing and feedback Plumbers 2018 November 13 - 15, 2018 Tuesday - Thursday Vancouver, British Columbia, Canada https://www.linuxplumbersconf.org/event/2/overview -

Introduction to Linux Kernel Driver Programming

Introduction to Linux kernel driver programming: the Linux kernel device model
Authors and license ● Author: Michael Opdenacker ([email protected]), founder of Bootlin, a kernel and embedded Linux engineering company, https://bootlin.com/company/staff/michael-opdenacker ● License: Creative Commons Attribution – Share Alike 4.0, https://creativecommons.org/licenses/by-sa/4.0/ ● Document sources: https://github.com/e-ale/Slides
Need for a device model ● For the same device, need to use the same device driver on multiple CPU architectures (x86, ARM…), even though the hardware controllers are different. ● Need for a single driver to support multiple devices of the same kind. ● This requires a clean organization of the code, with the device drivers separated from the controller drivers, the hardware description separated from the drivers themselves, etc.
Driver: between bus infrastructure and framework. In Linux, a driver always interfaces with: ● a framework that allows the driver to expose the hardware features in a generic way. ● a bus infrastructure, part of the device model, to detect and communicate with the hardware. Let’s focus on the bus infrastructure for now.
Device model data structures. The device model is organized around three main data structures: ● The struct bus_type structure, which represents one type of bus (USB, PCI, I2C, etc.) ● The struct device_driver structure, which represents one driver capable of handling certain devices on a certain bus. ● The struct device structure, which represents one device connected to a bus. The kernel uses inheritance to create more specialized versions of struct device_driver and struct device for each bus subsystem.

Kernel Boot-Time Tracing

Kernel Boot-time Tracing Linux Plumbers Conference 2019 - Tracing Track Masami Hiramatsu <[email protected]> Linaro, Ltd. Speaker Masami Hiramatsu - Working for Linaro and Linaro members - Tech Lead for a Landing team - Maintainer of Kprobes and related tracing features/tools Why Kernel Boot-time Tracing? Debug and analyze boot time errors and performance issues - Measure performance statistics of kernel boot - Analyze driver init failure - Debug boot up process - Continuously tracing from boot time etc. What We Have There are already many ftrace options on kernel command line ● Setup options (trace_options=) ● Output to printk (tp_printk) ● Enable events (trace_events=) ● Enable tracers (ftrace=) ● Filtering (ftrace_filter=,ftrace_notrace=,ftrace_graph_filter=,ftrace_graph_notrace=) ● Add kprobe events (kprobe_events=) ● And other options (alloc_snapshot, traceoff_on_warning, ...) See Documentation/admin-guide/kernel-parameters.txt Example of Kernel Cmdline Parameters In grub.conf linux /boot/vmlinuz-5.1 root=UUID=5a026bbb-6a58-4c23-9814-5b1c99b82338 ro quiet splash tp_printk trace_options=”sym-addr” trace_clock=global ftrace_dump_on_oops trace_buf_size=1M trace_event=”initcall:*,irq:*,exceptions:*” kprobe_event=”p:kprobes/myevent foofunction $arg1 $arg2;p:kprobes/myevent2 barfunction %ax” What Issues? Size limitation ● kernel cmdline size is small (< 256bytes) ● A half of the cmdline is used for normal boot Only partial features supported ● ftrace has too complex features for single command line ● per-event filters/actions, instances, histograms. Solutions? 1. Use initramfs - Too late for kernel boot time tracing 2. Expand kernel cmdline - It is not easy to write down complex tracing options on bootloader (Single line options is too simple) 3. Reuse structured boot time data (Devicetree) - Well documented, structured data -> V1 & V2 series based on this. Boot-time Trace: V1 and V2 series V1 and V2 series posted at June. -

Devicetree Specification

Devicetree Specification, Release unknown-rev, devicetree.org, 13 September 2021
Contents
1 Introduction
1.1 Purpose and Scope
1.2 Relationship to IEEE™ 1275 and ePAPR
1.3 32-bit and 64-bit Support
1.4 Definition of Terms
2 The Devicetree
2.1 Overview
2.2 Devicetree Structure and Conventions
2.2.1 Node Names
2.2.2 Generic Names Recommendation
2.2.3 Path Names
2.2.4 Properties
2.3 Standard Properties
2.3.1 compatible
2.3.2 model
2.3.3 phandle
2.3.4 status
2.3.5 #address-cells and #size-cells
2.3.6 reg
2.3.7 virtual-reg
2.3.8 ranges
2.3.9 dma-ranges
2.3.10 dma-coherent

Device Tree the Disaster So Far

Device Tree: The Disaster so Far. Mark Rutland <[email protected]>, ELC Europe 2013.
Agenda. Disaster is a strong word. Let's talk about: what was wrong with board files; what device tree is (and what it isn't); the ARM conversion so far; the problems we have, and how to fix them; what we need to do in future.
Where we came from. Two big problems: hard-coded board description; kernel must know every possible configuration; minor revisions require a new kernel; separate kernels per platform; uncoordinated ("stepping on each other's toes"); difficult to test; painful for distributions. Planned solution: single image; dynamic configuration; move board description out of the kernel.
Device Tree: Overview. A data structure for describing hardware; defined by OpenFirmware (IEEE1275-1994); extended by ePAPR and other supplements; handled by OpenFirmware, U-Boot, ...; used by *BSD, Linux, OS X, Solaris, Xen; used on ARC, ARM(64), C6X, META, MicroBlaze, MIPS, OpenRISC, PowerPC, SPARC, x86, Xtensa; generated by KVM tool, Xen, others.
Device Tree: Overview. Declarative hardware description; describes hardware topology; format not tied to OS internals; hierarchical key value(-list); just a data structure; conventions, not rigid rules. Bindings: conventions for describing particular devices; typically matched by compatible string; device classes share common bindings. No central authority: bindings created by users; no coordination of implementors.
Device Tree: Bindings. Vendor dev2000 bindings ======================= The Vendor dev2000 is a memory-mapped device that may or may not do something useful.

EXTENDING the LIFECYCLE of IOT DEVICES USING SELECTIVE DEACTIVATION a Dissertation Presented to the Academic Faculty by Michael

EXTENDING THE LIFECYCLE OF IOT DEVICES USING SELECTIVE DEACTIVATION. A Dissertation Presented to The Academic Faculty by Michael Hesse, in Partial Fulfillment of the Requirements for the Degree Master of Science in the School of Computer Science, Georgia Institute of Technology, August 2020. Copyright © Michael Hesse 2020.
Approved by: Professor Taesoo Kim, Advisor, School of Computer Science, Georgia Institute of Technology; Professor Brendan Saltaformaggio, School of Electrical and Computer Engineering, Georgia Institute of Technology; Professor Mustaque Ahmad, School of Computer Science, Georgia Institute of Technology. Date Approved: April 29, 2020.
ACKNOWLEDGEMENTS. I would like to thank my advisor Taesoo Kim and the other members of the committee, Brendan Saltaformaggio and Mustaque Ahmad, for their time, patience and guidance, as this thesis would not have been possible without them. I would further like to thank Meng Xu and Fan San for their continued support and feedback during the course of my research. Ranjani and Sundar, thank you for emotional support and interest in my work. Finally, I would like to thank my parents and my sister for all the love and support they continue to provide throughout my entire life.
TABLE OF CONTENTS
Acknowledgments
List of Tables
List of Figures
Nomenclature
Chapter 1: Introduction
1.1 Problem Description
1.2 Goal
1.3 Assumptions & Threat Model
1.4 Security Properties
1.5 Challenges
1.6 Thesis Overview
Chapter 2: Background
2.1 Platform Architecture
2.2 Hardware Assisted Security Mechanisms

Create Platform Device for Node

devicetree: kernel internals and practical troubleshooting. There have been many presentations on what a devicetree looks like and how to create a devicetree. This talk instead examines how the Linux kernel uses a devicetree. Topics include the kernel devicetree framework, device creation, resource allocation, driver binding, and connecting objects. Troubleshooting will consider initialization, allocation, and binding ordering; kernel configuration; and driver problems. Frank Rowand, Sony Mobile Communications, August 22, 2014 (140821_1927).
CAUTION: The material covered in this presentation is kernel version specific. Most information describes 3.16 or earlier. In cases where arch specific code is involved, there will be a bias to looking at arch/arm/.
Chapter 1: Device tree. What is device tree? “A device tree is a tree data structure with nodes that describe the devices in a system. Each node has property/value pairs that describe the characteristics of the device being represented. Each node has exactly one parent except for the root node, which has no parent.” (ePAPR v1.1) A device tree describes hardware that cannot be located by probing.
DT data life cycle (source) .dts .dts - device tree source file / { /* incomplete .dts example */ model

A Tale of Reusability in Coreboot

A tale of reusability in coreboot. Furquan Shaikh.
Table of contents: Introduction to Chrome OS and Chrome OS Platform Model; Objectives; SSDT generation; Baseboard and Variant Structure; Override devicetree; Current challenges.
Once upon a time...
What is Chrome OS? [Diagram: Chrome; Linux kernel; depthcharge; libraries (e.g. vboot); coreboot; blobs, third-party f/w, FSP; AARCH64, ARM32, X86, ...]
What does Chrome OS platform look like? [Diagram: the same software stack on the application processor, plus peripherals: keyboard, TCPC, EC, touchscreen, trackpad, camera, PMIC, TPM, audio, controllers]
Traditional Chrome OS Platform Model. [Diagram: Google reference platform; OEM #1 lead device; OEM #2 to OEM #5 follower devices]
Traditional Chrome OS Platform Model. [Diagram: reference platform glados; lead device chell; follower devices caroline, cave]
Traditional Chrome OS Platform Model. [Diagram: reference platform reef; lead device reef; follower devices nasher, pyro, sand, snappy]
Traditional ‸ Chrome OS Platform Model. [Diagram: Google reference platform reef; coral; OEM #1 to OEM #4 devices: astronaut, robo, santa, nasher, ...]
Traditional ‸ Chrome OS Platform Model. [Diagram: Google reference platform octopus; yorp, bip; OEM #1 to OEM #4 devices: phaser, meep, fleex, bobba, ...]

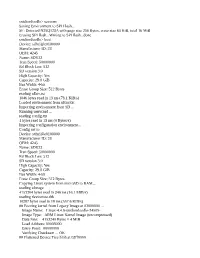

Snickerdoodle> Saveenv Saving Environment to SPI Flash... SF

snickerdoodle> saveenv Saving Environment to SPI Flash... SF: Detected N25Q128A with page size 256 Bytes, erase size 64 KiB, total 16 MiB Erasing SPI flash...Writing to SPI flash...done snickerdoodle> boot Device: sdhci@e0100000 Manufacturer ID: 28 OEM: 4245 Name: SDU32 Tran Speed: 50000000 Rd Block Len: 512 SD version 3.0 High Capacity: Yes Capacity: 29.8 GiB Bus Width: 4-bit Erase Group Size: 512 Bytes reading uEnv.txt 1046 bytes read in 13 ms (78.1 KiB/s) Loaded environment from uEnv.txt Importing environment from SD ... Running uenvcmd ... reading config.txt 1 bytes read in 13 ms (0 Bytes/s) Importing configuration environment... Config set to Device: sdhci@e0100000 Manufacturer ID: 28 OEM: 4245 Name: SDU32 Tran Speed: 50000000 Rd Block Len: 512 SD version 3.0 High Capacity: Yes Capacity: 29.8 GiB Bus Width: 4-bit Erase Group Size: 512 Bytes Copying Linux system from microSD to RAM... reading uImage 4153304 bytes read in 246 ms (16.1 MiB/s) reading devicetree.dtb 10287 bytes read in 18 ms (557.6 KiB/s) ## Booting kernel from Legacy Image at 03000000 ... Image Name: Linux-4.4.0-snickerdoodle-34505- Image Type: ARM Linux Kernel Image (uncompressed) Data Size: 4153240 Bytes = 4 MiB Load Address: 00008000 Entry Point: 00008000 Verifying Checksum ... OK ## Flattened Device Tree blob at 02ff0000 Booting using the fdt blob at 0x2ff0000 Loading Kernel Image ... OK Loading Device Tree to 1fffa000, end 1ffff82e ... OK Starting kernel ... 
[ 0.000000] Booting Linux on physical CPU 0x0 [ 0.000000] Linux version 4.4.0-snickerdoodle-34505-gcd2401c (russellbush@ub6 [ 0.000000] CPU: ARMv7 Processor [413fc090] revision 0 (ARMv7), cr=18c5387d [ 0.000000] CPU: PIPT / VIPT nonaliasing data cache, VIPT aliasing instructie [ 0.000000] Machine model: snickerdoodle [ 0.000000] cma: Reserved 16 MiB at 0x3f000000 [ 0.000000] Memory policy: Data cache writealloc [ 0.000000] PERCPU: Embedded 12 pages/cpu @ef7d4000 s19072 r8192 d21888 u4912 [ 0.000000] Built 1 zonelists in Zone order, mobility grouping on. -

Device Drivers

Device Drivers. Prof. Stephen A. Edwards, Columbia University, Spring 2016.
Linux Operating System Structure. [Diagram, top to bottom: Applications (function calls down, callbacks up); Libraries (system calls down, signals up); The Kernel: processes, scheduling, networking, memory management, file systems, device drivers (iowrite32(), etc. down, interrupts up); Hardware: busses, memory, peripherals]
User Space vs. Kernel Space. Process abstraction central to most OSes. Independent PC, registers, and memory. Virtual memory hardware isolates processes, OS. Processes run in limited-resource “user mode”. Bug in a process takes down the process only. Kernel runs in “supervisor mode” with no access limitations. Bugs in kernel code take down the whole system.
Unix Device Driver Model. “Everything is a file”. By convention, special “device” files stored in /dev. Created by the mknod command or dynamically.
$ ls -Ggl --time-style=+ /dev/sd{a,a1,a2,b} /dev/tty{,1,2} /dev/ttyUSB0
brw-rw---- 1 8, 0 /dev/sda (first SCSI drive)
brw-rw---- 1 8, 1 /dev/sda1 (first partition of first SCSI drive)
brw-rw---- 1 8, 2 /dev/sda2
brw-rw---- 1 8, 16 /dev/sdb (second SCSI drive)
crw-rw-rw- 1 5, 0 /dev/tty (current terminal)
crw-rw---- 1 4, 1 /dev/tty1
crw-rw---- 1 4, 2 /dev/tty2 (second terminal)
crw-rw---- 1 188, 0 /dev/ttyUSB0 (first USB terminal)
[Column annotations: leading b = block device, leading c = character device; owner, group and world permissions; major device number; minor device number] https://www.cs.columbia.edu/~smb/classes/s06-4118/l23.pdf
/proc/devices. Virtual file with a list of device drivers by major number. $ cat /proc/devices Character devices: 4 /dev/vc/0 4 tty 4 ttyS 5

Devicetree BOF

Devicetree BOF ELCE 2018 Edinburgh, UK Frank Rowand, Sony October 24, 2018 181021_1535 My Goal Do NOT show all of the slides Agenda - Collect questions, areas of interest - Past events - Future events - New since elc 2017 - dtc compiler update - Overlay update - questions, comments, issues, concerns from the crowd What do you want to talk about? questions comments issues concerns Plumbers 2017 Summary September 2017 Los Angeles Was not scheduled -- not enough interest / commitment Devicetree Workshop Oct 2017 Prague, Czech Republic after elce Devicetree Workshop Oct 2017 Prague, Czech Republic https://elinux.org/Device_tree_future #Kernel_Summit_2017.2C_Devicetree_Workshop - slides - notes Main topic areas: === Validation Tools & Schema === Runtime usage === DTS maintenance issues === More stuff Devicetree Workshop 2017 9:30 Welcome and Schedule bashing 9:40 Encoding and Schema checking: Framing the problem 9:45 DT YAML encoding overview 10:00 YAML encoding discussion 10:20 DT Schema format - option 1 10:35 DT Schema format - option 2 10:50 DT Schema discussion - what should go in the spec? 11:50 Code Generation from DT 12:10 Runtime memory consumption 14:30 Overlay maintenance plan 14:45 Avoiding duplicate descriptions 15:00 Criteria for accepting board files 15:15 Location for maintaining bindings - how to handle foreign bindings 15:30 Sharing Generic bindings 15:45 ABI Stability 16:00 [break and overflow discussion] 16:30 DT health check 16:50 devicetree.org update 17:05 EBBR Discussion 17:20 Closing and feedback Plumbers 2018 November