Unix Memos Collection

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


LS-09EN. OS Permissions. SUID/SGID/Sticky. Extended Attributes

Operating Systems LS-09. OS Permissions. SUID/SGID/Sticky. Extended Attributes. (Operating System Concepts, ys©2019)

Linux/UNIX Security Basics: Agenda
• UID
• GID
• Superuser
• File Permissions
• Umask
• RUID/EUID, RGID/EGID
• SUID, SGID, Sticky bits
• File Extended Attributes
• Mount/umount
• Windows Permissions
• File Systems Restriction

Domain Implementation in Linux/UNIX
• Two types of domain (subject) groups:
  • User Domains = User ID (UID>0) or User Group ID (GID>0)
  • Superuser Domains = Root ID (UID=0) or Root Group ID (root can do everything, GID=0)
• Domain switch is accomplished via the file system.
• Each file has associated with it a domain bit (SetUID bit = SUID bit).
• When a file is executed and SUID=on, the Effective UID is set to the owner of the file being executed. When execution completes, the Effective UID is reset to the Real UID.
• Each subject (process) and object (file, socket, etc.) has a 16-bit UID.
• Each object also has a 16-bit GID, and each subject has one or more GIDs.
• Objects have access control lists that specify read, write, and execute permissions for user, group, and world.

Subjects and Objects
Subjects = processes (Effective UID, EGID). Objects = files (regular, directory, devices /dev, ram /proc).
• RUID (EUID): Owner permissions (UID)
• RGID-main (EGID) + RGID-list: Group Owner permissions (GID)
• Others RUID, RGID: Others ID permissions

The Superuser (root)
• Almost every Unix system comes with a special user in the /etc/passwd file with a UID=0. This user is known as the superuser and is normally given the username root.
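The SUID, SGID, and sticky bits named in the agenda above are stored in a file's mode word next to the ordinary read/write/execute bits. The following is a minimal sketch, not taken from the slides, of how those bits could be inspected with Python's standard `stat` module; the helper name `special_bits` and the sample mode values are assumptions chosen for illustration.

```python
import stat


def special_bits(mode: int) -> dict:
    """Report which special permission bits are set in a mode word.

    `mode` is an st_mode value such as the one returned by os.stat().
    (Hypothetical helper, for illustration only.)
    """
    return {
        "suid": bool(mode & stat.S_ISUID),    # set-user-ID on execution
        "sgid": bool(mode & stat.S_ISGID),    # set-group-ID on execution
        "sticky": bool(mode & stat.S_ISVTX),  # sticky bit
    }


# Sample mode words (assumed values, not read from a real file system):
suid_program = stat.S_IFREG | 0o4755   # regular file, rwsr-xr-x
shared_dir = stat.S_IFDIR | 0o1777     # directory, rwxrwxrwt

print(stat.filemode(suid_program), special_bits(suid_program))
print(stat.filemode(shared_dir), special_bits(shared_dir))
```

For a real file, the same helper could be applied to `os.stat(path).st_mode`. Whether the kernel actually honors the SUID bit at execution time also depends on mount options and file type, which this sketch does not model.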

Hacker Public Radio

hpr0001 :: Introduction to HPR. Aired on 2007-12-31 and hosted by StankDawg. StankDawg and Enigma talk about what HPR is and how someone can contribute.
hpr0002 :: Customization the Lost Reason. Aired on 2008-01-01 and hosted by deepgeek. deepgeek talks about Customization being the lost reason in switching from windows to linux. Customization docdroppers article.
hpr0003 :: Lost Haycon Audio. Aired on 2008-01-02 and hosted by Morgellon. Morgellon and others traipse around in the woods geocaching at midnight.
hpr0004 :: Firefox Profiles. Aired on 2008-01-03 and hosted by Peter. Peter explains how to move firefox profiles from machine to machine.
hpr0005 :: Database 101 Part 1. Aired on 2008-01-06 and hosted by StankDawg as part of the Database 101 series. 1st part of the Database 101 series with Stankdawg.
hpr0006 :: Part 15 Broadcasting. Aired on 2008-01-08 and hosted by dosman. dosman and zach from the packetsniffers talk about Part 15 Broadcasting. Part 15 broadcasting resources; SSTRAN AMT3000 part 15 transmitter.
hpr0007 :: Orwell Rolled over in his grave. Aired on 2008-01-09 and hosted by deepgeek. deepgeek reviews a film.
hpr0008 :: Asus EePC. Aired on 2008-01-10 and hosted by Mubix. Mubix and Redanthrax discuss the EEpc.
hpr0009 :: This old Hack 4. Aired on 2008-01-10 and hosted by fawkesfyre as part of the This Old Hack series. Part 4 of the series this old hack.
hpr0010 :: The Linux Boot Process Part 1. Aired on 2008-01-13 and hosted by Dann as part of the The Linux Boot Process series.
hpr0011 :: dd_rhelp
hpr0012 :: Xen

Easy Slackware

1 Building a lightweight system based on Slackware

I - Introduction

Slackware enjoys well-deserved popularity as a classic Linux distribution, and the saying "he who knows Red Hat knows only Red Hat; he who knows Slackware knows Linux", despite the obvious snobbery of the worshippers of "god Patrick", does have some basis. One of Slackware's advantages is that practically any system can easily be built on top of it, including a fast and light desktop system, which is the subject of what follows. There are distributions, Slackware clones, created for exactly this purpose, such as Absolute, but it is still better to build a system for yourself, taking your own needs into account as fully as possible, and Slackware is perhaps better suited to this than any other distribution.

How light and fast a system is depends on the choice of WM (DM), the set of programs, and the optimization of the programs and of the system as a whole. The first rules out KDE, Gnome, and even recent versions of XFCE; that leaves perhaps only LXDE, but its set of programs is entirely unsatisfactory. The most frequently used programs and a few basic system packages are optimized by building them from source with a compiler tuned for your particular machine, with each program configured according to what you need from it. The system as a whole is optimized by configuring it for the specific requirements of a desktop.

This approach was chosen for a banal reason: there is no desire whatsoever to fiddle with Gentoo. A computer is, after all, made to be used, not to compile programs. At the same time, everyone has a minimal set of a few most frequently used programs on which it is worth spending some, not so very long, time to get them right. In addition, this approach makes it possible to have the latest versions of the most frequently used programs.

Sistemas De Archivos

File systems (Sistemas de archivos). Gunnar Wolf (IIEc-UNAM), Esteban Ruiz (CIFASIS-UNR), Federico Bergero (CIFASIS-UNR), Erwin Meza (UNICAUCA)

Contents
1. Laying out the structure on the device
1.1. Concepts for organization
1.2. Different file systems
1.3. The volume
1.4. The directory and the i-nodes
1.5. Compression and deduplication
2. Space allocation schemes
2.1. Contiguous allocation
2.2. Linked allocation
2.3. Indexed allocation
2.4. The tables in FAT
3. Failures and recovery
3.1. Data and metadata
3.2. Integrity verification
3.3. Soft updates
3.4. Journaling file systems
3.5. Log-structured file systems
4. Other resources

1. Laying out the structure on the device

Throughout chapter ?? the elements of the file system were presented as they appear to the end user, without going into detail about how the operating system organizes all this information on a persistent device. We mentioned some basic structures, but left them explicitly pending definition. This chapter covers the main structures and mechanisms employed

Facultad De Ingeniería Estadística E Informática

UNIVERSIDAD NACIONAL DEL ALTIPLANO. Faculty of Statistical and Computer Engineering. Professional School of Statistical and Computer Engineering.

THESIS: "Customization of the GNU/Linux Ubuntu 13 operating system to improve satellite Internet access with Gilat, Juliaca - 2013". Presented by: Nelly Yudith Zapana Mamani, to obtain the professional title of Statistical and Computer Engineer. Puno, Peru, 2014.

Presented to the Research Coordination of the Faculty of Statistical and Computer Engineering of the Universidad Nacional del Altiplano - Puno, to obtain the title of Statistical and Computer Engineer. Approved by the jury: President: First Member: Second Member: M.Sc. Jhon Richard Huanca Suaquita Director: Advisor: Ing. Ramiro Pedro Laura Murillo

DEDICATIONS

I dedicate this work to God, who has blessed me with wisdom, love and patience, who helped me in the most difficult moments by giving me courage, and who thereby strengthened me at all times. With love, admiration and respect to my mother, Irene Mamani Ojeda, for her immense love, her sacrifice, and her constant, unconditional support in my human and professional development. To my family, for being a constant in my life, for always being present with their advice, and for accompanying me so that I could fulfill myself. To life, which certainly gives us what we sow in it. Nelly Zapana…

ACKNOWLEDGEMENTS

To God, for everything he has given me; to my mother Irene, who contributed with her presence and love.

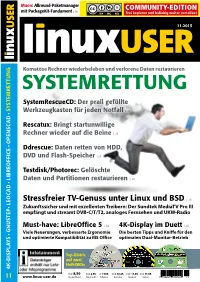

Systemrettung

COMMUNITY EDITION, issue 11.2015. Free to copy and redistribute. www.linux-user.de

SYSTEM RESCUE (SYSTEMRETTUNG): reviving comatose computers and restoring lost data
• SystemRescueCD: the fully stocked toolbox for every emergency, p. 22
• Rescatux: gets computers that refuse to boot back on their feet, p. 28
• Ddrescue: rescuing data from HDD, DVD and flash storage, p. 34
• Testdisk/Photorec: restoring deleted data and partitions, p. 40

Also in this issue
• Muon: all-round package manager with a PackageKit foundation, p. 70
• LeoCAD: constructing Lego models on screen, p. 46
• 20 years of GNUstep: a veteran desktop for pragmatists, p. 16
• Stress-free TV under Linux and BSD, p. 80. Future-proof and with excellent drivers: the Sundtek MediaTV Pro III receives and streams DVB-C/T/T2, analog television and FM radio
• Must-have: LibreOffice 5, p. 64. Many new features, improved ergonomics and optimized compatibility with MS Office
• 4K displays as a duo, p. 60. The best tips and tricks for optimal dual-monitor operation
• OpenSCAD; source code management
• Top distros on two cover DVDs (2 DVD-10)

Prices: Germany EUR 8,50; Austria EUR 9,35; Switzerland sfr 17,00; Benelux EUR 10,85; Spain EUR 11,05; Italy EUR 11,05

Editorial: Apocalypse not

Dear readers, one has to try very hard to outrank, in media terms, the rush of hundreds of thousands of refugees to Central Europe, yet VW managed it effortlessly with its "Dieselgate". [...] and drag numerous car dealerships into the crisis, but also supposedly uninvolved parties. Ultimately we are all affected anyway: by the additional dirt,

Beets Documentation Release 1.5.1

beets Documentation, Release 1.5.1. Adrian Sampson. Oct 01, 2021

Contents
1 Contents
1.1 Guides
1.2 Reference
1.3 Plugins
1.4 FAQ
1.5 Contributing
1.6 For Developers
1.7 Changelog
Index

Welcome to the documentation for beets, the media library management system for obsessive music geeks. If you’re new to beets, begin with the Getting Started guide. That guide walks you through installing beets, setting it up how you like it, and starting to build your music library. Then you can get a more detailed look at beets’ features in the Command-Line Interface and Configuration references. You might also be interested in exploring the plugins. If you still need help, you can drop by the #beets IRC channel on Libera.Chat, drop by the discussion board, send email to the mailing list, or file a bug in the issue tracker. Please let us know where you think this documentation can be improved.

CHAPTER 1: Contents

1.1 Guides

This section contains a couple of walkthroughs that will help you get familiar with beets. If you’re new to beets, you’ll want to begin with the Getting Started guide.

1.1.1 Getting Started

Welcome to beets! This guide will help you begin using it to make your music collection better.

Installing

You will need Python. Beets works on Python 3.6 or later.
• macOS 11 (Big Sur) includes Python 3.8 out of the box.

Wikipedia: Design of the FAT File System

Design of the FAT file system

A FAT file system is a specific type of computer file system architecture and a family of industry-standard file systems utilizing it. The FAT file system is a legacy file system which is simple and robust.[3] It offers good performance even in very light-weight implementations, but cannot deliver the same performance, reliability and scalability as some modern file systems. It is, however, supported for compatibility reasons by nearly all currently developed operating systems for personal computers and many home computers, mobile devices and embedded systems, and thus is a well suited format for data exchange between computers and devices of almost any type and age from 1981 through the present.

Originally designed in 1977 for use on floppy disks, FAT was soon adapted and used almost universally on hard disks throughout the DOS and Windows 9x eras for two decades. Today, FAT file systems are still commonly found on floppy disks, USB sticks, flash and other solid-state memory cards and modules, and many portable and embedded devices. DCF implements FAT as the standard file system for digital cameras since 1998.[4] FAT is also utilized for the EFI system partition (partition type 0xEF) in the boot stage of EFI-compliant computers.

For floppy disks, FAT has been standardized as ECMA-107[5] and ISO/IEC 9293:1994[6] (superseding ISO 9293:1987[7]).

FAT (infobox)
Developer(s): Microsoft, SCP, IBM, Compaq, Digital Research, Novell, Caldera
Full name: File Allocation Table: FAT12 (12-bit version), FAT16 (16-bit versions), FAT32 (32-bit version with 28 bits used), exFAT (64-bit versions)
Introduced: 1977 (Standalone Disk BASIC-80); FAT12: August 1980 (SCP QDOS); FAT16: August 1984 (IBM PC DOS 3.0)
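The note that FAT32 is a "32-bit version with 28 bits used" can be made concrete with a small sketch. It assumes the usual FAT32 convention that the upper four bits of each 32-bit table entry are reserved and that values of 0x0FFFFFF8 and above mark the end of a cluster chain; the function and constant names are hypothetical and not taken from the excerpt.

```python
# Only the low 28 bits of a FAT32 table entry carry the cluster value;
# the upper 4 bits are reserved and must be ignored when reading.
FAT32_ENTRY_MASK = 0x0FFFFFFF
# Masked values at or above this threshold mark the end of a cluster chain.
FAT32_EOC_MIN = 0x0FFFFFF8


def fat32_cluster_value(raw_entry: int) -> int:
    """Strip the reserved upper 4 bits from a raw 32-bit FAT entry."""
    return raw_entry & FAT32_ENTRY_MASK


def is_end_of_chain(raw_entry: int) -> bool:
    """True if the entry marks the last cluster of a file's chain."""
    return fat32_cluster_value(raw_entry) >= FAT32_EOC_MIN


# A raw entry whose reserved bits happen to be set still refers to cluster 5.
print(hex(fat32_cluster_value(0xF0000005)))
print(is_end_of_chain(0x0FFFFFFF))
```

Masking on every read is what lets an implementation tolerate nonzero reserved bits written by another system.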

I. ELECTRONIC DISCOVERY: DEFINITIONS and USES A. What

I. ELECTRONIC DISCOVERY: DEFINITIONS AND USES

A. What Is Electronic Discovery?

Electronic discovery includes requests for and production of information that is stored in digital form.1 In short, electronic discovery is the discovery of electronic documents and data.2 Electronic documents include virtually anything that is stored on a computer such as e-mail, web pages, word processing files, and computer databases.3 Electronic records can be found on a wide variety of devices such as desktop and laptop computers, network servers, personal digital assistants and digital phones.4 Documents and data are "electronic" if they exist in a medium that can only be read by using computers such as cache memory, magnetic disks (for example computer hard drives or floppy disks), optical disks (for example DVDs or CDs), and magnetic tapes.5 Electronic discovery is frequently distinguished from traditional "paper discovery," which is the discovery of writings on paper that can be read without the assistance of computers.6

B. Why E-Discovery Can Be Valuable in Litigation

With the advancement of technology, electronic discovery is not only valuable in litigation, it is essential. Electronic evidence is affecting virtually every investigation today whether it is criminal or civil.7 Usually, there are no longer "paper trails" that establish who did what and when.8 Instead, electronic evidence is providing the clues to understanding what actually happened.9 Consider these statistics regarding the electronic evidence explosion:

• "In 2002, the International Data Corporation estimated that 31 billion e-mails were sent daily. This number is expected to grow to 60 billion a day by 2006.

Ubuntu Server Guide Basic Installation Preparing to Install

Ubuntu Server Guide

Welcome to the Ubuntu Server Guide! This site includes information on using Ubuntu Server for the latest LTS release, Ubuntu 20.04 LTS (Focal Fossa). For an offline version as well as versions for previous releases see below.

Improving the Documentation

If you find any errors or have suggestions for improvements to pages, please use the link at the bottom of each topic titled: “Help improve this document in the forum.” This link will take you to the Server Discourse forum for the specific page you are viewing. There you can share your comments or let us know about bugs with any page.

PDFs and Previous Releases

Below are links to the previous Ubuntu Server release server guides as well as an offline copy of the current version of this site:
Ubuntu 20.04 LTS (Focal Fossa): PDF
Ubuntu 18.04 LTS (Bionic Beaver): Web and PDF
Ubuntu 16.04 LTS (Xenial Xerus): Web and PDF

Support

There are a couple of different ways that the Ubuntu Server edition is supported: commercial support and community support. The main commercial support (and development funding) is available from Canonical, Ltd. They supply reasonably priced support contracts on a per-desktop or per-server basis. For more information see the Ubuntu Advantage page. Community support is also provided by dedicated individuals and companies that wish to make Ubuntu the best distribution possible. Support is provided through multiple mailing lists, IRC channels, forums, blogs, wikis, etc. The large amount of information available can be overwhelming, but a good search engine query can usually provide an answer to your questions.

Ext4 Disk Layout - Ext4 (https://ext4.wiki.kernel.org/index.php/Ext4_Disk_Layout)

Ext4 Disk Layout - Ext4. https://ext4.wiki.kernel.org/index.php/Ext4_Disk_Layout (retrieved 10/21/2013 10:43 PM)

Ext4 Disk Layout. From Ext4.

This document attempts to describe the on-disk format for ext4 filesystems. The same general ideas should apply to ext2/3 filesystems as well, though they do not support all the features that ext4 supports, and the fields will be shorter. NOTE: This is a work in progress, based on notes that the author (djwong) made while picking apart a filesystem by hand. The data structure definitions were pulled out of Linux 3.11 and e2fsprogs-1.42.8. He welcomes all comments and corrections, since there is undoubtedly plenty of lore that doesn't necessarily show up on freshly created demonstration filesystems.

Contents
1 Terminology
2 Overview
2.1 Blocks
2.2 Layout
2.3 Flexible Block Groups
2.4 Meta Block Groups
2.5 Lazy Block Group Initialization
2.6 Special inodes
2.7 Block and Inode Allocation Policy
2.8 Checksums
2.9 Bigalloc
2.10 Inline Data
2.10.1 Inline Directories
3 The Super Block
4 Block Group Descriptors
5 Block and inode Bitmaps
6 Inode Table
6.1 Finding an Inode
6.2 Inode Size
6.3 Inode Timestamps
7 The Contents of inode.i_block
7.1 Symbolic Links
7.2 Direct/Indirect Block Addressing
7.3 Extent Tree
7.4 Inline Data
8 Directory Entries
8.1 Linear (Classic) Directories
8.2 Hash Tree Directories
9 Extended Attributes
9.1 POSIX ACLs
10 Multiple Mount Protection
11 Journal (jbd2)
11.1 Layout
11.2 Block Header
11.3 Super Block
11.4 Descriptor Block
11.5 Data Block
11.6 Revocation Block
11.7 Commit Block
12 Areas in Need of Work
13 Other References

Terminology

ext4 divides a storage device into an array of logical blocks both to reduce bookkeeping overhead and to increase throughput by forcing larger transfer sizes.

Introducing Innovations into Open Source Projects

Introducing Innovations into Open Source Projects

Dissertation for the degree of Doctor of Natural Sciences (Dr. rer. nat.) at the Department of Mathematics and Computer Science of Freie Universität Berlin, by Sinan Christopher Özbek. Berlin, August 2010.

Reviewers: Professor Dr. Lutz Prechelt, Freie Universität Berlin; Professor Kevin Crowston, Syracuse University. Date of the defense: 17.12.2010

Abstract

This thesis presents a qualitative study using Grounded Theory Methodology on the question of how to change development processes in Open Source projects. The mailing list communication of thirteen medium-sized Open Source projects over the year 2007 was analyzed to answer this question. It resulted in eight main concepts revolving around the introduction of innovation, i.e. new processes, services, and tools, into the projects, including topics such as the migration to new systems, the question of where to host services, how radically Open Source projects can change their ways, and how compliance with processes and conventions is enforced. These are complemented with (1) the result of five case studies in which innovation introductions were conducted with Open Source projects, and with (2) a theoretical comparison of the results of this thesis to four theories and scientific perspectives from the organizational and social sciences such as Path Dependence, the Garbage Can model, Social-Network analysis, and Actor-Network theory. The results show that innovation introduction is a multifaceted phenomenon, of which this thesis discusses the most salient conceptual aspects. The thesis concludes with practical advice for innovators and specialized hints for the most popular innovations.

Acknowledgements

I want to thank the following individuals for contributing to the completion of this thesis:
• Lutz Prechelt for advising me over these long five years.