Estimate Performance and Capacity Requirements for Microsoft Search Server 2010 Express April 2010

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Technical Reference for Microsoft Sharepoint Server 2010

Technical reference for Microsoft SharePoint Server 2010 Microsoft Corporation Published: May 2011 Author: Microsoft Office System and Servers Team ([email protected]) Abstract This book contains technical information about the Microsoft SharePoint Server 2010 provider for Windows PowerShell and other helpful reference information about general settings, security, and tools. The audiences for this book include application specialists, line-of-business application specialists, and IT administrators who work with SharePoint Server 2010. The content in this book is a copy of selected content in the SharePoint Server 2010 technical library (http://go.microsoft.com/fwlink/?LinkId=181463) as of the publication date. For the most current content, see the technical library on the Web. This document is provided “as-is”. Information and views expressed in this document, including URL and other Internet Web site references, may change without notice. You bear the risk of using it. Some examples depicted herein are provided for illustration only and are fictitious. No real association or connection is intended or should be inferred. This document does not provide you with any legal rights to any intellectual property in any Microsoft product. You may copy and use this document for your internal, reference purposes. © 2011 Microsoft Corporation. All rights reserved. Microsoft, Access, Active Directory, Backstage, Excel, Groove, Hotmail, InfoPath, Internet Explorer, Outlook, PerformancePoint, PowerPoint, SharePoint, Silverlight, Windows, Windows Live, Windows Mobile, Windows PowerShell, Windows Server, and Windows Vista are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries. The information contained in this document represents the current view of Microsoft Corporation on the issues discussed as of the date of publication. -

The Response by the Security Community to Are All Retained

The magazine you're reading was put together during an extremely busy few months that saw us pile up frequent flier miles on the way to several conferences. You can read about some of them in the pages that follow, specifically RSA Conference 2009, Infosecurity Europe 2009 and Black Hat Europe 2009. This issue brings forward many hot topics from respected security professionals located all over the world. There's an in-depth review of IronKey, and to round it all up, there are three interviews that you'll surely find stimulating. This edition of (IN)SECURE should keep you busy during the summer, but keep in mind that we're coming back in September! Articles are already piling in so get in touch if you have something to share. Mirko Zorz Editor in Chief Visit the magazine website at www.insecuremag.com (IN)SECURE Magazine contacts Feedback and contributions: Mirko Zorz, Editor in Chief - [email protected] Marketing: Berislav Kucan, Director of Marketing - [email protected] Distribution (IN)SECURE Magazine can be freely distributed in the form of the original, non modified PDF document. Distribution of modified versions of (IN)SECURE Magazine content is prohibited without the explicit permission from the editor. Copyright HNS Consulting Ltd. 2009. www.insecuremag.com Qualys adds Web application scanning to QualysGuard Qualys added QualysGuard Web Application Scanning (WAS) 1.0 to the QualysGuard Security and Compliance Software-as-a-Service (SaaS) Suite, the company's flagship solution for IT security risk and compliance management. Delivered through a SaaS model, QualysGuard WAS delivers automated crawling and testing for custom Web applications to identify most common vulnerabilities such as those in the OWASP Top 10 and WASC Threat Classification, including SQL injection and cross-site scripting. -

Introduction to FAST Search Server 2010 for Sharepoint

www.it-ebooks.info Working with Microsoft® FAST™ Search Server 2010 for SharePoint® Mikael Svenson Marcus Johansson Robert Piddocke Published with the authorization of Microsoft Corporation by: O’Reilly Media, Inc. 1005 Gravenstein Highway North Sebastopol, California 95472 Copyright © 2012 by Mikael Svenson, Marcus Johansson, Robert Piddocke All rights reserved. No part of the contents of this book may be reproduced or transmitted in any form or by any means without the written permission of the publisher. ISBN: 978-0-7356-6222-3 1 2 3 4 5 6 7 8 9 LSI 7 6 5 4 3 2 Printed and bound in the United States of America. Microsoft Press books are available through booksellers and distributors worldwide. If you need support related to this book, email Microsoft Press Book Support at [email protected]. Please tell us what you think of this book at http://www.microsoft.com/learning/booksurvey. Microsoft and the trademarks listed at http://www.microsoft.com/about/legal/en/us/IntellectualProperty/Trademarks/EN-US.aspx are trademarks of the Microsoft group of companies. All other marks are property of their respective owners. The example companies, organizations, products, domain names, email addresses, logos, people, places, and events depicted herein are fictitious. No association with any real company, organization, product, domain name, email address, logo, person, place, or event is intended or should be inferred. This book expresses the author’s views and opinions. The information contained in this book is provided without any express, statutory, or implied warranties. -

Microsoft Dynamics AX 2012 System Requirements Microsoft Corporation Published: July 2016

Microsoft Dynamics AX 2012 System Requirements Microsoft Corporation Published: July 2016 Note: Some of the information in this guide applies only to Microsoft Dynamics AX 2012 R3 installations, and some information applies only to AX 2012 R2, AX 2012 Feature Pack, or AX 2012. Unless otherwise noted in this guide, all other content applies to AX 2012, AX 2012 Feature Pack, AX 2012 R2, and AX 2012 R3. Microsoft Dynamics AX Microsoft Dynamics is a line of integrated, adaptable business management solutions that enables you and your people to make business decisions with greater confidence. Microsoft Dynamics works like and with familiar Microsoft software, automating and streamlining financial, customer relationship, and supply chain processes in a way that helps you drive business success. U.S. and Canada Toll Free 1-888-477-7989 Worldwide +1-701-281-6500 www.microsoft.com/dynamics This document is provided "as-is." Information and views expressed in this document, including URL and other Internet Web site references, may change without notice. You bear the risk of using it. Some examples are for illustration only and are fictitious. No real association is intended or inferred. This document does not provide you with any legal rights to any intellectual property in any Microsoft product. You may copy and use this document for your internal, reference purposes. Copyright © 2016 Microsoft Corporation. All rights reserved. Microsoft, Microsoft Dynamics, the Microsoft Dynamics logo, Active Directory, Excel, Hyper-V, Internet Explorer, Outlook, SharePoint, SQL Server, Visual Studio, Windows, Windows PowerShell, Windows Server, and Windows Vista are trademarks of the Microsoft group of companies. -

Pdflib TET PDF Ifilter 4.2 Manual

ABC TET PDF IFilter Version 4.2 Enterprise PDF Search for Windows Copyright © 2002–2013 PDFlib GmbH and Thomas Merz. All rights reserved. Protected by European and U.S. patents. PDFlib GmbH Franziska-Bilek-Weg 9, 80339 München, Germany www.pdflib.com phone +49 • 89 • 452 33 84-0 fax +49 • 89 • 452 33 84-99 If you have questions check the PDFlib mailing list and archive at tech.groups.yahoo.com/group/pdflib Licensing contact: [email protected] Support for commercial PDFlib licensees: [email protected] (please include your license number) This publication and the information herein is furnished as is, is subject to change without notice, and should not be construed as a commitment by PDFlib GmbH. PDFlib GmbH assumes no responsibility or liability for any errors or inaccuracies, makes no warranty of any kind (express, implied or statutory) with respect to this publication, and expressly disclaims any and all warranties of merchantability, fitness for particular purposes and noninfringement of third party rights. PDFlib and the PDFlib logo are registered trademarks of PDFlib GmbH. PDFlib licensees are granted the right to use the PDFlib name and logo in their product documentation. However, this is not required. Adobe, Acrobat, PostScript, and XMP are trademarks of Adobe Systems Inc. AIX, IBM, OS/390, WebSphere, iSeries, and zSeries are trademarks of International Business Machines Corporation. ActiveX, Microsoft, OpenType, and Windows are trademarks of Microsoft Corporation. Apple, Macintosh and TrueType are trademarks of Apple Computer, Inc. Unicode and the Unicode logo are trademarks of Unicode, Inc. Unix is a trademark of The Open Group. -

Professional Services Agreement Public Defender Business Process

PROFESSIONAL SERVICES AGREEMENT PUBLIC DEFENDER BUSINESS PROCESS REENGINEERING & CASE MANAGEMENT SYSTEM IMPLEMENTATION BETWEEN COOK COUNTY GOVERNMENT Law Office of Public Defender AND Journal Technologies, Inc. CONTRACT NO. 1418-13332 APPROVED BY THE BOARD OF COOK COUNTY COMMISSIONERS OCT 07 2015 Cook County Professional Service Agreement Revised 3-9-2015 SUSPENSION: a) Events of Default Defined; b) Remedies; c) Termination for Convenience; d) Suspension; e) Delays. ARTICLE 10) GENERAL CONDITIONS: a) Entire Agreement; b) Counterparts; c) Contract Amendments; d) Governing Law and Jurisdiction; e) Severability; f) Assigns; g) Cooperation; h) Waiver; i) Independent Consultant; j) Governmental Joint Purchasing Agreement. ARTICLE 11) NOTICES. ARTICLE 12) AUTHORITY. List of Exhibits: Exhibit 1 Scope of Work (SOW); Exhibit 2 Cook County Information Technology Special Conditions (ITSC); Exhibit 3 Schedule of Compensation; Exhibit 4 Software License and Maintenance Agreement; Exhibit 5 Identification of Subcontractor/Supplier/Subconsultant; Exhibit 6 Minority and Women Owned Business Enterprise Commitment and Utilization Plan; Exhibit 7 Evidence of Insurance; Exhibit 8 Board Authorization; Exhibit 9 Economic Disclosure Statement. Contract No. 1418-13332 Public Defender Business Process Reengineering & Case Management System Implementation AGREEMENT This Agreement is made and entered into by and between the County of Cook, a public body corporate of the State of Illinois, on behalf of Office of the Chief Procurement Officer hereinafter referred to as "County" and Journal Technologies, Inc. hereinafter referred to as "Consultant", pursuant to authorization by the Cook County Board of Commissioners on October 7, 2015, as evidenced by Board Authorization letter attached hereto as EXHIBIT "8". -

Microsoft Sharepoint Server Agent Version 08.20.09.00

IBM Tivoli Composite Application Manager for Microsoft Applications: Microsoft SharePoint Server Agent Version 08.20.09.00 Reference IBM Note Before using this information and the product it supports, read the information in “Notices” on page 369. This edition applies to version 08.20.09.00 of the IBM Tivoli Composite Application Manager for Microsoft Applications: Microsoft SharePoint Server Agent and to all subsequent releases and modifications until otherwise indicated in new editions. © Copyright International Business Machines Corporation 2010, 2020. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents Chapter 1. IBM Tivoli Composite Application Manager for Microsoft Applications: Microsoft SharePoint Server Agent. Chapter 2. Dashboards: Default dashboards; Widgets for the Default dashboards; Custom views. Chapter 3. Thresholds: Predefined thresholds -

Department of Veterans Affairs (VA) Records Related to Contractor Young & Rubicam aka Burson-Marsteller, 2012-2013

Description of document: Department of Veterans Affairs (VA) records related to contractor Young & Rubicam aka Burson-Marsteller, 2012-2013 Request date: 27-March-2014 Released date: 20-May-2014 Posted date: 18-August-2014 Source of document: Department of Veterans Affairs Veterans Affairs Central Office FOIA/Privacy Act Officers 810 Vermont Avenue, NW Washington, DC 20420 Email: [email protected] The governmentattic.org web site (“the site”) is noncommercial and free to the public. The site and materials made available on the site, such as this file, are for reference only. The governmentattic.org web site and its principals have made every effort to make this information as complete and as accurate as possible, however, there may be mistakes and omissions, both typographical and in content. The governmentattic.org web site and its principals shall have neither liability nor responsibility to any person or entity with respect to any loss or damage caused, or alleged to have been caused, directly or indirectly, by the information provided on the governmentattic.org web site or in this file. The public records published on the site were obtained from government agencies using proper legal channels. Each document is identified as to the source. Any concerns about the contents of the site should be directed to the agency originating the document in question. GovernmentAttic.org is not responsible for the contents of documents published on the website. DEPARTMENT OF VETERANS AFFAIRS WASHINGTON DC 20420 May 20, 2014 Via email Re: Freedom of Information Act Request 14-04059-F This is our final response to your Freedom of Information Act (FOIA) request dated and received in this office on March 27, 2014. -

Office Add-Ins Sharepoint Security Onenote 2010

THE MICROSOFT JOURNAL FOR DEVELOPERS JULY 2010 VOL 25 NO 7 OFFICE ADD-INS: 3 Solutions for Accessing SharePoint Data in Office 2010, Donovan Follette and Paul Stubbs. SHAREPOINT SECURITY: Trim SharePoint Search Results for Better Security, Ashley Elenjickal and Pooja Harjani. ONENOTE 2010: Creating OneNote 2010 Extensions with the OneNote Object Model, Andy Gray. OFFICE SERVICES: Merging Word Documents on the Server Side with SharePoint 2010, Manvir Singh and Ankush Bhatia. SMART CLIENT: Building Distributed Apps with NHibernate and Rhino Service Bus, Oren Eini. C# 4.0: New C# Features in the .NET Framework 4, Chris Burrows. DESIGN PATTERNS: Problems and Solutions with Model-View-ViewModel, Robert McCarter. COLUMNS EDITOR’S NOTE: Over-Educated, Yet Under-Qualified? Keith Ward. CUTTING EDGE: Expando Objects in C# 4.0, Dino Esposito. DATA POINTS: Windows Azure Table Storage–Not Your Father’s Database, Julie Lerman. SECURITY BRIEFS: View State Security, Bryan Sullivan. THE WORKING PROGRAMMER: Going NoSQL with MongoDB, Part 3, Ted Neward. UI FRONTIERS: The Fluid UI in Silverlight 4, Charles Petzold. DON’T GET ME STARTED: Rejectionists Rejected, David Platt. Sure, Visual Studio 2010 has a lot of great functionality— we’re excited that it’s only making our User Interface components even better! We’re here to help you go beyond what Visual Studio 2010 gives you so you can create Killer Apps quickly, easily and without breaking a sweat! Go to infragistics.com/beyondthebox today to expand your toolbox with the fastest, best-performing and most powerful UI controls available. -

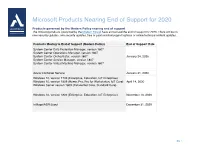

Microsoft Products Nearing End of Support for 2020

Microsoft Products Nearing End of Support for 2020 Products governed by the Modern Policy nearing end of support The following products (governed by the Modern Policy) have announced the end of support for 2020. There will be no new security updates, non-security updates, free or paid assisted support options or online technical content updates. Products Moving to End of Support (Modern Policy), with End of Support Date. January 24, 2020: System Center Data Protection Manager, version 1807; System Center Operations Manager, version 1807; System Center Orchestrator, version 1807; System Center Service Manager, version 1807; System Center Virtual Machine Manager, version 1807. January 31, 2020: Azure Container Service. April 14, 2020: Windows 10, version 1709 (Enterprise, Education, IoT Enterprise); Windows 10, version 1809 (Home, Pro, Pro for Workstation, IoT Core); Windows Server version 1809 (Datacenter Core, Standard Core). November 10, 2020: Windows 10, version 1803 (Enterprise, Education, IoT Enterprise). December 31, 2020: InMage/ASR Scout. Products governed by the Fixed Policy nearing end of support The following products (governed by the Fixed Policy) have announced the end of support in 2020. There will be no new security updates, non-security updates, free or paid assisted support options or online technical content updates.
Products Moving to End of Support (Fixed Policy), with End of Support Date. January 14, 2020: Exchange Server 2010 (all editions); Microsoft Dynamics NAV 2009 R2; Microsoft Expression Blend 3; Microsoft Expression Design 3; Microsoft Expression Encoder 3; Microsoft Expression Studio 3; Microsoft Expression Web 3; Microsoft Hyper-V Server 2008; Microsoft Hyper-V Server 2008 R2; Windows 7; Windows Embedded Handheld 6.5; Windows Server 2008 R2; Windows Server 2008; Windows Server Update Services 3.0. April 14, 2020: Forefront Unified Access Gateway 2010; Microsoft Forefront Threat Management Gateway 2010 Enterprise; Microsoft HPC Pack 2008 R2 -

Comparative Study of Search Engines

“COMPARATIVE STUDY OF SEARCH ENGINES USEFUL FOR LIBRARIANS” A Dissertation Submitted to the Tilak Maharashtra Vidyapeeth, Pune For Master of Philosophy (M.Phil.) In LIBRARY AND INFORMATION SCIENCE Submitted By Mr. SUSHANT KORE Under the Guidance of Dr. N. B. DAHIBHATE Principal Technical Officer Information Division (DIRC) National Chemical Laboratory, Pune TILAK MAHARASHTRA VIDYAPEETH DEPARTMENT OF LIBRARY AND INFORMATION SCIENCE PUNE - 411 037. December, 2014 DECLARATION I hereby declare that the dissertation entitled “Comparative study of search engines useful for librarians” was completed by me for the degree of Master of Philosophy in Library and Information Science. The entire work embodied in this thesis has been carried out by me under the guidance of Dr. N.B. Dahibhate, National Chemical Laboratory, and Digital Information Resource Center (DIRC), Pune. (Mr. Sushant Kore) Research Student (M.Phil.) Place: Pune Date: 26th December, 2014 CERTIFICATE This is to certify that the thesis entitled “Comparative study of search engines useful for librarians” which is being submitted herewith for the award of the Degree of Master of Philosophy (M.Phil.) in Library and Information Science of Tilak Maharashtra Vidyapeeth, Pune is the result of original research work completed by Mr. Sushant Kore under my supervision and guidance. To the best of my knowledge and belief the work incorporated in this thesis has not formed the basis for award of any Degree or similar title of this or any other University or examining body. (Dr. N.B. Dahibhate) Principal Technical Officer Information Division (DIRC) NCL, Pune Place: Pune Date: 26th December, 2014 ACKNOWLEDGEMENT I am very thankful to my respectable parents for their kind support in my life as well as completing my dissertation and bringing me to this stage in the educational field. -

Microsoft Dynamics AX 2012 System Requirements Microsoft Corporation Published: September 2017

Microsoft Dynamics AX 2012 System Requirements Microsoft Corporation Published: September 2017 This is the final planned list of system requirements as of September 2017. Microsoft does not plan to add support for additional products or product versions. However, new service packs and other updates of the currently supported product versions will still be supported. Note: Some of the information in this guide applies only to Microsoft Dynamics AX 2012 R3 installations, and some information applies only to AX 2012 R2, AX 2012 Feature Pack, or AX 2012. Unless otherwise noted in this guide, all other content applies to AX 2012, AX 2012 Feature Pack, AX 2012 R2, and AX 2012 R3. Microsoft Dynamics AX Microsoft Dynamics is a line of integrated, adaptable business management solutions that enables you and your people to make business decisions with greater confidence. Microsoft Dynamics works like and with familiar Microsoft software, automating and streamlining financial, customer relationship, and supply chain processes in a way that helps you drive business success. U.S. and Canada Toll Free 1-888-477-7989 Worldwide +1-701-281-6500 www.microsoft.com/dynamics This document is provided "as-is." Information and views expressed in this document, including URL and other Internet Web site references, may change without notice. You bear the risk of using it. Some examples are for illustration only and are fictitious. No real association is intended or inferred. This document does not provide you with any legal rights to any intellectual property in any Microsoft product. You may copy and use this document for your internal, reference purposes.