Undermining Information Hiding (And What to Do About

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Javascript and OOP Once Upon a Time

11/14/18 JavaScript and OOP Once upon a time . Jerry Cain CS 106AJ November 12, 2018 slides courtesy of Eric Roberts Object-Oriented Programming The most prestigious prize in computer science, the ACM Turing Award, was given in 2002 to two Norwegian computing pioneers, who jointly developed SIMULA in 1967, which was the first language to use the object-oriented paradigm. JavaScript and OOP In addition to his work in computer Kristen Nygaard science, Kristen Nygaard also took Ole Johan Dahl an active role in making sure that computers served the interests of workers, not just their employers. In 1990, Nygaard’s social activism was recognized in the form of the Norbert Wiener Award for Social and Professional Responsibility. Review: Clients and Interfaces David Parnas on Modular Development • Libraries—along with programming abstractions at many • David Parnas is Professor of Computer other levels—can be viewed from two perspectives. Code Science at Limerick University in Ireland, that uses a library is called a client. The code for the library where he directs the Software Quality itself is called the implementation. Research LaBoratory, and has also taught at universities in Germany, Canada, and the • The point at which the client and the implementation meet is United States. called the interface, which serves as both a barrier and a communication channel: • Parnas's most influential contriBution to software engineering is his groundBreaking 1972 paper "On the criteria to be used in decomposing systems into modules," which client implementation laid the foundation for modern structured programming. This paper appears in many anthologies and is available on the web at interface http://portal.acm.org/citation.cfm?id=361623 1 11/14/18 Information Hiding Thinking About Objects Our module structure is based on the decomposition criteria known as information hiding. -

Chapter 4: PHP Fundamentals Objectives: After Reading This Chapter You Should Be Entertained and Learned: 1

Chapter 4: PHP Fundamentals Objectives: After reading this chapter you should be entertained and learned: 1. What is PHP? 2. The Life-cycle of the PHP. 3. Why PHP? 4. Getting Started with PHP. 5. A Bit About Variables. 6. HTML Forms. 7. Building A Simple Application. 8. Programming Languages Statements Classification. 9. Program Development Life-Cycle. 10. Structured Programming. 11. Object Oriented Programming Language. 4.1 What is PHP? PHP is what is known as a Server-Side Scripting Language - which means that it is interpreted on the web server before the webpage is sent to a web browser to be displayed. This can be seen in the expanded PHP recursive acronym PHP-Hypertext Pre-processor (Created by Rasmus Lerdorf in 1995). This diagram outlines the process of how PHP interacts with a MySQL database. Originally, it is a set of Perl scripts known as the “Personal Home Page” tools. PHP 4.0 is released in June 1998 with some OO capability. The core was rewritten in 1998 for improved performance of complex applications. The core is rewritten in 1998 by Zeev and Andi and dubbed the “Zend Engine”. The engine is introduced in mid 1999 and is released with version 4.0 in May of 2000. Version 5.0 will include version 2.0 of the Zend Engine (New object model is more powerful and intuitive, Objects will no longer be passed by value; they now will be passed by reference, and Increases performance and makes OOP more attractive). Figure 4.1 The Relationship between the PHP, the Web Browser, and the MySQL DBMS 4.2 The Life-cycle of the PHP: A person using their web browser asks for a dynamic PHP webpage, the webserver has been configured to recognise the .php extension and passes the file to the PHP interpreter which in turn passes an SQL query to the MySQL server returning results for PHP to display. -

Data Abstraction and Encapsulation ADT Example

Data Abstraction and Encapsulation Definition: Data Encapsulation or Information Hiding is the concealing of the implementation details of a data object Data Abstraction from the outside world. Definition: Data Abstraction is the separation between and the specification of a data object and its implementation. Definition: A data type is a collection of objects and a set Encapsulation of operations that act on those objects. Definition: An abstract data type (ADT) is a data type that is organized in such a way that the specification of the objects and the specification of the operations on the objects is separated from the representation of the objects and the implementation of the operations. 1 2 Advantages of Data Abstraction and Data Encapsulation ADT Example ADT NaturalNumber is Simplification of software development objects: An ordered sub-range of the integers starting at zero and ending at the maximum integer (MAXINT) on the computer. Testing and Debugging functions: for all x, y belong to NaturalNumber; TRUE, FALSE belong to Boolean and where +, -, <, ==, and = are the usual integer operations Reusability Zero(): NaturalNumber ::= 0 Modifications to the representation of a data IsZero(x): Boolean ::= if (x == 0) IsZero = TRUE else IsZero = FALSE type Add(x, y): NaturalNumber ::= if (x+y <= MAXINT) Add = x + y else Add = MAXINT Equal(x, y): Boolean ::= if (x == y) Equal = TRUE else Equal = FALSE Successor(x): NaturalNumber ::= if (x == MAXINT) Successor = x else Successor = x +1 Substract(x, y): NaturalNumber ::= if (x < -

C++: Data Abstraction & Classes

Comp151 C++: Data Abstraction & Classes A Brief History of C++ • Bjarne Stroustrup of AT&T Bell Labs extended C with Simula-like classes in the early 1980s; the new language was called “C with Classes”. • C++, the successor of “C with Classes”, was designed by Stroustrup in 1986; version 2.0 was introduced in 1989. • The design of C++ was guided by three key principles: 1. The use of classes should not result in programs executing any more slowly than programs not using classes. 2. C programs should run as a subset of C++ programs. 3. No run-time inefficiency should be added to the language. Topics in Today's Lecture • Data Abstraction • Classes: Name Equivalence vs. Structural Equivalence • Classes: Restrictions on Data Members • Classes: Location of Function declarations/definitions • Classes: Member Access Control • Implementation of Class Objects Data Abstraction • Questions: – What is a ‘‘car''? – What is a ‘‘stack''? •A data abstraction is a simplified view of an object that includes only features one is interested in while hiding away the unnecessary details. • In programming languages, a data abstraction becomes an abstract data type (ADT) or a user-defined type. • In OOP, an ADT is implemented as a class. Example: Implement a Stack with an Array 1 data 2 3 size top . Example: Implement a Stack with a Linked List top -999 top (latest item) -999 1 2 Information Hiding •An abstract specification tells us the behavior of an object independent of its implementation. i.e. It tells us what an object does independent of how it works. • Information hiding is also known as data encapsulation, or representation independence. -

Data Abstraction and Object-Oriented Languages; Ada Case Study

CS323 Lecture: Data Abstraction and Object-Oriented Languages; Ada Case Study 2/4/09 Materials: Handout of Ada Demonstration Programs + Executable form (first_example, ascii_codes, rationals, stack, exception_demo, oo_demo) I. Introduction - ------------ A. As we have discussed previously, the earliest programming language paradigm (to which many languages belong) is the imperative or procedural paradigm. However, within this paradigm there have been some important developments which have led to modern languages being significantly better than older members of the same family, as well as to the development of the object-oriented paradigm. 1. One such development is "structured programming" which focussed on the control flow of programs. A major outcome of this work has been the presence, in most modern languages regardless of paradign, of certain well-defined control structures (conditional; definite and indefinite iteration). 2. A second major development has been the evolution of the idea of DATA ABSTRACTION. a. In the early days of computer science, most attention was given to algorithms and to language features to support them - e.g. procedural abstraction and control structures. b. In the late 1960's, Donald Knuth published a three volume work called "The Art of Computer Programming" which focussed attention on the fact that data structures are an equally important part of software design. i. His book is really responsible for the birth of the idea that a "Data Structures" course should be part of the CS curriculum. ii. Historically, coverage of data structures migrated from being an upper-level course in the CS major (300 level) to being a core part of the introductory sequence. -

Designing Information Hiding Modularity for Model Transformation Languages

Designing Information Hiding Modularity for Model Transformation Languages Andreas Rentschler Dominik Werle Qais Noorshams Lucia Happe Ralf Reussner Karlsruhe Institute of Technology (KIT) 76131 Karlsruhe, Germany {rentschler, noorshams, happe, reussner}@kit.edu [email protected] Abstract designed to transform models into other models and finally into code artifacts. These so-called transformation languages aim to ease Development and maintenance of model transformations make up a development efforts by offering a succinct syntax to query from and substantial share of the lifecycle costs of software products that rely map model elements between different modeling domains. on model-driven techniques. In particular large and heterogeneous However, development and maintenance of model transforma- models lead to poorly understandable transformation code due to tions themselves are expected to make up a substantial share of missing language concepts to master complexity. At the present time, the lifecycle costs of software products that rely on model-driven there exists no module concept for model transformation languages techniques [20]. Much of the effort in understanding model trans- that allows programmers to control information hiding and strictly formations arises from complexity induced by a high degree of declare model and code dependencies at module interfaces. Yet only data and control dependencies. Complexity is connected to size, then can we break down transformation logic into smaller parts, so structural complexity and heterogeneity of models involved in a that each part owns a clear interface for separating concerns. In this transformation. Visualization techniques can help to master this paper, we propose a module concept suitable for model transforma- complexity [17, 20]. -

Application of Steganography for Anonymity Through the Internet Jacques Bahi, Jean-François Couchot, Nicolas Friot, Christophe Guyeux

Application of Steganography for Anonymity through the Internet Jacques Bahi, Jean-François Couchot, Nicolas Friot, Christophe Guyeux To cite this version: Jacques Bahi, Jean-François Couchot, Nicolas Friot, Christophe Guyeux. Application of Steganog- raphy for Anonymity through the Internet. IHTIAP’2012, 1-st Workshop on Information Hiding Techniques for Internet Anonymity and Privacy, Jan 2012, Italy. pp.96 - 101. hal-00940094 HAL Id: hal-00940094 https://hal.archives-ouvertes.fr/hal-00940094 Submitted on 31 Jan 2014 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Application of Steganography for Anonymity through the Internet Jacques M. Bahi, Jean-François Couchot, Nicolas Friot, and Christophe Guyeux* FEMTO-ST Institute, UMR 6174 CNRS Computer Science Laboratory DISC University of Franche-Comté Besançon, France {jacques.bahi, jean-francois.couchot, nicolas.friot, christophe.guyeux}@femto-st.fr * Authors in alphabetic order Abstract—In this paper, a novel steganographic scheme Considering that the freedom of expression is a fundamen- based on chaotic iterations is proposed. This research work tal right that must be protected, that journalists must be able takes place into the information hiding security framework. to inform the community without risking their own lives, The applications for anonymity and privacy through the Internet are regarded too. -

Information Hiding Interfaces for Aspect-Oriented Design∗

Information Hiding Interfaces for Aspect-Oriented Design∗ Kevin Sullivanφ William G. Griswoldλ Yuanyuan Songφ Yuanfang Caiφ Macneil Shonleλ Nishit Tewariφ Hridesh Rajanφ φComputer Science λComputer Science & Engineering University of Virginia UC San Diego Charlottesville, VA 22903 La Jolla, CA 92093-0114 fsullivan,ys8a,yc7a,nt6x,[email protected] fwgg,[email protected] ABSTRACT aspects. JPs are points in a concrete program execution, such as The growing popularity of aspect-oriented languages, such as As- method calls and executions, that, by the definition of the join pectJ, and of corresponding design approaches, makes it important point model of the AO language, are subject to advising. Advis- to learn how best to modularize programs in which aspect-oriented ing extends or overrides the action at a join point with a CLOS- composition mechanisms are used. We contribute an approach to like [22, Ch. 28] before, after, or around anonymous method called information hiding modularity in programs that use quantified ad- an advice. A PCD is a declarative expression that matches a set of vising as a module composition mechanism. Our approach rests on JPs. An advice extends or overrides the action at each join point a new kind of interface: one that abstracts a crosscutting behavior, matched by a given PCD. Because a PCD can select JPs that span decouples the design of code that advises such a behavior from the unrelated classes, an advice can have effects that cut across a class hierarchy. Advice, pointcuts and ordinary data members and meth- design of the code to be advised, and that can stipulate behavioral 1 contracts. -

Information Hiding Techniques: a Tutorial Review

Information Hiding Techniques: A Tutorial Review Sabu M Thampi Assistant Professor Department of Computer Science & Engineering LBS College of Engineering, Kasaragod Kerala- 671542, S.India [email protected] chemically affected sympathetic inks were developed. Abstract The purpose of this tutorial is to present an Invisible inks were used as recently as World War II. overview of various information hiding techniques. Modern invisible inks fluoresce under ultraviolet light A brief history of steganography is provided along and are used as anti-counterfeit devices. For example, with techniques that were used to hide information. "VOID" is printed on checks and other official Text, image and audio based information hiding documents in an ink that appears under the strong techniques are discussed. This paper also provides ultraviolet light used for photocopies. a basic introduction to digital watermarking. The monk Johannes Trithemius, considered one of the founders of modern cryptography, had ingenuity in 1. History of Information Hiding spades. His three volume work Steganographia, The idea of communicating secretly is as old as written around 1500, describes an extensive system for communication itself. In this section, we briefly concealing secret messages within innocuous texts. On discuss the historical development of information its surface, the book seems to be a magical text, and hiding techniques such as steganography/ the initial reaction in the 16th century was so strong watermarking. that Steganographia was only circulated privately until publication in 1606. But less than five years ago, Jim Early steganography was messy. Before phones, Reeds of AT&T Labs deciphered mysterious codes in before mail, before horses, messages were sent on the third volume, showing that Trithemius' work is foot. -

Constructors and Setters (Even Though Examples in Book Don't Always Do This ...) 9 Coding Advice – Getters and Setters

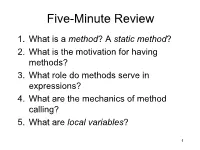

Five-Minute Review 1. What is a method? A static method? 2. What is the motivation for having methods? 3. What role do methods serve in expressions? 4. What are the mechanics of method calling? 5. What are local variables? 1 Programming – Lecture 6 Objects and Classes (Chapter 6) • Local/instance/class variables, constants • Using existing classes: RandomGenerator • The implementor’s perspective • Javadoc: The client’s perspective • Defining your own classes 2 The Art and Science of Java An Introduction to Computer Science C H A P T E R 6 ERIC S. ROBERTS Objects and Classes To beautify life is to give it an object. —José Martí, On Oscar Wilde, 1888 6.1 Using the RandomGenerator class 6.2 The javadoc documentation system 6.3 Defining your own classes 6.4 Representing student information 6.5 Rational numbers 6.6 Extending existing classes Chapter 6—Objects and Classes Local/Instance/Class Variables (See also Lec. 03) public class Example { int someInstanceVariable; static int someClassVariable; static final double PI = 3.14; public void run() { int someLocalVariable; ... } } 4 Local Variables • Declared within method • One storage location ("box") per method invocation • Stored on stack public void run() { int someLocalVariable; ... } 5 Instance Variables • Declared outside method • One storage location per object • A.k.a. ivars, member variables, or fields • Stored on heap int someInstanceVariable; 6 Class Variables • Declared outside method, with static modifier • Only one storage location, for all objects • Stored in static data segment static int someClassVariable; static final double PI = 3.14; 7 Constants • Are typically stored in class variables • final indicates that these are not modified static final double PI = 3.14; 8 this • this refers to current object • May use this to override shadowing of ivars by local vars of same name public class Point { public int x = 0, y = 0; public Point(int x, int y) { this.x = x; this.y = y; } } • Coding Advice: re-use var. -

Information Hiding and Visibility in Interface Specifications Gary T

Computer Science Technical Reports Computer Science 9-2006 Information Hiding and Visibility in Interface Specifications Gary T. Leavens Iowa State University Peter Müller ETH Follow this and additional works at: http://lib.dr.iastate.edu/cs_techreports Part of the Software Engineering Commons, and the Theory and Algorithms Commons Recommended Citation Leavens, Gary T. and Müller, Peter, "Information Hiding and Visibility in Interface Specifications" (2006). Computer Science Technical Reports. 339. http://lib.dr.iastate.edu/cs_techreports/339 This Article is brought to you for free and open access by the Computer Science at Iowa State University Digital Repository. It has been accepted for inclusion in Computer Science Technical Reports by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. Information Hiding and Visibility in Interface Specifications Abstract Information hiding controls which parts of a class are visible to non-privileged and privileged clients (e.g., subclasses). This affects detailed design specifications in two ways. First, specifications should not expose hidden class members. As noted in previous work, this is important because such hidden members are not meaningful to all clients. But it also allows changes to hidden implementation details without invalidating correctness proofs for client code, which is important for maintaining verified programs. Second, to enable sound modular reasoning, certain specifications must be visible to clients. We present rules for information hiding in specifications for Java-like languages, and demonstrate their application to the specification language JML. These rules restrict proof obligations to only mention visible class members, but retain soundness. This allows maintenance of implementations and their specifications without affecting client reasoning. -

Revisiting Information Hiding: Reflections on Classical and Nonclassical Modularity

Revisiting Information Hiding: Reflections on Classical and Nonclassical Modularity Klaus Ostermann, Paolo G. Giarrusso, Christian K¨astner,Tillmann Rendel University of Marburg, Germany Abstract. What is modularity? Which kind of modularity should devel- opers strive for? Despite decades of research on modularity, these basic questions have no definite answer. We submit that the common under- standing of modularity, and in particular its notion of information hiding, is deeply rooted in classical logic. We analyze how classical modularity, based on classical logic, fails to address the needs of developers of large software systems, and encourage researchers to explore alternative vi- sions of modularity, based on nonclassical logics, and henceforth called nonclassical modularity. 1 Introduction Modularity has been an important goal for software engineers and program- ming language designers, and over the last decades much research has provided modularity mechanisms for different kinds of software artifacts. But despite sig- nificant advances in the theory and practice of modularity, the actual goal of modularity is not clear, and in fact different communities have quite different visions in this regard. On the one hand, there is a classical notion of modularity grounded in information hiding, which manifests itself in modularization mech- anisms such as procedural/functional abstraction and abstract data types. On the other hand, there are novel (and not so novel) notions of modularity that emphasize extensibility and separation of concerns at the expense of information hiding, such as program decompositions using inheritance, reflection, exception handling, aspect-oriented programming, or mutable state and aliasing, all of which may lead to dependencies between modules that are not visible in their interfaces.