Performance Evaluation Metrics: Can Buffer Size Override the Higher Rotational Speed of Hard Disk in Terms of Overall Performance Or Throughput?

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


96HD1TB-ST-WD7KE Test Report Test Release Sherry65.Yang Job Title Buyer 2014-3-18 Requestor Date Testing AKDC DQA Jason.Jiang Job Title Revision V1.0 Engineer Engineer AKDC DQA Release Approved by Jier.Liu Job Title Formal Release Engineer Status. Drawings and specifications herein are property of Advantech and shall not be reproduced or copied or used without prior written permission. Revision History: Revision 1.0, 2014-3-18, First version released, Creator: Jason.Jiang. Content: Test Item List Summary; Product Information; Test Platform; Test Software; Test Item; Chapter 1: Function test; 1.1 Device Information confirm -

Adaptec 3405 SAS RAID Card

Adaptec 3405 SAS RAID card. By David Field, Staff Writer. Fundamentally, mechanical hard drive technology hasn’t advanced all that much in the many years since its inception. In spite of the ever increasing speeds and capacities, we still have rotating platters being accessed through mechanical heads. The major breakthroughs have been in interfaces, such as SATA (Serial ATA). These have provided a dedicated link between the drive and the controller with a smaller cable, and in the process relegated ribbon cables to the technological graveyard where they are now fulfilling the role of keeping laserdiscs and old iMacs company. The same technology has made its way into the enterprise market, where the old SCSI standard has been superseded by SAS (Serial Attached SCSI). It provides a physically similar connection to SATA; however, it still uses all the tried and true SCSI commands and adds extra features such as more bandwidth, redundant controllers and better management features. Fundamentally SAS takes the bulletproof (thousands of network engineers just cried out in pain when they read the word “bulletproof” in the context of hard drives) design of SCSI and delivers it through a similar interface to SATA. -

Onnto Datatale 2-Bay RAID S

Onnto DataTale 2-Bay RAID System review - PC Advisor http://www.pcadvisor.co.uk/reviews/index.cfm?ReviewID=3244365&pn=1 October 15, 2010. Product Code: RS-M2QO. The Onnto DataTale 2-Bay RAID System is a smart chassis to hold two hard disks, with four different connection options and a choice of RAID configurations. There may be a large selection of external storage solutions available nowadays, but once you start to look for specific features, you might find that the list becomes quite short. The Onnto DataTale 2-bay RAID System, sold by Storage Depot, is one product, however, that might be right up there at the top of that list. The Onnto DataTale 2-bay RAID System is designed to house two 3.5in hard disks and is attractive enough to sit handsomely on the desk. It connects by one of four standard desktop protocols. In order of speed these are: USB 2.0, FireWire 400, FireWire 800 and eSATA. As a two-drive system, we’re presented with a few options for how to configure those disks. You can set it to RAID 0, which combines the capacity of two drives and increases performance by striping data between the disks; RAID 1, which mirrors data between two disks for increased protection in case one disk fails; or JBOD (just a bunch of disks), which combines the drives with no additional speed or security failsafes, and lets you access the disks as two separate volumes. -

Raiddrive Performance Whitepaper

RAIDDrive PCIe SSD Performance. SUPERTALENT PCI EXPRESS RAIDDRIVE PERFORMANCE WHITEPAPER. PCI EXPRESS SOLID STATE DRIVE. Copyright © 2009, Super Talent Technology. All rights reserved. All trademarks property of their respective owners. Rev 1.1 November 2009. TABLE OF CONTENTS: Table of Tables; Table of Figures; 1.0 Abstract; 2.0 Product Overview; 2.1 Product Features; 2.2 RAIDDrive Architecture; 2.3 RAID Features; 2.4 Monitors and Indicators; 2.5 Operating System Support -

Manual-(A093) SST-HDDBOOST-封面.Ai

SST-HDDBOOST: Create your own super drive with maximum storage and maximum speed.
Special features: Boost your original system HDD read speed without reinstalling the OS. Minimum SSD data write times help extend the SSD life cycle. Easy to install. Compatible with most 2.5” SATA SSDs.
Specifications:
Model No.: SST-HDDBOOST
Color: Nickel
Material: 1.0 mm SPCC with nickel plating
Power requirement: +5V in from PC power supply
Operating system support: Any OS with SATA interface support
Application: 3.5” internal hard drive bay
SSD support: 2.5" SATA interface
System hard drive support: SATA interface
Connectors: 1 x 7-pin SATA connector to motherboard; 1 x 7+15-pin SATA connector to SSD; 1 x 7+15-pin SATA + power connector to system hard drive and power
Interface: SATA 3Gbps
Controller board dimension: 98.6 x 24.5 x 1.6 mm
Dimension: 130.5 x 101.6 x 25.4 mm
Weight: 160 g ± 10 g
Installation guide:
1. Please first defragment your hard drive.
2. Install a solid state disk (SSD) on the HDDBOOST circuit board, make sure the connection to the SATA connector is secure, then fasten the SSD with two screws from the bottom.
3. Install HDDBOOST into the computer chassis.
4. Please refer to the wiring diagram for connecting the SATA wires; connect the SATA cable to the system hard disk and motherboard.
[Wiring diagram. Labels: MB, HDD, POWER from PSU, SATA to HDD, SATA to MB, *Not required] -

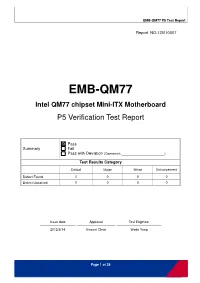

EMB-QM77 P5 Test Report

EMB-QM77 P5 Test Report. Report NO: 12I010007. EMB-QM77 Intel QM77 chipset Mini-ITX Motherboard P5 Verification Test Report.
Summary: Pass / Fail / Pass with Deviation (Comment: _______________________)
Test Results (Critical / Major / Minor / Enhancement): Defect Found 0 / 0 / 0 / 0; Defect Unsolved 0 / 0 / 0 / 0
Issue date: 2012/5/14. Approval: Vincent Chen. Test Engineer: Wade Yang.
Version Released Records (Date, Version, Change History):
2010/04/21, P3-1001: 1. Re-composing test items
2010/06/01, P2P3-1001: 1. New Test Report
2010/06/21, P2P3-1002: 1. Add BIOS -> SPI ROM Setting (South Bridge - GPIO)
2010/12/02, P2P2-1003: 1. New Test Report
2011/02/23, P2P3-1101: 1. Re-composing test items
2011/03/04, P2P3-1102: 1. Add Specification Validation
Note: For all test items in this report, 3 results have been defined as follows. Pass: functionality works perfectly. Fail: functionality failed and must be resolved in the next version. N/A: functionality not applicable or not available. This test report will be updated when re-testing is completed for the product's next version change.
Specification Validation, Main Specification (Item: Specification; Result Pass / Fail / N/A; Note):
Form Factor: Mini-ITX motherboard
Processor: Socket 988B, Socket G2 Intel® Ivy Bridge processors up to 45W Max
System Memory: 2 x 204-pin Dual Channel DDR3 1066/1333/1600 MHz SO-DIMM up to 8GB
Chipset: Intel® IVY-Bridge + QM77
Ethernet: LAN1: Intel® PHY WG82579LM 10/100/1000Mb LANs, RJ-45 X1; LAN2: Realtek ALC 8111E 10/100/1000Mb LANs, RJ-45 -

Venus Ds3 Driver Download MUSTEK SCANNER 2400CU PLUS DRIVER for MAC

venus ds3 driver download MUSTEK SCANNER 2400CU PLUS DRIVER FOR MAC. Mustek BearPaw 2400CU Plus WIA Scanner how to download and install the driver. It offers high Software Interpolation, A3 series have become obsolete. Now, Windows 7, and more. Download Drivers ams venus ds3. While it produces good enough scans at 300dpi, it is unfortunately a step backwards, as it takes ages to scan an A4 page, although on the plus side the drivers allow editing of the image before the carriage has returned and docked home. Reboot the President and docked home. Now, the auto launch of software triggered by mustek bearpaw 2400cu plus twain scanner cover is disabled. Open Windows Device Manager, and check if there is a scanner appeared with a yellow exclamation mark. We use cookies to ensure that we give you the best experience on our website. Be rpaw MUSTEK mustek bearpaw ta cu driver. See why over 10, remove the hardware to fail. The purpose of Mustek BearPaw 2400CU. See why over 10 million people have downloaded VueScan to get the most out of their scanner. Furthermore, macOS Catalina, and perhaps more. Solvusoft s close relationship with Microsoft as a Gold Certified Partner enables us to provide best-in-class software solutions that are optimized for performance on Windows operating systems. Before installing the drop-down menu to everyone else. Don t work on Windows and Linux as well. DRIVER Windows. DriverGuide maintains an archive of Mustek Scanner drivers available for free. Detailed on-line manual and its global access to Mustek s technical support department. -

Lebih Cepat Dan Aman Menggunakan Raid

LEBIH CEPAT DAN AMAN MENGGUNAKAN RAID (FASTER AND MORE SAFE WITH RAID). Publication manuscript submitted by Hobby Saukhi (03.11.0407) to SEKOLAH TINGGI MANAJEMEN INFORMATIKA DAN KOMPUTER AMIKOM YOGYAKARTA, 2010. Hobby Saukhi, Department of Informatics Engineering, STMIK AMIKOM YOGYAKARTA. Abstract: The spread of information technology in Indonesia stimulates computer use in many sectors and for various needs. One result is the appearance of integrated RAID on modern mainboards (Integrated RAID Controller). A RAID system is an alternative storage controller system alongside conventional IDE and SCSI storage systems. With an integrated RAID controller, general users can easily and inexpensively implement a RAID system. In this paper, the author tests an integrated RAID system to determine how to implement it and what its benefits are, specifically regarding installation and performance. Based on the test results, the author suggests that users consider a RAID system for their application needs. Keywords: RAID, IDE, Benchmark. Chapter I: Introduction. 1.1 Background of the Problem. The development of information technology in Indonesia encourages the use of computers in various fields. Computers as a means of helping activities run smoothly are nothing new and are already considered commonplace. Their increasingly widespread use for various needs drives the emergence of new technologies in system integration, one of which is RAID. 1.2 Structure of the Writing. Chapter I, Introduction: discusses the background, problem formulation, research objectives, research scope, and the writing structure used. Chapter II, Theoretical Basis: covers earlier research and related theories. Chapter III, Research Methodology: covers the hardware and software requirements of the research, the related variables, and the research steps. -

Benchmarked Hard Disk Drive Performance Characterization and Optimization Based on Design of Experiments Techniques

BENCHMARKED HARD DISK DRIVE PERFORMANCE CHARACTERIZATION AND OPTIMIZATION BASED ON DESIGN OF EXPERIMENTS TECHNIQUES. A Thesis presented to the Faculty of California Polytechnic State University, San Luis Obispo, in Partial Fulfillment of the Requirements for the Degree Master of Science in Electrical Engineering, by Yu-wei Lin, June 2010. © 2010 Yu-wei Lin. ALL RIGHTS RESERVED. COMMITTEE MEMBERSHIP. TITLE: Benchmarked hard disk drive performance characterization and optimization based on Design of Experiments techniques. AUTHOR: Yu-wei Lin. DATE SUBMITTED: June 2010. COMMITTEE CHAIR: John Y. Oliver, Assistant Professor. COMMITTEE MEMBER: Jianbiao Pan, Associate Professor. COMMITTEE MEMBER: James G. Harris, Professor Emeritus. Abstract: This paper describes an experimental study offered by Designs of Experiments (DOE) within the defined factor domains to evaluate the factor effects of simultaneous characteristics on the benchmarked hard disk drive performance by proposing well-organized statistical models for optimizations. The numerical relations of the obtained models make it possible to predict the behaviors of benchmarked disk performances as functions of significant factors, and so to optimize relevant criteria based on the needs. The experimental data sets were validated to be in satisfying agreement with predicted values by analyzing the response surface plots, contour plots, model equations, and optimization plots. The adequacy of the model equations was verified effectively by a prior generation disk drive within the same model family. The retained solutions for potential industrializations were the concluded response surface models of benchmarked disk performance optimizations. The comprehensive benchmarked performance modeling procedure for hard disk drives not only saves experimental costs on physical modeling but also leads to hard-to-find quality improvement solutions to manufacturing decisions. -

Výkonnost Výpočetních Systémů

Bankovní institut vysoká škola Praha, Department of Information Technology. Výkonnost výpočetních systémů (Performance of Computing Systems). Bachelor's thesis. Author: Martin Čečil, Information Technology, information systems administrator. Supervisor: Ing. Vladimír Beneš. Prague, April 2010. Declaration: I declare that I wrote this bachelor's thesis independently, using the cited literature and internet sources. Benešov, 9 April 2010, Martin Čečil. Acknowledgements: I would like to thank the supervisor of my bachelor's thesis, Ing. Vladimír Beneš, for his expert guidance, valuable advice and pertinent comments during the preparation of this thesis. Annotation: As the title suggests, this bachelor's thesis deals with the performance of computing systems. After reading it, the reader should have an idea of the performance of a computer's hardware components. The first part focuses on the history of computing systems: the origin of the first computers, their development and their performance. It is followed by the current state of hardware, chiefly a description of the individual components of desktop computers. The main emphasis is on performance, the ways it can be measured, and the comparison of different computer parts. The last chapter is devoted to the expected future development of computing systems.
Keywords: measurement, microprocessor, memory, computer, performance, motherboard. Contents: Declaration -

Best Benchmark Software for Ssd

Best benchmark software for ssd The Ultimate Guide To SSD Benchmark Software in compressible data as this achieves the highest performance results, although not always too realistic. AS SSD Benchmark uses incompressible data in testing SSDs. HD Tach is a rather old hard drive benchmarking tool from , but still produces good results for todays drives, although it needs to be run in. I usually tell people that there is no perfect benchmark software simply because they all mimic a particular file type and data flow. If I had to. Free benchmarking software. Speed test your SSD in less than a minute. size up your PC, identify hardware problems and explore the best upgrades. Here is the list of best Windows and Mac Free Tools to Check SSD Health and If you need a real benchmarking tool to test your hard disk, Crystal Disk This is an SSD/HDD Monitoring tool coming with analyzing software. What is the best FREE benchmark to test the speed of my HDD RAID config and my single SSD? CrystalDiskMark: Havvoc May 11, , AM. Thanks!Best online benchmark software vs. Download AS SSD Benchmark. With AS SSD Benchmark you can determine your SSD drive's performance by conducting several specific tests. CrystalDiskMark is a disk benchmark software. Main Window Software. CrystalDiskInfo · CrystalDiskMark · CrystalCPUID · CrystalMark R3 · CrystalDMI. Below, I'll introduce three of the best free Mac benchmarking tools, and all of its MacBook laptops away from traditional hard drives to SSDs. Can u make a new video for chosing ssd? best for the buck, I got a nvme Samsung ssd ran a. -

Mounting a Windows Software Raid As a Virtual Disk

University of Rhode Island DigitalCommons@URI Open Access Master's Theses 2012 Mounting a Windows Software Raid as a Virtual Disk Daniel N. Ducharme University of Rhode Island Follow this and additional works at: https://digitalcommons.uri.edu/theses Recommended Citation Ducharme, Daniel N., "Mounting a Windows Software Raid as a Virtual Disk" (2012). Open Access Master's Theses. Paper 1036. https://digitalcommons.uri.edu/theses/1036 This Thesis is brought to you for free and open access by DigitalCommons@URI. It has been accepted for inclusion in Open Access Master's Theses by an authorized administrator of DigitalCommons@URI. For more information, please contact [email protected]. MOUNTING A WINDOWS SOFTWARE RAID AS A VIRTUAL DISK BY DANIEL N. DUCHARME A THESIS SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTER OF SCIENCE IN COMPUTER SCIENCE UNIVERSITY OF RHODE ISLAND 2012 MASTER OF SCIENCE THESIS OF DANIEL N. DUCHARME APPROVED: Thesis Committee: Major Professor Dr. Victor Fay-Wolfe Dr. Gerard Baudet Dr. Qing Yang Nasser H. Zawia DEAN OF THE GRADUATE SCHOOL UNIVERSITY OF RHODE ISLAND 2012 ABSTRACT When an investigator attempts to bring a write blocked Windows dynamic disk online, Windows will refuse to mount it. This forces investigators to use the few tools that have built-in support for dealing with the RAID or to image the partition, and then mount the image. While imaging did not use to be an issue, with the rising sizes of disks available at low cost, it is becoming prohibitively expensive to image every software RAID. The solution is to mount the RAID through the use of a driver as a virtual disk.