Debugging Experience with CUDA-GDB and CUDA-MEMCHECK Geoff Gerfin Vyas Venkataraman CUDA Debugging Solutions

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

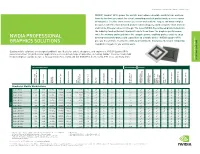

NVIDIA Professional Graphics Solutions | Line Card

PROFEssional GRAPHICS Solutions | MOBILE | LINE Card | JUN17 NVIDIA® Quadro® GPUs power the world’s most advanced mobile workstations and new form-factor devices to meet the visual computing needs of professionals across a range of industries. Creative and technical users can work with the largest and most complex designs, render the most detailed photo-realistic imagery, and develop the most intricate and lifelike VR experiences on-the-go. The latest NVIDIA Pascal based products build on the industry-leading Maxwell lineup with up to three times the graphics performance, twice the memory and nearly twice the compute power, enabling professionals to enjoy NVIDIA PROFESSIONAL desktop-level performance and capabilities on a mobile device. NVIDIA Quadro GPUs GRAPHICS SOLUTIONS give you the ultimate creative freedom, by providing the most powerful visual computing capabilities anywhere you want to work. Quadro mobile solutions are designed and built specifically for artists, designers, and engineers, NVIDIA Quadro GPUs power more than 100 professional applications across a broad range of industries, including Adobe® Creative Cloud, Avid Media Composer, Autodesk Suites, Dassault Systemes, CATIA and SOLIDWORKS, Siemens NX, PTC Creo, and many more. PROFESSIONAL GRAPHICS PROFESSIONAL CARD LINE SOLUTIONS GPU SPECIFICATIONS PERFORMANCE OPTIONS 1 2 NVIDIA CUDA NVIDIA CUDA Cores Processing GPU Memory Memory Bandwidth Memory Type Memory Interface TGP Max Power Consumption Display Port OpenGL Shader Model DirectX PCIe Generation Floating-Point Performance -

Przewodnik Ubuntu 14.04 LTS Trusty Tahr

Zespół Ubuntu.pl Przewodnik po Ubuntu 14.04 LTS Trusty Tahr wersja 1.0 16 maja 2014 Spis treści Spis treści Spis treści 1 Wstęp ....................2 4.15 LibreOffice — pakiet biurowy..... 77 1.1 O Ubuntu................2 4.16 Rhythmbox — odtwarzacz muzyki.. 77 1.2 Dlaczego warto zmienić system na 4.17 Totem — odtwarzacz filmów..... 79 Ubuntu?................3 5 Sztuczki z systemem Ubuntu ...... 81 2 Instalacja Ubuntu .............6 5.1 Wybór szybszych repozytoriów.... 81 2.1 Pobieranie obrazu instalatora.....6 5.2 Wyłączenie Global Menu....... 82 2.2 Nagrywanie pobranego obrazu....6 5.3 Minimalizacja aplikacji poprzez 2.3 Przygotowanie do instalacji......9 kliknięcie na jej ikonę w Launcherze. 82 2.4 Uruchomienie instalatora....... 11 5.4 Normalny wygląd pasków przewijania 83 2.5 Graficzny instalator Ubuntu..... 14 5.5 Prywatność............... 83 2.6 Partycjonowanie dysku twardego... 23 5.6 Unity Tweak Tool........... 84 2.7 Zaawansowane partycjonowanie.... 29 5.7 Ubuntu Tweak............. 84 2.8 Instalacja na maszynie wirtualnej.. 34 5.8 Instalacja nowych motywów graficznych 84 2.9 Aktualizacja z poprzedniego wydania 37 5.9 Instalacja zestawu ikon........ 85 2.10 Rozwiązywanie problemów z instalacją 37 5.10 Folder domowy na pulpicie...... 86 3 Pierwsze uruchomienie systemu .... 40 5.11 Steam.................. 86 3.1 Uruchomienie systemu Ubuntu.... 40 5.12 Wyłączenie raportowania błędów... 87 3.2 Ekran logowania............ 41 5.13 Odtwarzanie szyfrowanych płyt DVD 87 3.3 Rzut oka na pulpit Ubuntu...... 42 5.14 Przyspieszanie systemu poprzez 3.4 Instalacja oprogramowania...... 42 lepsze wykorzystanie pamięci..... 87 3.5 Rzeczy do zrobienia po instalacji 5.15 Oczyszczanie systemu........ -

Nvidia Quadro T1000

NVIDIA professional laptop GPUs power the world’s most advanced thin and light mobile workstations and unique compact devices to meet the visual computing needs of professionals across a wide range of industries. The latest generation of NVIDIA RTX professional laptop GPUs, built on the NVIDIA Ampere architecture combine the latest advancements in real-time ray tracing, advanced shading, and AI-based capabilities to tackle the most demanding design and visualization workflows on the go. With the NVIDIA PROFESSIONAL latest graphics technology, enhanced performance, and added compute power, NVIDIA professional laptop GPUs give designers, scientists, and artists the tools they need to NVIDIA MOBILE GRAPHICS SOLUTIONS work efficiently from anywhere. LINE CARD GPU SPECIFICATIONS PERFORMANCE OPTIONS 2 1 ® / TXAA™ Anti- ® ™ 3 4 * 5 NVIDIA FXAA Aliasing Manager NVIDIA RTX Desktop Support Vulkan NVIDIA Optimus NVIDIA CUDA NVIDIA RT Cores Cores Tensor GPU Memory Memory Bandwidth* Peak Memory Type Memory Interface Consumption Max Power TGP DisplayPort Open GL Shader Model DirectX PCIe Generation Floating-Point Precision Single Peak)* (TFLOPS, Performance (TFLOPS, Performance Tensor Peak) Gen MAX-Q Technology 3rd NVENC / NVDEC Processing Cores Processing Laptop GPUs 48 (2nd 192 (3rd NVIDIA RTX A5000 6,144 16 GB 448 GB/s GDDR6 256-bit 80 - 165 W* 1.4 4.6 7.0 12 Ultimate 4 21.7 174.0 Gen) Gen) 40 (2nd 160 (3rd NVIDIA RTX A4000 5,120 8 GB 384 GB/s GDDR6 256-bit 80 - 140 W* 1.4 4.6 7.0 12 Ultimate 4 17.8 142.5 Gen) Gen) 32 (2nd 128 (3rd NVIDIA RTX A3000 -

Nvidia Professional Graphics Solutions

Take on your most challenging digital prototyping, visual effects, and geophysical or architectural visualization workloads with the graphics horsepower, realism, and interactivity that only NVIDIA® Quadro® can deliver. It’s powered by the latest NVIDIA technologies, features large ultra-fast memory, and supports four NVIDIA PROFESSIONAL displays natively with resolutions of true 4K. Enjoy exceptional accuracy and photorealism in your creative workflow by using NVIDIA Iray® technology* to GRAPHICS SOLUTIONS reach new levels of interactive, physically based rendering. NVIDIA® Quadro® 3D Workstation NVIDIA® Tesla® Co-Processors NVIDIA® Multi-GPU Technology Quadro® Visual Computing Appliance Professional Graphics Solutions NVIDIA Tesla GPU parallel processors are tailored NVIDIA® Multi-GPU Technology leverages (VCA) Designed and built specifically for artists, to provide high-performance NVIDIA CUDA® combinations of Quadro and Tesla GPUs This is a powerful, turnkey, network-attached designers, and engineers, NVIDIA Quadro GPUs acceleration for your workflow. Designed for to intelligently scale the performance of appliance that harnesses the power of the highest power more than 100 professional applications professional systems and demanding professional your application and dramatically speed performing NVIDIA Quadro GPUs. It’s accessible across a broad range of industries. Professionals applications, Tesla GPUs perform the complex up your workflow. This delivers significant to anyone on the network, is easily integrated trust them to enable their best work using calculations required for CAE/CFD calculations, business impact across industries such into design workflows, and can scale to deliver applications such as Adobe® Creative Cloud, Avid seismic processing, ray-traced rendering, as Manufacturing, Media and Entertainment, noiseless, interactive global illumination. Media Composer, Autodesk Suites, Dassault compositing, image processing, physics, and and Energy Exploration. -

Datasheet Lifebook® Nh751 Notebook

DATASHEET FUJITSU LIFEBOOK NH751 NOTEBOOK DATASHEET LIFEBOOK® NH751 NOTEBOOK THE 2ND GENERATION INTEL® CORE™ I7 MOBILE PROCESSOR COUPLED WITH SWITCHABLE NVIDIA® GEFORCE® GT GRAPHICS; DELIVER STELLAR PERFORMANCE AND VISUALLY STUNNING GRAPHICS THAT BOTH GAMERS AND TECHNOLOGY ENTHUSIASTS CRAVE. The LIFEBOOK NH751 notebook takes portability to Boost Technology 2.0, which adapts processor speed a whole new level with its 17.3" High-Resolution for more performance when you need it. The 2nd LED backlit display. Genuine Windows® 7 Home generation Intel® Core™ i7 processor is the ideal Premium allows your notebook to transform into an choice for visibly smart performance at its best. uncompromising mobile media center. Dazzling imagery that seamlessly moves and responds is made possible with 8 GB of dual channel SIGHT AND SOUND DDR3 memory and 2 GB of dedicated memory on the The LIFEBOOK NH751 notebook is encased in a slim switchable NVIDIA GeForce GT graphics processor. 1.3" high-gloss black housing. The high resolution NVIDIA’s Optimus™ switchable graphics processor 17.3" HD+ frameless display, combined with an delivers optimized performance and battery life. You NVIDIA GeForce GT 525M graphics card with 2GB of can surf the web and get great battery life but when dedicated graphics, and 8GB of high-speed memory you need the extra horsepower for more intense will treat you to unparalleled performance, crisp graphics, Optimus automatically switches to the more photos, high-definition video playback, and smooth powerful NVIDIA GPU. gaming performance. From power-hungry 3D games to video editing, With the raw power of theLIFEBOOK NH751, you will with the LIFEBOOK NH751 notebook, you forget that your system is actually portable; movies won’t sacrifice power for portability. -

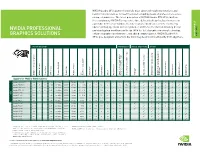

NVIDIA Professional Graphics Solutions | Line Card

NVIDIA Quadro GPUs power the world’s most advanced mobile workstations and new form-factor devices to meet the visual computing needs of professionals across a range of industries. The latest generation of NVIDIA Quadro RTX GPUs, built on the revolutionary NVIDIA Turing architecture, deliver desktop-level performance in a portable form factor. Combine the latest advancements in real-time ray tracing, advanced shading, and AI-based capabilities and tackle the most demanding design NVIDIA PROFESSIONAL and visualization workflows on the go. With the latest graphics memory technology, enhanced graphics performance, and added compute power, NVIDIA Quadro RTX QUADRO MOBILE QUADRO GRAPHICS SOLUTIONS CARD LINE GPUs give designers and artists the tools they need to work efficiently from anywhere. GPU SPECIFICATIONS PERFORMANCE VIRTUAL REALITY (VR) OPTIONS 4 1 2 5 RT Cores 3 ® Tensor Cores Tensor GPU Memory Memory Bandwidth Memory Type Memory Interface TGP Consumption Max Power DisplayPort NVIDIA CUDA Processing Cores Processing NVIDIA CUDA NVIDIA OpenGL Shader Model DirectX PCIe Generation Floating-Point Precision Single Peak) (TFLOPS, Performance Peak) (TOPS, Performance Tensor VR Ready Multi-Projection Simultaneous NVIDIA FXAA / TXAA Antialiasing Display Management NVIDIA nView Technology Video for GPUDirect Support Vulkan NVIDIA 3D Vision Pro NVIDIA Optimus Quadro for Mobile Workstations Quadro RTX 6000 4,608 72 576 24 GB 672 GBps GDDR6 384-bit 250 W 1.4 4.6 5.1 12.1 3 14.9 119.4 Quadro RTX 5000 3,072 48 384 16 GB 448 GBps GDDR6 256-bit 80 -

Precision 5560 Technical Guidebook

Precision 5560 Technical Guidebook Regulatory Model: P91F Regulatory Type: P91F002 June 2021 Rev. A00 Notes, cautions, and warnings NOTE: A NOTE indicates important information that helps you make better use of your product. CAUTION: A CAUTION indicates either potential damage to hardware or loss of data and tells you how to avoid the problem. WARNING: A WARNING indicates a potential for property damage, personal injury, or death. © 2021 Dell Inc. or its subsidiaries. All rights reserved. Dell, EMC, and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be trademarks of their respective owners. Contents Chapter 1: Views of Precision 5560............................................................................................... 5 Right....................................................................................................................................................................................... 5 Left..........................................................................................................................................................................................5 Top.......................................................................................................................................................................................... 6 Front....................................................................................................................................................................................... 7 Bottom.................................................................................................................................................................................. -

GPU Accelerated Approach to Numerical Linear Algebra and Matrix Analysis with CFD Applications

University of Central Florida STARS HIM 1990-2015 2014 GPU Accelerated Approach to Numerical Linear Algebra and Matrix Analysis with CFD Applications Adam Phillips University of Central Florida Part of the Mathematics Commons Find similar works at: https://stars.library.ucf.edu/honorstheses1990-2015 University of Central Florida Libraries http://library.ucf.edu This Open Access is brought to you for free and open access by STARS. It has been accepted for inclusion in HIM 1990-2015 by an authorized administrator of STARS. For more information, please contact [email protected]. Recommended Citation Phillips, Adam, "GPU Accelerated Approach to Numerical Linear Algebra and Matrix Analysis with CFD Applications" (2014). HIM 1990-2015. 1613. https://stars.library.ucf.edu/honorstheses1990-2015/1613 GPU ACCELERATED APPROACH TO NUMERICAL LINEAR ALGEBRA AND MATRIX ANALYSIS WITH CFD APPLICATIONS by ADAM D. PHILLIPS A thesis submitted in partial fulfilment of the requirements for the Honors in the Major Program in Mathematics in the College of Sciences and in The Burnett Honors College at the University of Central Florida Orlando, Florida Spring Term 2014 Thesis Chair: Dr. Bhimsen Shivamoggi c 2014 Adam D. Phillips All Rights Reserved ii ABSTRACT A GPU accelerated approach to numerical linear algebra and matrix analysis with CFD applica- tions is presented. The works objectives are to (1) develop stable and efficient algorithms utilizing multiple NVIDIA GPUs with CUDA to accelerate common matrix computations, (2) optimize these algorithms through CPU/GPU memory allocation, GPU kernel development, CPU/GPU communication, data transfer and bandwidth control to (3) develop parallel CFD applications for Navier Stokes and Lattice Boltzmann analysis methods. -

GPGPU for Embedded Systems White Paper

1 GPGPU for Embedded Systems White Paper Contents 1. Introduction .............................................................................................................................. 2 2. Existing Challenges in Modern Embedded Systems .................................................................... 2 2.1. Not Enough Calculation Power ........................................................................................... 2 2.2. Power Consumption, Form Factor and Heat Dissipation ..................................................... 3 2.3. Generic Approach .............................................................................................................. 3 3. GPGPU Benefits in Embedded Systems ...................................................................................... 3 3.1. GPU Accelerated Computing .............................................................................................. 3 3.2. CUDA and OpenCL Frameworks - Definitions ...................................................................... 4 3.3. CUDA Architecture and Processing Flow ............................................................................. 5 3.4. CUDA Benchmark on Embedded System ............................................................................ 6 3.4.1. “Nbody” - CUDA N-Body Simulation ............................................................................... 6 1.1.1. SobolQRNG - Sobol Quasirandom Number Generator .................................................... 8 1.2. Switchable Graphics -

201804 Aetina Catalogue.Indd

1 CONTENTS About Aetina ................................................................................................ 3 Business Commitment ................................................................................. 4 Industrial / Embedded Focus ........................................................................ 6 Do the Right Technology : Built for Extreme Reliability .............................. 8 Customization Service ................................................................................. 10 Industrial Graphics Cards General Purpose Graphics Cards ................................................................ 11 Selection Guide ................................................................................. 12 PCI ..................................................................................................... 14 PCI Express ........................................................................................ 15 Rugged GPGPU Boards ............................................................................... 23 Selection Guide ................................................................................. 24 XMC .............................................................. ..................................... 25 VPX .............................................................. ...................................... 27 CompactPCI Express ......................................................................... 28 Embedded MXM GPU Modules ................................................................. -

NVIDIA Professional Graphics Solutions | Line Card

Take on your most challenging digital prototyping, visual effects, and geophysical or architectural visualization workloads with the graphics horsepower, realism, and interactivity that only NVIDIA® Quadro® can deliver. It’s powered by the latest NVIDIA technologies, features large ultra-fast memory, and supports four NVIDIA PROFESSIONAL displays natively with resolutions of true 4K. Enjoy exceptional accuracy and photorealism in your creative workflow by using NVIDIA Iray® technology* to GRAPHICS SOLUTIONS reach new levels of interactive, physically based rendering. NVIDIA® Quadro® 3D Workstation NVIDIA® Tesla® Co-Processors NVIDIA® Multi-GPU Technology Quadro® Visual Computing Appliance Professional Graphics Solutions NVIDIA Tesla GPU parallel processors are tailored NVIDIA® Multi-GPU Technology leverages (VCA) Designed and built specifically for artists, to provide high-performance NVIDIA CUDA® combinations of Quadro and Tesla GPUs This is a powerful, turnkey, network-attached designers, and engineers, NVIDIA Quadro GPUs acceleration for your workflow. Designed for to intelligently scale the performance of appliance that harnesses the power of the highest power more than 100 professional applications professional systems and demanding professional your application and dramatically speed performing NVIDIA Quadro GPUs. It’s accessible across a broad range of industries. Professionals applications, Tesla GPUs perform the complex up your workflow. This delivers significant to anyone on the network, is easily integrated trust them to enable their best work using calculations required for CAE/CFD calculations, business impact across industries such into design workflows, and can scale to deliver applications such as Adobe® Creative Cloud, Avid seismic processing, ray-traced rendering, as Manufacturing, Media and Entertainment, noiseless, interactive global illumination. Media Composer, Autodesk Suites, Dassault compositing, image processing, physics, and and Energy Exploration. -

Several Tips on How to Choose a Suitable Computer

Several tips on how to choose a suitable computer This document provides more specific information on how to choose a computer that will be suitable for scanning and post- processing of your data with Artec Studio. On our web-site you can find general requirements, in this document we’ll try to cover all of them and provide more detailed explanations. It also contains some tested configurations and some tips and tricks to make your hardware perform as fast as possible. 1 Table of Contents General requirements for hardware Processor 1.1. Recommended processors and microarchitecture 1.2. What about Xeon? 1.3. What about AMD processors? RAM USB 3.1. General recommendations 3.2. USB 3.0 3.3. Connecting several scanners/sensors to 1 computer Videocard 4.1. What is supported and what is definitely not supported? 4.2. What about Quadro cards? 4.3. What about SLI? 4.4. Do you support laptops with NVIDIA Optimus technology? 4.5. Stereo support 4.6. Real-time fusion requirements 4.7. GCTest OS 5.1. What is recommended and what is not supported? 5.2. Do you have a version for MacOS? Several tips to increase performance 6.1. Desktop machines 6.2. Laptops 6.3. Quadro cards Frequently asked questions 7.1. What laptops does Artec use? Can you recommend any particular model? 7.2. Can I test your software? 2 General requirements for hardware Processor: I5 or I7 recommended Memory: 8-12 Gb for Artec Eva / 12-16 Gb for Artec Spider USB: 1 USB 2.0 port for a regular scanner.