Differences and Similarities Between Journal Impact Factor and CiteScore




Recommended publications


Sci-Hub Provides Access to Nearly All Scholarly Literature

Sci-Hub provides access to nearly all scholarly literature. A DOI-citable version of this manuscript is available at https://doi.org/10.7287/peerj.preprints.3100. This manuscript was automatically generated from greenelab/scihub-manuscript@51678a7 on October 12, 2017. Submit feedback on the manuscript at git.io/v7feh or on the analyses at git.io/v7fvJ.

Authors
• Daniel S. Himmelstein 0000-0002-3012-7446 · dhimmel · dhimmel, Department of Systems Pharmacology and Translational Therapeutics, University of Pennsylvania · Funded by GBMF4552
• Ariel Rodriguez Romero 0000-0003-2290-4927 · arielsvn · arielswn, Bidwise, Inc
• Stephen Reid McLaughlin 0000-0002-9888-3168 · stevemclaugh · SteveMcLaugh, School of Information, University of Texas at Austin
• Bastian Greshake Tzovaras 0000-0002-9925-9623 · gedankenstuecke · gedankenstuecke, Department of Applied Bioinformatics, Institute of Cell Biology and Neuroscience, Goethe University Frankfurt
• Casey S. Greene 0000-0001-8713-9213 · cgreene · GreeneScientist, Department of Systems Pharmacology and Translational Therapeutics, University of Pennsylvania · Funded by GBMF4552

PeerJ Preprints | https://doi.org/10.7287/peerj.preprints.3100v2 | CC BY 4.0 Open Access | rec: 12 Oct 2017, publ: 12 Oct 2017

Abstract
The website Sci-Hub provides access to scholarly literature via full text PDF downloads. The site enables users to access articles that would otherwise be paywalled. Since its creation in 2011, Sci-Hub has grown rapidly in popularity. However, until now, the extent of Sci-Hub’s coverage was unclear. As of March 2017, we find that Sci-Hub’s database contains 68.9% of all 81.6 million scholarly articles, which rises to 85.2% for those published in toll access journals.

The Journal Ranking System Undermining the Impact of Brazilian Science

bioRxiv preprint doi: https://doi.org/10.1101/2020.07.05.188425; this version posted July 6, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license.

QUALIS: The journal ranking system undermining the impact of Brazilian science

Rodolfo Jaffé, Instituto Tecnológico Vale, Belém-PA, Brazil. Email: [email protected]

Abstract
A journal ranking system called QUALIS was implemented in Brazil in 2009, intended to rank graduate programs from different subject areas and promote selected national journals. Since this system uses a complicated suite of criteria (differing among subject areas) to group journals into discrete categories, it could potentially create incentives to publish in low-impact journals ranked highly by QUALIS. Here I assess the influence of the QUALIS journal ranking system on the global impact of Brazilian science. Results reveal a steeper decrease in the number of citations per document since the implementation of this QUALIS system, compared to the top Latin American countries publishing more scientific articles. All the subject areas making up the QUALIS system showed some degree of bias, with social sciences being usually more biased than natural sciences. Lastly, the decrease in the number of citations over time proved steeper in a more biased area, suggesting a faster shift towards low-impact journals ranked highly by QUALIS.

Conference Accreditation and Need of a Bibliometric Measure to Distinguish Predatory Conferences

Publications, Viewpoint

Conference Accreditation and Need of a Bibliometric Measure to Distinguish Predatory Conferences

Pooyan Makvandi 1,*, Anahita Nodehi 2 and Franklin R. Tay 3
1 Centre for Materials Interfaces, Istituto Italiano di Tecnologia, Viale Rinaldo Piaggio 34, 56025 Pontedera, Italy
2 Department of Statistics, Computer Science, Applications (DiSIA), Florence University, Viale Morgagni 59, 50134 Florence, Italy; Anahita.nodehi@unifi.it
3 The Graduate School, Augusta University, Augusta, GA 30912, USA; [email protected]
* Correspondence: [email protected] or [email protected]

Abstract: Academic conferences offer scientists the opportunity to share their findings and knowledge with other researchers. However, the number of conferences is rapidly increasing globally and many unsolicited e-mails are received from conference organizers. These e-mails take time for researchers to read and ascertain their legitimacy. Because not every conference is of high quality, there is a need for young researchers and scholars to recognize the so-called “predatory conferences” which make a profit from unsuspecting researchers without the core purpose of advancing science or collaboration. Unlike journals that possess accreditation indices, there is no appropriate accreditation for international conferences. Here, a bibliometric measure is proposed that enables scholars to evaluate conference quality before attending.

Keywords: conference indicator; conference impact factor; conference accreditation; bibliometric measure

Citation: Makvandi, P.; Nodehi, A.; Tay, F.R. Conference Accreditation and Need of a Bibliometric Measure to Distinguish Predatory Conferences. Publications 2021, 9, 16. https://doi.org/10.3390/publications9020016

1. Introduction
Academic conferences offer scientists the opportunity to share their findings and knowledge with other researchers. Conferences are organized by institutions or societies, and in rare cases, by individuals [1].

Citation Analysis: Web of Science, Scopus

Citation analysis: Web of Science, Scopus
Masoud Mohammadi, Golestan University of Medical Sciences, Information Management and Research Network

Citation Analysis
• Citation analysis is the study of the impact and assumed quality of an article, an author, or an institution based on the number of times works and/or authors have been cited by others.
• Citation analysis is the examination of the frequency, patterns, and graphs of citations in documents. It uses the pattern of citations, links from one document to another document, to reveal properties of the documents. A typical aim would be to identify the most important documents in a collection. A classic example is that of the citations between academic articles and books. The judgements produced by judges of law to support their decisions refer back to judgements made in earlier cases, so citation analysis in a legal context is important. Another example is provided by patents, which contain prior art: citations of earlier patents relevant to the current claim.

Citation Databases
• Citation databases are databases that have been developed for evaluating publications. The citation databases enable you to count citations and check, for example, which articles or journals are the most cited ones.
• In a citation database you get information about who has cited an article and how many times an author has been cited. You can also list all articles citing the same source.
• The most important citation databases are:
  • “Web of Science”
  • “Scopus”
  • “Google Scholar”

Web of Science
• Web of Science is owned and produced by Thomson Reuters. WoS is composed of three databases containing citations from international scientific journals:
  • Arts & Humanities Citation Index - AHCI
  • Social Sciences Citation Index - SSCI
  • Science Citation Index - SCI
• Journal Coverage:
  • Aims to include the best journals of all fields.

Introduction to Bibliometrics

Introduction to Bibliometrics
Bibliometrics: A new service of the university library to support academic research
Carolin Ahnert and Martin Bauschmann
This work is licensed under: Creative Commons Attribution 4.0 International License.
Chemnitz ∙ 16.05.17 ∙ Carolin Ahnert, Martin Bauschmann www.tu-chemnitz.de

What is bibliometrics? – short definition
„Bibliometrics is the statistical analysis of bibliographic data, commonly focusing on citation analysis of research outputs and publications, i.e. how many times research outputs and publications are being cited“ (University of Leeds, 2014)
Quantitative analysis and visualisation of scientific research output based on publication and citation data

What is bibliometrics? – a general classification

Descriptive bibliometrics
• Cognition interests: identification of relevant research topics or thematic trends; identification of key actors; exploration of cooperation patterns and communication structures
• Examined constructs: interdisciplinarity; internationality; topical cluster; research fronts/knowledge bases
• Methods: social network analysis (co-author, co-citation, co-word-networks etc.)

Evaluative bibliometrics
• Cognition interests: evaluation of research performance (groups of researchers, institutes, universities, countries); assessment of publication venues (especially journals)
• Examined constructs: productivity → visibility → impact → quality?
• Indicators: number of articles, citation rate, h-Index, ...

Understanding Research Metrics

Understanding research metrics

Introduction
Discover how to monitor your journal’s performance through a range of research metrics. This page will take you through the “basket of metrics”, from Impact Factor to usage, and help you identify the right research metrics for your journal.

What are research metrics?
Research metrics are the fundamental tools used across the publishing industry to measure performance, both at journal- and author-level. For a long time, the only tool for assessing journal performance was the Impact Factor – more on that in a moment. Now there is a range of different research metrics available. This “basket of metrics” is growing every day, from the traditional Impact Factor to Altmetrics, h-index, and beyond. But what do they all mean? How is each metric calculated? Which research metrics are the most relevant to your journal? And how can you use these tools to monitor your journal’s performance? For a quick overview, download our simple guide to research metrics. Or keep reading for a more in-depth look at what’s in the “basket of metrics” and how to interpret it.

Citation-based metrics

Impact Factor
What is the Impact Factor? The Impact Factor is probably the most well-known metric for assessing journal performance. Designed to help librarians with collection management in the 1960s, it has since become a common proxy for journal quality. The Impact Factor is a simple research metric: it’s the average number of citations received by articles in a journal within a two-year window.
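As a rough illustration of the two-year average described in this excerpt, the sketch below divides the citations received by a journal's articles from a two-year window by the number of articles in that window. The function name, parameter names, and sample figures are hypothetical, and the exact rules for which items and citations count are not given in the excerpt.

```python
def two_year_impact_factor(citations_to_window: int, articles_in_window: int) -> float:
    """Average citations per article for a two-year publication window.

    Both inputs are assumed to be counted already; what qualifies as a
    citable article is outside the scope of this sketch.
    """
    if articles_in_window <= 0:
        raise ValueError("the window must contain at least one article")
    return citations_to_window / articles_in_window


# Hypothetical journal: 200 articles in the window, cited 500 times in total.
example_if = two_year_impact_factor(500, 200)
print(example_if)  # 2.5
```

Because the result is a ratio, a journal does not score higher simply by publishing more articles.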

Scientometric Indicators and Their Exploitation by Journal Publishers

Scientometric indicators and their exploitation by journal publishers
Name: Pranay Parsuram
Student number: 2240564
Course: Master’s Thesis (Book and Digital Media Studies)
Supervisor: Prof. Fleur Praal
Second reader: Dr. Adriaan van der Weel
Date of completion: 12 July 2019
Word count: 18177 words

Contents
1. Introduction
2. Scientometric Indicators
2.1. Journal Impact Factor
2.2. h-Index
2.3. Eigenfactor™
2.4. SCImago Journal Rank
2.5. Source Normalized Impact Per Paper
2.6. CiteScore
2.6. General Limitations of Citation Count
3. Conceptual Framework

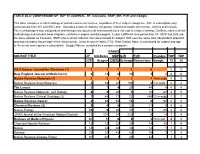

TABLE 46.4: Comparison of Top 10 Journal JIF, CiteScore, SNIP, SJR, FCR and Google

TABLE 46.4: COMPARISON OF TOP 10 JOURNAL JIF, CiteScore, SNIP, SJR, FCR and Google

This table compares current rankings of journals across six sources, regardless of their subject categories. JCR is subscription only, uses journals from SCI and SSCI, and calculates a ratio of citations to citable documents (articles and reviews). The methodology is size independent: having more documents and citations does not lead to a higher ranking. CiteScore uses a similar methodology and includes book chapters, conference papers and data papers. It uses a different time period than JIF. SNIP and SJR use the same dataset as CiteScore. SNIP uses a similar ratio but normalizes based on subject. SJR uses the same size-independent approach and also considers the prestige of the citing journal. It has its own H-Index. FCR, Field Citation Ratio, is normalized for subject and age of the article and requires a subscription. Google Metrics are compiled by a computer program.

| Source title | JIF (JCR) | CiteScore (Scopus) | SNIP (CWTS) | SJR (Scimago) | FCR (Dimensions) | h5-index (Google) | Ranks: top 10 | Ranks: top 20 |
|---|---|---|---|---|---|---|---|---|
| CA: A Cancer Journal for Clinicians (1) | 1 | 1 | 1 | 1 | 6 | | 5 | 5 |
| New England Journal of Medicine (2) | 2 | 13 | 8 | 19 | 1 | 2 | 4 | 6 |
| Nature Reviews Materials (1) | 3 | 3 | 5 | 3 | 4 | 16 in subc | 5 | 5 |
| Nature Reviews Drug Discovery | 4 | 53 | 22 | 156 | 5 | 1 in subc | 2 | 2 |
| The Lancet | 5 | 7 | 4 | 29 | 44 | 4 | 4 | 4 |
| Nature Reviews Molecular Cell Biology | 6 | 8 | 20 | 5 | 15 | 67 | 3 | 5 |
| Nature Reviews Clinical Oncology (3) | 7 | 44 | 71 | 72 | 249 | 13 in subc | 1 | 1 |
| Nature Reviews Cancer | 8 | 10 | 18 | 10 | 9 | 90 | 4 | 5 |
| Chemical Reviews (2) | 9 | 4 | 9 | 12 | 11 | 9 | 4 | 6 |

Nature Energy ...

Choosing the Right Journal for Your Research: A Comprehensive Guide for Researchers

Choosing the right journal for your research: A comprehensive guide for researchers
Guidance, developments, news, and ideas for Taylor & Francis authors
@tandfonline · TaylorandFrancisGroup · authorservices.taylorandfrancis.com

CONTENTS
/ INTRODUCTION
/ BEFORE YOU START
/ CHOOSING A JOURNAL CHECKLIST
/ WHERE TO START WHEN CHOOSING A JOURNAL
  Do some desk research
  Speak to colleagues, supervisors and your librarians
  Explore journal suggester tools
  Search calls for papers
/ NARROWING DOWN YOUR SHORTLIST
  Get familiar with the journal’s content
  Review the journal aims and scope
  Understand the journal’s audience
  Review journal affiliations, the editorial board, and previous authors
  Get to know the journal’s policies and procedures
  Check which article formats are accepted
  Understand your publishing options
  Ask about journal metrics and discoverability
/ CHOOSING OPEN ACCESS
/ UNDERSTANDING JOURNAL METRICS
  Types of journal metric
  Impact Factor
  5-year Impact Factor
  SNIP (Source Normalized Impact Per Paper)
  SJR (Scimago Journal Rank)
  CiteScore
  Eigenfactor
  Cited Half-Life
  Altmetric Attention Score
  How much should you rely on metrics when choosing a journal?
/ GETTING TO GRIPS WITH JOURNAL INDEXING AND DISCOVERABILITY
  Abstracting and indexing
  Discovery Services
/ IS THE JOURNAL TRUSTWORTHY? – HOW TO AVOID ‘PREDATORY PUBLISHERS’
  Think. Check. Submit.
  Quality open access publishing
/ WHERE TO NEXT?

How to Turn PhD Thesis Into an Article?

How to turn PhD thesis into an article?
Presented by Ozge Sertdemir [email protected]

AGENDA
o What is a journal article?
o Differences of journal article from thesis
o Identifying the right journal
o Typical Journal Article Structure
o How to build your article
o Tips

What is a Journal Article?
Many first-time authors use the research conducted as part of their PhD as a basis for a journal article. While this is logical, the requirements of a journal article differ significantly from those of a thesis. Journal articles focus on specific research, are written by experts and other professionals, and are usually scholarly and peer reviewed.

Different Audience, Different Standards
▪ Dissertation committees assess whether a student’s work has fulfilled program outcomes and requirements, not whether it’s ready for publication or even widespread release.
▪ Dissertation review certifies the student’s capabilities within the context of the discipline and the institution. (Hawkins AR, et al, The Journal of Academic Librarianship)
Editors and reviewers of peer-reviewed journals are experts in their fields and well versed in scholarly communication. Their task is to assess whether papers and projects further the knowledge and thus should be published!

Differences between Thesis and Article

| THESIS | ARTICLE |
|---|---|
| Meets academic requirements | Meets journal requirements |
| Reviewed by selected committee members | Reviewed by panel of blind reviewers |
| Chapters | Sections |
| Lengthy, no word limits | Word limits |
| Table of Contents | Manuscript format |
| Lengthy research of literature | Succinct research of literature |
| Institutional Review Board approval described in detail | Institutional Review Board described in 1-3 sentences |
| Description and copies of tools used | Essential and succinct tool information |
| All findings presented | Selected findings presented |
| Verb tenses vary | Verb tenses are consistent |

Peer-Review Process
The editorial process selects suitable articles for publication and publishes papers in one standard format.

Understanding the Metrics Used to Assess the Quality of Journals

International Journal of Community Medicine and Public Health
Pathak VK et al. Int J Community Med Public Health. 2019 Dec;6(12):5368-5371
http://www.ijcmph.com pISSN 2394-6032 | eISSN 2394-6040
DOI: http://dx.doi.org/10.18203/2394-6040.ijcmph20195501

Letter to the Editor
Understanding the metrics used to assess the quality of journals

Sir,

Various databases offer access to thousands of academic journals. A miscellaneous picture with respect to quality, scientific influence and prestige is presented by umpteen numbers of journals present. Authors wishing to publish their research aim to publish in journals with the highest ratings and are ultimately in no man’s land.1

Traditionally, measures for journal ranking or evaluations have been provided simply through institutional lists established by academic leaders or through committee votes. An approach of this kind is highly politicized and does not reflect actual prestige and quality. This also causes the problem of highly disparate evaluations across institutions.2,3

Though quality and impact of the journal is usually apparent through how widely it is read, how often it is cited, and its perception in the community, several metrics have been proposed to assess the quality of the increasing quantity of journals.4

Most indices are based on citation counts, though they ...

Limitations
• Impact factor is derived from journals indexed in Web of science and so no other journals can have an impact factor.5,6
• Since the impact factor only looks at citations in the current year to articles in the previous two years, it only works well for disciplines in which rapid citation is the standard.7
• It doesn’t take into account disciplinary differences in expected numbers of citations.7
• Impact factor cannot be computed for those journals that are <2 years old.5

Five year impact factor
A modification of impact factor is which articles of past five years are considered in place of two years.8

H-index
The H-index is a measure of both quantity (number of publications (Np)) and quality (number of citations for each publication (Nc)).
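The excerpt describes the H-index only as combining publication count (Np) and citations per publication (Nc). A minimal sketch, assuming the commonly used definition (the largest h such that h publications each have at least h citations), is shown below. The function name and sample citation counts are hypothetical.

```python
def h_index(citation_counts: list[int]) -> int:
    """Largest h such that h publications have at least h citations each."""
    # Rank publications from most cited to least cited.
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # this publication still supports an index of `rank`
        else:
            break  # all remaining publications have even fewer citations
    return h


# Hypothetical author with five publications.
print(h_index([10, 8, 5, 4, 3]))  # 4
```

In the sample, four publications have at least four citations each, but there are not five with at least five, so the index is 4.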

Web of Science (WoS) and Scopus: The Titans of Bibliographic Information in Today's Academic World

Publications, Review

Web of Science (WoS) and Scopus: The Titans of Bibliographic Information in Today’s Academic World

Raminta Pranckutė
Scientific Information Department, Library, Vilnius Gediminas Technical University, Saulėtekio Ave. 14, LT-10223 Vilnius, Lithuania; [email protected]

Abstract: Nowadays, the importance of bibliographic databases (DBs) has increased enormously, as they are the main providers of publication metadata and bibliometric indicators universally used both for research assessment practices and for performing daily tasks. Because the reliability of these tasks firstly depends on the data source, all users of the DBs should be able to choose the most suitable one. Web of Science (WoS) and Scopus are the two main bibliographic DBs. The comprehensive evaluation of the DBs’ coverage is practically impossible without extensive bibliometric analyses or literature reviews, but most DB users do not have bibliometric competence and/or are not willing to invest additional time for such evaluations. Apart from that, the convenience of the DB’s interface, performance, provided impact indicators and additional tools may also influence the users’ choice. The main goal of this work is to provide all of the potential users with an all-inclusive description of the two main bibliographic DBs by gathering, in one place, the findings presented in the most recent literature and the information provided by the owners of the DBs. This overview should aid all stakeholders employing publication and citation data in selecting the most suitable DB.

Keywords: WoS; Scopus; bibliographic databases; comparison; content coverage; evaluation; citation impact indicators

Citation: Pranckutė, R. Web of Science (WoS) and Scopus: The Titans ...