Torch: Tensors and Neural Networks with 'GPU' Acceleration

Recommended publications

A Practical Guide for Improving Transparency and Reproducibility in Neuroimaging Research Krzysztof J

bioRxiv preprint first posted online Feb. 12, 2016; doi: http://dx.doi.org/10.1101/039354. The copyright holder for this preprint (which was not peer-reviewed) is the author/funder. It is made available under a CC-BY 4.0 International license. A practical guide for improving transparency and reproducibility in neuroimaging research. Krzysztof J. Gorgolewski and Russell A. Poldrack, Department of Psychology, Stanford University. Abstract: Recent years have seen an increase in alarming signals regarding the lack of replicability in neuroscience, psychology, and other related fields. To avoid a widespread crisis in neuroimaging research and consequent loss of credibility in the public eye, we need to improve how we do science. This article aims to be a practical guide for researchers at any stage of their careers that will help them make their research more reproducible and transparent while minimizing the additional effort that this might require. The guide covers three major topics in open science (data, code, and publications) and offers practical advice as well as highlighting advantages of adopting more open research practices that go beyond improved transparency and reproducibility. Introduction: The question of how the brain creates the mind has captivated humankind for thousands of years. With recent advances in human in vivo brain imaging, we now have effective tools to peek into biological underpinnings of mind and behavior. Even though we are no longer constrained just to philosophical thought experiments and behavioral observations (which undoubtedly are extremely useful), the question at hand has not gotten any easier. These powerful new tools have largely demonstrated just how complex the biological bases of behavior actually are.

Reproducible Reports with Knitr and R Markdown

Reproducible Reports with knitr and R Markdown https://dl.dropboxusercontent.com/u/15335397/slides/2014-UPe... Yihui Xie, RStudio, 11/22/2014 @ UPenn, The Warren Center. An appetizer: Run the app below (your web browser may request access to your microphone). http://bit.ly/upenn-r-voice install.packages("shiny") Or just use this: https://yihui.shinyapps.io/voice/ Overview and Introduction: I know you click, click, Ctrl+C and Ctrl+V. But imagine you hear these words after you finished a project: Please do that again! (sorry we made a mistake in the data, want to change a parameter, and yada yada) http://nooooooooooooooo.com Basic ideas of dynamic documents · code + narratives = report · i.e. computing languages + authoring languages. We built a linear regression model.

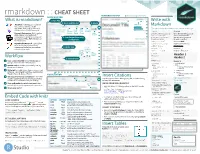

R Markdown Cheat Sheet I

R Markdown Cheat Sheet (rmarkdown 0.2.50, updated 8/14; learn more at rmarkdown.rstudio.com)

1. Workflow: R Markdown is a format for writing reproducible, dynamic reports with R. Use it to embed R code and results into slideshows, pdfs, html documents, Word files and more. To make a report: i. Open - Open a file that uses the .Rmd extension. ii. Write - Write content with the easy to use R Markdown syntax. iii. Embed - Embed R code that creates output to include in the report. iv. Render - Replace R code with its output and transform the report into a slideshow, pdf, html or ms Word file.

[Figure: an .Rmd file holding a report with the R chunk `hist(co2)`, rendered as a report with a plot; output formats labeled Microsoft Word, Reveal.js, ioslides, Beamer.]

2. Open File: Start by saving a text file with the extension .Rmd, or open an RStudio Rmd template. • In the menu bar, click File ▶ New File ▶ R Markdown… • A window will open. Select the class of output you would like to make with your .Rmd file • Select the specific type of output to make with the radio buttons (you can change this later) • Click OK

3. Markdown: Next, write your report in plain text. Use markdown syntax to describe how to format text in the final report. Syntax: Plain text; End a line with two spaces to start a new paragraph; `*italics*` and `_italics_`; `**bold**` and `__bold__`; `superscript^2^`; `~~strikethrough~~`; `[link](www.rstudio.com)`; `# Header 1`; `## Header 2`; `### Header 3`; `#### Header 4`; `##### Header 5`; `###### Header 6`

HGC: a Fast Hierarchical Graph-Based Clustering Method

Package ‘HGC’, September 27, 2021
Type: Package
Title: A fast hierarchical graph-based clustering method
Version: 1.1.3
Description: HGC (short for Hierarchical Graph-based Clustering) is an R package for conducting hierarchical clustering on large-scale single-cell RNA-seq (scRNA-seq) data. The key idea is to construct a dendrogram of cells on their shared nearest neighbor (SNN) graph. HGC provides functions for building graphs and for conducting hierarchical clustering on the graph. The users with old R version could visit https://github.com/XuegongLab/HGC/tree/HGC4oldRVersion to get HGC package built for R 3.6.
License: GPL-3
Encoding: UTF-8
SystemRequirements: C++11
Depends: R (>= 4.1.0)
Imports: Rcpp (>= 1.0.0), RcppEigen (>= 0.3.2.0), Matrix, RANN, ape, dendextend, ggplot2, mclust, patchwork, dplyr, grDevices, methods, stats
LinkingTo: Rcpp, RcppEigen
Suggests: BiocStyle, rmarkdown, knitr, testthat (>= 3.0.0)
VignetteBuilder: knitr
biocViews: SingleCell, Software, Clustering, RNASeq, GraphAndNetwork, DNASeq
Config/testthat/edition: 3
NeedsCompilation: yes
git_url: https://git.bioconductor.org/packages/HGC
git_branch: master
git_last_commit: 61622e7
git_last_commit_date: 2021-07-06
Date/Publication: 2021-09-27
Author: Zou Ziheng [aut], Hua Kui [aut], XGlab [cre, cph]
Maintainer: XGlab <[email protected]>
R topics documented: CKNN.Construction, FindClusteringTree, HGC.dendrogram, HGC.parameter, HGC.PlotARIs, HGC.PlotDendrogram, HGC.PlotParameter, KNN.Construction, MST.Construction, PMST.Construction, Pollen, RNN.Construction, SNN.Construction
CKNN.Construction: Building Unweighted Continuous K Nearest Neighbor Graph
Description: This function builds a Continuous K Nearest Neighbor (CKNN) graph in the input feature space using Euclidean distance metric.

Rmarkdown : : CHEAT SHEET

rmarkdown : : CHEAT SHEET

What is rmarkdown? .Rmd files · Develop your code and ideas side-by-side in a single document. Run code as individual chunks or as an entire document. Dynamic Documents · Knit together plots, tables, and results with narrative text. Render to a variety of formats like HTML, PDF, MS Word, or MS Powerpoint. Reproducible Research · Upload, link to, or attach your report to share. Anyone can read or run your code to reproduce your work.

[Figure: RStudio IDE screenshot showing the SOURCE EDITOR and the RENDERED OUTPUT, with numbered callouts: 1. New File; 2. Embed Code; 3. Write Text; 4. Set Output Format(s) and Options; 5. Save and Render; 6. Share (publish to rpubs.com, shinyapps.io, RStudio Connect). Toolbar labels: find in document, set preview location, insert code chunk, go to code chunk, run code chunk(s), show outline, reload document, file path to output document, run all previous chunks, modify chunk options, run current chunk.]

Write with Markdown: The syntax on the left renders as the output on the right. Plain text. End a line with two spaces to start a new paragraph. Also end with a backslash `\` to make a new line. `*italics*` and `**bold**`; `superscript^2^/subscript~2~`; `~~strikethrough~~`; escaped: `\* \_ \\`; endash: `--`, emdash: `---`; `# Header 1`; `## Header 2`; ...; `###### Header 6`

Workflow: 1. Open a new .Rmd file in the RStudio IDE by going to File > New File > R Markdown.

Statistics with Free and Open-Source Software

Statistics with Free and Open-Source Software. Wolfgang Viechtbauer, Maastricht University, http://www.wvbauer.com

Free and Open-Source Software • the four essential freedoms according to the FSF: • to run the program as you wish, for any purpose • to study how the program works, and change it so it does your computing as you wish • to redistribute copies so you can help your neighbor • to distribute copies of your modified versions to others • access to the source code is a precondition for this • think of ‘free’ as in ‘free speech’, not as in ‘free beer’ • maybe the better term is: ‘libre’

General Purpose Statistical Software • proprietary (the big ones): SPSS, SAS/JMP, Stata, Statistica, Minitab, MATLAB, Excel, … • FOSS (a selection): R, Python (NumPy/SciPy, statsmodels, pandas, …), PSPP, SOFA, Octave, LibreOffice Calc, Julia, …

Popularity of Statistical Software • difficult to define/measure (job ads, articles, books, blogs/posts, surveys, forum activity, …) • maybe the most comprehensive comparison: http://r4stats.com/articles/popularity/ • for programming languages in general: TIOBE Index, PYPL, GitHut, Language Popularity Index, RedMonk Rankings, IEEE Spectrum, … • note that users of certain software may be heavily biased in their opinion

What is R? • R is a system for data manipulation, statistical and numerical analysis, and graphical display • simply put: a statistical programming language • freely available under the GNU General Public License (GPL) → open-source

History of S and R • … it began May 5, 1976 at:

Writing Reproducible Reports Knitr with R Markdown

Writing reproducible reports: knitr with R Markdown. Karl Broman, Biostatistics & Medical Informatics, UW–Madison. kbroman.org, github.com/kbroman, @kwbroman. Course web: kbroman.org/AdvData. How many simulation replicates? • To estimate a p-value? • To estimate some other quantity? • To estimate power? Data analysis reports • Figures/tables + email • Static Word document • LaTeX + R → PDF • R Markdown = knitr + Markdown → Web page. What if the data change? What if you used the wrong version of the data? rmarkdown.rstudio.com; knitr in a knutshell: kbroman.org/knitr_knutshell. knitr code chunks. Input to knitr: We see that this is an intercross with `r nind(sug)` individuals. There are `r nphe(sug)` phenotypes, and genotype data at `r totmar(sug)` markers across the `r nchr(sug)` autosomes. The genotype data is quite complete. ```{r summary_plot, fig.height=8} plot(sug) ``` Output from knitr: We see that this is an intercross with 163 individuals. There are 6 phenotypes, and genotype data at 93 markers across the 19 autosomes. The genotype data is quite complete. ```r plot(sug) ``` html: <!DOCTYPE html> <html> <head> <meta charset="utf-8"/> <title>Example html file</title> </head> <body> <h1>Markdown example</h1> <p>Use a bit of <strong>bold</strong> or <em>italics</em>.

Knitr with R Markdown

Writing reproducible reports: knitr with R Markdown. Karl Broman, Biostatistics & Medical Informatics, UW–Madison. kbroman.org, github.com/kbroman, @kwbroman. Course web: kbroman.org/Tools4RR. knitr in a knutshell: kbroman.org/knitr_knutshell. Data analysis reports • Figures/tables + email • Static LaTeX or Word document • knitr/Sweave + LaTeX → PDF • knitr + Markdown → Web page. What if the data change? What if you used the wrong version of the data? knitr code chunks. Input to knitr: We see that this is an intercross with `r nind(sug)` individuals. There are `r nphe(sug)` phenotypes, and genotype data at `r totmar(sug)` markers across the `r nchr(sug)` autosomes. The genotype data is quite complete. ```{r summary_plot, fig.height=8} plot(sug) ``` Output from knitr: We see that this is an intercross with 163 individuals. There are 6 phenotypes, and genotype data at 93 markers across the 19 autosomes. The genotype data is quite complete. ```r plot(sug) ``` html: <!DOCTYPE html> <html> <head> <meta charset="utf-8"/> <title>Example html file</title> </head> <body> <h1>Markdown example</h1> <p>Use a bit of <strong>bold</strong> or <em>italics</em>. Use backticks to indicate <code>code</code> that will be rendered in monospace.</p> <ul> <li>This is part of a list</li> <li>another item</li> </ul> </body> </html> [Example] CSS: ul,ol { margin: 0 0 0 35px; } a { color: purple; text-decoration: none; background-color: transparent; } a:hover { color: purple; background: #CAFFFF; } [Example] Markdown: # Markdown example Use a bit of **bold** or _italics_.

R Programming for Data Science

R Programming for Data Science, Roger D. Peng. This book is for sale at http://leanpub.com/rprogramming. This version was published on 2015-07-20. This is a Leanpub book. Leanpub empowers authors and publishers with the Lean Publishing process. Lean Publishing is the act of publishing an in-progress ebook using lightweight tools and many iterations to get reader feedback, pivot until you have the right book and build traction once you do. ©2014 - 2015 Roger D. Peng. Also by Roger D. Peng: Exploratory Data Analysis with R.

Contents:
Preface
History and Overview of R: What is R?; What is S?; The S Philosophy; Back to R; Basic Features of R; Free Software; Design of the R System; Limitations of R; R Resources
Getting Started with R: Installation; Getting started with the R interface
R Nuts and Bolts: Entering Input; Evaluation

Using GNU Make to Manage the Workflow of Data Analysis Projects

JSS Journal of Statistical Software, June 2020, Volume 94, Code Snippet 1. doi: 10.18637/jss.v094.c01. Using GNU Make to Manage the Workflow of Data Analysis Projects. Peter Baker, University of Queensland. Abstract: Data analysis projects invariably involve a series of steps such as reading, cleaning, summarizing and plotting data, statistical analysis and reporting. To facilitate reproducible research, rather than employing a relatively ad-hoc point-and-click cut-and-paste approach, we typically break down these tasks into manageable chunks by employing separate files of statistical, programming or text processing syntax for each step including the final report. Real world data analysis often requires an iterative process because many of these steps may need to be repeated any number of times. Manually repeating these steps is problematic in that some necessary steps may be left out or some reported results may not be for the most recent data set or syntax. GNU Make may be used to automate the mundane task of regenerating output given dependencies between syntax and data files. In addition to facilitating the management of and documenting the workflow of a complex data analysis project, such automation can help minimize errors and make the project more reproducible. It is relatively simple to construct Makefiles for small data analysis projects. As projects increase in size, difficulties arise because GNU Make does not have inbuilt rules for statistical and related software. Without such rules, Makefiles can become unwieldy and error-prone. This article addresses these issues by providing GNU Make pattern rules for R, Sweave, rmarkdown, SAS, Stata, Perl and Python to streamline management of data analysis and reporting projects.

B.Tech. in Computer Science and Engineering with Specialization in Big Data Analytics

B.Tech. in Computer Science and Engineering with Specialization in Big Data Analytics

Mission of the Department
Mission Stmt - 1: To impart knowledge in cutting edge Computer Science and Engineering technologies on par with industrial standards.
Mission Stmt - 2: To collaborate with renowned academic institutions to uplift innovative research and development in Computer Science and Engineering and its allied fields to serve the needs of society.
Mission Stmt - 3: To demonstrate strong communication skills and possess the ability to design computing systems individually as well as part of a multidisciplinary team.
Mission Stmt - 4: To instill societal, safety, cultural, environmental, and ethical responsibilities in all professional activities.
Mission Stmt - 5: To produce successful Computer Science and Engineering graduates with personal and professional responsibilities and commitment to lifelong learning.

Program Educational Objectives (PEO)
PEO - 1: Graduates will be able to perform in technical/managerial roles ranging from design, development, problem solving to production support in software industries and R&D sectors.
PEO - 2: Graduates will be able to successfully pursue higher education in reputed institutions.
PEO - 3: Graduates will have the ability to adapt, contribute and innovate new technologies and systems in the key domains of Computer Science and Engineering.
PEO - 4: Graduates will be ethically and socially responsible solution providers and entrepreneurs in Computer Science and other engineering disciplines.
PEO - 5: Graduates will possess the additional skills in handling Big Data using the state-of-art tools and techniques.
PEO - 6: Graduates will be able to apply the principles of data science for providing real world business solutions.

Mission of the Department to Program Educational Objectives (PEO) Mapping

Introduction to R Programming – a Scilife Lab Course

Introduction to R programming – a SciLife Lab course. Marcin Kierczak, 31 August 2016.

What R is: a programming language, a programming platform (= environment + interpreter), a software project driven by the core team and the community. And more: a very powerful tool for statistical computing, a very powerful computational tool in general, a catalyst between an idea and its presentation.

What R is not: a tool to replace a statistician, the very best programming language, the most elegant programming solution, the most efficient programming language.

A brief history of R: conceived ca. 1992 by Robert Gentleman and Ross Ihaka (R&R) at the University of Auckland, NZ – a tool for teaching statistics; 1994 – initial version; 2000 – stable version. [Figure 2: Ross Ihaka and Robert Gentleman, creators of R.]

A brief history of R, continued: open-source solution → fast development; based on the S language created at Bell Labs by John Chambers to turn ideas into software, quickly and faithfully; inspired also by Lisp syntax (lexical scope); since 1997 developed by the R Development Core Team (~20 experts, with Chambers onboard); overseen by The R Foundation for Statistical Computing.

The system of R packages – an overview: developed by the community, cover several very diverse areas of science/life, uniformly structured and documented, organised in repositories: CRAN - The Comprehensive R Archive Network, R-Forge, Bioconductor, GitHub.