Scientific Visualization

Recommended publications

Anna Lysyanskaya Curriculum Vitae

Anna Lysyanskaya, Curriculum Vitae. Computer Science Department, Box 1910, Brown University, Providence, RI 02912. (401) 863-7605. email: [email protected] http://www.cs.brown.edu/~anna

Research Interests: Cryptography, privacy, computer security, theory of computation.

Education: Massachusetts Institute of Technology, Cambridge, MA. Ph.D. in Computer Science, September 2002. Advisor: Ronald L. Rivest, Viterbi Professor of EECS. Thesis title: "Signature Schemes and Applications to Cryptographic Protocol Design". Massachusetts Institute of Technology, Cambridge, MA. S.M. in Computer Science, June 1999. Smith College, Northampton, MA. A.B. magna cum laude, Highest Honors, Phi Beta Kappa, May 1997.

Appointments: Brown University, Providence, RI, Fall 2013 - Present, Professor of Computer Science. Brown University, Providence, RI, Fall 2008 - Spring 2013, Associate Professor of Computer Science. Brown University, Providence, RI, Fall 2002 - Spring 2008, Assistant Professor of Computer Science. UCLA, Los Angeles, CA, Fall 2006, Visiting Scientist at the Institute for Pure and Applied Mathematics (IPAM). Weizmann Institute, Rehovot, Israel, Spring 2006, Visiting Scientist. Massachusetts Institute of Technology, Cambridge, MA, 1997 - 2002, Graduate student. IBM T. J. Watson Research Laboratory, Hawthorne, NY, Summer 2001, Summer Researcher. IBM Zürich Research Laboratory, Rüschlikon, Switzerland, Summers 1999, 2000, Summer Researcher.

Teaching: Brown University, Providence, RI, Spring 2008, 2011, 2015, 2017, 2019; Fall 2012. Instructor for "CS 259: Advanced Topics in Cryptography," a seminar course for graduate students. Brown University, Providence, RI, Spring 2012. Instructor for "CS 256: Advanced Complexity Theory," a graduate-level complexity theory course. Brown University, Providence, RI, Fall 2003, 2004, 2005, 2010, 2011; Spring 2007, 2009, 2013, 2014, 2016, 2018. Instructor for "CS151: Introduction to Cryptography and Computer Security." Brown University, Providence, RI, Fall 2016, 2018. Instructor for "CS 101: Theory of Computation," a core course for CS concentrators.

Supercomputing in Plain English: Overview

Supercomputing in Plain English: An Introduction to High Performance Computing. Part I: Overview: What the Heck is Supercomputing? Henry Neeman, Director, OU Supercomputing Center for Education & Research.

Goals of These Workshops: To introduce undergrads, grads, staff and faculty to supercomputing issues. To provide a common language for discussing supercomputing issues when we meet to work on your research. NOT: to teach everything you need to know about supercomputing; that can't be done in a handful of hourlong workshops!

What is Supercomputing? Supercomputing is the biggest, fastest computing right this minute. Likewise, a supercomputer is the biggest, fastest computer right this minute. So, the definition of supercomputing is constantly changing. Rule of Thumb: a supercomputer is 100 to 10,000 times as powerful as a PC. Jargon: supercomputing is also called High Performance Computing (HPC).

What is Supercomputing About? Size: many problems that are interesting to scientists and engineers can't fit on a PC, usually because they need more than 2 GB of RAM, or more than 60 GB of hard disk. Speed: many problems that are interesting to scientists and engineers would take a very very long time to run on a PC: months or even years. But a problem that would take a month on a PC might take only a few hours on a supercomputer.

What Every Computer Scientist Should Know About Floating-Point Arithmetic

What Every Computer Scientist Should Know About Floating-Point Arithmetic. DAVID GOLDBERG, Xerox Palo Alto Research Center, 3333 Coyote Hill Road, Palo Alto, California 94304.

Floating-point arithmetic is considered an esoteric subject by many people. This is rather surprising, because floating-point is ubiquitous in computer systems: almost every language has a floating-point datatype; computers from PCs to supercomputers have floating-point accelerators; most compilers will be called upon to compile floating-point algorithms from time to time; and virtually every operating system must respond to floating-point exceptions such as overflow. This paper presents a tutorial on the aspects of floating-point that have a direct impact on designers of computer systems. It begins with background on floating-point representation and rounding error, continues with a discussion of the IEEE floating-point standard, and concludes with examples of how computer system builders can better support floating point.

Categories and Subject Descriptors: (Primary) C.0 [Computer Systems Organization]: General - instruction set design; D.3.4 [Programming Languages]: Processors - compilers, optimization; G.1.0 [Numerical Analysis]: General - computer arithmetic, error analysis, numerical algorithms. (Secondary) D.2.1 [Software Engineering]: Requirements/Specifications - languages; D.3.1 [Programming Languages]: Formal Definitions and Theory - semantics; D.4.1 [Operating Systems]: Process Management - synchronization.

General Terms: Algorithms, Design, Languages.

Additional Key Words and Phrases: denormalized number, exception, floating-point, floating-point standard, gradual underflow, guard digit, NaN, overflow, relative error, rounding error, rounding mode, ulp, underflow.

INTRODUCTION. Builders of computer systems often need information about floating-point arithmetic. ...tions of addition, subtraction, multiplication, and division. It also contains background information on the two methods of measuring rounding error, ...
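The excerpt names two ways of measuring rounding error, ulps and relative error. As a minimal sketch (not taken from the paper), the Python snippet below measures both for one sum. The helper name `rounding_error` and the choice of 0.1 + 0.2 are illustrative assumptions, the reference value is the real number 3/10 rather than the exact sum of the two rounded inputs, and `math.ulp` requires Python 3.9 or later.

```python
import math
from fractions import Fraction


def rounding_error(computed: float, exact: Fraction):
    """Return (error in ulps, relative error) of `computed` against `exact`.

    Hypothetical helper for illustration. Fractions keep the subtraction
    exact, so the measurement itself adds no rounding error.
    """
    abs_err = abs(Fraction(computed) - exact)
    ulp = Fraction(math.ulp(computed))  # spacing of doubles near `computed`
    return float(abs_err / ulp), float(abs_err / abs(exact))


# Compare the double-precision sum 0.1 + 0.2 with the real number 3/10.
ulps, rel = rounding_error(0.1 + 0.2, Fraction(3, 10))
print(f"error = {ulps:.3f} ulp, relative error = {rel:.3e}")
```

The two measures answer different questions: ulps count how many representable steps the result is from the target, while relative error is independent of the number format.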

Computer Hardware Architecture Lecture 4

Computer Hardware Architecture, Lecture 4. Manfred Liebmann, Technische Universität München, Chair of Optimal Control, Center for Mathematical Sciences, M17. [email protected] November 10, 2015.

Reading List
• Pacheco - An Introduction to Parallel Programming (Chapter 1 - 2): Introduction to computer hardware architecture from the parallel programming angle
• Hennessy-Patterson - Computer Architecture - A Quantitative Approach: Reference book for computer hardware architecture
All books are available on the Moodle platform!

UMA Architecture. Figure 1: A uniform memory access (UMA) multicore system. Access time to main memory is the same for all cores in the system!

NUMA Architecture. Figure 2: A nonuniform memory access (NUMA) multicore system. Access time to main memory differs from core to core depending on the proximity of the main memory. This architecture is often used in dual and quad socket servers, due to improved memory bandwidth.

Cache Coherence. Figure 3: A shared memory system with two cores and two caches. What happens if the same data element z1 is manipulated in two different caches? The hardware enforces cache coherence, i.e. consistency between the caches. Expensive!

False Sharing. The cache coherence protocol works on the granularity of a cache line. If two threads manipulate different elements within a single cache line, the cache coherency protocol is activated to ensure consistency, even if every thread is only manipulating its own data.
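The cache-line granularity behind false sharing can be made concrete with a little address arithmetic. The sketch below is an illustration only and does not measure any real hardware. It assumes a 64-byte cache line (the slides give no size) and uses hypothetical helper names to show when two distinct byte addresses fall into the same line.

```python
CACHE_LINE_BYTES = 64  # assumption: line size is hardware dependent
DOUBLE_BYTES = 8       # size of one double-precision element


def cache_line(addr: int) -> int:
    """Index of the cache line that holds byte address `addr`."""
    return addr // CACHE_LINE_BYTES


def shares_line(addr_a: int, addr_b: int) -> bool:
    """True if two distinct addresses map to the same cache line."""
    return addr_a != addr_b and cache_line(addr_a) == cache_line(addr_b)


# Two threads updating neighbouring doubles of a line-aligned array:
# elements 0 and 1 sit 8 bytes apart, so they share a cache line.
print(shares_line(0 * DOUBLE_BYTES, 1 * DOUBLE_BYTES))

# Padding each thread's element to its own line (a stride of 8 doubles)
# puts the two writes on different cache lines.
print(shares_line(0 * DOUBLE_BYTES, 8 * DOUBLE_BYTES))
```

Under that assumption, padding per-thread data to a multiple of the line size is the usual way to keep independent writes from triggering the coherence protocol.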

Cryptography

Security Engineering: A Guide to Building Dependable Distributed Systems. CHAPTER 5: Cryptography.

ZHQM ZMGM ZMFM - G. JULIUS CAESAR

XYAWO GAOOA GPEMO HPQCW IPNLG RPIXL TXLOA NNYCS YXBOY MNBIN YOBTY QYNAI - JOHN F. KENNEDY

5.1 Introduction

Cryptography is where security engineering meets mathematics. It provides us with the tools that underlie most modern security protocols. It is probably the key enabling technology for protecting distributed systems, yet it is surprisingly hard to do right. As we've already seen in Chapter 2, "Protocols," cryptography has often been used to protect the wrong things, or used to protect them in the wrong way. We'll see plenty more examples when we start looking in detail at real applications.

Unfortunately, the computer security and cryptology communities have drifted apart over the last 20 years. Security people don't always understand the available crypto tools, and crypto people don't always understand the real-world problems. There are a number of reasons for this, such as different professional backgrounds (computer science versus mathematics) and different research funding (governments have tried to promote computer security research while suppressing cryptography). It reminds me of a story told by a medical friend. While she was young, she worked for a few years in a country where, for economic reasons, they'd shortened their medical degrees and concentrated on producing specialists as quickly as possible. One day, a patient who'd had both kidneys removed and was awaiting a transplant needed her dialysis shunt redone. The surgeon sent the patient back from the theater on the grounds that there was no urinalysis on file.

Embedded System Introduction.Pdf

Embedded System Introduction. ANDES Confidential. WWW.ANDESTECH.COM

Embedded System vs Desktop. Similarities: CPU, storage, I/O. Differences: a desktop can perform many kinds of functions, and the operating system's management of system resources is more complex; an embedded system performs specific functions, and the operating system's management of system resources is simpler.

System Layer. Desktop computer: Application, Operating System, Firmware, Hardware. Complex embedded computer: Application, Operating System, Firmware, Hardware. Simple embedded computer: Firmware, Hardware.

Hardware Architecture: Desktop Computer System Hardware Architecture. CPU, AGP Slot, North Bridge, Memory, PCI Interface, USB Interface, South Bridge, IDE Interface, System BIOS, ISA Interface, Super IO Port.

Hardware Architecture: Embedded System Computer Hardware Architecture. ADC, CPU, SPI, Digital I/O, ROM, IIC, Host Computer, RAM, Timers, UART, Bus Interface, Memory, Network Interface, I/O.

What is the Embedded System?

Introduction. Challenges in embedded system design. Design methodologies.

Embedded System?
• An embedded system is a special-purpose computer system designed to perform one or a few dedicated functions
• with real-time computing constraints
• include hardware, software and mechanical parts

Embedding a computer.

Components of an embedded system. Characteristics: low power, closed operating environment, cost sensitive.

Components of an embedded

14 Los Alamos National Laboratory

Every year for the past 17 years, the director of Los Alamos National Laboratory has had a legally required task: write a letter, a personal assessment of Los Alamos-designed warheads and bombs in the U.S. nuclear stockpile. This letter is sent to the secretaries of Energy and Defense and to the Nuclear Weapons Council. Through them the letter goes to the president of the United States.

The technical basis for the director's assessment comes from the Laboratory's ongoing execution of the nation's Stockpile Stewardship Program; Los Alamos' mission is to study its portion of the aging stockpile, find any problems, and address them. And for the past 17 years, the director's letter has said, in effect, that any problems that have arisen in Los Alamos weapons are being addressed and resolved without the need for full-scale underground nuclear testing.

When it comes to the Laboratory's work on the annual assessment, the director's letter is just the tip of the iceberg. ... alternative for assessing the safety, reliability, and performance of the stockpile: virtual-world simulations.

I, Iceberg

Hollywood movies such as the Matrix series or I, Robot typically portray supercomputers as massive, room-filling machines that churn out answers to the most complex questions, all by themselves. In fact, like the director's Annual Assessment Letter, supercomputers are themselves the tip of an iceberg.

Without people, a supercomputer would be no more than a humble jumble of wires, bits, and boxes.

Although these rows of huge machines are the most visible

Theoretical Computer Science Knowledge a Selection of Latest Research Visit Springer.Com for an Extensive Range of Books and Journals in the field

springer.com. Theoretical Computer Science Knowledge: A Selection of Latest Research. Visit springer.com for an extensive range of books and journals in the field.

Theory of Computation: Classical and Contemporary Approaches. D. C. Kozen. Theory of Computation is a unique textbook that serves the dual purposes of covering core material in the foundations of computing, as well as providing an introduction to some more advanced contemporary topics. This innovative text focuses primarily, although by no means exclusively, on computational complexity theory. Topics and features include: organization into self-contained lectures of 3-7 pages; 41 primary lectures and a handful of supplementary lectures covering more specialized or advanced topics; 12 homework sets and several miscellaneous homework exercises of varying levels of difficulty, many with hints and complete solutions. 2006. 335 p. 75 illus. (Texts in Computer Science) Hardcover ISBN 1-84628-297-7 $79.95

Theoretical Introduction to Programming. B. Mills. Including well-digested information about fundamental techniques and concepts in software construction, this book is distinct in unifying pure theory with pragmatic details. Driven by generic problems and concepts, with brief and complete illustrations from languages including C, Prolog, Java, Scheme, Haskell and HTML. This book is intended to be both a how-to handbook and easy reference guide. Discussions of principle, worked examples and exercises are presented. Densely packed with explicit techniques on each page, this book takes you from a rudimentary understanding of programming into the world of deep technical software development. Combines theory with practice. 2006. XI, 358 p. 29 illus. Softcover ISBN 1-84628-021-4 $69.95

Complexity Theory: Exploring the Limits of Efficient Algorithms. ... computer science.

Advanced Engineering Mathematics

5 Linear Systems of ODEs

5.1 Systems of ODEs

In a sense, Chapter 5 equals Chapter 2 "plus" Chapter 3, in the sense that Chapter 5 combines use of matrix theory and ordinary differential equation (ODE) methods. When we have more than one linear ODE, results from matrix theory turn out to be useful.

Example 5.1. For the circuit shown in Figure 5.1, let $v(t)$ be the voltage drop across the capacitor and $I(t)$ be the loop current. The input $V(t)$ is a given function. Assume, as usual, that $L$, $R$, and $C$ are constants. Write down a system of ODEs in $\mathbb{R}^2$ that models this circuit.

Method: The series RLC circuit shown in Figure 5.1 is analogous to the DC series RLC circuit discussed near the end of Section 3.3. The first ODE models the voltage drop across the capacitor being $v(t) = \frac{1}{C} q(t)$, where $q(t)$ is the charge on the capacitor and $\dot{q}(t) = I(t)$. The second ODE in the system is Kirchhoff's voltage law, $L\dot{I}(t) + RI(t) + v(t) = V(t)$, after dividing through by $L$. The system is

$$\left\{ \begin{array}{l} \dot{v}(t) = \frac{1}{C}\, I(t) \\[4pt] \dot{I}(t) = \frac{1}{L}\bigl(V(t) - R I(t) - v(t)\bigr) \end{array} \right\}. \tag{5.1}$$

More generally, consider a system of two ODEs in unknowns $x_1(t)$, $x_2(t)$:

$$\left\{ \begin{array}{l} \dot{x}_1(t) = F_1\bigl(t, x_1(t), x_2(t)\bigr) \\[4pt] \dot{x}_2(t) = F_2\bigl(t, x_1(t), x_2(t)\bigr) \end{array} \right\}. \tag{5.2}$$

A special case is

$$\left\{ \begin{array}{l} \dot{x}_1(t) = a_{11}(t)\,x_1 + a_{12}(t)\,x_2 + f_1(t) \\[4pt] \dot{x}_2(t) = a_{21}(t)\,x_1 + a_{22}(t)\,x_2 + f_2(t) \end{array} \right\}, \tag{5.3}$$

which is called a linear system.
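To see the circuit system of Example 5.1 in action, here is a minimal numerical sketch in Python. It is not part of the textbook. It integrates the two ODEs for the capacitor voltage v and loop current I with a classical fourth-order Runge-Kutta step. The function names, the unit component values L = R = C = 1, the constant input V(t) = 1, and the zero initial state are all illustrative assumptions.

```python
def rlc_rhs(t, v, i, L, R, C, V):
    """Right-hand side of the series RLC system: returns (dv/dt, dI/dt)."""
    dv = i / C
    di = (V(t) - R * i - v) / L
    return dv, di


def rk4_step(t, v, i, dt, L, R, C, V):
    """Advance (v, I) by one classical Runge-Kutta step of size dt."""
    k1v, k1i = rlc_rhs(t, v, i, L, R, C, V)
    k2v, k2i = rlc_rhs(t + dt / 2, v + dt / 2 * k1v, i + dt / 2 * k1i, L, R, C, V)
    k3v, k3i = rlc_rhs(t + dt / 2, v + dt / 2 * k2v, i + dt / 2 * k2i, L, R, C, V)
    k4v, k4i = rlc_rhs(t + dt, v + dt * k3v, i + dt * k3i, L, R, C, V)
    v_next = v + dt / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
    i_next = i + dt / 6 * (k1i + 2 * k2i + 2 * k3i + k4i)
    return v_next, i_next


def simulate(t_end, dt, L, R, C, V, v0=0.0, i0=0.0):
    """Integrate from t = 0 to t_end and return the final (v, I)."""
    v, i = v0, i0
    for n in range(round(t_end / dt)):
        v, i = rk4_step(n * dt, v, i, dt, L, R, C, V)
    return v, i


# Assumed values: unit components and a constant 1 V input.
v_final, i_final = simulate(t_end=40.0, dt=0.01, L=1.0, R=1.0, C=1.0,
                            V=lambda t: 1.0)
print(f"v(40) = {v_final:.6f}, I(40) = {i_final:.6f}")
```

With a constant input and positive R, the transient decays, so the capacitor voltage approaches the input voltage and the loop current approaches zero, which is what the system predicts at equilibrium.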

The Computer Scientist As Toolsmith—Studies in Interactive Computer Graphics

Frederick P. Brooks, Jr.

Fred Brooks is the first recipient of the ACM Allen Newell Award, an honor to be presented annually to an individual whose career contributions have bridged computer science and other disciplines. Brooks was honored for a breadth of career contributions within computer science and engineering and his interdisciplinary contributions to visualization methods for biochemistry. Here, we present his acceptance lecture delivered at SIGGRAPH 94.

The Computer Scientist as Toolsmith II

It is a special honor to receive an award named for Allen Newell. Allen was one of the fathers of computer science. He was especially important as a visionary and a leader in developing artificial intelligence (AI) as a subdiscipline, and in enunciating a vision for it. What a man is is more important than what he does professionally, however, and it is Allen's humble, honorable, and self-giving character that makes it a double honor to be a Newell awardee. I am profoundly grateful to the awards committee. Rather than talking about one particular research ...

... computer science. Another view of computer science sees it as a discipline focused on problem-solving systems, and in this view computer graphics is very near the center of the discipline.

A Discipline Misnamed

When our discipline was newborn, there was the usual perplexity as to its proper name. We at Chapel Hill, following, I believe, Allen Newell and Herb Simon, settled on "computer science" as our department's name. Now, with the benefit of three decades' hindsight, I believe that to have been a mistake. If we understand why, we will better understand our craft.

Hardware Components and Internal PC Connections

Technological University Dublin, ARROW@TU Dublin. Instructional Guides, School of Multidisciplinary Technologies, 2015. Computer Hardware: Hardware Components and Internal PC Connections. Jerome Casey, Technological University Dublin, [email protected]

Recommended Citation: Casey, J. (2015). Computer Hardware: Hardware Components and Internal PC Connections. Guide for undergraduate students. Technological University Dublin.

This work is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 4.0 License.

Higher Cert/Bachelor of Technology - DT036A Computer Systems. Computer Hardware - Hardware Components & Internal PC Connections: You might see a specification for a PC such as "containing an Intel i7 Hexa core processor - 3.46GHz, 3200MHz Bus, 384 KB L1 cache, 1.5MB L2 cache, 12 MB L3 cache, 32nm process technology; 4 gigabytes of RAM, ATX motherboard, Windows 7 Home Premium 64-bit operating system, an Intel® GMA HD graphics card, a 500 gigabyte SATA hard drive (5400rpm), and WiFi 802.11 bgn". This section aims to discuss a selection of hardware parts, outline common metrics and specifications

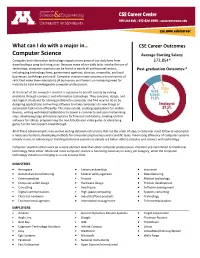

Computer Science Average Starting Salary

What can I do with a major in Computer Science? CSE Career Outcomes. Average Starting Salary: $73,854*. Post-graduation Outcomes:*

Computer and information technology impacts many areas of our daily lives, from downloading a song to driving a car. Because many of our daily tasks involve the use of technology, computer scientists can be found in nearly all professional sectors, including big technology firms, government agencies, startups, nonprofits, and local businesses, both large and small. Computer science majors possess a broad variety of skills that make them valuable to all businesses, and there is an increasing need for industry to have knowledgeable computer professionals.

At the heart of the computer scientist is a passion to benefit society by solving problems through computer and information technology. They conceive, design, and test logical structures for solving problems by computer and find ways to do so by designing applications and writing software to make computers do new things or accomplish tasks more efficiently. This may include creating applications for mobile devices, writing web-based applications to power e-commerce and social networking sites, developing large enterprise systems for financial institutions, creating control software for robots, programming the next blockbuster video game, or identifying genes for the next biotech breakthrough. All of these advancements may involve writing detailed instructions that list the order of steps a computer must follow to accomplish a necessary function, developing methods for computerizing business and scientific tasks, maximizing efficiency of computer systems already in use, or enhancing or building immersive systems so people are better able to socialize and interact with technology. Computer scientists often work on a more abstract level than other computer professionals.