Deep Learned Super Resolution for Feature Film Production


Recommended publications

Artistic Simulation of Curly Hair

Artistic Simulation of Curly Hair. Hayley Iben, Mark Meyer, Lena Petrovic, Olivier Soares, John Anderson, Andrew Witkin. Pixar Animation Studios. Pixar Technical Memo #12-03a.

Figure 1: Example of stylized curly hair simulated with our method. © Disney/Pixar.

Abstract: We present a novel method for stably simulating stylized curly hair that addresses artistic needs and performance demands, both found in the production of feature films. To satisfy the artistic requirement of maintaining the curl’s helical shape during motion, we propose a hair model based upon an extensible elastic rod. We introduce a novel method for stably computing a frame along the hair curve, essential for stable simulation of curly hair. Our hair model introduces a novel spring for controlling the bending of the curl and another for maintaining the helical shape during extension. We also address performance concerns often associated with handling hair-

We present a hair model designed for creating specific visual looks of curly hair that are often non-physical. For example, our artists want to preserve the helical shape of the hair, regardless of intense forces caused by extreme motion only possible in animation. With a physical model for hair, such as infinitesimally thin elastic rods [Bergou et al. 2008], the helical shape would naturally straighten under such motion unless the material properties are stiffened to maintain the shape, making the hair wire-like. This natural straightening is an example of a physical motion undesired by our artists. In addition to behavioral requirements, our hair model must be robust and stable, able to handle fairly arbitrary hair shapes created by artists as depicted in Figure 1.

New Programming Customer Movie

EVERYTHING YOU NEED FOR successful events, SIMPLY. Programming Toolkit | Fall/Winter 2017. New Movie Releases, Event Ideas, Programming Ideas, Customer Spotlight. © Universal Studios Press © 2017 Disney/Pixar © Universal Studios © Paramount Pictures © Columbia Pictures Industries, Inc. © Warner Bros. Entertainment Inc.

NEW Releases

Despicable Me 3. Anticipated October 2017. PG; 90 minutes; Universal Studios. Gru battles Balthazar Bratt, a 1980s child star-turned-supervillain, in this animated sequel. EVENT Promotion Idea! The minions are back at it again! Create buzz for your movie showing by passing out yellow balloons in your community that look just like the little stars of the movie. All you need are markers, yellow balloons,

Wonder Woman. Anticipated September 2017. PG-13; 141 minutes; Warner Bros. An Amazon princess leaves her island home and journeys to the outside world, which is being consumed by a massive war. With the help of an American pilot, she works to put an end to the conflict. EVENT Idea: Allow guests the opportunity to craft their own Tiara or Bracelets of Submission. Give them the chance to show off their creations at a pop-up photo booth at the event.

Cars 3. Anticipated October 2017. G; 109 minutes; Walt Disney Pictures. Race car Lightning McQueen suffers a severe crash while trying to compete with a younger rival named Jackson Storm. Afterward, McQueen embraces new technologies as he trains for a return to the racetrack. EVENT Idea: In this movie, legendary Lightning McQueen has to prove that he still has what it takes to win. Foster a friendly sense of competition that mimics the

The Big Draw International Festival: Drawn to Life Saturday, October 5, 2019

PRESS RELEASE The Walt Disney Family Museum Celebrates The Big Draw International Festival: Drawn to Life Saturday, October 5, 2019 San Francisco, October 1, 2019—The Walt Disney Family Museum is delighted to once again host The Big Draw, the world’s largest drawing festival. This annual, museum-wide community event will take place on October 5 and celebrates Walt Disney’s contributions to visual arts. In connection with this year’s “Drawn to Life” theme, experience special drawing sessions exploring nature-inspired art, create a care card for someone who needs a smile, learn how to draw Mickey Mouse, and more. Don’t miss film screenings of Disneynature’s Chimpanzee (2012) and Disney-Pixar’s Inside Out (2015), then connect back to stories told in our main galleries and in our special exhibition, Mickey Mouse: From Walt to the World. This year, we are proud to partner with The Jane Goodall Institute’s Roots and Shoots. Founded by Goodall in 1991, Roots and Shoots is a youth service program for young people of all ages, whose mission is to foster respect and compassion for all living things, to promote understanding of all cultures and beliefs, and to inspire each individual to take action to make the world a better place for people, other animals, and the environment. In partnership with Caltrain and SamTrans, The Walt Disney Family Museum is pleased to offer free general admission to riders and employees, upon showing their Caltrain/SamTrans ticket or employee ID to the museum’s Ticket Desk. Riders and employees will also receive 15% off in the Museum Store.

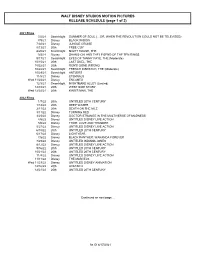

Large Release Calendar MASTER.Xlsx

WALT DISNEY STUDIOS MOTION PICTURES RELEASE SCHEDULE (as of 6/17/2021)

2021 Films

| Date | Label | Title |
|---|---|---|
| 7/2/21 | Searchlight | SUMMER OF SOUL (…OR, WHEN THE REVOLUTION COULD NOT BE TELEVISED) |
| 7/9/21 | Disney | BLACK WIDOW |
| 7/30/21 | Disney | JUNGLE CRUISE |
| 8/13/21 | 20th | FREE GUY |
| 8/20/21 | Searchlight | NIGHT HOUSE, THE |
| 9/3/21 | Disney | SHANG-CHI AND THE LEGEND OF THE TEN RINGS |
| 9/17/21 | Searchlight | EYES OF TAMMY FAYE, THE (Moderate) |
| 10/15/21 | 20th | LAST DUEL, THE |
| 10/22/21 | 20th | RON'S GONE WRONG |
| 10/22/21 | Searchlight | FRENCH DISPATCH, THE (Moderate) |
| 10/29/21 | Searchlight | ANTLERS |
| 11/5/21 | Disney | ETERNALS |
| Wed 11/24/21 | Disney | ENCANTO |
| 12/3/21 | Searchlight | NIGHTMARE ALLEY (Limited) |
| 12/10/21 | 20th | WEST SIDE STORY |
| Wed 12/22/21 | 20th | KING'S MAN, THE |

2022 Films

| Date | Label | Title |
|---|---|---|
| 1/7/22 | 20th | UNTITLED 20TH CENTURY |
| 1/14/22 | 20th | DEEP WATER |
| 2/11/22 | 20th | DEATH ON THE NILE |
| 3/11/22 | Disney | TURNING RED |
| 3/25/22 | Disney | DOCTOR STRANGE IN THE MULTIVERSE OF MADNESS |
| 4/8/22 | Disney | UNTITLED DISNEY LIVE ACTION |
| 5/6/22 | Disney | THOR: LOVE AND THUNDER |
| 5/27/22 | Disney | UNTITLED DISNEY LIVE ACTION |
| 6/10/22 | 20th | UNTITLED 20TH CENTURY |
| 6/17/22 | Disney | LIGHTYEAR |
| 7/8/22 | Disney | BLACK PANTHER: WAKANDA FOREVER |
| 7/29/22 | Disney | UNTITLED INDIANA JONES |
| 8/12/22 | Disney | UNTITLED DISNEY LIVE ACTION |
| 9/16/22 | 20th | UNTITLED 20TH CENTURY |
| 10/21/22 | 20th | UNTITLED 20TH CENTURY |
| 11/4/22 | Disney | UNTITLED DISNEY LIVE ACTION |
| 11/11/22 | Disney | THE MARVELS |
| Wed 11/23/22 | Disney | UNTITLED DISNEY ANIMATION |
| 12/16/22 | 20th | AVATAR 2 |
| 12/23/22 | 20th | UNTITLED 20TH CENTURY |

2023 Films

| Date | Label | Title |
|---|---|---|
| 1/13/23 | 20th | UNTITLED 20TH CENTURY |
| 2/17/23 | Disney | ANT-MAN AND THE WASP: QUANTUMANIA |
| 3/10/23 | Disney | UNTITLED DISNEY LIVE ACTION |
| 3/24/23 | 20th | UNTITLED 20TH CENTURY |
| 5/5/23 | Disney | GUARDIANS OF THE GALAXY VOL. |

2018 Catalog

2018 Movie Catalog. SWANK.COM, 1.800.876.5577. © Warner Bros. Entertainment Inc. © 2017 Disney/Pixar © Open Road Films © Universal Studios © Columbia Pictures Industries, Inc. © Lions Gate Entertainment, Inc. Movie Category Guide: Family-Friendly Programming; Teen/Adult Programming. Titles shown include The War with Grandpa. All trademarks are property of their respective owners. MP9188. Find our top 25 most requested throwback movies on page 31. New Releases.

The Walt Disney Company: a Corporate Strategy Analysis

The Walt Disney Company: A Corporate Strategy Analysis November 2012 Written by Carlos Carillo, Jeremy Crumley, Kendree Thieringer and Jeffrey S. Harrison at the Robins School of Business, University of Richmond. Copyright © Jeffrey S. Harrison. This case was written for the purpose of classroom discussion. It is not to be duplicated or cited in any form without the copyright holder’s express permission. For permission to reproduce or cite this case, contact Jeff Harrison at [email protected]. In your message, state your name, affiliation and the intended use of the case. Permission for classroom use will be granted free of charge. Other cases are available at: http://robins.richmond.edu/centers/case-network.html "Walt was never afraid to dream. That song from Pinocchio, 'When You Wish Upon a Star,' is the perfect summary of Walt's approach to life: dream big dreams, even hopelessly impossible dreams, because they really can come true. Sure, it takes work, focus and perseverance. But anything is possible. Walt proved it with the impossible things he accomplished."1 It is well documented that Walt Disney had big dreams and made several large gambles to propel his visions. From the creation of Steamboat Willie in 1928 to the first color feature film, “Snow White and the Seven Dwarves” in 1937, and the creation of Disneyland in Anaheim, CA during the 1950’s, Disney risked his personal assets as well as his studio to build a reality from his dreams. While Walt Disney passed away in the mid 1960’s, his quote, “If you can dream it, you can do it,”2 still resonates in the corporate world and operations of The Walt Disney Company. -

Creation of Value Through M&A: the Disney's Case

Colegio Universitario de Estudios Financieros. Master's Degree in Financial Institutions and Markets (Máster Universitario en Instituciones y Mercados Financieros). Creation of value through M&A: the Disney’s case. By: Hanxiao Zhang. Supervised by: Dr. José J. Massa. Madrid, 10 September 2020.

Contents:
1. Introduction
2. Literature review: Mergers & Acquisitions
2.1 Mergers and Acquisitions definition
2.2 Synergy
2.3 Reasons for M&A
3. Overview of American Media and Entertainment Market
3.1 Industry profile
3.2 The development of M&E supply chain
3.3 Streaming war: Disney’s market share and its competitors
4. Walt Disney Co. and its M&A strategies

Fact Sheet – Courses

Fact Sheet – Courses Chair : Michael Brown, National University of Singapore, Singapore Co-Chair : Jiaya (Leo) Jia, Chinese University of Hong Kong, Hong Kong Conference : Wednesday 28 November – Saturday 1 December 2012 Exhibition : Thursday 29 November – Saturday 1 December 2012 Fast Facts The SIGGRAPH Asia 2012 Courses Program received 22 submissions. In addition, four submissions were invited. Overall, a total of 17 courses have been accepted. The Courses Jury Committee was drawn from a wide range of academic and industry sectors, including Bungie, EonReality, Intel, Nanyang Technological University, NVIDIA, Oregon State University and Pixar. At SIGGRAPH Asia 2012, the Courses program will feature a variety of instructional sessions from introductory to advanced topics in computer graphics and interactive techniques by speakers from institutions around the globe. Practitioners, developers, researchers, artists, and students will attend Courses to broaden and deepen their knowledge of their field, and to learn the trends of new fields. Quote from the SIGGRAPH Asia 2012 Courses Chair, Michael Brown, National University of Singapore, Singapore “This year the SIGGRAPH Asia 2012 Course program has a great spread of themes for both sides of the brain with an excellent mix of artistic focused courses and technical oriented courses. Our course organizers are from all over the world and many are coming from the top industries in computer graphics and animation, including Disney, Pixar, DreamWorks, and AMD.” SIGGRAPH Asia 2012 Courses highlights include • Taming Render Times at Pixar: CPU & GPU, Brave and Beyond Paul Kanyuk, Pixar Animation Studios Laurence Emms, Pixar Animation Studios Despite the exponential growth of computing performance and steady march of algorithmic improvements over the years, the images produced by feature animated film studios like Pixar still take as long as ever to render. -

Press Release

Press release CaixaForum Madrid From 21 March to 22 June 2014 Press release CaixaForum Madrid hosts the first presentation in Spain of a show devoted to the history of a studio that revolutionised the world of animated film “The art challenges the technology. Technology inspires the art.” That is how John Lasseter, Chief Creative Officer at Pixar Animation Studios, sums up the spirit of the US company that marked a turning-point in the film world with its innovations in computer animation. This is a medium that is at once extraordinarily liberating and extraordinarily challenging, since everything, down to the smallest detail, must be created from nothing. Pixar: 25 Years of Animation casts its spotlight on the challenges posed by computer animation, based on some of the most memorable films created by the studio. Taking three key elements in the creation of animated films –the characters, the stories and the worlds that are created– the exhibition reveals the entire production process, from initial idea to the creation of worlds full of sounds, textures, music and light. Pixar: 25 Years of Animation traces the company’s most outstanding technical and artistic achievements since its first shorts in the 1980s, whilst also enabling visitors to discover more about the production process behind the first 12 Pixar feature films through 402 pieces, including drawings, “colorscripts”, models, videos and installations. Pixar: 25 Years of Animation . Organised and produced by : Pixar Animation Studios in cooperation with ”la Caixa” Foundation. Curator : Elyse Klaidman, Director, Pixar University and Archive at Pixar Animation Studios. Place : CaixaForum Madrid (Paseo del Prado, 36). -

Preview Cleveland Orchestra at Blossom: Two Pixar in Concert

Preview Cleveland Orchestra at Blossom: two Pixar in Concert evenings, a conversation with conductor Richard Kaufman, by Mike Telin. “I’ve been very blessed,” conductor Richard Kaufman says of his impressive musical career, most of which he has devoted to conducting and supervising music for film and television productions as well as performing film and classical music in concert halls and on recordings. On Saturday, August 31, and Sunday, September 1, at Blossom Music Center, Richard Kaufman will lead The Cleveland Orchestra in Pixar in Concert. The production includes musical selections from A Bug’s Life, Brave, Cars and Cars 2, Finding Nemo, Monsters, Inc., Toy Story, Toy Story 2 and 3, Ratatouille, Up, and WALL·E along with video clips of each film. Both concerts will be followed by fireworks, weather permitting. “I was part of the team that created the show at Disney and this weekend's program is a montage of the 13 Pixar films’ music.” Kaufman points out that performing live to animation is not an easy task. “I have to say that I have played violin for many film scores but I think that playing for animation is by far the most challenging. In animation everything moves very quickly: you have to be able to turn on a dime. In the studio all the musicians use click-tracks but this weekend only part of the orchestra will be using click-tracks. And the fact that The Cleveland Orchestra is going to be playing this music on two summer evenings at Blossom is truly wonderful.

The Walt Disney Company France and Canal+ Announce Strategic Distribution Agreement

THE WALT DISNEY COMPANY FRANCE AND CANAL+ ANNOUNCE STRATEGIC DISTRIBUTION AGREEMENT DEAL EXTENDS THE LONGSTANDING PARTNERSHIP TO INCLUDE DISNEY+ Paris, December 16, 2019: Disney France and the Canal+ Group announced today a new strategic distribution agreement which will provide Canal+ subscribers with access to a unique offering of films, series and documentaries from the world's leading entertainment company. The deal includes: • Distribution by Canal+ of a wide array of The Walt Disney Company’s most popular branded television channels across numerous genres including DISNEY CHANNEL & DISNEY JUNIOR as well as NATIONAL GEOGRAPHIC & NATIONAL GEOGRAPHIC WILD, VOYAGE & FOX PLAY. • Canal+ premium channels will air the first run broadcasts of Disney, Marvel, Star Wars, Pixar, 20th Century Fox, Blue Sky and Fox Searchlight movies. In 2019 to date, The Walt Disney Studios delivered 5 of the 10 most popular films in France including The Lion King and Avengers: Endgame as well as Frozen II, Toy Story 4 and Captain Marvel. All of these films will air on Canal+. • In tandem with the DISNEY+ direct-to-consumer launch in France, Canal+ will become the exclusive local Pay-TV partner to offer the new streaming service to its 8 million subscribers and will further extend the reach of Disney+ through 3rd party distribution partners such as ISPs. Maxime Saada, Chairman of CANAL+ Group said, “We are very excited to team up with The Walt Disney Company to bring our subscribers the amazing content from the world’s premier entertainment company. This is an ambitious deal under which CANAL+ becomes the exclusive third-party local distributor of Disney’s much-awaited streaming service Disney+, among PayTV and ISP operators, as well as Disney’s incredible line-up of movies, series and animated content via our own premium channels.

Outstanding Animated Program (For Programming Less Than One Hour)

Keith Crofford, Executive Producer Outstanding Animated Program (For Corey Campodonico, Producer Programming Less Than One Hour) Alex Bulkley, Producer Douglas Goldstein, Head Writer Creature Comforts America • Don’t Choke To Death, Tom Root, Head Writer Please • CBS • Aardman Animations production in association with The Gotham Group Jordan Allen-Dutton, Writer Mike Fasolo, Writer Kit Boss, Executive Producer Charles Horn, Writer Miles Bullough, Executive Producer Breckin Meyer, Writer Ellen Goldsmith-Vein, Executive Producer Hugh Sterbakov, Writer Peter Lord, Executive Producer Erik Weiner, Writer Nick Park, Executive Producer Mark Caballero, Animation Director David Sproxton, Executive Producer Peter McHugh, Co-Executive Producer The Simpsons • Eternal Moonshine of the Simpson Mind • Jacqueline White, Supervising Producer FOX • Gracie Films in association with 20th Century Fox Kenny Micka, Producer James L. Brooks, Executive Producer Gareth Owen, Producer Matt Groening, Executive Producer Merlin Crossingham, Director Al Jean, Executive Producer Dave Osmand, Director Ian Maxtone-Graham, Executive Producer Richard Goleszowski, Supervising Director Matt Selman, Executive Producer Tim Long, Executive Producer King Of The Hill • Death Picks Cotton • FOX • 20th Century Fox Television in association with 3 Arts John Frink, Co-Executive Producer Entertainment, Deedle-Dee Productions & Judgemental Kevin Curran, Co-Executive Producer Films Michael Price, Co-Executive Producer Bill Odenkirk, Co-Executive Producer Mike Judge, Executive Producer Marc Wilmore, Co-Executive Producer Greg Daniels, Executive Producer Joel H. Cohen, Co-Executive Producer John Altschuler, Executive Producer/Writer Ron Hauge, Co-Executive Producer Dave Krinsky, Executive Producer Rob Lazebnik, Co-Executive Producer Jim Dauterive, Executive Producer Laurie Biernacki, Animation Producer Garland Testa, Executive Producer Rick Polizzi, Animation Producer Tony Gama-Lobo, Supervising Producer J.