Forensically Sound Data Acquisition in the Age of Anti-Forensic Innocence

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Storage Systems and Technologies - Jai Menon and Clodoaldo Barrera

INFORMATION TECHNOLOGY AND COMMUNICATIONS RESOURCES FOR SUSTAINABLE DEVELOPMENT - Storage Systems and Technologies - Jai Menon and Clodoaldo Barrera. STORAGE SYSTEMS AND TECHNOLOGIES. Jai Menon, IBM Academy of Technology, San Jose, CA, USA; Clodoaldo Barrera, IBM Systems Group, San Jose, CA, USA. Keywords: Storage Systems, Hard Disk Drives, Tape Drives, Optical Drives, RAID, Storage Area Networks, Backup, Archive, Network Attached Storage, Copy Services, Disk Caching, Fibre Channel, Storage Switch, Storage Controllers, Disk Subsystems, Information Lifecycle Management, NFS, CIFS, Storage Class Memories, Phase Change Memory, Flash Memory, SCSI, Caching, Non-Volatile Memory, Remote Copy, Storage Virtualization, Data De-duplication, File Systems, Archival Storage, Storage Software, Holographic Storage, Storage Class Memory, Long-Term Data Preservation. Contents: 1. Introduction; 2. Storage Devices; 2.1. Storage Device Industry Overview; 2.2. Hard Disk Drives; 2.3. Digital Tape Drives; 2.4. Optical Storage; 2.5. Emerging Storage Technologies; 2.5.1. Holographic Storage; 2.5.2. Flash Storage; 2.5.3. Storage Class Memories; 3. Block Storage Systems; 3.1. Storage System Functions – Current; 3.2. Storage System Functions – Emerging; 4. File and Archive Storage Systems; 4.1. Network Attached Storage; 4.2. Archive Storage Systems; 5. Storage Networks; 5.1. SAN Fabrics; 5.2. IP Fabrics; 5.3. Converged Networking; 6. Storage Software; 6.1. Data Backup; 6.2. Data Archive; 6.3. Information Lifecycle Management; 6.4. Disaster Protection; 7. Concluding Remarks; Acknowledgements; Glossary; Bibliography; Biographical Sketches. ©Encyclopedia of Life Support Systems (EOLSS). Summary: Our world is increasingly becoming a data-centric world.

Hunting Red Team Activities with Forensic Artifacts

Hunting Red Team Activities with Forensic Artifacts, by Haboob Team. Table of Contents: 1. Introduction; 2. Why Threat Hunting?; 3. Windows Forensics; 4. LAB Environment Demonstration; 4.1 Red Team; 4.2 Blue Team; 4.3 LAB Overview; 5. Scenarios; 5.1 Remote Execution Tool (PsExec); 5.2 PowerShell Suspicious Commands

Use External Storage Devices Like Pen Drives, CDs, and DVDs

Intel® Learn Easy Steps Activity Card: External Storage Devices. Using external storage devices like pen drives, CDs, and DVDs. Since the advent of computers, there has been a need to transfer data between devices and/or store it permanently. You may want to look at a file you created or a photo you took today a year from now; for this, it has to be stored somewhere securely. Similarly, you may want to give a document you created or a digital picture you took to someone you know. There are many ways of doing this, both online and offline. Online data transfer or storage requires an Internet connection, whereas offline storage can be managed with minimal resources: the only requirement is a storage device. Early data storage devices were mainly floppy disks, which offered little storage space. With the development of computer technology, we now have pen drives, CDs, DVDs, and other removable media to store and transfer data. With these, you can store, save, or copy files and folders containing documents, pictures, videos, audio, etc. from your computer and even transfer them to another computer. They are called secondary storage devices. To access the data stored on them, you attach them to a computer. Examples of external storage devices include pen drives, CDs, and DVDs. Introduction to Pen Drive/CD/DVD: A pen drive is a small, portable drive that connects directly to a computer through a USB port and draws its power from that port.

Introduction to Criminal Investigation: Processes, Practices and Thinking

Introduction to Criminal Investigation: Processes, Practices and Thinking. Rod Gehl and Darryl Plecas, Justice Institute of British Columbia, New Westminster, BC. Introduction to Criminal Investigation: Processes, Practices and Thinking by Rod Gehl is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, except where otherwise noted. Introduction to Criminal Investigation: Processes, Practices and Thinking by Rod Gehl and Darryl Plecas is, unless otherwise noted, released under a Creative Commons Attribution-NonCommercial 4.0 International (CC BY-NC 4.0) license. This means you are free to copy, retain (keep), reuse, redistribute, remix, and revise (adapt or modify) this textbook, but not for commercial purposes. Under this license, anyone who revises this textbook (in whole or in part), remixes portions of this textbook with other material, or redistributes a portion of this textbook may do so without the authors' permission, provided they properly attribute the textbook or portions of it to the authors as follows: Introduction to Criminal Investigation: Processes, Practices and Thinking by Rod Gehl and Darryl Plecas is used under a CC BY-NC 4.0 International license. Additionally, if you redistribute this textbook (in whole or in part), you must retain the statement "Download this book for free at https://pressbooks.bccampus.ca/criminalinvestigation/" as follows: (1) digital format: on every electronic page; (2) print format: on at least one page near the front of the book. To cite this textbook using APA, for example, follow this format: Gehl, Rod & Plecas, Darryl. (2016). Introduction to Criminal Investigation: Processes, Practices and Thinking.

The Development and Effectiveness of Malware Vaccination

Master of Science in Engineering: Computer Security, June 2020. The Development and Effectiveness of Malware Vaccination: An Experiment. Oskar Eliasson, Lukas Ädel. Faculty of Computing, Blekinge Institute of Technology, 371 79 Karlskrona, Sweden. This thesis is submitted to the Faculty of Computing at Blekinge Institute of Technology in partial fulfilment of the requirements for the degree of Master of Science in Engineering: Computer Security. The thesis is equivalent to 20 weeks of full-time studies. The authors declare that they are the sole authors of this thesis and that they have not used any sources other than those listed in the bibliography and identified as references. They further declare that they have not submitted this thesis at any other institution to obtain a degree. Contact information: Authors: Oskar Eliasson, Lukas Ädel. University advisor: Håkan Grahn, Professor of Computer Engineering, Department of Computer Science. Faculty of Computing, Blekinge Institute of Technology, SE-371 79 Karlskrona, Sweden. Internet: www.bth.se. Phone: +46 455 38 50 00. Fax: +46 455 38 50 57. Abstract. Background. The main problem that our master's thesis tries to reduce is malware infection. One method that can be used to accomplish this goal is based on the fact that most malware does not want to be caught by security programs and actively tries to avoid them. To avoid detection, malware can check for the existence of security-related programs and artifacts before executing malicious code and, depending on what it finds, evaluate whether the computer is worth infecting.
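
The evasion check described in the abstract above can be sketched in a few lines. This is a hypothetical illustration, not the authors' experiment: the artifact names, SECURITY_ARTIFACTS, should_execute, and vaccinate are assumptions made up for the example. It shows why vaccination can work: the same check that lets evasive malware skip a monitored host also makes it skip a host where a defender has planted a decoy artifact.

```python
"""Hypothetical sketch of the environment check that malware vaccination exploits."""

# Assumed artifact names: placeholders, not taken from any real malware sample.
SECURITY_ARTIFACTS = {"analysis_tool.exe", "sandbox_marker.txt", "av_service"}


def should_execute(present_artifacts: set[str]) -> bool:
    """Return True only if none of the security-related artifacts is present.

    Mirrors the evasive logic described in the abstract: inspect the
    environment and skip the payload when the host looks monitored.
    """
    return SECURITY_ARTIFACTS.isdisjoint(present_artifacts)


def vaccinate(present_artifacts: set[str], decoy: str) -> set[str]:
    """Return a new artifact set with a defender-planted decoy added.

    The input set is not modified.
    """
    return present_artifacts | {decoy}


if __name__ == "__main__":
    clean_host = {"explorer.exe", "notepad.exe"}
    print(should_execute(clean_host))  # True: no security artifact found
    vaccinated = vaccinate(clean_host, "sandbox_marker.txt")
    print(should_execute(vaccinated))  # False: the decoy triggers the evasion check
```

The design choice to return a new set from vaccinate keeps the "before" and "after" host states side by side, which makes the effect of the decoy easy to compare.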

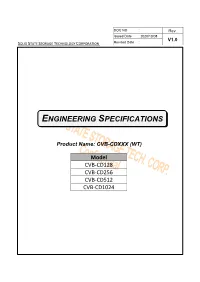

Engineering Specifications

SOLID STATE STORAGE TECHNOLOGY CORPORATION. Rev. V1.0, Issued Date: 2020/10/08. ENGINEERING SPECIFICATIONS. Product Name: CVB-CDXXX (WT). Models: CVB-CD128, CVB-CD256, CVB-CD512, CVB-CD1024. Author: Ken Liao. Version History: 0.1, Draft, 2020/07/20; 1.0, First release, 2020/10/08. Copyright 2020 SOLID STATE STORAGE TECHNOLOGY CORPORATION. Disclaimer: The information in this document is subject to change without prior notice in order to improve reliability, design, and function and does not represent a commitment on the part of the manufacturer. In no event will the manufacturer be liable for direct, indirect, special, incidental, or consequential damages arising out of the use or inability to use the product or documentation, even if advised of the possibility of such damages. This document contains proprietary information protected by copyright. All rights are reserved. No part of this datasheet may be reproduced by any mechanical, electronic, or other means in any form without prior written permission of SOLID STATE STORAGE TECHNOLOGY CORPORATION. Table of Contents: 1 Introduction; 1.1 Overview

Control Design and Implementation of Hard Disk Drive Servos by Jianbin Nie

Control Design and Implementation of Hard Disk Drive Servos, by Jianbin Nie. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Engineering-Mechanical Engineering in the Graduate Division of the University of California, Berkeley. Committee in charge: Professor Roberto Horowitz, Chair; Professor Masayoshi Tomizuka; Professor David Brillinger. Spring 2011. Copyright © 2011 by Jianbin Nie. Abstract. In this dissertation, the design of servo control algorithms is investigated to produce high-density and cost-effective hard disk drives (HDDs). In order to sustain the continuing increase of HDD data storage density, dual-stage actuator servo systems using a secondary micro-actuator have been developed to improve the precision of read/write head positioning control by increasing their servo bandwidth. In this dissertation, the modeling and control design of dual-stage track-following servos are considered. Specifically, two track-following control algorithms for dual-stage HDDs are developed. The designed controllers were implemented and evaluated on a disk drive with a PZT-actuated suspension-based dual-stage servo system. Usually, the feedback position error signal (PES) in HDDs is sampled on specified servo sectors with an equidistant sampling interval, which implies that HDD servo systems with a regular sampling rate can be modeled as linear time-invariant (LTI) systems. However, sampling intervals for HDD servo systems are not always equidistant and, sometimes, an irregular sampling rate due to missing PES sampling data is unavoidable.
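
Why equidistant sampling leads to an LTI model can be illustrated with the standard zero-order-hold discretization of a continuous-time plant. This is a generic textbook derivation, not the dissertation's own model; the state $x$, input $u$, output $y$, matrices $A$, $B$, $C$, and the interval $T_k$ between the $k$-th and $(k+1)$-th samples are assumed symbols.

```latex
\dot{x}(t) = A\,x(t) + B\,u(t), \qquad y(t) = C\,x(t)

% Input held constant over the k-th interval of length T_k:
x_{k+1} = A_d(T_k)\,x_k + B_d(T_k)\,u_k, \qquad y_k = C\,x_k

A_d(T) = e^{A T}, \qquad B_d(T) = \left( \int_0^{T} e^{A\tau}\,d\tau \right) B
```

When every interval equals the same $T$, $A_d$ and $B_d$ are constant matrices and the sampled model is LTI. If, for example, one PES sample is missing and the next update uses the following sample, that step's interval becomes $2T$, so the matrices change with $k$ and the model is no longer time-invariant.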

Manufacturing Equipment Technologies for Hard Disk’s Challenge of Physical Limits

Manufacturing Equipment Technologies for Hard Disk’s Challenge of Physical Limits. Kyoichi Mori, Brian Rattray, Yuichi Matsui, Dr. Eng. OVERVIEW: To meet the world’s growing demand for volume information, not just with computers but also with digitalization, video, etc., the HDD must continually increase its capacity and meet expectations for reduced power consumption and green IT. Up until now the HDD has undergone many innovative technological developments to achieve higher recording densities. To continue this increase, innovative new technology is again required and is currently being developed at a brisk pace. The key components for areal density improvements, the disk and head, require high levels of performance and reliability from production and inspection equipment for efficient manufacturing and stable quality assurance. To meet this demand, high-frequency electronics, servo positioning, and optical inspection technologies are being developed and equipment provided. Hitachi High-Technologies Corporation is doing its part to meet market needs for increased production and the adoption of next-generation technology by developing the technology and providing disk and head manufacturing/inspection equipment (see Fig. 1). INTRODUCTION: HDDs (hard disk drives) have long relied on the computer market for growth, but in recent years there has been a shift towards cloud computing and consumer electronics, along with a rapid expansion of data storage applications (see Fig. … higher efficiency storage, namely higher density HDDs, will play a major role. To increase density, the performance and quality of the HDD’s key components, disks (media) and heads, have the most effect. Therefore further technological …

Designed to Engage Students and Empower Educators

PRODUCT BRIEF: Intel® classmate PC – Convertible. Intel® Celeron® Processors 847/NM70 (dual-core). Part of Intel® Education Solutions. Designed to Engage Students and Empower Educators. Designed to make learning more fun, creative, and engaging, the Intel® classmate PC – convertible brings together the best of Windows* 8 touch and keyboard experiences, the amazing performance of Intel® Celeron® processors, and Intel® Education Software. The new Intel classmate PC – convertible keeps students excited about learning. With the flip of the display, it quickly transforms from a full-featured PC to a convenient tablet with pen, making it easy to adapt the device to the teaching method and learning task at hand. Whether gathering field data with the HD camera and annotating it with the pen, or analyzing, interpreting, and reporting results using collaborative Intel Education Software, the convertible classmate PC is designed for education and engineered for students to develop the 21st century skills needed to succeed in today's global economy.
"The Intel classmate PC – convertible creates an ecosystem of digital learning which encompasses 1:1 technologies, independent learning, digital creativity, device management, and learning beyond school. It is truly versatile, easily catering for the …"
Highlights:
• Two devices in one: tablet and full-feature PC
• Supports both Windows 8 desktop and touch modes
• Together with Intel Education Software, the convertible classmate PC is part of Intel Education Solutions
• High Definition LCD display produces vivid …

Pro .NET Memory Management for Better Code, Performance, and Scalability

Pro .NET Memory Management: For Better Code, Performance, and Scalability. Konrad Kokosa, Warsaw, Poland. ISBN-13 (pbk): 978-1-4842-4026-7. ISBN-13 (electronic): 978-1-4842-4027-4. https://doi.org/10.1007/978-1-4842-4027-4. Library of Congress Control Number: 2018962862. Copyright © 2018 by Konrad Kokosa. This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. Trademarked names, logos, and images may appear in this book. Rather than use a trademark symbol with every occurrence of a trademarked name, logo, or image we use the names, logos, and images only in an editorial fashion and to the benefit of the trademark owner, with no intention of infringement of the trademark. The use in this publication of trade names, trademarks, service marks, and similar terms, even if they are not identified as such, is not to be taken as an expression of opinion as to whether or not they are subject to proprietary rights. While the advice and information in this book are believed to be true and accurate at the date of publication, neither the authors nor the editors nor the publisher can accept any legal responsibility for any errors or omissions that may be made.

Mobile Computer Comparison

Mobile Computer Comparison System Classmate PC Classmate PC Eee PC 700 Eee PC 900 Kindle Mobilis OLPC HP 2133 Mini- Version 1.5 Version 1.5 Note 1st Generation 2nd Generation Processor (CPU) Intel ® Mobile Intel ® Mobile Intel® Mobile Intel® Mobile CPU Intel PXA255 TI OMAP2431 AMD x86 VIA C7-M Processor ULV Processor ULV 900 Celeron & Chipset 400MHz** 433 MHz 1.0GHz up to 900 MHz, Zero MHz, Zero L2 cache, 1.6GHz L2 cache, 400 400 MHz FSB MHz FSB Memory (DRAM) 256 RAM DDR ‐II 256 MB 512 MB ‐ 1GB 1GB 256 MB 128 MB – 512 MB 256 MB 1024 MB (Linux only) or 512 MB Storage/Hard Drive 4GB Flash Drive 1 GB Flash (for 2, 4, or 8GB Flash 12GB (4GB built‐in + 256 MB Flash 128 MB Flash 1024 MB Flash a 4GB SSD low or Linux), 2GB/4GB 8GB flash) SSD end 40GB Hard Flash, 1.8 HDD (Microsoft Windows 120GB HDD Drive OS Version) 20GB (4GB built‐in +16GB flash) SSD (Linux OS Version) Screen (diagonal) 7” LCD 9ʺ LCD 7ʺ LCD 8.9ʺ 6” LCD 7.0ʺ LCD 7.5ʺ LCD 8.9" Input Integrated Water Resistant Integrated keyboard Integrated keyboard 2‐Thumb keyboard Integrated Integrated keyboard 92% full-size, keyboard Hot Keyboard and Touch pad Touch pad Scroll wheel keyboard/ Optional with sealed rubber user-friendly Keys Touchpad USB Keyboard membrane QWERTY Touch Pad with keyboard L/R Wireless Pen Multimedia (built in) Stereo Integrated 2 channel Stereo speakers/mic HD audio / built‐in Headphone jack Stereo speakers/mic Stereo speakers Stereo speakers speakers/mic audio, Built‐in speakers MP3 jack Video Player Mono mic Mono mic Mic input jack speaker and Audio book jack MP3

Insight Analysis

INSIGHT, WINTER 2016, ISSUE 6: IT ASSET DISPOSAL • RISK MANAGEMENT • COMPLIANCE • IT SECURITY • DATA PROTECTION. In this issue: Page 3, INSIGHT: EU Data Protection Regulation; Page 9, TONY BENHAM ON THE TRIALS OF BEING AN ADISA AUDITOR; Page 13, JEFFREY DEAN LOOKS IN DETAIL AT THE DATA SECURITY ACT; Page 17, ANALYSIS: Exploring the Hidden Areas on Erased Drives; Page 20, A GAME OF TAG: THE CLOSED-LOOP RFID SYSTEM; Page 21, WHO'S WHO: FULL LIST OF CERTIFIED MEMBERS WORLDWIDE.
Audit Monitoring Service: When releasing ICT assets as part of your disposal service, it is vital to ensure your supply chain is processing your equipment correctly. This is both for peace of mind and to show compliance with the Data Protection Act and the Information Commissioner's Office guidance notes. All members within the ADISA certification program undergo scheduled and unannounced audits to ensure they meet the certified requirements. Issues that arise can lead to changes in their certified status, or even having it withdrawn. These reports can be employed by end-users as part of their own downstream management tools and are available free of charge via the ADISA monitoring service.
EDITORIAL, WINTER 2016: This edition was due for release in the summer. But the events of June 23 were not only the stuff of debate in bars and boardrooms throughout Europe; they forced us into countless re-drafts. We welcome external authors who wish to discuss anything that will add value to members. In this edition, Gill Barstow discusses a favourite subject of ours: building your value proposition. And an old friend, Gavin Coates, introduces his ITAD …
EDITOR: Steve Mellings. COPY EDITOR: Richard Burton. CONTENT AUTHORS: Steve Mellings, Anthony Benham, Gill Barstow, Alan Dukinfield. DESIGN: Antoney Calvert at Colourform Creative Studio (colour-form.com).