Change Detection Using SAR Data

Recommended publications

Circularly-Symmetric Complex Normal Ratio Distribution for Scalar Transmissibility Functions. Part II: Probabilistic Model and Validation

Mechanical Systems and Signal Processing 80 (2016) 78–98. Circularly-symmetric complex normal ratio distribution for scalar transmissibility functions. Part II: Probabilistic model and validation. Wang-Ji Yan, Wei-Xin Ren. Department of Civil Engineering, Hefei University of Technology, Hefei, Anhui 23009, China. Article history: Received 29 May 2015; received in revised form 3 February 2016; accepted 27 February 2016; available online 23 March 2016. Keywords: Transmissibility; Uncertainty quantification; Signal processing; Statistics; Structural dynamics; Ambient vibration. Abstract: In Part I of this study, some new theorems, corollaries and lemmas on circularly-symmetric complex normal ratio distribution have been mathematically proved. This part II paper is dedicated to providing a rigorous treatment of statistical properties of raw scalar transmissibility functions at an arbitrary frequency line. On the basis of statistics of raw FFT coefficients and circularly-symmetric complex normal ratio distribution, explicit closed-form probabilistic models are established for both multivariate and univariate scalar transmissibility functions. Also, remarks on the independence of transmissibility functions at different frequency lines and the shape of the probability density function (PDF) of univariate case are presented. The statistical structures of probabilistic models are concise, compact and easy-implemented with a low computational effort. They hold for general stationary vector processes, either Gaussian stochastic processes or non-Gaussian stochastic processes. The accuracy of proposed models is verified using numerical example as well as field test data of a high-rise building and a long-span cable-stayed bridge.

Basic Econometrics / Statistics Statistical Distributions: Normal, T, Chi-Sq, & F

Basic Econometrics / Statistics. Statistical Distributions: Normal, T, Chi-Sq, & F. Course: Basic Econometrics: HC43 / Statistics, B.A. Hons Economics, Semester IV / Semester III, Delhi University. Course Instructor: Siddharth Rathore, Assistant Professor, Economics Department, Gargi College. APPENDIX C: SOME IMPORTANT PROBABILITY DISTRIBUTIONS. In Appendix B we noted that a random variable (r.v.) can be described by a few characteristics, or moments, of its probability function (PDF or PMF), such as the expected value and variance. This, however, presumes that we know the PDF of that r.v., which is a tall order since there are all kinds of random variables. In practice, however, some random variables occur so frequently that statisticians have determined their PDFs and documented their properties. For our purpose, we will consider only those PDFs that are of direct interest to us. But keep in mind that there are several other PDFs that statisticians have studied which can be found in any standard statistics textbook. In this appendix we will discuss the following four probability distributions: 1. The normal distribution; 2. The t distribution; 3. The chi-square (χ²) distribution; 4. The F distribution. These probability distributions are important in their own right, but for our purposes they are especially important because they help us to find out the probability distributions of estimators (or statistics), such as the sample mean and sample variance. Recall that estimators are random variables. Equipped with that knowledge, we will be able to draw inferences about their true population values.

Hand-Book on STATISTICAL DISTRIBUTIONS for Experimentalists

Internal Report SUF–PFY/96–01, Stockholm, 11 December 1996; 1st revision, 31 October 1998; last modification 10 September 2007. Hand-book on STATISTICAL DISTRIBUTIONS for experimentalists, by Christian Walck, Particle Physics Group, Fysikum, University of Stockholm (e-mail: [email protected]).

Contents
1 Introduction
1.1 Random Number Generation
2 Probability Density Functions
2.1 Introduction
2.2 Moments
2.2.1 Errors of Moments
2.3 Characteristic Function
2.4 Probability Generating Function
2.5 Cumulants
2.6 Random Number Generation
2.6.1 Cumulative Technique
2.6.2 Accept-Reject technique
2.6.3 Composition Techniques
2.7 Multivariate Distributions
2.7.1 Multivariate Moments
2.7.2 Errors of Bivariate Moments
2.7.3 Joint Characteristic Function
2.7.4 Random Number Generation
3 Bernoulli Distribution
3.1 Introduction
3.2 Relation to Other Distributions
4 Beta distribution
4.1 Introduction

Field Guide to Continuous Probability Distributions

Field Guide to Continuous Probability Distributions. Gavin E. Crooks. v 1.0.0, 2019. Copyright © 2010-2019 Gavin E. Crooks. ISBN: 978-1-7339381-0-5. http://threeplusone.com/fieldguide. Berkeley Institute for Theoretical Sciences (BITS). Typeset on 2019-04-10 with XeTeX version 0.99999; fonts: Trump Mediaeval (text), Euler (math). Preface: The search for GUD. A common problem is that of describing the probability distribution of a single, continuous variable. A few distributions, such as the normal and exponential, were discovered in the 1800’s or earlier. But about a century ago the great statistician, Karl Pearson, realized that the known probability distributions were not sufficient to handle all of the phenomena then under investigation, and set out to create new distributions with useful properties. During the 20th century this process continued with abandon and a vast menagerie of distinct mathematical forms were discovered and invented, investigated, analyzed, rediscovered and renamed, all for the purpose of describing the probability of some interesting variable. There are hundreds of named distributions and synonyms in current usage. The apparent diversity is unending and disorienting. Fortunately, the situation is less confused than it might at first appear. Most common, continuous, univariate, unimodal distributions can be organized into a small number of distinct families, which are all special cases of a single Grand Unified Distribution. This compendium details these hundred or so simple distributions, their properties and their interrelations.

Mathematical Expectation

Mathematical Expectation. Contents: 1 Introduction; 2 The Expected Value of a Random Variable; 3 Moments; 4 Chebyshev’s Theorem; 5 Moment-Generating Functions; 6 Product Moments; 7 Moments of Linear Combinations of Random Variables; 8 Conditional Expectations; 9 The Theory in Practice. 1 Introduction. Originally, the concept of a mathematical expectation arose in connection with games of chance, and in its simplest form it is the product of the amount a player stands to win and the probability that he or she will win. For instance, if we hold one of 10,000 tickets in a raffle for which the grand prize is a trip worth $4,800, our mathematical expectation is 4,800 · (1/10,000) = $0.48. This amount will have to be interpreted in the sense of an average: altogether the 10,000 tickets pay $4,800, or on the average $4,800/10,000 = $0.48 per ticket. If there is also a second prize worth $1,200 and a third prize worth $400, we can argue that altogether the 10,000 tickets pay $4,800 + $1,200 + $400 = $6,400, or on the average $6,400/10,000 = $0.64 per ticket. Looking at this in a different way, we could argue that if the raffle is repeated many times, we would lose 99.97 percent of the time (or with probability 0.9997) and win each of the prizes 0.01 percent of the time (or with probability 0.0001). On the average we would thus win 0(0.9997) + 4,800(0.0001) + 1,200(0.0001) + 400(0.0001) = $0.64, which is the sum of the products obtained by multiplying each amount by the corresponding probability.
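The raffle arithmetic in this excerpt can be checked with a short Python sketch. The variable names (`tickets`, `prizes`, `outcomes`) are illustrative choices, not taken from the textbook, and exact fractions are used only to avoid floating-point rounding.

```python
from fractions import Fraction

# Raffle from the excerpt: 10,000 tickets, three prizes, we hold one ticket.
tickets = 10_000
prizes = [4_800, 1_200, 400]

p_win = Fraction(1, tickets)            # probability of winning any one prize
p_lose = 1 - len(prizes) * p_win        # probability of winning nothing

# (amount, probability) pairs; the probabilities must sum to 1.
outcomes = [(0, p_lose)] + [(prize, p_win) for prize in prizes]
assert sum(p for _, p in outcomes) == 1

# Expectation: sum of each amount times its probability.
expected = sum(amount * p for amount, p in outcomes)
print(float(p_lose), float(expected))
```

The same sum-of-products pattern applies to any discrete random variable once its outcomes and probabilities are listed.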

On the Quotient of a Centralized and a Non-Centralized Complex Gaussian Random Variable

Journal of Research of National Institute of Standards and Technology, Volume 125, Article No. 125030 (2020). https://doi.org/10.6028/jres.125.030. On The Quotient of a Centralized and a Non-centralized Complex Gaussian Random Variable. Dazhen Gu, National Institute of Standards and Technology, Boulder, CO 80305, USA. [email protected]. A detailed investigation of the quotient of two independent complex random variables is presented. The numerator has a zero mean, and the denominator has a non-zero mean. A normalization step is taken prior to the theoretical developments in order to simplify the formulation. Next, an indirect approach is taken to derive the statistics of the modulus and phase angle of the quotient. That in turn enables a straightforward extension of the statistical results to real and imaginary parts. After the normalization procedure, the probability density function of the quotient is found as a function of only the mean of the random variable that corresponds to the denominator term. Asymptotic analysis shows that the quotient closely resembles a normally-distributed complex random variable as the mean becomes large. In addition, the first and second moments, as well as the approximate of the second moment of the clipped random variable, are derived, which are closely related to practical applications in complex-signal processing such as microwave metrology of scattering-parameters. Tolerance intervals associated with the ratio of complex random variables are presented. Key words: complex random variables; Rayleigh distribution; Rice distribution. Accepted: September 7, 2020. Published: September 23, 2020.

Supernovae As Cosmological Probes

University of Copenhagen. Supernovae as cosmological probes. Jeppe Trøst Nielsen, Niels Bohr International Academy, Niels Bohr Institute, University of Copenhagen. July 24, 2015. arXiv:1508.07850v1 [astro-ph.CO] 31 Aug 2015. Thesis submitted for the degree of MSc in Physics. Academic supervisors: Subir Sarkar and Alberto Guffanti. FOREWORD. This work started as a wild goose chase for evidence beyond any doubt that supernova data show cosmic acceleration. Through a study involving artificial neural networks, trying to find parametrisation free constraints on the expansion history of the universe, we ran into trouble that led us all the way to reconsider the standard method. We encountered the same problem that has been lurking in many previous studies, only in an uncommon disguise. Solving this problem for the neural networks degenerated into solving the original problem. Having done that, it turned out that this result in itself is interesting. This thesis is more or less laying out the article [1] in all gory details. The level of rigour throughout is kept, I think, sufficient but not over the top; particularly the chapter on statistics suffers at the hands of a physicist. I have tried to keep unnecessary details out of the way in favor of results and physical insight. I leave the details to be filled in by smarter people (well, more interested people). A bunch of humble thanks to everyone who helped me at the institute, including, but presumably not limited to, Laure, Andy, Chris, Anne Mette, Sebastian, Assaf, Morten, Jenny, Christian, Tristan, and the rest of the Academy and high energy groups, and of course Helle and Anette without whom our building would crumble.

Probabilistic Modelling for Frequency Response Functions and Transmissibility Functions with Complex Ratio Statistics

Probabilistic Modelling for Frequency Response Functions and Transmissibility Functions with Complex Ratio Statistics. Meng-Yun Zhao, Department of Civil Engineering, Hefei University of Technology, China. E-mail: [email protected]. Wang-Ji Yan*, Institute for Aerospace Technology & The Composites Group, The University of Nottingham, United Kingdom; Department of Civil Engineering, Hefei University of Technology, China. E-mail: [email protected]. Wei-Xin Ren, Department of Civil Engineering, Hefei University of Technology, China. E-mail: [email protected]. Michael Beer, Institute for Risk and Reliability, Leibniz Universität Hannover, Germany. E-mail: [email protected]. The distributions of ratios of random variables arise in many applied problems such as in structural dynamics working with frequency response functions (FRFs) and transmissibility functions (TFs). When analysing the distribution properties of ratio random variables through the definition of probability density functions (PDF), the problem is usually accompanied by multiple integrals. In this study, a unified solution is presented to efficiently calculate the PDF of a ratio random variable with its denominator and numerator specified by arbitrary distributions. With the use of probability density transformation principle, a unified expression can be derived for the ratio random variable by reducing the concerned problem into two-dimensional integrals. As a result, the PDFs of the ratio random variable can be efficiently computed by using effective numerical integration techniques. Finally, based on the vibration tests performed on the Alamosa Canyon Bridge, the proposed method is applied to the data to quantify the uncertainty of FRFs and TFs. Keywords: Frequency response function, transmissibility function, probability density function, ratio distribution, numerical integration, structural dynamics.
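To illustrate the general idea of obtaining a ratio PDF by numerical integration, here is a minimal Python sketch. It is not the paper's unified method, which treats complex-valued ratios through two-dimensional integrals. It assumes the simpler textbook case of two independent real random variables X and Y, for which the density of Z = X/Y is f_Z(z) = ∫ |y| f_X(zy) f_Y(y) dy. The function names, integration limits and step count are hypothetical choices for this example.

```python
import math

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of a real normal distribution N(mu, sigma^2)."""
    u = (x - mu) / sigma
    return math.exp(-0.5 * u * u) / (sigma * math.sqrt(2.0 * math.pi))

def ratio_pdf(z, f_num, f_den, lo=-10.0, hi=10.0, n=4000):
    """Density of Z = X / Y at z for independent real X ~ f_num, Y ~ f_den.

    Evaluates f_Z(z) = integral of |y| f_num(z*y) f_den(y) dy with the
    composite trapezoidal rule on [lo, hi]. The limits are assumed wide
    enough that the integrand is negligible outside them.
    """
    h = (hi - lo) / n
    total = 0.0
    for i in range(n + 1):
        y = lo + i * h
        weight = 0.5 if i in (0, n) else 1.0   # trapezoid end-point weights
        total += weight * abs(y) * f_num(z * y) * f_den(y)
    return total * h

# Sanity check: the ratio of two independent standard normals is standard
# Cauchy, whose density is 1 / (pi * (1 + z^2)).
for z in (0.0, 1.0, 2.5):
    exact = 1.0 / (math.pi * (1.0 + z * z))
    print(z, ratio_pdf(z, normal_pdf, normal_pdf), exact)
```

The Cauchy comparison is only a check on the quadrature. For heavy-tailed or non-central inputs, the integration limits and step count would need to be chosen with more care.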

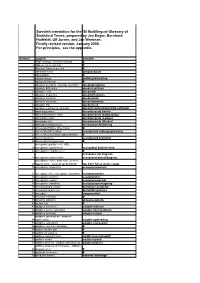

Swedish Translation for the ISI Multilingual Glossary of Statistical Terms, Prepared by Jan Enger, Bernhard Huitfeldt, Ulf Jorner, and Jan Wretman

Swedish translation for the ISI Multilingual Glossary of Statistical Terms, prepared by Jan Enger, Bernhard Huitfeldt, Ulf Jorner, and Jan Wretman. Finally revised version, January 2008. For principles, see the appendix.

Mixture Models As an Exploratory Tool

Bivariate Models for the Analysis of Internal Nitrogen Use Efficiency: Mixture Models as an Exploratory Tool. Isabel Munoz Santa (Masters of Applied Science in Biometrics). A thesis submitted for the degree of Masters of Applied Science in Biometrics in the University of Adelaide, School of Agriculture Food and Wine, July 2014. Acknowledgments. I would like to express my deepest gratitude to my advisor Dr. Olena Kravchuck for her expertise, guidance, support and encouragement. This thesis would not have been possible without her. Thank you for your efforts to make me a better professional and give me the opportunity to study here! I would also like to thank my second supervisor Dr. Petra Marschner for her guidance in the biological aspects of my thesis and Dr. Stephan Haefele who kindly provided the data for the case study of this thesis and provided support in the interpretation of the results. I would like to acknowledge the Faculty of Science for providing the Turner Family Scholarship which supported this research and provided travel assistance to attend the Australian Statistical Conference, July 2014 Adelaide, Young Statistician Conference, February 2013 Melbourne, International Biometrics Society Conference, December 2014 Mandurah and Australian Statistical Conference in conjunction with the Institute of Mathematical Statistics Annual Meeting, July 2014 Sydney. I would like to thank all the people at the Biometry Hub: Bev, David, Jules, Paul, Stephen and Wayne for their big smiles and for creating a positive working environment as well as the wonderful group of statisticians at Waite, meeting regularly for the professional development discussions.

The Exact Distribution of the Ratio of Two Independent Hypoexponential Random Variables

British Journal of Mathematics & Computer Science X(X): XX-XX, 20XX. SCIENCEDOMAIN international, www.sciencedomain.org. The Exact Distribution of The Ratio of Two Independent Hypoexponential Random Variables. Therrar Kadri (Department of Mathematics, Faculty of Sciences, Beirut Arab University, Beirut, Lebanon) and Khaled Smaili (Department of Applied Mathematics, Faculty of Sciences, Lebanese University, Zahle, Lebanon). Original Research Article. Received: XX December 20XX. Accepted: XX December 20XX. Online Ready: XX December 20XX. Abstract: The distribution of the ratio of two random variables is of interest in many areas of the sciences. Ratios of variables from the same family, and their exact density expressions, have been studied by many authors for decades. Some cases have been solved exactly and others only approximately, but many remain unsolved. In this paper, we consider the ratio of two independent Hypoexponential distributions. We find the exact expressions for the probability density function, the cumulative distribution function, moment generating function, the reliability function and hazard function, which was proved to be a linear combination of the Generalized-F distribution. Keywords: Ratio Distribution; Hypoexponential Distribution; Erlang Distribution; Generalized-F Distribution; Log-logistic Distribution; Probability Density Function; Cumulative Distribution Function; Reliability Function; Hazard Function. 1 Introduction. The distributions of ratios of random variables are widely used in many applied problems of engineering, physics, number theory, order statistics, economics, biology, genetics, medicine, hydrology, psychology, classification, and ranking and selection, see [1,2,3,4]. Examples include safety factor in engineering, mass to energy ratios in nuclear physics, target to control precipitation in meteorology, inventory ratios in economics and Mendelian inheritance ratios in genetics, see [1,2].

Circularly-Symmetric Complex Normal Ratio Distribution for Scalar Transmissibility Functions. Part I: Fundamentals

Mechanical Systems and Signal Processing 80 (2016) 58–77. Circularly-symmetric complex normal ratio distribution for scalar transmissibility functions. Part I: Fundamentals. Wang-Ji Yan, Wei-Xin Ren. Department of Civil Engineering, Hefei University of Technology, Hefei, Anhui Province 23009, China. Article history: Received 28 May 2015; received in revised form 3 February 2016; accepted 27 February 2016; available online 22 March 2016. Keywords: Transmissibility; Uncertainty quantification; Ratio distribution; Multivariate statistics; Complex statistics. Abstract: Recent advances in signal processing and structural dynamics have spurred the adoption of transmissibility functions in academia and industry alike. Due to the inherent randomness of measurement and variability of environmental conditions, uncertainty impacts its applications. This study is focused on statistical inference for raw scalar transmissibility functions modeled as complex ratio random variables. The goal is achieved through companion papers. This paper (Part I) is dedicated to dealing with a formal mathematical proof. New theorems on multivariate circularly-symmetric complex normal ratio distribution are proved on the basis of principle of probabilistic transformation of continuous random vectors. The closed-form distributional formulas for multivariate ratios of correlated circularly-symmetric complex normal random variables are analytically derived. Afterwards, several properties are deduced as corollaries and lemmas to the new theorems. Monte Carlo simulation (MCS) is utilized to verify the accuracy of some representative cases. This work lays the mathematical groundwork to find probabilistic models for raw scalar transmissibility functions, which are to be expounded in detail in Part II of this study.