Big Iron Lessons, Part 3: Performance Monitoring and Tuning Page 1 of 18

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Wind River Vxworks Platforms 3.8

Wind River VxWorks Platforms 3.8 The market for secure, intelligent, Table of Contents Build System ................................ 24 connected devices is constantly expand- Command-Line Project Platforms Available in ing. Embedded devices are becoming and Build System .......................... 24 VxWorks Edition .................................2 more complex to meet market demands. Workbench Debugger .................. 24 New in VxWorks Platforms 3.8 ............2 Internet connectivity allows new levels of VxWorks Simulator ....................... 24 remote management but also calls for VxWorks Platforms Features ...............3 Workbench VxWorks Source increased levels of security. VxWorks Real-Time Operating Build Configuration ...................... 25 System ...........................................3 More powerful processors are being VxWorks 6.x Kernel Compatibility .............................3 considered to drive intelligence and Configurator ................................. 25 higher functionality into devices. Because State-of-the-Art Memory Host Shell ..................................... 25 Protection ..................................3 real-time and performance requirements Kernel Shell .................................. 25 are nonnegotiable, manufacturers are VxBus Framework ......................4 Run-Time Analysis Tools ............... 26 cautious about incorporating new Core Dump File Generation technologies into proven systems. To and Analysis ...............................4 System Viewer ........................ -

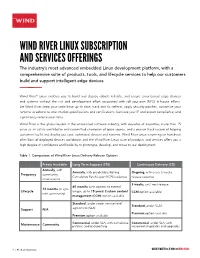

Wind River Linux Subscription and Services Offerings

WIND RIVER LINUX SUBSCRIPTION AND SERVICES OFFERINGS The industry’s most advanced embedded Linux development platform, with a comprehensive suite of products, tools, and lifecycle services to help our customers build and support intelligent edge devices Wind River® Linux enables you to build and deploy robust, reliable, and secure Linux-based edge devices and systems without the risk and development effort associated with roll-your-own (RYO) in-house efforts. Let Wind River keep your code base up to date, track and fix defects, apply security patches, customize your runtime to adhere to strict market specifications and certifications, facilitate your IP and export compliance, and significantly reduce your costs. Wind River is the global leader in the embedded software industry, with decades of expertise, more than 15 years as an active contributor and committed champion of open source, and a proven track record of helping customers build and deploy use case–optimized devices and systems. Wind River Linux is running on hundreds of millions of deployed devices worldwide, and the Wind River Linux suite of products and services offers you a high degree of confidence and flexibility to prototype, develop, and move to real deployment. Table 1. Comparison of Wind River Linux Delivery Release Options Freely Available Long Term Support (LTS) Continuous Delivery (CD) Annually, with Annually, with predictable Rolling Ongoing, with every-3-weeks Frequency community Cumulative Patch Layer (RCPL) cadence release cadence maintenance 3 weeks, until next -

Meltdown and Spectre Understanding And

Meltdown and Spectre Prepared by Christopher Clai January 18th, 2018 Understanding and Remediation Strategy Broad Overview The General Law of Cross-Task Information Leakage “In any setting where short-term performance optimizations have global effect, a sufficiently clever task can infer the recent history of other tasks by observing its own performance.” Meltdown and Spectre are two hardware vulnerabilities that target the Central Processing Unit (CPU) of computing systems with cross-task information leakage. They were identified by four separate teams of security researchers who then disclosed the vulnerabilities to the company’s months prior to public disclosure. These vulnerabilities leverage the performance optimization architecture of modern day processors to their advantage. • Meltdown allows an attacker to retrieve kernel memory, which is privileged space in your Operating System that may contain password hashes and private keys for data decryption. • Spectre allows an attacker to retrieve information within the process it operates in, and execute code even if it was isolated in a sandbox within a process. The vulnerabilities require attackers to already have a way into your network, and at most can exfiltrate data from memory. They are unable to cause things such as privilege escalation, holding your data for ransom, or causing business disruption. They data they retrieve however, may be used for that purpose if it contains the information they are seeking. The initial rush of patches and microcode (firmware for CPU) updates have been somewhat brute and simplistic in their methods and only seek to mitigate potential practical exploit paths for these vulnerabilities. Researchers do not believe there will potential to fully prevent these types of exploits. -

Extended Memory 64 Technology Software Developer's Manual

IA-32 Intel® Architecture and Intel® Extended Memory 64 Technology Software Developer’s Manual Documentation Changes November 2004 Notice: The IA-32 Intel® Architecture and Intel® Extended Memory 64 Technology may contain design defects or errors known as errata that may cause the product to deviate from published specifications. Current characterized errata are documented in the specification updates. Document Number: 252046-011 INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. Intel products are not intended for use in medical, life saving, or life sustaining applications. Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. The IA-32 Intel® Architecture and Intel® Extended Memory 64 Technology may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order. -

Class-Action Lawsuit

Case 3:20-cv-00863-SI Document 1 Filed 05/29/20 Page 1 of 279 Steve D. Larson, OSB No. 863540 Email: [email protected] Jennifer S. Wagner, OSB No. 024470 Email: [email protected] STOLL STOLL BERNE LOKTING & SHLACHTER P.C. 209 SW Oak Street, Suite 500 Portland, Oregon 97204 Telephone: (503) 227-1600 Attorneys for Plaintiffs [Additional Counsel Listed on Signature Page.] UNITED STATES DISTRICT COURT DISTRICT OF OREGON PORTLAND DIVISION BLUE PEAK HOSTING, LLC, PAMELA Case No. GREEN, TITI RICAFORT, MARGARITE SIMPSON, and MICHAEL NELSON, on behalf of CLASS ACTION ALLEGATION themselves and all others similarly situated, COMPLAINT Plaintiffs, DEMAND FOR JURY TRIAL v. INTEL CORPORATION, a Delaware corporation, Defendant. CLASS ACTION ALLEGATION COMPLAINT Case 3:20-cv-00863-SI Document 1 Filed 05/29/20 Page 2 of 279 Plaintiffs Blue Peak Hosting, LLC, Pamela Green, Titi Ricafort, Margarite Sampson, and Michael Nelson, individually and on behalf of the members of the Class defined below, allege the following against Defendant Intel Corporation (“Intel” or “the Company”), based upon personal knowledge with respect to themselves and on information and belief derived from, among other things, the investigation of counsel and review of public documents as to all other matters. INTRODUCTION 1. Despite Intel’s intentional concealment of specific design choices that it long knew rendered its central processing units (“CPUs” or “processors”) unsecure, it was only in January 2018 that it was first revealed to the public that Intel’s CPUs have significant security vulnerabilities that gave unauthorized program instructions access to protected data. 2. A CPU is the “brain” in every computer and mobile device and processes all of the essential applications, including the handling of confidential information such as passwords and encryption keys. -

Intel(R) 64 and IA32 Architectures Software Developer's Manual Vol 3B

Intel® 64 and IA-32 Architectures Software Developer’s Manual Volume 3B: System Programming Guide, Part 2 NOTE: The Intel® 64 and IA-32 Architectures Software Developer's Manual consists of five volumes: Basic Architecture, Order Number 253665; Instruction Set Reference A-M, Order Number 253666; Instruction Set Reference N-Z, Order Number 253667; System Programming Guide, Part 1, Order Number 253668; System Programming Guide, Part 2, Order Number 253669. Refer to all five volumes when evaluating your design needs. Order Number: 253669-031US June 2009 INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANT- ED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. UNLESS OTHERWISE AGREED IN WRITING BY INTEL, THE INTEL PRODUCTS ARE NOT DESIGNED NOR IN- TENDED FOR ANY APPLICATION IN WHICH THE FAILURE OF THE INTEL PRODUCT COULD CREATE A SITUA- TION WHERE PERSONAL INJURY OR DEATH MAY OCCUR. Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked "reserved" or "unde- fined." Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. -

NVM Express and the PCI Express* SSD Revolution SSDS003

NVM Express and the PCI Express* SSD Revolution Danny Cobb, CTO Flash Memory Business Unit, EMC Amber Huffman, Sr. Principal Engineer, Intel SSDS003 Agenda • NVM Express (NVMe) Overview • New NVMe Features in Enterprise & Client • Driver Ecosystem for NVMe • NVMe Interoperability and Plugfest Plans • EMC’s Perspective: NVMe Use Cases and Proof Points The PDF for this Session presentation is available from our Technical Session Catalog at the end of the day at: intel.com/go/idfsessions URL is on top of Session Agenda Pages in Pocket Guide 2 Agenda • NVM Express (NVMe) Overview • New NVMe Features in Enterprise & Client • Driver Ecosystem for NVMe • NVMe Interoperability and Plugfest Plans • EMC’s Perspective: NVMe Use Cases and Proof Points 3 NVM Express (NVMe) Overview • NVM Express is a scalable host controller interface designed for Enterprise and client systems that use PCI Express* SSDs • NVMe was developed by industry consortium of 80+ members and is directed by a 13-company Promoter Group • NVMe 1.0 was published March 1, 2011 • Product introductions later this year, first in Enterprise 4 Technical Basics • The focus of the effort is efficiency, scalability and performance – All parameters for 4KB command in single 64B DMA fetch – Supports deep queues (64K commands per Q, up to 64K queues) – Supports MSI-X and interrupt steering – Streamlined command set optimized for NVM (6 I/O commands) – Enterprise: Support for end-to-end data protection (i.e., DIF/DIX) – NVM technology agnostic 5 NVMe = NVM Express NVMe Command Execution 7 1 -

Renewing Our Energy Future

Renewing Our Energy Future September 1995 OTA-ETI-614 GPO stock #052-003-01427-1 Recommended Citation: U.S. Congress, Office of Technology Assessment, Renewing Our Energy Fulture,OTA-ETI-614 (Washington, DC: U.S. Government Printing Office, September 1995). For sale by the U.S. Government Printing Office Superintendent of Documents, Mail Stop: SSOP, Washington, DC 20402-9328 ISBN 0-16 -048237-2 Foreword arious forms of renewable energy could become important con- tributors to the U.S. energy system early in the next century. If that happens, the United States will enjoy major economic, envi- ronmental, and national security benefits. However, expediting progress will require expanding research, development, and commer- cialization programs. If budget constraints mandate cuts in programs for renewable energy, some progress can still be made if efforts are focused on the most productive areas. This study evaluates the potential for cost-effective renewable energy in the coming decades and the actions that have to be taken to achieve the potential. Some applications, especially wind and bioenergy, are already competitive with conventional technologies. Others, such as photovol- taics, have great promise, but will require significant research and devel- opment to achieve cost-competitiveness. Implementing renewable energy will be also require attention to a variety of factors that inhibit potential users. This study was requested by the House Committee on Science and its Subcommittee on Energy and Environment; Senator Charles E. Grass- ley; two Subcommittees of the House Committee on Agriculture—De- partment Operations, Nutrition and Foreign Agriculture and Resource Conservation, Research and Forestry; and the House Subcommittee on Energy and Environment of the Committee on Appropriations. -

Intel® Optane™ Storage Performance and Implications on Testing Methodology October 27, 2017 Version 1.0

Intel® Optane™ Storage Performance and Implications on Testing Methodology October 27, 2017 Version 1.0 Executive Summary Since the unveiling in 2015, 3D XPoint™ Memory has shown itself to be the disruptive storage technology of the decade. Branded as Intel® Optane™ when packaged together with Intel’s storage controller and software, this new transistor-less solid-state ‘storage class memory’ technology promises lower latencies and increased system responsiveness previously unattainable from a non-volatile memory product. When coupled with NVMe and ever faster interfaces, Intel® Optane™ seeks to bridge the gap between slower storage and faster system RAM. Intel® Optane™ and 3D XPoint™ Technology 3D XPoint™ represents a radical departure from conventional non-volatile memory technologies. NAND flash memory stores bits by trapping an electrical charge within an insulated cell. Efficient use of die space mandates that programming be done by page and erasures by block. These limitations lead to a phenomenon called write amplification, where an SSD must manipulate relatively large chunks of data to achieve a given small random write operation, negatively impacting both performance and endurance. 3D XPoint™ is free of the block erase, page, and write amplification limitations inherent with NAND flash and can be in-place overwritten at the bit/byte/word level with no need for over- provisioning to maintain high random performance and consistency. 3D XPoint™ data access is more akin to that of RAM, and thanks to the significant reduction in write-related overhead compared to NAND, read responsiveness can be maintained even in the face of increased system write pressure. A deeper dive of how 3D XPoint™ Memory works is beyond the scope of this paper but can be found elsewhere on the web. -

The Speculation Meltdown

Security Now! Transcript of Episode #645 Page 1 of 28 Transcript of Episode #645 The Speculation Meltdown Description: This week, before we focus upon the industry-wide catastrophe enabled by precisely timing the instruction execution of all contemporary high-performance processor architectures, we examine a change in Microsoft's policy regarding non- Microsoft AV systems, Firefox Quantum's performance when tracking protections are enabled, the very worrisome hard-coded backdoors in 10 of Western Digital's My Cloud drives; and, if at first (WEP) and at second (WPA) and at third (WPA2) and at fourth (WPS) you don't succeed, try, try, try, try, try yet again with WPA3, another crucial cryptographic system being developed by a closed members-only committee. High quality (64 kbps) mp3 audio file URL: http://media.GRC.com/sn/SN-645.mp3 Quarter size (16 kbps) mp3 audio file URL: http://media.GRC.com/sn/sn-645-lq.mp3 SHOW TEASE: It's time for Security Now!. Finally, the long-awaited what the heck is going on with Intel processors edition. Steve breaks down Meltdown and Spectre - by the way, it's not just for Intel anymore - and talks about what mitigation might involve and what the consequences of fixing this massive flaw could be. There's lots of other security news, too. It's all coming up next on Security Now!. Leo Laporte: This is Security Now! with Steve Gibson, Episode 645, recorded Tuesday, January 9th, 2018: The Speculation Meltdown. It's time for Security Now!, the show where we cover security, now, now, with Steve Gibson. -

Intel SSD 750 Series Evaluation Guide

Intel® Solid State Drive 750 Series Evaluation Guide September 2016 Order Number: 332075-002US Intel® Solid State Drive 750 Series Ordering Information Contact your local Intel sales representative for ordering information. Tests document performance of components on a particular test, in specific systems. Differences in hardware, software, or configuration will affect actual performance. Consult other sources of information to evaluate performance as you consider your purchase. Results have been estimated based on internal Intel analysis and are provided for informational purposes only. Any difference in system hardware or software design or configuration may affect actual performance. All documented performance test results are obtained in compliance with JESD218 Standards; refer to individual sub-sections within this document for specific methodologies. See www.jedec.org for detailed definitions of JESD218 Standards. Intel does not control or audit the design or implementation of third party benchmark data or Web sites referenced in this document. Intel encourages all of its customers to visit the referenced Web sites or others where similar performance benchmark data are reported and confirm whether the referenced benchmark data are accurate and reflect performance of systems available for purchase. The products described in this document may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order. Intel and the Intel logo are trademarks of Intel Corporation in the U.S. and other countries. *Other names and brands may be claimed as the property of others. -

WIND RIVER INTELLIGENT DEVICE PLATFORM XT the Foundation for Building Devices That Connect to the Internet of Things

WIND RIVER INTELLIGENT DEVICE PLATFORM XT The Foundation for Building Devices That Connect to the Internet of Things The opportunities presented by the burgeoning Internet of Things (IoT) may be new, but Wind River® has been providing the intelligence that powers interconnected, automated systems for decades. Wind River Intelligent Device Platform XT is a scalable, sustainable, and secure development environment that simplifies the development, integration, and deployment of IoT gateways. It is based on Wind River industry-leading operating systems, which are standards-compliant and fully tested, as well as Wind River development tools. The platform provides device security, smart connectivity, rich network options, and device management; and it includes ready-to-use components built exclusively for developing IoT gateway applications. Intelligent Device Platform XT provides outstanding software and expertise to fuel the rapid innovation and deployment of safe, secure, and reliable intel- ligent devices through more efficient development cycles, standards-based data connec- tions, and intuitive management tools. CONNECTIVITY TOOLS ZigBee Bluetooth WWAN VPN MQTT Cloud Connector Wi MANAGEMENT nd River Integrated Development Environment T Secure Updates OMA DM, TR-069 Device Authentication Web Interface Application Signing API OpenJDK Lua VM SQLite OSGi To SECURITY ol TCG Standards Role Based Access Control Integrity Monitoring Signed Software ool s WIND RIVER OPERATING ENVIRONMENTS Trusted Secure Boot Wind River Intelligent Device Operating Platform Feature Environment Base Figure 1: Wind River Intelligent Device Platform architecture Product Note INNOVATORS START HERE. WIND RIVER INTELLIGENT DEVICE PLATFORM XT INTEL, MCAFEE, AND WIND RIVER, BETTER TOGETHER Intelligent Device Platform XT is part of Intel® Gateway Solutions for Internet of Things (IoT), a family of platforms that enables companies to seamlessly interconnect indus- trial devices and other systems into a system of systems.