Behave Documentation, Release 1.2.7.dev2

Total pages: 16. File type: PDF. Size: 1020 KB.

Recommended publications

Watir Recipes: The Problem Solving Guide to Watir

Watir Recipes: The problem solving guide to Watir, by Zhimin Zhan. This book is for sale at http://leanpub.com/watir-recipes. This version was published on 2017-02-12. This is a Leanpub book. Leanpub empowers authors and publishers with the Lean Publishing process. Lean Publishing is the act of publishing an in-progress ebook using lightweight tools and many iterations to get reader feedback, pivot until you have the right book and build traction once you do. © 2013 - 2017 Zhimin Zhan
Also by Zhimin Zhan: Practical Web Test Automation; Selenium WebDriver Recipes in Ruby; Selenium WebDriver Recipes in Java; Learn Ruby Programming by Examples; Learn Swift Programming by Examples; Selenium WebDriver Recipes in Python; API Testing Recipes in Ruby; Selenium WebDriver Recipes in Node.js
To Xindi, for your understanding and support. Thank you!
Contents: Preface; Preface to First Edition; Who should read this book; How to read this book; Get recipe test scripts; Send me feedback; 1. Introduction (Watir and its variants; RSpec; Run recipe scripts); 2. Watir and Selenium WebDriver (Install Selenium Browser Drivers; Cross browser testing -

Analysis of Domain Specific Languages for GUI Testing: Rspec and Cucumber for Sikuli

Journal of Multidisciplinary Engineering Science and Technology (JMEST), ISSN: 3159-0040, Vol. 2 Issue 1, January 2015. Analysis of Domain Specific Languages for GUI testing: RSpec and Cucumber for Sikuli. Ivan Evgrafov, Roman S. Samarev, Elena V. Smirnova (Bauman Moscow State Technical University, Russia); Raimund Hocke (Head Developer of SikuliX, Nidderau, Germany).
Abstract – This article is devoted to a new usage of domain specific languages (DSL) for testing software with a Graphical User Interface (GUI). This paper's authors are developers of the enhanced software SikuliX, a product targeted at testing programs with a graphical interface. They propose it as a functional basis for DSL. SikuliX is considered as an environment for domain specific languages (DSL and DSEL). The results of an evaluation of two specialized testing DSLs are presented in this paper: the framework RSpec, which belongs to a Domain Specific Embedded Language (DSEL)
…tions. Little languages are small DSLs that do not include many features found in General Purpose Programming languages (GPLs). Testers use the GPPL if there is no time to create a special DSL (in the case of a short-term software project), if they could not find a proper DSL, or if they could not create their own DSL. Otherwise they use a domain-specific language (DSL), a computer language specialized to a particular application domain [17]. This is in contrast to a general-purpose language (GPPL), which is broadly applicable across domains, and lacks specialized features for a particular domain. -

CareerIT Online Trainings: Selenium (with Java) Automation + Cucumber

CareerIT Online Trainings: Selenium (with Java) Automation + Cucumber, day-wise course contents
Selenium Introduction
DAY 1: Introduction to Automation & Selenium. What is automation testing? Why do we need automation, and how to learn any automation tool? Different types of automation approaches. Introduction to Selenium: what is Selenium, and why WebDriver?
DAY 2: Selenium Overview. Brief history of Selenium; features of Selenium; advantages and limitations of Selenium.
DAY 3: Selenium Components and Introduction to Selenium IDE. Selenium IDE; Selenium RC; Selenium WebDriver; Selenium Grid.
DAY 4: Deep dive into Selenium IDE.
Learning Core Java Concepts
DAY 5: How much Java do you need to learn? Exclusive Java session 1: Java introduction; downloading and installing Java; writing a simple Java program; compiling and executing your Java program; importance of the JVM.
DAY 6: Exclusive Java session 2: variables and data types; classes, objects and methods; constructors and packages.
DAY 7: Exclusive Java session 3: different access specifiers; different keywords (static, this); control structures or conditional statements in Java.
DAY 8: Exclusive Java session 4: iterative/looping statements in Java; String methods.
DAY 9: Exclusive Java session 5: OOPs introduction; encapsulation; polymorphism; inheritance.
DAY 10: Exclusive Java session 6: interfaces in Java; collections in Java.
DAY 11: Exclusive Java session 7: exception handling in Java.
DAY 12: Exclusive Java session 8: Java IO concepts (handling files); database connectivity concepts [JDBC].
Selenium -

Behavior-Driven Development and Cucumber

Behavior-Driven Development and Cucumber. CSCI 5828: Foundations of Software Engineering, Lecture 09, 02/14/2012. © Kenneth M. Anderson, 2012
Goals • Introduce concepts and techniques encountered in the first three chapters of the Cucumber textbook (I may also refer to it as the “testing” textbook) • Installing Cucumber • Behavior-Driven Development • Gherkin • Cucumber • Its integration with Ruby (other languages are also supported)
Side Note: Fred Brooks Appears Again • I like receiving confirmation that the information I put in front of you is important • At the start of Chapter 3 of the Cucumber book, take a look at the first citation: • “In his famous essay, No Silver Bullet [Bro95], Fred Brooks says: • ‘The hardest single part of building a software system is deciding precisely what to build.’” • They even reference The Mythical Man-Month on the same page!
Installing Cucumber (I) • If you would like to run the examples presented in lecture, you will need to install Cucumber • To do so, you will first need to install Ruby • If you are on Linux or Mac OS X, the book recommends first installing rvm (Ruby Version Manager) • Instructions are here: <http://beginrescueend.com/> • Then use it to install ruby version 1.9.3-p0 • On Lion with XCode 4.2.1: “rvm install ruby-1.9.3-p0 --with-gcc=clang” • If you are on Windows, use the Ruby Installer • http://rubyinstaller.org/
Installing Cucumber (II) • Once you have ruby installed, you may need to install -

Analysis of Code Coverage Through GUI Test Automation and Back End Test Automation

IJISET - International Journal of Innovative Science, Engineering & Technology, Vol. 3 Issue 3, March 2016. www.ijiset.com, ISSN 2348 – 7968. Analysis of Code Coverage Through GUI Test Automation and Back End Test Automation. Mr. Tarik Sheth (AMET University), Ms. Priyanka Bugade and Ms. Sneha Pokharkar (Thakur College of Science and Commerce).
ABSTRACT: Software testing provides a means to reduce errors and cut maintenance and overall software costs. Testing has become the most important parameter in the software development lifecycle (SDLC). Test automation tools enable developers and testers to easily automate the entire process of testing in software development, and to examine and modify source code. The objective of the paper is to conduct a comparative study of automated tools available in the market, such as Selenium and the Cucumber test tool. The aim of this research paper is to evaluate and compare automated software testing tools to determine their usability and effectiveness.
coverage measurement through GUI automation and back end automation testing of the software covers all aspects of testing a particular website or web application. The purpose of this project is to invent our own test tool, which will give more sophisticated outcomes than the Cucumber tool that we will be using. The outcome of our research tool should be better than the testing tool already available in the market, that is, the Cucumber tool [3]. The paper tries to investigate and evaluate the effect of automation testing such as GUI and back end testing [4]. The problems with manual testing are that it is a very time-consuming process, is not reusable, has no scripting facility, -

Ruby Cucumber, Selenium, Watir and Pageobject Course Content

Ruby Cucumber, Selenium, Watir and PageObject Course Content
● Ruby History
● Environments and Editors
● Ruby Download and Installation
● Folder Structure and Gems Location
● Ruby Command Prompt Vs IRB
● puts Vs p Vs print
● Comments
● Variable Types with Naming Convention
● Numeric
● Interpolation
● Conditional and Controller Statements & Modifiers
● Operator Types
● Ruby Ranges and Date-time
● What is an Object in Ruby
● Object Types: Built-in Objects 1. Strings 2. Symbols 3. Arrays 4. Hashes
● Instance_methods (pre-defined methods)
● Methods (Functions)
● Iterators and Loops Statements
● Regular Expressions
● Files
● Modules, Mixins with Naming Conventions
● Basic Object Orientation Concepts with Naming Conventions
● Doubts Clarification
Introduction to Cucumber
1. Setup environment
2. What is cucumber
3. Generate cucumber framework
4. Framework flow
5. Setup configuration
6. What are watir, selenium, page-object
7. Writing Features
8. Writing Scenarios
9. Writing Step_definitions
10. Find out html element ids, names and xpaths
11. Running Tests in Different Browsers
12. Background
13. Scenario Outline
14. Tagging
15. Hooks
16. Alert
17. Screen_shot
18. Generate HTML Report
19. Watir-webdriver
20. Selenium-webdriver
21. Page-object
● What does the student learn? By the end of the Ruby Cucumber online training, the student will have learned the main concepts below in practice.
○ Ruby, Regular_expressions, OOP, Cucumber framework
○ Selenium-webdriver, watir-webdriver, page-object, testgen, etc.
○ Automation testing with web applications
★ What are the advantages after completing this course?
○ Resume preparation by an experienced trainer, to help get shortlisted for jobs
○ Students get a job easily.
○ Students can manage the job easily without job support.
○ This training is 90% practical and 10% theory in every session, with real-time scenarios.
★ The student or consultant must pay 70% as the 1st installment after 3 days from the demo. -

Key Facts Key Benefits Key Features Continuous Integration for Everybody

7.0 Continuous Integration for Everybody
“Continuous Integration is a software development practice where members of a team integrate their work frequently, usually each person integrates at least daily — leading to multiple integrations per day. Many teams find that this approach leads to significantly reduced integration problems and allows a team to develop cohesive software more rapidly.” Martin Fowler
Key Facts: TeamCity is a userfriendly continuous integration (CI) server for professional developers and build engineers. It is trivial to setup and absolutely free for small teams.
Key Benefits • Automate code analyzing, compiling, and testing processes, with having instant feedback on build progress, problems and test failures, all in simple, intuitive webinterface; • Run multiple builds and tests under different configurations and platforms simultaneously; • Make sure your team sustains an uninterrupted workflow with the help of Pretested commits and Personal builds; • Have build history insight with customizable statistics on build duration, success rate, code quality and custom metrics; • Enable -
“So the reason I made the switch is because of the time it take to set it up: 20 minutes. Everything was fully functional in under 20 minutes. I absolutely got no error at all. I just got through all the step of the wizard and then: done. Unit testing, code coverage, build, reports, etc... And it’s absolutely free for small team.” Sebastien Lachance, Software Developer at BXSYSTEMS
“Teamcity is totally awesome, after i got the grails project -

Crystal Cucumber Framework Automation Setup Guide

Watir-Webdriver – Cucumber Automation Framework Setup Guide. Documentation version table: Document updating summary. Version Date Date Created 1.0 08/05/15 Index. Seller Restrictions: This data shall not be duplicated, used nor disclosed in whole or in part for any purpose. November 18, 2015
Table of Contents: Introduction; Setting up Environment: (a) Install Java, (b) Install Ruby Win Installer, (c) Install Bundler Gem; Configuring Eclipse: (a) Extract Eclipse, (b) Set Workspace -

TCD65-141111-2205-6028.Pdf

1. TeamCity Documentation; 1.1 TeamCity Reference; 1.1.1 What's New in TeamCity 6.5; 1.1.2 Getting Started; 1.1.3 Concepts; 1.1.3.1 Agent Home Directory; 1.1.3.2 Agent Requirements; 1.1.3.3 Agent Work Directory; 1.1.3.4 Authentication Scheme; 1.1.3.5 Build Agent; 1.1.3.6 Build Artifact; 1.1.3.7 Build Chain; 1.1.3.8 Build Checkout Directory; 1.1.3.9 Build Configuration; 1.1.3.10 Build Configuration Template; 1.1.3.11 Build Grid; 1.1.3.12 Build History; 1.1.3.13 Build Log; 1.1.3.14 Build Number; 1.1.3.15 Build Queue; 1.1.3.16 Build Runner; 1.1.3.17 Build State; 1.1.3.18 Build Tag; 1.1.3.19 Build Working Directory; 1.1.3.20 Change; 1.1.3.21 Change State; 1.1.3.22 Clean Checkout; 1.1.3.23 Clean-Up; 1.1.3.24 Code Coverage; 1.1.3.25 Code Duplicates; 1.1.3.26 Code Inspection; 1.1.3.27 Continuous Integration; 1.1.3.28 Dependent Build; 1.1.3.29 Difference Viewer; 1.1.3.30 Guest User; 1.1.3.31 History Build; 1.1.3.32 Notifier; 1.1.3.33 Personal Build -

Advance Ruby on Rails Topics 1. Rspec, Automation Testing 2

Advance Ruby on Rails Topics: 1. Rspec, Automation Testing 2. Cucumber 3. Capybara 4. Mocks and Stubs 5. Action Mailer
RAILS: RSPEC AND CAPYBARA BY EXAMPLE
How to set up and use two popular gems used in tests in Rails: RSpec and Capybara. The post's example is based on a TDD approach. The post is built around an example application to give the tests context; the application's purpose is managing a library of books. Here I focus only on the feature for adding new books.
Setup Project
Ruby version: ruby 2.2.2p95. Rails version: rails 4.2.6. Let's start by creating the project (mysql / Postgres):
    rails new my_app -d mysql
If you prefer to use NO-SQL database, use the following command:
    rails new my_app
If you choose the MySQL database, you need to set up database access: username and password. It's better to keep this data secret, so I use config/secrets.yml. Add 2 key-value pairs to this file, replacing root and password_to_database:
    development:
      secret_key_base: 6904e69fc...118
      database_username: root
      database_password: password_to_database
Open config/database.yml and add the secret keys just created:
    default: &default
      adapter: mysql2
      encoding: utf8
      pool: 5
      username: <%= Rails.application.secrets[:database_username] %>
      password: <%= Rails.application.secrets[:database_password] %>
      socket: /var/run/mysqld/mysqld.sock
Before you push any changes to the repository, tell Git to ignore our secrets. -

Selenium, Cucumber and Appium

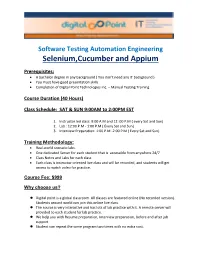

Software Testing Automation Engineering: Selenium, Cucumber and Appium
Prerequisites: A bachelor's degree in any background (you don't need any IT background). You must have good presentation skills. Completion of Digital Point Technologies Inc. – Manual Testing Training.
Course Duration: 40 hours
Class Schedule: SAT & SUN 9:00 AM to 2:00 PM EST
1. Instructor-led class: 9:00 AM to 12:00 PM (every Sat and Sun)
2. Lab: 12:00 PM to 1:00 PM (every Sat and Sun)
3. Interview preparation: 1:00 PM to 2:00 PM (every Sat and Sun)
Training Methodology: Real-world scenario labs. One dedicated server for each student, accessible from anywhere 24/7. Class notes and labs for each class. Each class is a live, instructor-led class and will be recorded, and students will get access to the video for practice.
Course Fee: $999
Why choose us? Digital Point is a global classroom. All classes are held live online (not a recorded version). Students around the world can join this online live class. The course is very interactive and includes lots of lab practice. A remote server will be provided to each student for lab practice. We help you with resume preparation, interview preparation, and before- and after-job support. Students can repeat the same program two times at no extra cost.
Benefits of the course: On completion of this course, you can apply as a Software Automation Tester or as a DevOps Tester. Job support: we will provide you job support. Real-world lab: server access from your home computer through a VPN connection. Interview preparation. Mock interview. Resume writing.
Course Curriculum
Phase 1: Automation Testing
Module 1: Java Basics. Overview of object-oriented concepts; classes, objects, constructors and methods; method overloading; inheritance; method overriding; access modifiers; arrays; looping and conditions. Hands-On: -

An Automated Assistant for Reducing Duplication in Living Documentation

An Automated Assistant for Reducing Duplication in Living Documentation. A dissertation submitted to the University of Manchester for the degree of Master of Science in the Faculty of Engineering and Physical Sciences. 2015. SIA WAI SUAN, SCHOOL OF COMPUTER SCIENCE
Table of Contents: List of Figures; List of Tables; List of Abbreviations; Abstract; Declaration; Intellectual Property Statement; Acknowledgements; Chapter 1: Introduction; 1.1 Overview; 1.2 Aim; 1.2.1 Objectives