Cyber Operations and Nuclear Weapons Technology for Global Security Special Report

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

SPACE and DEFENSE

SPACE and DEFENSE Volume Three Number One Summer 2009 Space Deterrence: The Delicate Balance of Risk by Ambassador Roger G. Harrison, Collins G. Shackelford and Deron R. Jackson with commentaries by Dean Cheng Pete Hays John Sheldon Mike Manor and Kurt Neuman Dwight Rauhala and Jonty Kasku-Jackson EISENHOWER CENTER FOR SPACE AND DEFENSE STUDIES Space and Defense Scholarly Journal of the United States Air Force Academy Eisenhower Center for Space and Defense Studies Editor-in-Chief: Ambassador Roger Harrison, [email protected] Director, Eisenhower Center for Space and Defense Studies Academic Editor: Dr. Eligar Sadeh, [email protected] Associate Academic Editors: Dr. Damon Coletta U.S. Air Force Academy, USA Dr. Michael Gleason U.S. Air Force Academy, USA Dr. Peter Hays National Security Space Office, USA Major Deron Jackson U.S. Air Force Academy, USA Dr. Collins Shackelford U.S. Air Force Academy, USA Colonel Michael Smith U.S. Air Force, USA Reviewers: Andrew Aldrin John Logsdon United Launch Alliance, USA George Washington University, USA James Armor Agnieszka Lukaszczyk ATK, USA Space Generation Advisory Council, Austria William Barry Molly Macauley NASA, France Resources for the Future, USA Frans von der Dunk Scott Pace University of Nebraska, USA George Washington University, USA Paul Eckart Xavier Pasco Boeing, USA Foundation for Strategic Research, France Andrew Erickson Wolfgang Rathbeger Naval War College, USA European Space Policy Institute, Austria Joanne Gabrynowicz Scott Trimboli University of Mississippi, USA University -

PDF of Text

Onze Taal. Volume 73. Source: Onze Taal. Volume 73. Genootschap Onze Taal, The Hague 2004. For the colophon, see: https://www.dbnl.org/tekst/_taa014200401_01/colofon.php Note: works published less than 140 years ago may be protected by copyright. [Issue 1] Is intonation flattening? Older and younger speakers compared. Vincent J. van Heuven, Phonetics Laboratory, Leiden University. Older people often find it hard to understand younger people. They talk too fast, too sloppily and above all too monotonously, so the complaint goes. Does that point to a change? Is Dutch sentence melody really becoming ever flatter? Two studies shed more light. I have two sons in the adolescent age group, somewhere between 16 and 20. When I still had them around me every day (they are by now technically adults and have left home), I liked to eavesdrop when they were talking with their friends. It was striking that all the boys, not just mine, spoke quickly, with little raising of the voice, and above all with little melody. It almost seemed as if they were doing it on purpose. An American colleague, who stays with me for a few weeks every year to keep up his Dutch, admitted unprompted that he generally has little trouble understanding Dutch people, except for my sons and their friends. A few years earlier I had been an assessor in a study of the pronunciation of 120 Dutch speakers, ranging in age from adolescent to elderly. It struck me that the elderly were so much more pleasant to listen to. Not only did they speak more slowly than the young, but above all they made much better use of the possibilities our language offers for structuring the message by means of the voice. -

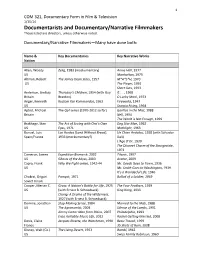

Documentarists and Documentary/Narrative Filmmakers Those Listed Are Directors, Unless Otherwise Noted

1 COM 321, Documentary Form in Film & Television 1/15/14 Documentarists and Documentary/Narrative Filmmakers Those listed are directors, unless otherwise noted. Documentary/Narrative Filmmakers—Many have done both: Name & Key Documentaries Key Narrative Works Nation Allen, Woody Zelig, 1983 (mockumentary) Annie Hall, 1977 US Manhattan, 1979 Altman, Robert The James Dean Story, 1957 M*A*S*H, 1970 US The Player, 1992 Short Cuts, 1993 Anderson, Lindsay Thursday’s Children, 1954 (with Guy if. , 1968 Britain Brenton) O Lucky Man!, 1973 Anger, Kenneth Kustom Kar Kommandos, 1963 Fireworks, 1947 US Scorpio Rising, 1964 Apted, Michael The Up! series (1970‐2012 so far) Gorillas in the Mist, 1988 Britain Nell, 1994 The World is Not Enough, 1999 Brakhage, Stan The Act of Seeing with One’s Own Dog Star Man, 1962 US Eyes, 1971 Mothlight, 1963 Bunuel, Luis Las Hurdes (Land Without Bread), Un Chien Andalou, 1928 (with Salvador Spain/France 1933 (mockumentary?) Dali) L’Age D’Or, 1930 The Discreet Charm of the Bourgeoisie, 1972 Cameron, James Expedition Bismarck, 2002 Titanic, 1997 US Ghosts of the Abyss, 2003 Avatar, 2009 Capra, Frank Why We Fight series, 1942‐44 Mr. Deeds Goes to Town, 1936 US Mr. Smith Goes to Washington, 1939 It’s a Wonderful Life, 1946 Chukrai, Grigori Pamyat, 1971 Ballad of a Soldier, 1959 Soviet Union Cooper, Merian C. Grass: A Nation’s Battle for Life, 1925 The Four Feathers, 1929 US (with Ernest B. Schoedsack) King Kong, 1933 Chang: A Drama of the Wilderness, 1927 (with Ernest B. Schoedsack) Demme, Jonathan Stop Making Sense, -

Michael Moore: a Man on a Mission Or How Far A

Michael Moore: A Man on a Mission or How Far a Reinvigorated Populism Can Take Us Garry Watson (Cineaction 70) My focus in this essay will be on Michael Mooreʼs four documentaries – Roger and Me (1989), The Big One (1997), Bowling for Columbine (2002) and Fahrenheit 9/11 (2004) – with most of my attention being given to the first and third of these, and least to the second. These four films are significant and worth studying for a number of reasons: (i) The size of the audiences they have succeeded in reaching; (ii) the political impact they have had (on which, among other things, see Robert Brent Toplinʼs useful book on Michael Mooreʼs “Fahrenheit 9/11”: How One Film Divided A Nation [2006]); (iii) and the extent to which they helped prepare the reception for such recent political documentaries as, for example, Errol Morrisʼs The Fog of War (2004), Mark Achbar and Jennifer Abbottʼs The Corporation (2005), Alex Gibneyʼs Enron: The Smartest Guys in the Room (2005), Eugene Jareckiʼs Why We Fight (2005), Davis Guggenheimʼs An Inconvenient Truth (2006), and Chris Paineʼs Who Killed the Electric Car? (2006). It may not be redundant to rehearse some of the facts. If Roger and Me was more successful at the box office than any documentary that preceded it, Moore went on to break the same record on two subsequent occasions – first with Bowling for Columbine, then with Fahrenheit 9/11. And as far as the latter is concerned, we get some sense of the excitement that was generated when it first screened in the US by the Foreword that John Berger wrote in 2004 for The Official “Fahrenheit 9/11” Reader (while the film was “still playing in hundreds of theaters across America”1). -

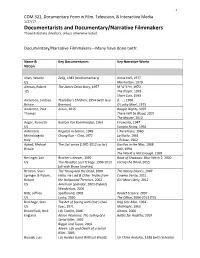

Documentarists and Documentary/Narrative Filmmakers Those Listed Are Directors, Unless Otherwise Noted

1 COM 321, Documentary Form in Film, Television, & Interactive Media 1/27/17 Documentarists and Documentary/Narrative Filmmakers Those listed are directors, unless otherwise noted. Documentary/Narrative Filmmakers—Many have done both: Name & Key Documentaries Key Narrative Works Nation Allen, Woody Zelig, 1983 (mockumentary) Annie Hall, 1977 US Manhattan, 1979 Altman, Robert The James Dean Story, 1957 M*A*S*H, 1970 US The Player, 1992 Short Cuts, 1993 Anderson, Lindsay Thursday’s Children, 1954 (with Guy if. , 1968 Britain Brenton) O Lucky Man!, 1973 Anderson, Paul Junun, 2015 Boogie Nights, 1997 Thomas There Will be Blood, 2007 The Master, 2012 Anger, Kenneth Kustom Kar Kommandos, 1963 Fireworks, 1947 US Scorpio Rising, 1964 Antonioni, Ragazze in bianco, 1949 L’Avventura, 1960 Michelangelo Chung Kuo – Cina, 1972 La Notte, 1961 Italy L'Eclisse, 1962 Apted, Michael The Up! series (1970‐2012 so far) Gorillas in the Mist, 1988 Britain Nell, 1994 The World is Not Enough, 1999 Berlinger, Joe Brother’s Keeper, 1992 Book of Shadows: Blair Witch 2, 2000 US The Paradise Lost Trilogy, 1996-2011 Facing the Wind, 2015 (all with Bruce Sinofsky) Berman, Shari The Young and the Dead, 2000 The Nanny Diaries, 2007 Springer & Pulcini, Hello, He Lied & Other Truths from Cinema Verite, 2011 Robert the Hollywood Trenches, 2002 Girl Most Likely, 2012 US American Splendor, 2003 (hybrid) Wanderlust, 2006 Blitz, Jeffrey Spellbound, 2002 Rocket Science, 2007 US Lucky, 2010 The Office, 2006-2013 (TV) Brakhage, Stan The Act of Seeing with One’s Own Dog Star Man, -

31 Nuclear Weapons—Use, Probabilities and History

ROTARY DISTRICT 5440 PEACEBUILDER NEWSLETTER MARCH 2020 NUMBER 31 NUCLEAR WEAPONS—USE, PROBABILITIES AND HISTORY William M. Timpson, Robert Meroney, Lloyd Thomas, Sharyn Selman and Del Benson Fort Collins Rotary Club Lindsey Pointer, 2017 Rotary Global Grant Scholarship Recipient In these newsletters of the Rotary District Peacebuilders, we want to invite readers for contributions and ideas, suggestions and possibilities for our efforts to promote the foundational skills for promoting peace, i.e., nonviolent conflict resolution, improved communication and cooperation, successful negotiation and mediation as well as the critical and creative thinking that can help communities move through obstacles and difficulties. HOW THE MOST VIOLENT OF HUMAN ACTIVITIES, A NUCLEAR WWIII, HAS BEEN AVOIDED, SO FAR Robert Lawrence, Ph.D. is an Emeritus Professor of Political Science at Colorado State University who has written extensively on this issue of nuclear weapons. Let’s play a mind game. Think back in time and pick an actual war, any war. Now imagine you are the leader who began the war. Would you have ordered the attack if you knew for certain that 30 minutes later you, your family, and your people would all be killed? Probably not. Your logic led Presidents Reagan and Gorbachev to jointly declare “a nuclear war can never be won and must never be fought.” This is the sine qua non of American and Russian nuclear deterrence policy. Previously, wars made sense to some because they could be won, and there were many winners. How then has this no-win war situation, the first in history, happened? Because the U.S. -

Empathize with Your Enemy”

PEACE AND CONFLICT: JOURNAL OF PEACE PSYCHOLOGY, 10(4), 349–368 Copyright © 2004, Lawrence Erlbaum Associates, Inc. Lesson Number One: “Empathize With Your Enemy” James G. Blight and janet M. Lang Thomas J. Watson Jr. Institute for International Studies Brown University The authors analyze the role of Ralph K. White’s concept of “empathy” in the context of the Cuban Missile Crisis, Vietnam War, and firebombing in World War II. They focus on the empathy—in hindsight—of former Secretary of Defense Robert S. McNamara, as revealed in the various proceedings of the Critical Oral History Project, as well as Wilson’s Ghost and Errol Morris’s The Fog of War. Empathy is the great corrective for all forms of war-promoting misperception … . It [means] simply understanding the thoughts and feelings of others … jumping in imagination into another person’s skin, imagining how you might feel about what you saw. Ralph K. White, in Fearful Warriors (White, 1984, p. 160–161) That’s what I call empathy. We must try to put ourselves inside their skin and look at us through their eyes, just to understand the thoughts that lie behind their decisions and their actions. Robert S. McNamara, in The Fog of War (Morris, 2003a) This is the story of the influence of the work of Ralph K. White, psychologist and former government official, on the beliefs of Robert S. McNamara, former secretary of defense. White has written passionately and persuasively about the importance of deploying empathy in the development and conduct of foreign policy. McNamara has, as a policymaker, experienced the success of political decisions made with the benefit of empathy and borne the responsibility for fail- Requests for reprints should be sent to James G. -

Downloads of Technical Information

Florida State University Libraries Electronic Theses, Treatises and Dissertations The Graduate School 2018 Nuclear Spaces: Simulations of Nuclear Warfare in Film, by the Numbers, and on the Atomic Battlefield Donald J. Kinney Follow this and additional works at the DigiNole: FSU's Digital Repository. For more information, please contact [email protected] FLORIDA STATE UNIVERSITY COLLEGE OF ARTS AND SCIENCES NUCLEAR SPACES: SIMULATIONS OF NUCLEAR WARFARE IN FILM, BY THE NUMBERS, AND ON THE ATOMIC BATTLEFIELD By DONALD J KINNEY A Dissertation submitted to the Department of History in partial fulfillment of the requirements for the degree of Doctor of Philosophy 2018 Donald J. Kinney defended this dissertation on October 15, 2018. The members of the supervisory committee were: Ronald E. Doel Professor Directing Dissertation Joseph R. Hellweg University Representative Jonathan A. Grant Committee Member Kristine C. Harper Committee Member Guenter Kurt Piehler Committee Member The Graduate School has verified and approved the above-named committee members, and certifies that the dissertation has been approved in accordance with university requirements. For Morgan, Nala, Sebastian, Eliza, John, James, and Annette, who all took their turns on watch as I worked. ACKNOWLEDGMENTS I would like to thank the members of my committee, Kris Harper, Jonathan Grant, Kurt Piehler, and Joseph Hellweg. I would especially like to thank Ron Doel, without whom none of this would have been possible. It has been a very long road since that afternoon in Powell's City of Books, but Ron made certain that I did not despair. Thank you. TABLE OF CONTENTS Abstract 1. -

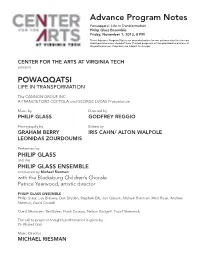

Advance Program Notes Powaqqatsi: Life in Transformation Philip Glass Ensemble Friday, November 1, 2013, 8 PM

Advance Program Notes Powaqqatsi: Life in Transformation Philip Glass Ensemble Friday, November 1, 2013, 8 PM These Advance Program Notes are provided online for our patrons who like to read about performances ahead of time. Printed programs will be provided to patrons at the performances. Programs are subject to change. CENTER FOR THE ARTS AT VIRGINIA TECH presents POWAQQATSI LIFE IN TRANSFORMATION The CANNON GROUP INC. A FRANCIS FORD COPPOLA and GEORGE LUCAS Presentation Music by Directed by PHILIP GLASS GODFREY REGGIO Photography by Edited by GRAHAM BERRY IRIS CAHN/ ALTON WALPOLE LEONIDAS ZOURDOUMIS Performed by PHILIP GLASS and the PHILIP GLASS ENSEMBLE conducted by Michael Riesman with the Blacksburg Children’s Chorale Patrice Yearwood, artistic director PHILIP GLASS ENSEMBLE Philip Glass, Lisa Bielawa, Dan Dryden, Stephen Erb, Jon Gibson, Michael Riesman, Mick Rossi, Andrew Sterman, David Crowell Guest Musicians: Ted Baker, Frank Cassara, Nelson Padgett, Yousif Sheronick The call to prayer in tonight’s performance is given by Dr. Khaled Gad Music Director MICHAEL RIESMAN Sound Design by Kurt Munkacsi Film Executive Producers MENAHEM GOLAN and YORAM GLOBUS Film Produced by MEL LAWRENCE, GODFREY REGGIO and LAWRENCE TAUB Production Management POMEGRANATE ARTS Linda Brumbach, Producer POWAQQATSI runs approximately 102 minutes and will be performed without intermission. SUBJECT TO CHANGE PO-WAQ-QA-TSI (from the Hopi language, powaq sorcerer + qatsi life) n. an entity, a way of life, that consumes the life forces of other beings in order to further its own life. POWAQQATSI is the second part of the Godfrey Reggio/Philip Glass QATSI TRILOGY. With a more global view than KOYAANISQATSI, Reggio and Glass’ first collaboration, POWAQQATSI, examines life on our planet, focusing on the negative transformation of land-based, human-scale societies into technologically driven, urban clones. -

Documentary Film-Making in Theory and Practice

Documentary Film-making in Theory and Practice By Didem Pekün [email protected] +90 537 4160103 Office no: TBC Office Hours: Fridays 2.30-5 pm (Earlier hours can be arranged by appointment) Course Description The aims of the course are to foster an understanding of documentary as a diverse form, with a range of styles and genres, to root this diversity in its various historical and social contexts, and to introduce you to some analytical tools appropriate for study of your own and other filmmakers’ work. The course is formed by two sections; while the students will be informed of the history of the genre they will also make their own short documentary videos. As the course is practice-based, it is necessary that the students who wish to take it have a basic knowledge of film-making. Learning outcomes By the end of the course the students will be able to • Distinguish between, and critically evaluate, the principal ‘modes’ of documentary making • Read a documentary text closely and write about how it communicates meaning • Understand documentary production in its social and historical context • Be conversant with, and sensitive to, current debates about documentary ethics and aesthetics. • Produce their own short film, making informed and creative decisions at every stage of the production process. Assessment Methods In-class participation and edit analysis presentation to the class 20 – each presentation starting from the 4th week Essay, 30 (1500 words) 17 MAY DEADLINE 2 video projects, 50; first one 5 minutes long - second one 10 – 15 minutes long. (it can be an extended version of the first video or a completely new project) – Bibliography 1) History / Accounts on Documentary The Art of Record, John Corner (Manchester, 1996) Documentary, Bill Nichols (Indiana, 2001) New Documentary, Stella Bruzzi Documentary; a history of non-fiction film; Erik Barnouw Documentary : a history of the non-fiction film / Erik Barnouw. -

Truth, Lies, and Videotape: Documentary Film and Issues of Morality U2 Linc Course: Interdisciplinary Studies 290 Fall 2007, T/TH 12:45-3:15

Truth, Lies, and Videotape: Documentary Film and Issues of Morality U2 LinC Course: Interdisciplinary Studies 290 Fall 2007, T/TH 12:45-3:15 Instructor: Krista (Steinke) Finch Office: Art Office/ studio room 103 Office Hours: M, T, TH, W 11:30-12:30 or by appointment Phone: 861-1675 (art office) Email: [email protected] ***Please note that email is the best way to communicate with me COURSE DESCRIPTION: This course will explore issues of morality in documentary film as well as provide a conceptual overview of the forms, strategies, structures and conventions of documentary film practice. Filmmaking is a universal language which has proven to be a powerful tool of communication for fostering understanding and change. For this course, students will study the history and theory of the documentary film and its relationship to topics and arguments about the social world. Students will be introduced to theoretical frameworks in ethics and media theory as a means to interpret and reflect upon issues presented in documentary film. Weekly screenings and readings will set the focus for debate and discussion on these specific issues. Students will also work in small groups to create short documentary films on a particular subject of their own concern. Through hands-on experience, students will learn the basics of planning, producing, and editing a documentary film while gaining an in-depth insight into a particular issue through extensive research and exploration. The semester will culminate with a public presentation of the documentary films created during the course at the 2006 Student Film Festival in late April. GOALS: Students will: • understand the history of documentary film and be able to critically address media related arts in relationship to societal issues. -

Climate Change and Justice Between Nonoverlapping Generations

Climate Change and Justice between Nonoverlapping Generations ANJA KARNEIN Abstract: It is becoming less and less controversial that we ought to aggressively combat climate change. One main reason for doing so is concern for future generations, as it is they who will be the most seriously affected by it. Surprisingly, none of the more prominent deontological theories of intergenerational justice can explain why it is wrong for the present generation to do very little to stop worsening the problem. This paper discusses three such theories, namely indirect reciprocity, common ownership of the earth and human rights. It shows that while indirect reciprocity and common ownership are both too undemanding, the human rights approach misunderstands the nature of our intergenerational relationships, thereby capturing either too much or too little about what is problematic about climate change. The paper finally proposes a way to think about intergenerational justice that avoids the pitfalls of the traditional theories and can explain what is wrong with perpetuating climate change. Keywords: climate change, intergenerational justice, future generations, indirect reciprocity, common ownership, human rights • Introduction When it comes to climate change, it is future generations who are likely to suffer most from both actions and omissions we, people living today, are responsible for.1 Unfortunately, and as dire as the situation threatens to be for them, we are not especially motivated to act on their behalf. There are a number of reasons why, in the case of future generations, we are particularly susceptible to what Stephen Gardiner has called moral corruption.2 Chief among them is that future generations are not around to challenge us.