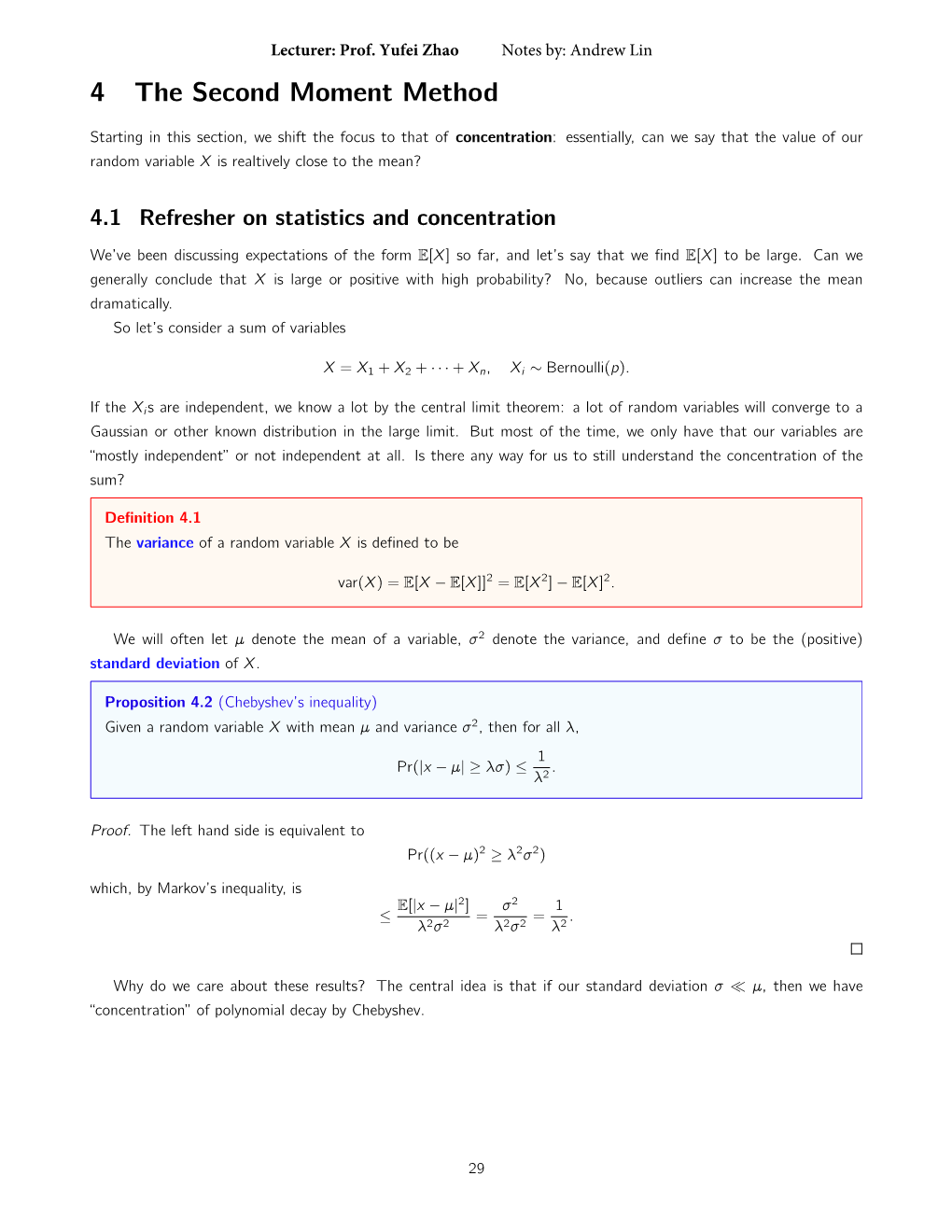

The Second Moment Method

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Rotational Motion (The Dynamics of a Rigid Body)

University of Nebraska - Lincoln DigitalCommons@University of Nebraska - Lincoln Robert Katz Publications Research Papers in Physics and Astronomy 1-1958 Physics, Chapter 11: Rotational Motion (The Dynamics of a Rigid Body) Henry Semat City College of New York Robert Katz University of Nebraska-Lincoln, [email protected] Follow this and additional works at: https://digitalcommons.unl.edu/physicskatz Part of the Physics Commons Semat, Henry and Katz, Robert, "Physics, Chapter 11: Rotational Motion (The Dynamics of a Rigid Body)" (1958). Robert Katz Publications. 141. https://digitalcommons.unl.edu/physicskatz/141 This Article is brought to you for free and open access by the Research Papers in Physics and Astronomy at DigitalCommons@University of Nebraska - Lincoln. It has been accepted for inclusion in Robert Katz Publications by an authorized administrator of DigitalCommons@University of Nebraska - Lincoln. 11 Rotational Motion (The Dynamics of a Rigid Body) 11-1 Motion about a Fixed Axis The motion of the flywheel of an engine and of a pulley on its axle are examples of an important type of motion of a rigid body, that of the motion of rotation about a fixed axis. Consider the motion of a uniform disk rotat ing about a fixed axis passing through its center of gravity C perpendicular to the face of the disk, as shown in Figure 11-1. The motion of this disk may be de scribed in terms of the motions of each of its individual particles, but a better way to describe the motion is in terms of the angle through which the disk rotates. -

Create an Absence/OT Request (Timekeeper)

Create an Absence/OT Request (Timekeeper) 1. From the Employee Self Service home page, select the drop-down at the top of the screen, and choose Time Administration. a. Follow these instructions to add the Time Administration page/tile to your homepage. 2. Select the Time Administration tile. a. It might take a moment for the Time Administration page to load. Create Absence/OT Request (Timekeeper) | 1 3. Select the Report Employee Time tab. 4. Click on the Employee Selection arrow to make the Employee Selection Criteria section appear. Enter full or partial search items to refine the list of employees, and select the Get Employees button. a. If you do not enter search criteria and simply click Get Employees, all employees will appear in the Search Results section. Create Absence/OT Request (Timekeeper) | 2 5. A list of employees will appear. Select the employee for whom you would like to create an absence or overtime request. 6. The employee’s timesheet will appear. Navigate to the date of the leave request using the Date field or Previous Period/Next Period hyperlinks, and select the refresh [ ] icon. Create Absence/OT Request (Timekeeper) | 3 7. Select the Absence/OT tab on the timesheet. 8. Select the Add Absence Event button. Create Absence/OT Request (Timekeeper) | 4 9. Enter the Start and End Dates for the absence/overtime event. 10. Select the Absence Name drop-down to choose the appropriate option. Create Absence/OT Request (Timekeeper) | 5 11. Select the Details hyperlink. 12. The Absence Event Details screen will pop up. Create Absence/OT Request (Timekeeper) | 6 13. -

Welcome to Random Moment Time Survey (RMTS) for School-Based Medi-Cal Administrative Activities (SMAA)

Welcome to Random Moment Time Survey (RMTS) for School-Based Medi-Cal Administrative Activities (SMAA) 2018-2019 Quarter 4 (April – June 2019) Your program administrator(s) has selected you to be a Time Survey Participant (TSP) in the SMAA program. Due to the random nature of the RMTS methodology there is a possibility that you may or may not receive one or more moments in a given quarter. If selected, you will receive your first email notification 5 student attendance days prior to the moment and another email 1 hour prior to the moment. Once each random moment occurs, you will have 5 student attendance days to respond to three simple questions: 1. Who were you with? • DO NOT use proper names, use job title or category 2. What were you doing? • Be specific, detailed and precise for your one minute moment • Do not use abbreviations or acronyms 3. Why were you performing this activity? • Again be specific. This part of the response makes clear to an outside reviewer the purpose of your activity for the moment, and why you did it. Things to Remember: . A response from you is required whether or not you are performing SMAA. Check your email regularly. Notification of pending random moments will be made via email from [email protected] Please ‘white-list’ this email address. You do not need a user log-in or password. Time study notification email contains a unique link to access the system to record your response. The unique link is locked until your moment has passed. Links can be accessed on any computer or smartphone with web capabilities. -

Guide for the Use of the International System of Units (SI)

Guide for the Use of the International System of Units (SI) m kg s cd SI mol K A NIST Special Publication 811 2008 Edition Ambler Thompson and Barry N. Taylor NIST Special Publication 811 2008 Edition Guide for the Use of the International System of Units (SI) Ambler Thompson Technology Services and Barry N. Taylor Physics Laboratory National Institute of Standards and Technology Gaithersburg, MD 20899 (Supersedes NIST Special Publication 811, 1995 Edition, April 1995) March 2008 U.S. Department of Commerce Carlos M. Gutierrez, Secretary National Institute of Standards and Technology James M. Turner, Acting Director National Institute of Standards and Technology Special Publication 811, 2008 Edition (Supersedes NIST Special Publication 811, April 1995 Edition) Natl. Inst. Stand. Technol. Spec. Publ. 811, 2008 Ed., 85 pages (March 2008; 2nd printing November 2008) CODEN: NSPUE3 Note on 2nd printing: This 2nd printing dated November 2008 of NIST SP811 corrects a number of minor typographical errors present in the 1st printing dated March 2008. Guide for the Use of the International System of Units (SI) Preface The International System of Units, universally abbreviated SI (from the French Le Système International d’Unités), is the modern metric system of measurement. Long the dominant measurement system used in science, the SI is becoming the dominant measurement system used in international commerce. The Omnibus Trade and Competitiveness Act of August 1988 [Public Law (PL) 100-418] changed the name of the National Bureau of Standards (NBS) to the National Institute of Standards and Technology (NIST) and gave to NIST the added task of helping U.S. -

Random Moment Time Study PCG Claiming System™ Participant Guide

Random Moment Time Study PCG Claiming System™ Participant Guide www.pcgeducation.com Random Moment Time Study (RMTS) Overview • What is RMTS? • Snapshot of how clinical staff spend their time on specific activities • Required to support Medicaid claims for school health services • Based on ‘moments’ that are equal to one minute • Moments are randomly assigned • Participants are asked to document their activity during that assigned moment • An accurate and candid response from the participant is critical to a successful and valid time study • What RMTS is NOT • RMTS is not a management tool that is in any way used to evaluate employee activities or performance • Employees should not intentionally alter their activity at any particular time because of their participation in the RMTS Delaware CSCRP Random Moment Time Study 2 Web-based RMTS Process • RMTS participants will receive moments via email • Email will be sent to participant listing date and time of assigned moment one day before the moment occurs • After the moment has occurred, participants can click on the link provided in the email • A user ID or password are not required • Participants will receive reminder messages with the moment link at the time of the moment and 24 hours after the moment has occurred. If the moment remains unanswered, additional reminders will be sent Delaware CSCRP Random Moment Time Study 3 Role of the Participant • Respond to all randomly selected moments in a timely fashion – participants have 2 school days to respond to each moment • Answer candidly and thoroughly • Do not delete time study email notifications • Seek assistance from CSCRP specialist or RMTS contractors with questions or concerns • Inform CSCRP specialist if the participant will not be working for more than 3 days at any point during the school year Delaware CSCRP Random Moment Time Study 4 Web-based RMTS Process • Participants who are selected for a moment receive an email from PCG with the date/time of the sample moment 1 day in advance of the date/time of the sample moment. -

1 Detailed Discussion of Spring 2021 Calendar Options Given the Unique

Detailed Discussion of Spring 2021 Calendar Options Given the unique constraints of the current moment, the Calendar and Schedule Committee has identified two potential options for the Spring 2021 calendar. These have been modified to reflect community feedback. Summary of MODIFIED Option A: Continuous Model (Students remain on campus for the full duration of the semester, assuming the public health situation allows) W 2/17: Classes begin (Thursday schedule) Th 2/18: Claiming Williams Day, No Classes M-T 3/22-3/23: Reading Period W-Th 4/21-4/22: Health Days F 5/7: Health Day W 5/19: Last Day of Classes Th-Su 5/20-5/23: Reading Period M-S 5/24-5/29: Finals Summary of MODIFIED Option B: 'Thanksgiving' Model (Most students vacate campus during Spring Break and finish out the semester learning remotely, as in the Fall) W 2/17: Classes begin (Thursday schedule) Th 2/18: Claiming Williams Day, No Classes S-Su 5/1-5/9: Spring Break **All classes are remote for the remainder of the semester W 5/19: Last Day of Classes Th-Su 5/20-23: Reading Period M-S 5/24-5/29: Finals In designing these proposed models of the Spring Calendar, the Calendar and Scheduling Committee (CSC) sought to balance the needs of: Students (considering as fully as possible the range of social, educational, and financial needs represented among them, hailing from diverse locations, learning remotely and in person, seniors and underclassmen, including those who are applying for internships and employment over the summer, as well as those who have petitioned to stay on campus between fall and spring semesters) Faculty (from all three divisions, teaching remotely and in person, using a variety of teaching formats including tutorials and labs, as well as parents facing issues of childcare) Staff (in the full range of positions that they serve the campus community, including parents facing issues of childcare) Some notes: • For the sake of consistency, we aimed to maximize redundancy between the two models. -

Rotation: Moment of Inertia and Torque

Rotation: Moment of Inertia and Torque Every time we push a door open or tighten a bolt using a wrench, we apply a force that results in a rotational motion about a fixed axis. Through experience we learn that where the force is applied and how the force is applied is just as important as how much force is applied when we want to make something rotate. This tutorial discusses the dynamics of an object rotating about a fixed axis and introduces the concepts of torque and moment of inertia. These concepts allows us to get a better understanding of why pushing a door towards its hinges is not very a very effective way to make it open, why using a longer wrench makes it easier to loosen a tight bolt, etc. This module begins by looking at the kinetic energy of rotation and by defining a quantity known as the moment of inertia which is the rotational analog of mass. Then it proceeds to discuss the quantity called torque which is the rotational analog of force and is the physical quantity that is required to changed an object's state of rotational motion. Moment of Inertia Kinetic Energy of Rotation Consider a rigid object rotating about a fixed axis at a certain angular velocity. Since every particle in the object is moving, every particle has kinetic energy. To find the total kinetic energy related to the rotation of the body, the sum of the kinetic energy of every particle due to the rotational motion is taken. The total kinetic energy can be expressed as .. -

Chapter 8: Rotational Motion

TODAY: Start Chapter 8 on Rotation Chapter 8: Rotational Motion Linear speed: distance traveled per unit of time. In rotational motion we have linear speed: depends where we (or an object) is located in the circle. If you ride near the outside of a merry-go-round, do you go faster or slower than if you ride near the middle? It depends on whether “faster” means -a faster linear speed (= speed), ie more distance covered per second, Or - a faster rotational speed (=angular speed, ω), i.e. more rotations or revolutions per second. • Linear speed of a rotating object is greater on the outside, further from the axis (center) Perimeter of a circle=2r •Rotational speed is the same for any point on the object – all parts make the same # of rotations in the same time interval. More on rotational vs tangential speed For motion in a circle, linear speed is often called tangential speed – The faster the ω, the faster the v in the same way v ~ ω. directly proportional to − ω doesn’t depend on where you are on the circle, but v does: v ~ r He’s got twice the linear speed than this guy. Same RPM (ω) for all these people, but different tangential speeds. Clicker Question A carnival has a Ferris wheel where the seats are located halfway between the center and outside rim. Compared with a Ferris wheel with seats on the outside rim, your angular speed while riding on this Ferris wheel would be A) more and your tangential speed less. B) the same and your tangential speed less. -

Moment JS Cheat Sheet by Nothingspare Via Cheatography.Com/20576/Cs/4731

Moment JS Cheat Sheet by nothingspare via cheatography.com/20576/cs/4731/ Loading || Installing Formatting (cont) html <script type="te xt/ jav asc rip t" Day of Week d [0+], dd [Su+], ddd [Sun+], dddd [Sunday+] src="https://cdnjs.cloudflare.com/ajax/libs/moment.js/2.10.6/momen Hour 24h-Clock H [0+], HH [00+] t.min .js "></ scr ipt > Hour 12h-clock h [1+], hh [01+] cdn https:/ /cd njs .cl oud fla re.com /aj ax/ lib s/m ome nt.js/ 2.10 .6/ mo men t.mi n. Minute m [00+], mm [00+] js Second s [0+], ss [00+] npm npm install moment Second, Fractional S [0-9], SS [00-99] bower bower install moment Zone Z [-00:00], ZZ [-0000] git https:/ /gi thu b.co m/ mom ent /mo men t.git Unix Timestamp X [012345 6789] WWW http:// mom ent js.com/ Unix Millise cond x [012345 678 9012] Parsing master these: Y, M, D, H, m, s, Z, X Now moment() Manipul ate, Constrain, or Validate String moment( "197 7-0 9-0 5", "Y YYY -MM -DD ") Unix moment.uni x(0 123 456 789) Get Start of Period .startO f(S tring) ['week'] Object moment( {year: 1977[, month, day, hour, minute, second]}) Get End of Period .endOf( Str ing) ['week'] Array moment( [year, month, day, hour, minute, second, ms]) $DateA Is Before $DateB .isBefo re( Str ing[, String]) ["197 7-0 9-0 5", "y ear "] Get/Set $DateA Is After$D ateB .isAfte r(S tri ng[, String]) ["197 7-0 9-0 5", "y ear "] Second .second() / .second (/* 0- 59*/) $DateA Is the Same as .isSame (St ring[, String]) ["197 7-0 -

Lawsuits, Thereby Weakening Ballot Integrity

No. ______, Original In the Supreme Court of the United States STATE OF TEXAS, Plaintiff, v. COMMONWEALTH OF PENNSYLVANIA, STATE OF GEORGIA, STATE OF MICHIGAN, AND STATE OF WISCONSIN, Defendants. MOTION FOR LEAVE TO FILE BILL OF COMPLAINT Ken Paxton* Attorney General of Texas Brent Webster First Assistant Attorney General of Texas Lawrence Joseph Special Counsel to the Attorney General of Texas Office of the Attorney General P.O. Box 12548 (MC 059) Austin, TX 78711-2548 [email protected] (512) 936-1414 * Counsel of Record i TABLE OF CONTENTS Pages Motion for leave to File Bill of Complaint ................. 1 No. ______, Original In the Supreme Court of the United States STATE OF TEXAS, Plaintiff, v. COMMONWEALTH OF PENNSYLVANIA, STATE OF GEORGIA, STATE OF MICHIGAN, AND STATE OF WISCONSIN, Defendants. MOTION FOR LEAVE TO FILE BILL OF COMPLAINT Pursuant to 28 U.S.C. § 1251(a) and this Court’s Rule 17, the State of Texas respectfully seeks leave to file the accompanying Bill of Complaint against the States of Georgia, Michigan, and Wisconsin and the Commonwealth of Pennsylvania (collectively, the “Defendant States”) challenging their administration of the 2020 presidential election. As set forth in the accompanying brief and complaint, the 2020 election suffered from significant and unconstitutional irregularities in the Defendant States: • Non-legislative actors’ purported amendments to States’ duly enacted election laws, in violation of the Electors Clause’s vesting State legislatures with plenary authority regarding the appointment of presidential electors. • Intrastate differences in the treatment of voters, with more favorable allotted to voters – whether lawful or unlawful – in areas administered by local government under Democrat control and with populations with higher ratios of Democrat voters than other areas of Defendant States. -

GPS Receiver Impact from the UTC Offset (UTCO) Anomaly of 25-26 January 2016

GPS Receiver Impact from the UTC Offset (UTCO) Anomaly of 25-26 January 2016 Karl Kovach, The Aerospace Corporation Philip J. Mendicki, The Aerospace Corporation Edward D. Powers, United States Naval Observatory (USNO) Brent Renfro, Applied Research Laboratories, The University of Texas at Austin BIOGRAPHIES several hours, multiple satellites broadcast information regarding the offset between GPS time and coordinated Karl Kovach is a Senior Project Leader within the time universal (UTC) in a manner that did not conform to Navigation Division of The Aerospace Corporation in El the GPS signal interface specification (IS-GPS-200). This Segundo, CA. Karl has over 35 years working on various paper describes what data was broadcast at what times and aspects of the GPS program, including 3 years as the Air the manner in which the contents of the signal did not Force Officer-in-Charge of the GPS Control Segment conform to the specification. We discuss the scope of when it was at Vandenberg AFB, CA (1983-1986). Karl problems reported and the classes of users that were received his BS degree in Mechanical Engineering from possibly subject to problems. We describe the impact of UCLA in 1978. the anomaly with respect to public assertions made by the U.S. government in the GPS Standard Positioning Service Philip Mendicki is a Senior Project Engineer within the Performance Specification. We describe how the existing Navigation Division of The Aerospace Corporation, interface specification provides sufficient information to supporting the GPS Directorate and the 2nd Space detect the non-conforming data such that receivers can Operations Squadron. -

Rfq Rms Jb100620

REQUEST FOR QUOTATION PROJECT TITLE: Random Moment Sampling System and Title IV-E Administrative Claim Support REFERENCE NUMBER: RFQ RMS 100620 ISSUED BY: Chad Jensen, Director of Juvenile Court Services DATE OF ISSUE: Tuesday, October 6, 2020 CONTACT DETAILS: Iowa Judicial Branch (IJB) 1111 East Court Avenue Des Moines, IA 50319 [email protected] QUOTATION RETURN Friday, November 13, 2020 no later than 5:00 PM CST DATE AND TIME: DOCUMENTATION An electronic submission broken out as outlined below. REQUIRED: Submissions must include the completed RFQ Vendor Response Form posted with this RFQ and any supporting documentation required. DETAILS 1. SUMMARY This RFQ seeks a vendor to assist Juvenile Court Services (JCS) within the Iowa Judicial Branch (IJB) in the State of Iowa with accurate Title IV-E documentation, record retention, and the quarterly IV-E Administrative claim compilation to ensure compliance with current federal and state Title IV-E guidelines. These efforts will include the following activities: web-based time study development and implementation as well as monitoring, preparing or enhancing the claim, assisting JCS with submittals, assisting JCS if audited, for claims on which the selected vendor assisted, and training programs, including evaluation and recommendations for improvement. Both the state and federal government share the cost burden for administering public assistance programs. In 2018, the Family First Prevention Services Act was passed. This act reformed the federal child welfare financing streams, Title IV-E and Title IV-B of the Social Security Act, to provide services to families who are at risk of entering the child welfare system.